An Informal Introduction to Formal Languages

© 2019 Brian Heinold

Licensed under a Creative Commons Attribution-Noncommercial-Share Alike 4.0 Unported License

Here is a pdf version of the book.

Preface

These are notes I wrote for my Theory of Computation class in 2018. This was my first time teaching the class, and a lot of the material was new to me. Trying to learn the material from textbooks, I found that they were often light on examples and heavy on proofs. These notes are meant to be the opposite of that. There are a lot of examples, and no formal proofs, though I try to give the intuitive ideas behind things. Readers can consult any of the standard textbooks for formal proofs.

If you spot any errors, please send me a note at heinold@msmary.edu.

Strings and Languages

Strings

An alphabet is a set of symbols. We will use the capital Greek letter Σ (sigma) to denote an alphabet. Usually the alphabet will be something simple like Σ = {0,1} or Σ = {a,b,c}.

A string is a finite sequence of symbols from an alphabet. For instance, ab, aaaaa, and abacca are all strings from the alphabet Σ = {a,b,c}.

String operations and notation

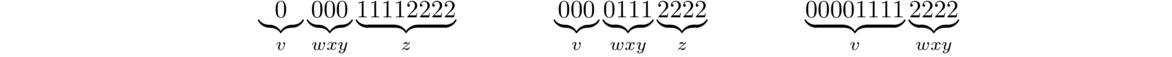

- The concatenation of strings u and v, denoted uv, consists of the symbols of u followed by the symbols of v. For instance, if u = 100 and v = 1111, then uv = 1001111.

- The notation uⁿ stands for u concatenated with itself n times. For instance, u² is uu and u³ is uuu. So if u = 10, then u² = 1010 and u³ = 101010. Note that u⁰ is the empty string.

- The notation uᴿ stands for the reverse of u. For instance, if u is 00011, then uᴿ is 11000.

- The length of u is denoted |u|. For instance, if u = 10101010, then |u| = 8.

- The empty string is the string with length 0. It is denoted by the Greek letter λ (lambda). Another common notation for it is ε (epsilon).

- Given an alphabet Σ, the notation Σ* denotes the set of all possible strings from Σ. For instance, if Σ = {a,b}, then Σ* = {λ, a, b, aa, ab, ba, bb, aaa, aab, …}.
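These operations correspond closely to built-in string operations in most programming languages. Here is a minimal Python sketch of the notation above. The helper name `strings_up_to` is made up for this illustration, and since Σ* is infinite, it only lists the strings up to a chosen length.

```python
from itertools import product

u, v = "100", "1111"
print(u + v)             # concatenation uv
w = "10"
print(w * 2, w * 3)      # w^2 and w^3
print(repr(w * 0))       # w^0 is the empty string
print("00011"[::-1])     # reverse of 00011
print(len("10101010"))   # length |u|

def strings_up_to(alphabet, max_len):
    """Return the strings of Sigma* with length at most max_len, shortest first."""
    result = []
    for n in range(max_len + 1):
        # product(..., repeat=n) yields every sequence of n symbols
        for symbols in product(alphabet, repeat=n):
            result.append("".join(symbols))
    return result

print(strings_up_to("ab", 2))
```

The last line prints the empty string followed by a, b, aa, ab, ba, bb, matching the start of the listing of Σ* given above.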

Languages

Given an alphabet Σ, a language is a subset of strings built from that alphabet. Here are a few example languages from Σ = {a,b}:

- {a,aa,b,bb} — Any subset of strings from Σ*, such as this subset of four items, is a language.

- {abⁿ : n ≥ 1} — This language consists of ab, abb, abbb, etc. In particular, it's all strings consisting of an a followed by at least one b.

- {aⁿbⁿ : n ≥ 0} — This is all strings that start with some number of a's followed by the same number of b's. In particular, it is λ, ab, aabb, aaabbb, etc.

Operations on languages

Since languages are sets, set operations can be applied to them. For instance, if L and M are languages, we can talk about the union L ∪ M, intersection L ∩ M, and complement L̄.

Another important operation on two languages L and M is the concatenation LM, which consists of all the strings from L with all the strings from M concatenated to the end of them. The notation Lⁿ is used just like with strings to denote L concatenated with itself n times.

One final operation is called the Kleene star operation. Given a language L, L* is all strings that can be created from concatenating together strings from L. Formally, it is L⁰ ∪ L¹ ∪ L² ∪ …. Informally, think of it as all the strings you can create by putting together strings of L with repeats allowed.

Here are some examples. Assume Σ = {a,b}.

- Let L = {a, ab, aa} and M = {a, bb}. Then L ∪ M = {a, ab, aa, bb} and L ∩ M = {a}.

- Let L = {λ, a, b}. Then the complement L̄ consists of every string from Σ* except the three strings in L. In particular, it is every string of a's and b's with length at least 2.

- Let L = {b, bb} and M = {aⁿ : n ≥ 1}. Note that M is all strings of one or more a's. Then the concatenation LM is {ba, bba, baa, bbaa, baaa, bbaaa, baaaa, bbaaaa, …}.

- Let L = {aa, bbb}. Then some things in L* are aa, aabbb, aabbbaa, and bbbbbbaaaa. In general, L* contains anything we can get by concatenating the strings from L in any order, with repeats allowed. Note that λ is always in L*.
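For finite languages, these operations can be tried out with Python sets. The sketch below is only an illustration: the function names `concat`, `power`, and `star_up_to` are made up, and because L* is infinite, `star_up_to` only builds the finite piece L⁰ ∪ L¹ ∪ … up to a chosen power.

```python
def concat(L, M):
    """Concatenation LM: every string of L followed by every string of M."""
    return {x + y for x in L for y in M}

def power(L, n):
    """L^n: L concatenated with itself n times (L^0 is {empty string})."""
    result = {""}
    for _ in range(n):
        result = concat(result, L)
    return result

def star_up_to(L, max_power):
    """Finite part of L*: the union of L^0, L^1, ..., L^max_power."""
    result = set()
    for n in range(max_power + 1):
        result |= power(L, n)
    return result

L = {"a", "ab", "aa"}
M = {"a", "bb"}
print(L | M)   # union
print(L & M)   # intersection

# A finite piece of the concatenation example: {b, bb} with {a, aa}
print(concat({"b", "bb"}, {"a", "aa"}))

S = star_up_to({"aa", "bbb"}, 4)
print("aabbbaa" in S, "bbbbbbaaaa" in S, "" in S)
```

The last line checks three of the strings mentioned above. Each is built from at most four pieces of {aa, bbb}, so each shows up in the finite piece of L* computed here.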

Automata

Introduction

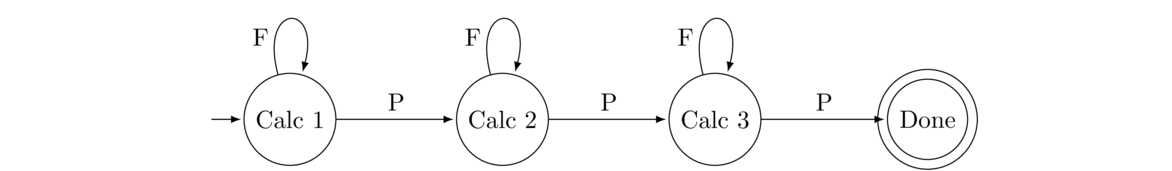

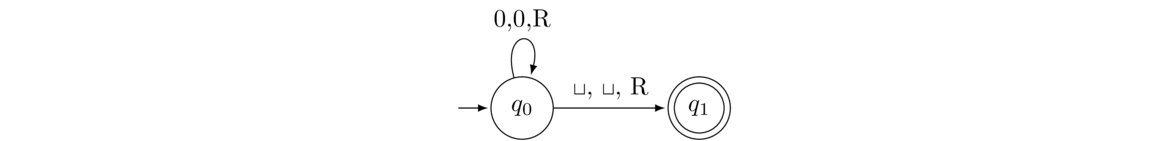

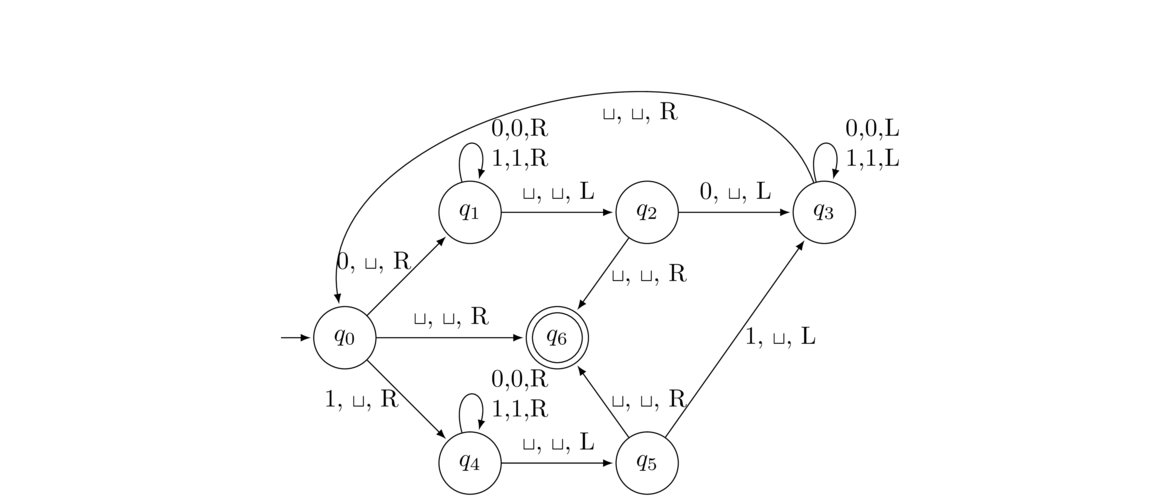

Here is an example of something called a state diagram.

It depicts a hypothetical math minor at a college. P and F stand for pass and fail. The four circles are the possible states you can be in. The arrow pointing to the Calc 1 state indicates that you start at that state. The other arrows indicate which class you move to depending on whether you pass or fail the current class. The state with the double circle is called an accepting state. A student only completes a minor if they get to that accepting state.

We can think of feeding this machine a string of P's and F's, like PPFP, and seeing what state we end up in. For this example, we start at Calc 1, get a P and move to Calc 2, then get another P and move to Calc 3, then get an F and stay at Calc 3, and finally get a P to move to the Done state. This string is considered to be accepted. A different string, like FFPF, would not be considered accepted because we would end up in the Calc 2 state, which is not an accepting state.

Deterministic Finite Automata

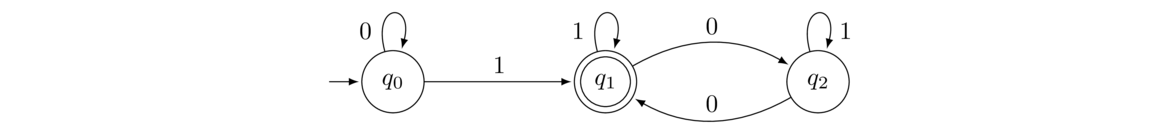

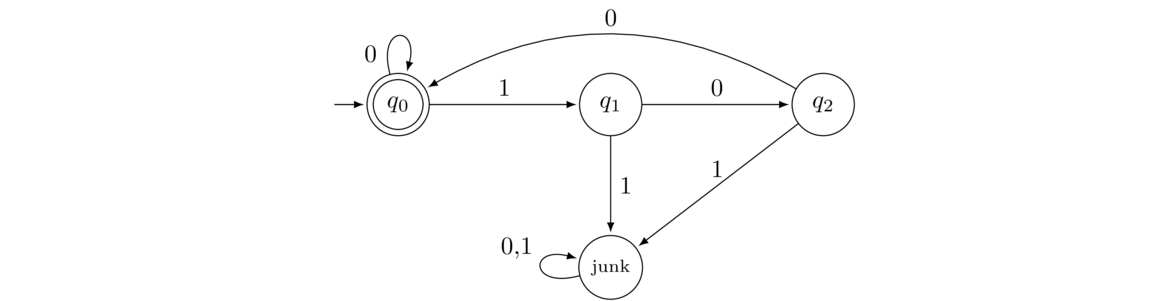

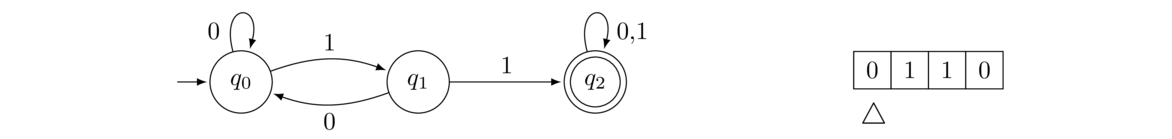

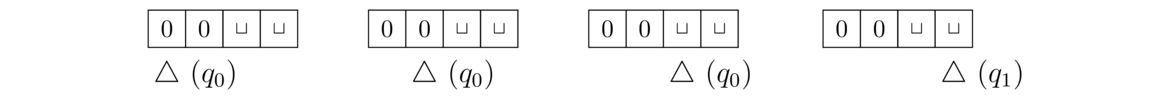

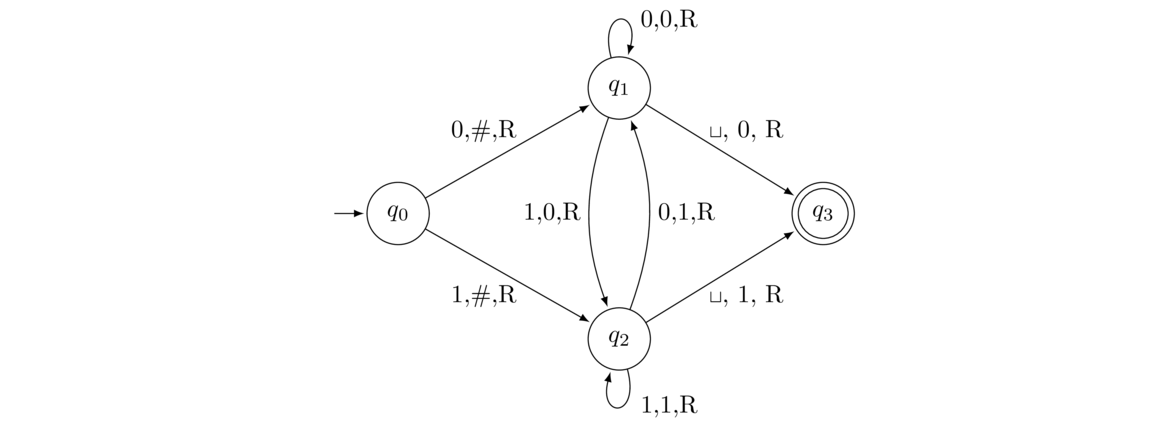

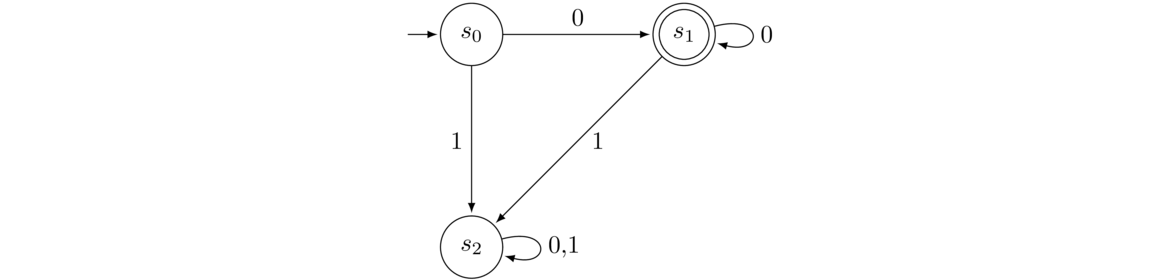

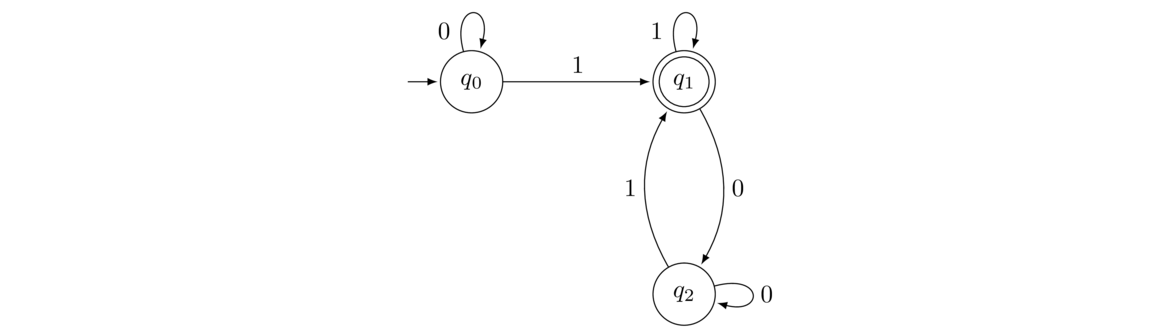

State diagrams like the example above are used all over computer science and elsewhere. We will be looking at a particular type of state diagram called a Deterministic Finite Automaton (DFA). Here is an example DFA:

We feed this DFA input strings of 0s and 1s, follow the arrows, and see if we end up in an accepting state (q1) or not (q0 or q2). For instance, suppose we input the string 001100. We start at state q0 (indicated by the arrow from nowhere pointing into it). With the first 0 we follow the 0 arrow from q0 looping back to itself. We do the same for the second 0. Now we come to the third symbol of the input string, 1, and we move to state q1. We then loop back onto q1 with the next 1. The fifth symbol, 0, moves us to q2, and the last symbol, 0, moves us back to q1. We have reached the end of the input string, and we find ourselves in q1, an accepting state, so the string 001100 is accepted.

On the other hand, if we feed it the string 0111000, we would trace through the states q0 -0→ q0 -1→ q1 -1→ q1 -1→ q1 -0→ q2 -0→ q1 -0→ q2, ending at a non-accepting state, so the string is not accepted.

With a little thought, one can work out that the strings that are accepted by this automaton are those that start with some number of 0s (possibly none), followed by a single 1, and then after that are followed by some (possibly empty) string of 0s and 1s that has an even number of 0s.
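The two traces above can be reproduced with a few lines of Python. This is a sketch under one assumption: the walkthrough never uses a 1 transition out of q2, so the loop from q2 to itself on a 1 is inferred from the description of the accepted language (1s after the first 1 should not affect whether the number of 0s is even). The names `DELTA`, `trace`, and `accepts` are just for this illustration.

```python
# Transition table: (current state, symbol read) -> next state.
DELTA = {
    ("q0", "0"): "q0", ("q0", "1"): "q1",
    ("q1", "0"): "q2", ("q1", "1"): "q1",
    ("q2", "0"): "q1", ("q2", "1"): "q2",  # the 1-loop at q2 is an assumption
}
START = "q0"
ACCEPTING = {"q1"}

def trace(s):
    """Return the list of states visited while reading s, starting at START."""
    states = [START]
    for symbol in s:
        states.append(DELTA[(states[-1], symbol)])
    return states

def accepts(s):
    """A string is accepted if the last state visited is an accepting state."""
    return trace(s)[-1] in ACCEPTING

for s in ["001100", "0111000"]:
    print(s, " ".join(trace(s)), "accepted" if accepts(s) else "not accepted")
```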

Formal Definition and Details

The word automaton (plural automata) refers in typical English usage to a machine that runs on its own. In particular, we can think of a DFA as a machine that we feed a string into and get an output of “accepted” or “not accepted”. A DFA is in essence a very simple computer. One of our main goals is to see just how powerful this simple computer is: in particular, what things it can and can't compute.

The deterministic part of the name refers to the fact that a given input string will always lead to the same path through the states. There is no choice or randomness possible. The same input always gives the exact same sequence of states.

We will mostly be drawing DFAs like in the figures shown earlier, but it's nice to have a formal definition to clear up any potential ambiguities. You can skip this part for now if you think you have a good handle on what a DFA is, but you may want to come back to it later, as formal definitions are useful especially for answering questions you might have (such as if a DFA can have an infinite number of states or if it can have multiple start states).

A DFA is given by five values (Q, Σ, δ, q0, F) with the following definitions:

- Q is a finite set of objects called states.

- Σ is a finite alphabet.

- δ (delta) is a function δ: Q × Σ → Q that specifies all the transitions from state to state.

- q0 ∈ Q is a particular state called the start or initial state.

- F ⊆ Q is a collection of states that are the final or accepting states.

In short, a DFA is defined as a collection of states with transitions between them along with a single initial state and zero or more accepting states. The definition, though, brings up several important points:

- Q is a finite set. So there can't be an infinite number of states.

- Σ is any finite alphabet. For simplicity, we will almost always use Σ = {0,1}.

- The trickiest part of the definition is probably δ, the function specifying the transitions. A transition from state q0 to q1 via a 1 would be δ(q0,1) = q1. With this definition, we can represent a DFA without a diagram. We could simply specify transitions using function notation or in a table of function values.

- Every state must have a transition for every symbol of the alphabet. We can't leave any possibility unspecified.

- There can be only one initial state.

- Final states are defined as a subset of the set of states. In particular, any number of the states can be final, including none at all (the empty set).

- A DFA operates by giving it an input string. It then reads the string's symbols one-by-one from left to right, applying the transition rules and changing the state accordingly. The string is considered accepted if the machine is in an accepting state after all the symbols are read.

- The language accepted by a DFA is the set of all input strings that it accepts.

Finally, note that in some books and references you may see DFAs referred to as deterministic finite accepters or as finite state machines.
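To make the five values concrete, here is one possible way to write them down in Python. This is a sketch, not a standard library or a canonical representation: the class name `DFA`, its field names, and the `validate` method are all invented for this illustration. The check in `validate` mirrors the points above, in particular that δ must give a next state for every (state, symbol) pair.

```python
from dataclasses import dataclass

@dataclass
class DFA:
    states: set       # Q
    alphabet: set     # Sigma
    delta: dict       # transition function, stored as {(state, symbol): next_state}
    start: str        # q0
    accepting: set    # F

    def validate(self):
        """Raise ValueError if the five values do not fit the definition."""
        if self.start not in self.states:
            raise ValueError("the start state must be in Q")
        if not self.accepting <= self.states:
            raise ValueError("F must be a subset of Q")
        for q in self.states:
            for a in self.alphabet:
                if self.delta.get((q, a)) not in self.states:
                    raise ValueError(f"no valid transition for ({q!r}, {a!r})")

    def accepts(self, s):
        """Read s left to right and report whether we end in an accepting state."""
        state = self.start
        for symbol in s:
            state = self.delta[(state, symbol)]
        return state in self.accepting

# A two-state DFA that accepts the strings ending in 1.
ends_in_1 = DFA(
    states={"q0", "q1"},
    alphabet={"0", "1"},
    delta={("q0", "0"): "q0", ("q0", "1"): "q1",
           ("q1", "0"): "q0", ("q1", "1"): "q1"},
    start="q0",
    accepting={"q1"},
)
ends_in_1.validate()
print(ends_in_1.accepts("0101"))  # True
print(ends_in_1.accepts("0110"))  # False
```

Storing δ as a dictionary is the "table of function values" idea mentioned above. Leaving out any one of the four entries would make `validate` raise an error, since a DFA is not allowed to have a missing transition.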

Examples

Here are a number of examples where we are given a language and want to build a DFA that accepts all the strings in that language and no others. Doing this can take some thought and creativity. There is no general algorithm that we can use. One bit of advice is that it often helps to create the states first and fill in the transitions later. It also sometimes helps to give the states descriptive names.

For all of these, assume that the strings come from the alphabet Σ = {0,1}, unless otherwise mentioned. Below are all the examples we will cover in case you want to try them before looking at the answers.

- All strings ending in 1.

- All strings with an odd number of 1s.

- All strings with at least two 0s.

- All strings with at most two 0s.

- All strings that start with 01.

- All strings of the form 0ⁿ1 with n ≥ 0 (this is all strings that start with any number of 0s (including none) followed by a single 1).

- All strings of the form 0101ⁿ with n ≥ 0.

- All strings in which every 1 is immediately followed by at least two 0s.

- All strings that end with the same symbol they start with.

- All strings whose length is a multiple of 3.

- All strings with an even number of 0s and an even number of 1s.

- All strings of 0s and 1s.

- All nonempty strings of 0s and 1s.

- Only the string 001.

- Any string except 001.

- Only the strings 01, 010, and 111.

- All strings that end in 00 or 01.

- All strings with at least three 0s and at least two 1s.

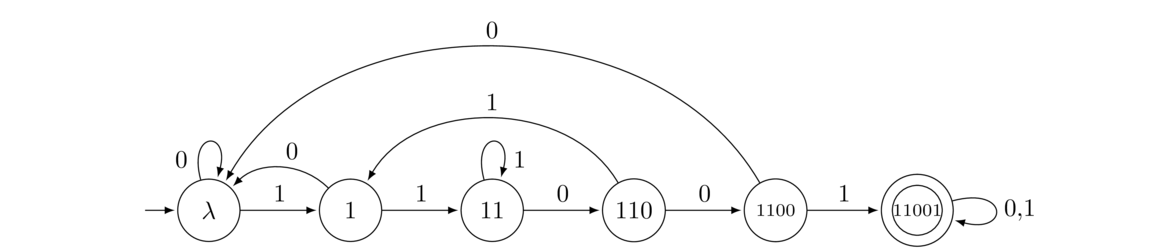

- All strings containing the substring 11001.

Here are the solutions.

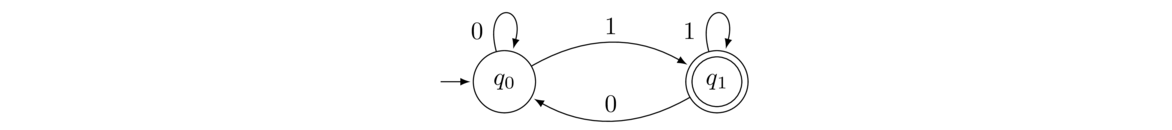

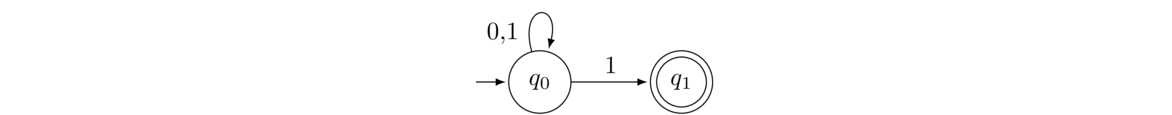

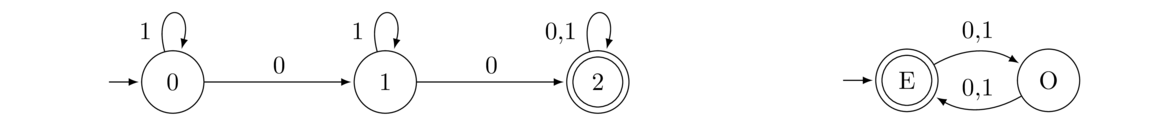

- All strings ending in 1.

We have two states here, q0 and q1. Think of q0 as being the state we're in if the last symbol we've seen is not a 1, and q1 as being the state we're in if the last symbol we've seen is a 1. If we get a 0 at q0, we still don't end in 1, so we stay at q0, but if we get a 1, then we do now end in 1, so we move to q1. From q1, things are reversed: a 1 should keep us at q1, but a 0 means we don't end in 1 anymore and we should therefore move back to q0.

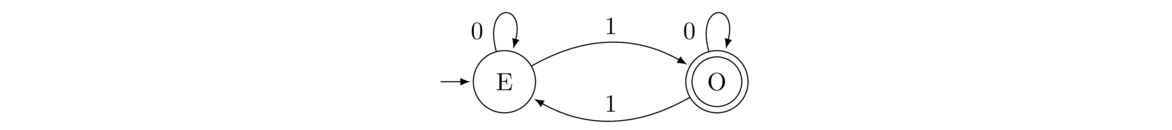

- All strings with an odd number of 1s.

Here we have named the states E for an even number of 1s and O for an odd number. As we move through the input string, if we have seen an even number of 1s so far, then we will be in State E, and otherwise we will be in State O. A 0 won't change the number of 1s, so we stay at the current state whenever we get a 0. A 1 does change the number of 1s, so we flip to the opposite state whenever we get a 1.
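One way to gain confidence in a DFA like this is to compare it against a direct description of the language on every short string. The sketch below does that for the E/O machine just described. The names `DELTA`, `accepts`, and `spec` are made up for this illustration, and checking strings up to length 8 is an arbitrary cutoff, so this is a sanity check rather than a proof.

```python
from itertools import product

# E = even number of 1s seen so far, O = odd number of 1s seen so far.
DELTA = {
    ("E", "0"): "E", ("E", "1"): "O",
    ("O", "0"): "O", ("O", "1"): "E",
}

def accepts(s):
    """Run the DFA starting from E; accept if we finish in O."""
    state = "E"
    for symbol in s:
        state = DELTA[(state, symbol)]
    return state == "O"

def spec(s):
    """Direct description of the language: an odd number of 1s."""
    return s.count("1") % 2 == 1

mismatches = []
for n in range(9):
    for symbols in product("01", repeat=n):
        s = "".join(symbols)
        if accepts(s) != spec(s):
            mismatches.append(s)

print(mismatches)  # an empty list means the DFA and the description agree
```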

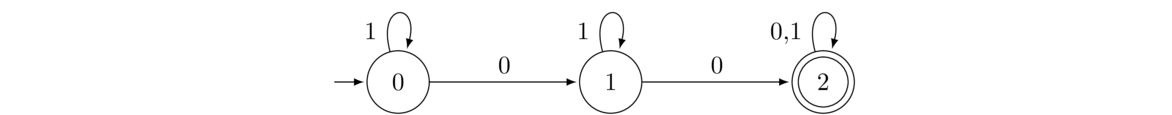

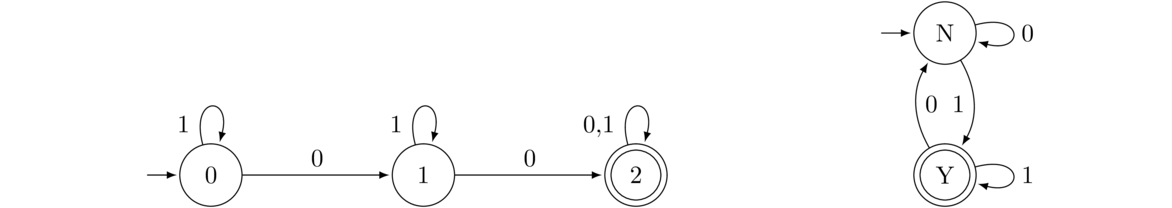

- All strings with at least two 0s.

The states are named 0, 1, and 2 to indicate how many 0s we have seen. Each new 0 moves us to the next state, and a 1 keeps us where we are. Once we get to State 2, we have reached our goal of two 0s, and nothing further in the input string can change that fact, so we loop with 0 and 1 at State 2.

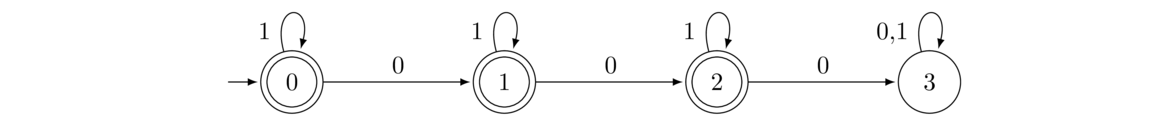

- All strings with at most two 0s.

This is very similar to the previous example. One key difference is we want at most two 0s, so the accepting states are now States 0, 1, and 2. State 3, for three or more 0s, is an example of a junk state (though most people call it a trap state or a dead state). Once we get into a junk state, there is no escape and no way to be accepted. We will use junk states quite a bit in the DFAs we create here.

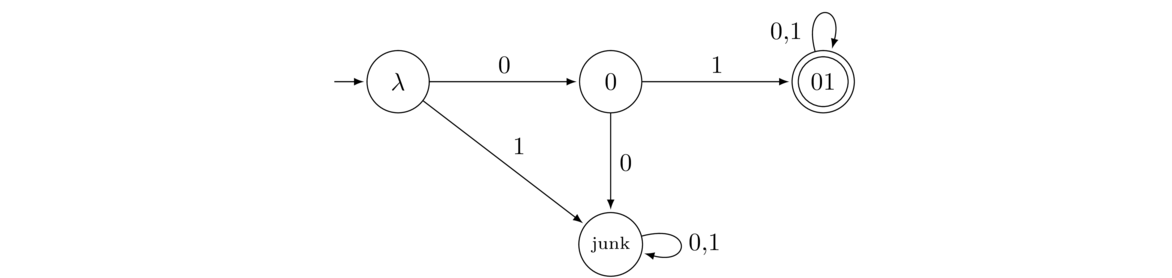

- All strings that start with 01.

Here our states are named λ, 0, and 01, with an explicit junk state. The first three states record our progress towards starting with 01. Initially we haven't seen any symbols, and we are in State λ. If we get a 0 we move to State 0, but if we get a 1, then there's no hope of being accepted, so we move to the junk state. From State 0, if we get a 1, then we move to State 01, but if we get a 0, we go to the junk state since the string would then start with 00, which means the string cannot be accepted. Once we get to State 01, whatever symbols come after the initial 01 are of no consequence, as the string starts with 01 and those symbols cannot change that fact. So we stay in that accepting state.

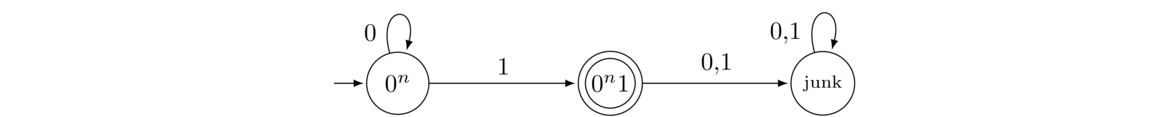

- All strings of the form 0ⁿ1 with n ≥ 0. This is all strings that start with any number of 0s (including none) followed by a single 1.

Here State 0ⁿ is where we accumulate 0s at the start of the string. When we get a 1, we move to State 0ⁿ1. That state is accepting, but if we get anything at all after that first 1, then our string is not of the form 0ⁿ1, so we move to the junk state.

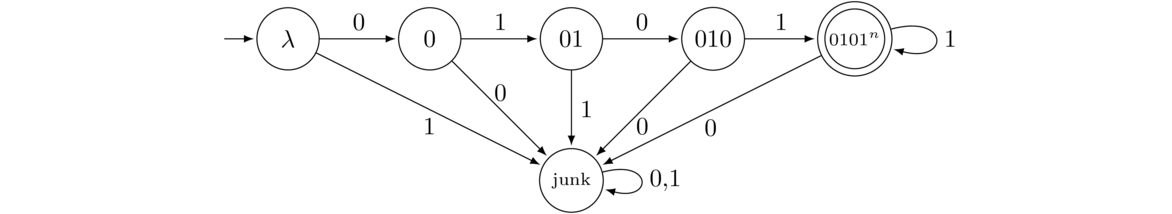

- All strings of the form 0101ⁿ with n ≥ 0.

To create this DFA, we consider moving along building up the string from λ to 0 to 01 to 010 to 0101. If we get a symbol that doesn't help in this building process, then we go to the junk state. When we finally get to 0101, we are in an accepting state and stay there as long as we keep getting 1s, with any 0 sending us to the junk state.

- All strings in which every 1 is immediately followed by at least two 0s.

The initial state q0 is an accepting state because initially we haven't seen any 1s, so every 1 is vacuously followed by at least two 0s. And we can stay there if we get 0s since 0s don't cause problems; only 1s do. When we get a 1, we move to q1 where the string has been put on notice, so to speak. If it wants to be accepted, we now need to see two 0s in a row. If we don't get a 0 at this point, we move to the junk state. If we do get a 0, we move to q2, where we still need to see one more 0. If we don't get it, we go to the junk state. If we do get it, then we move back to the accepting state q0. We could move to a separate accepting state if we want, but it's more efficient to move back to q0.

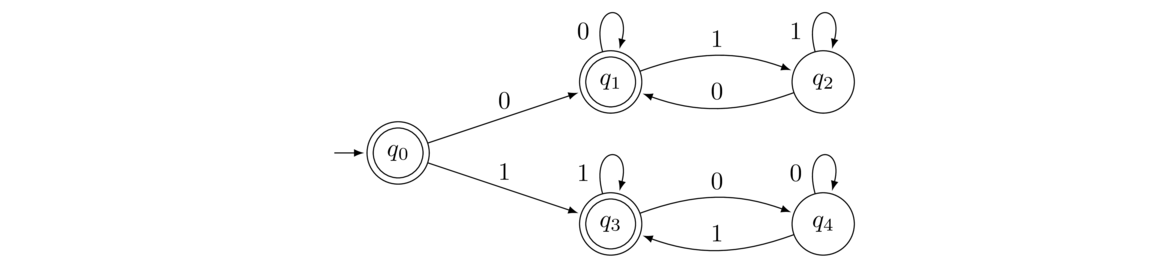

- All strings that end with the same symbol they start with.

First, the initial state q0 is accepting because the empty string vacuously starts with the same symbol it ends with. From q0 we branch off with a 0 or a 1, sort of like an if statement in a programming language. The top branch is for strings that start with 0, and the bottom is for strings that start with 1. In the top branch, any time we get a 0, we end up in q1, which is accepting, and any time we get a 1, we move to the non-accepting state q2. The other branch works similarly, but with the roles of 0s and 1s reversed.

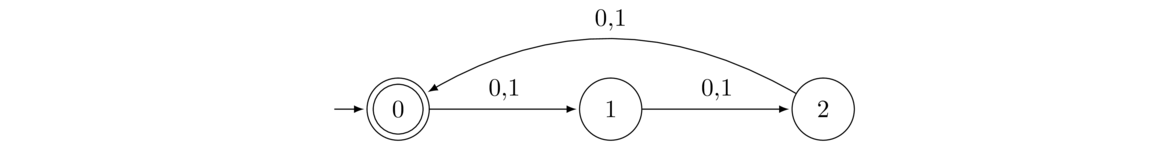

- All strings whose length is a multiple of 3.

For this DFA, the states 0, 1, and 2 stand for the length of the string read so far modulo 3. State 0 is for all strings whose length is a multiple of 3. State 1 is for all strings whose length leaves remainder 1 when divided by 3, and State 2 is for remainder 2. Any symbol at all moves us from State k to State (k+1) mod 3.

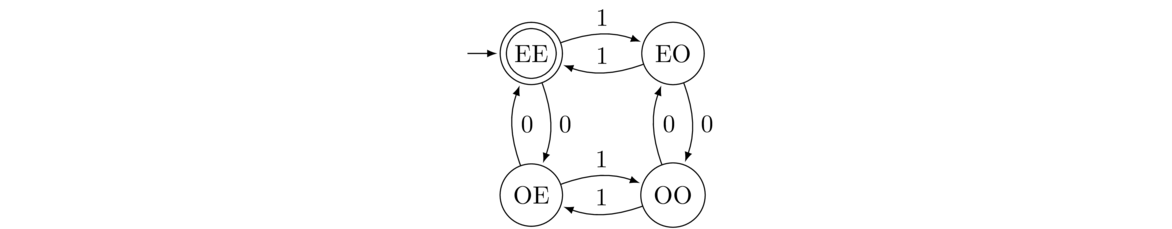

- All strings with an even number of 0s and an even number of 1s.

The state names tell us whether we have an even or odd number of 0s and 1s. For instance EO is an even number of 0s and an odd number of 1s. Once we have these states, the transitions fall into place naturally. For instance, a 1 from State EE should take us to State EO since a 1 will not change the number of 0s, but it will change the number of 1s from even to odd.
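Because each state name records two independent facts, the transition table can be generated rather than typed in by hand. Here is a short sketch of that idea. The helper `flip` and the table name `DELTA` are made up for this illustration.

```python
def flip(parity):
    """Switch between E (even) and O (odd)."""
    return "O" if parity == "E" else "E"

# First letter: parity of the number of 0s. Second letter: parity of the number of 1s.
STATES = ["EE", "EO", "OE", "OO"]

DELTA = {}
for state in STATES:
    zeros, ones = state
    DELTA[(state, "0")] = flip(zeros) + ones   # a 0 changes only the 0s parity
    DELTA[(state, "1")] = zeros + flip(ones)   # a 1 changes only the 1s parity

def accepts(s):
    """Start at EE (nothing read yet) and accept only if we finish at EE."""
    state = "EE"
    for symbol in s:
        state = DELTA[(state, symbol)]
    return state == "EE"

for (state, symbol), target in sorted(DELTA.items()):
    print(f"{state} --{symbol}--> {target}")

print(accepts("0011"), accepts("011"))
```

The generated table includes the transition mentioned above, from EE to EO on a 1.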

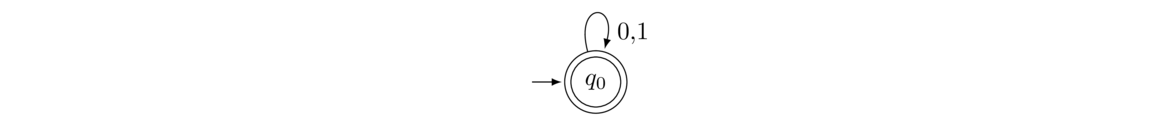

- All strings of 0s and 1s.

Since every string is accepted, we only need one accepting state that we start at and never leave.

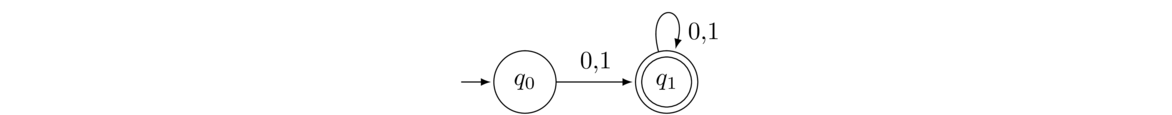

- All nonempty strings of 0s and 1s.

This is similar to the above, but we need to do a little extra work to avoid accepting the empty string.

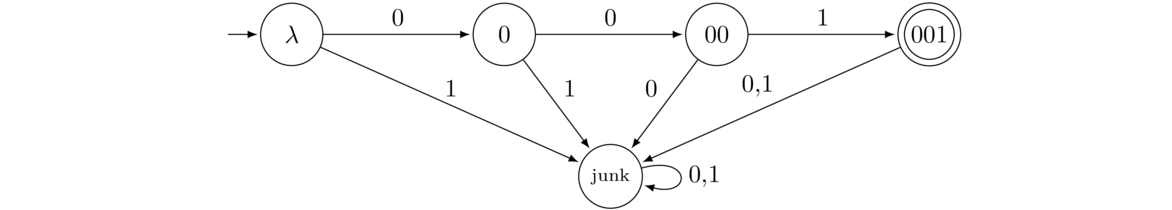

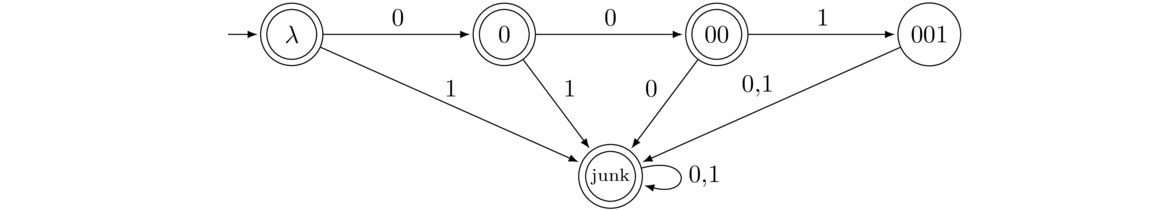

- Only the string 001.

Like the 0101ⁿ example earlier, we build up symbol-by-symbol to the string 001, with anything not leading to that heading off to a junk state. Once we get to 001, we accept it, but if we see any other symbols, then we head to the junk state.

- Any string except 001.

This is exactly the same DFA as above but with the accepting and non-accepting states flip-flopped.

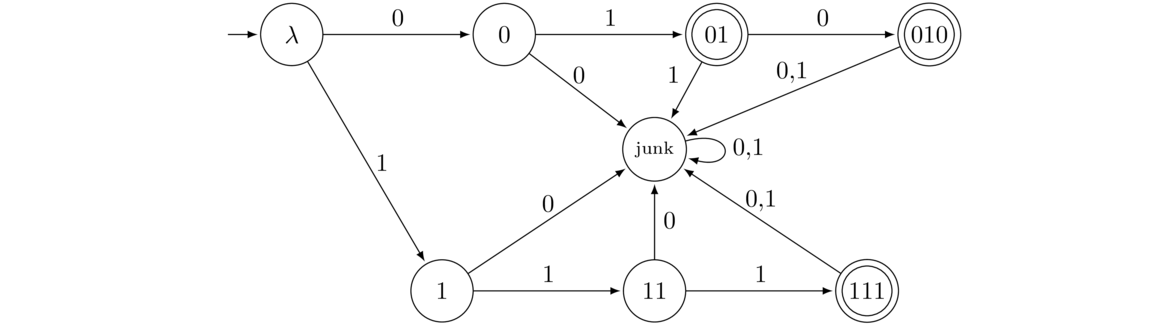

- Only the strings 01, 010, and 111.

To create this DFA, consider building up the three strings 01, 010, and 111, with anything not helping with that process going to a junk state. A similar approach can be used to build a DFA that accepts any finite language.

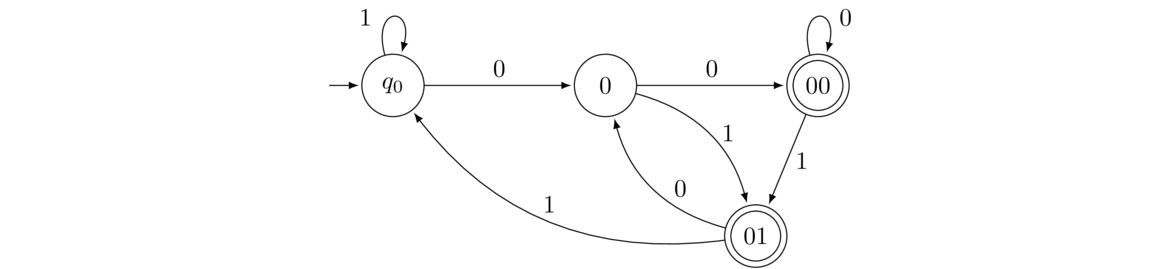

- All strings that end in 00 or 01.

Starting from q0, a 1 doesn't take us any closer to ending with 00 or 01, so we stay put. A 0 takes us to State 0. After that 0, we take note of whether we get a 0 or a 1. Both take us to accepting states. From the 00 accepting state, if we get another 0 we still end with 00, so we stay. Getting a 1 at State 00 means the last two symbols are now 01, so we move to the 01 accepting state. From the 01 accepting state, getting a 1 takes us back to the start, but getting a 0 takes us back to 0 because that 0 takes us part of the way to ending with 00 or 01.

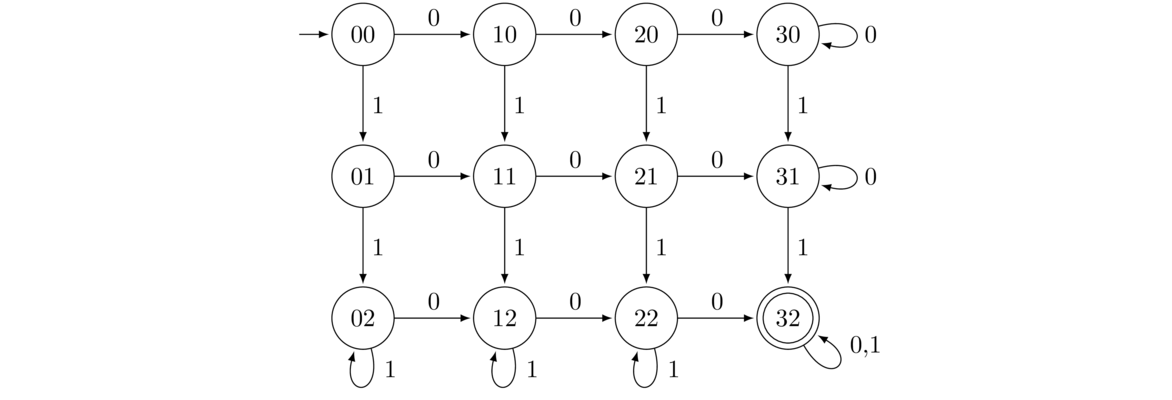

- All strings with at least three 0s and at least two 1s.

For this DFA, we lay things out in a grid. The labels correspond to how many 0s and 1s we have seen so far. For instance, 21 corresponds to two 0s and one 1. Once we have these states, the transitions fall into place, noting that once we get to the right edge of the grid, we have gotten to three 0s, and getting more 0s doesn't change that we have at least three of them, so we loop. A similar thing happens at the bottom of the grid.

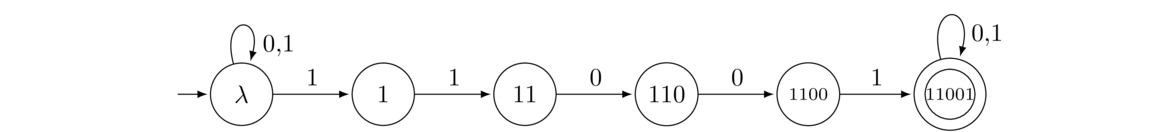

- All strings containing the substring 11001.

To construct this DFA, we consider building up the substring symbol by symbol. If we get a symbol that advances that construction, we move right, and if we don't, then we move backwards or stay in place. When we get a “wrong” symbol, we don't necessarily have to move all the way back to the start since that wrong symbol might still leave us partway toward completing the substring. For instance, from State 110, if we get a 1, that 1 can be used as the first symbol of a new start at the substring. On the other hand, from State 1100, if we get a 0, then that 0 gives us no start on a new substring, so we have to go back to the start.
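The rule for where a “wrong” symbol sends us can be stated mechanically: append the new symbol to the current progress, then drop symbols from the front until what remains is a beginning of 11001. The sketch below builds the transition table that way. It is an illustration under the assumption that states are named by how much of the substring has been matched (with the empty string playing the role of the start state); the function names are made up.

```python
from itertools import product

PATTERN = "11001"

def build_substring_dfa(pattern, alphabet="01"):
    """States are the prefixes of pattern; returns {(state, symbol): next_state}."""
    prefixes = [pattern[:i] for i in range(len(pattern) + 1)]
    delta = {}
    for p in prefixes:
        for c in alphabet:
            if p == pattern:
                delta[(p, c)] = p          # substring already found: stay accepted
                continue
            candidate = p + c
            # Drop symbols from the front until the rest is a prefix of the pattern.
            # The empty string is always a prefix, so this loop must stop.
            while not pattern.startswith(candidate):
                candidate = candidate[1:]
            delta[(p, c)] = candidate
    return delta

DELTA = build_substring_dfa(PATTERN)

def accepts(s):
    state = ""
    for symbol in s:
        state = DELTA[(state, symbol)]
    return state == PATTERN

print(repr(DELTA[("110", "1")]))    # falls back to '1'
print(repr(DELTA[("1100", "0")]))   # falls back to '' (the start)

# Compare against Python's own substring test on all strings up to length 10.
agree = all(
    accepts("".join(t)) == (PATTERN in "".join(t))
    for n in range(11)
    for t in product("01", repeat=n)
)
print(agree)
```

The two printed transitions are the ones discussed above: a 1 from State 110 leaves us with a fresh start of 1, while a 0 from State 1100 sends us all the way back.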

Note: There is a nice, free program called JFLAP that allows you to build DFAs by clicking and dragging. It also allows you to run test cases to see what strings are and aren't accepted by the DFA. Besides this, it does a number of other things with automata and languages. See https://www.jflap.org.

Applications of DFAs

A lot of programs and controllers for devices can be thought of as finite state machines. Instead of transitions of 0s and 1s, as in the examples above, the transitions can be various things. And instead of accepting or rejecting an input, we are more interested in the sequence of states the machine goes through.

For example, a traffic light has three states: red, yellow, and green. Transitions between those states usually come from a timer or a sensor going off. A washing machine is another physical object that can be thought of as a state machine. Its states are things like the spin cycle, rinse cycle, etc. Transitions again come from a timer.

As another example, consider a video game where a player is battling monsters. There might be states for when the player is attacking a monster, being attacked, when there is no battle happening, when their health gets to 0, when the monster's health gets to 0, etc. Transitions between the states come from various events.

Thinking about programs as finite state machines in this way can be a powerful way to program.

Nondeterministic Finite Automata

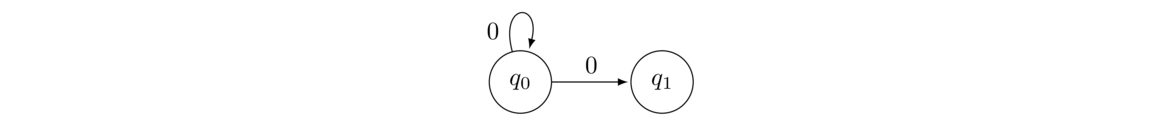

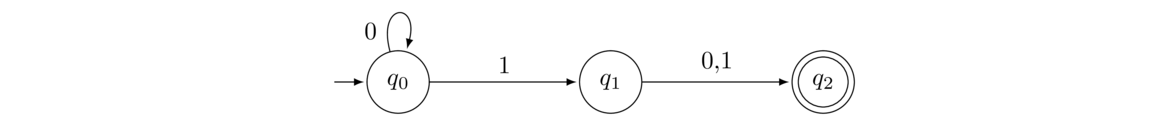

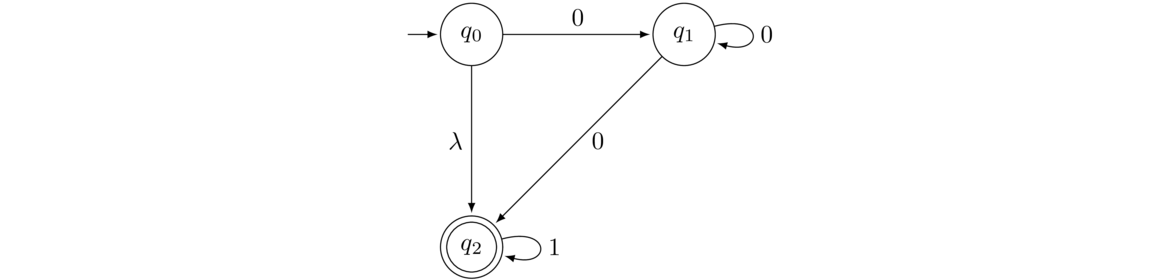

A close relative of the deterministic finite automaton is the nondeterministic finite automaton (NFA). The defining feature of NFAs, not present in DFAs, is a choice in transitions, like below:

With this NFA, a 0 from q0 could either leave us at q0 or move us to q1. This is the nondeterministic part of the name. With an NFA, we don't necessarily know what path an input string will take.

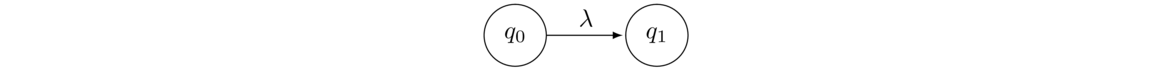

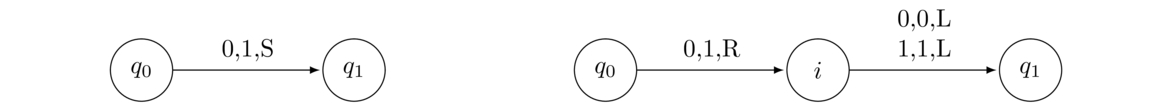

There is a second type of nondeterminism possible:

This is a λ transition. It is sort of like a “free move” where we can move to a state without using up part of the input string. This is nondeterministic because of the choice. We can either take the free move, or use one of the ordinary transitions.

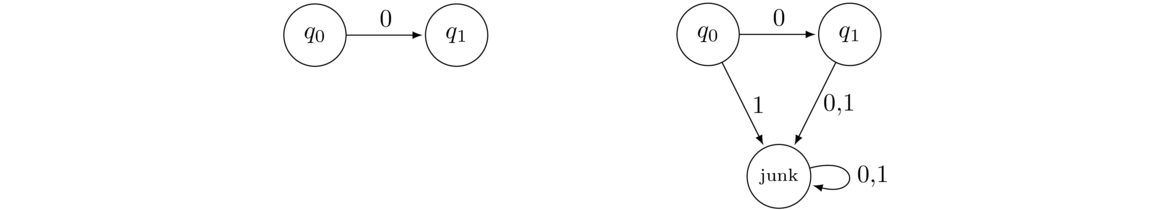

A third feature of NFAs, not present in DFAs, is that we don't need to specify every possible transition from each state. Any transitions that are left out are assumed to go to a junk state. For instance, the NFA on the left corresponds to the DFA on the right.

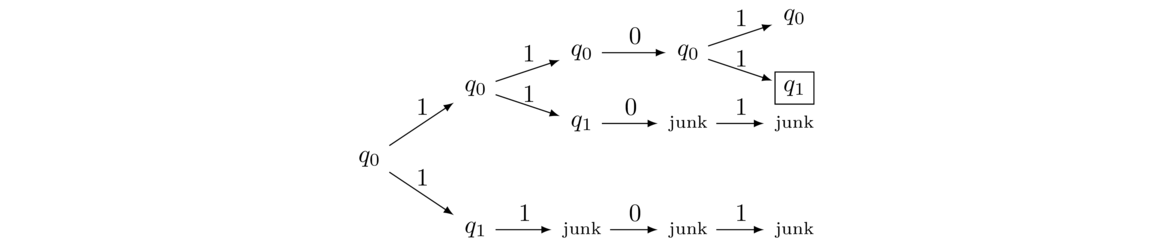

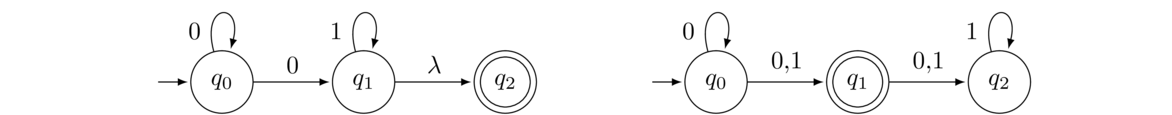

Here is an example NFA:

Consider the input string 11. There are multiple paths this string can lead to. One is q0 -1→ q0 -1→ q1. Another is q0 -1→ q1 -1→ junk (remember that any transitions not indicated are assumed to go to a junk state). The first path leads to an accepting state, while the second doesn't. The general rule is that a string is considered to be accepted if there is at least one path that leads to an accepting state, even if some other paths might not end up at accepting states.

From this definition, we can see that the strings that are accepted by this NFA are all strings that end in 1. We can think about the NFA as permitting us to bounce around at state q0 for as long as we need until we get to the last symbol in the string, at which point we can move to q1 if that last symbol is a 1.

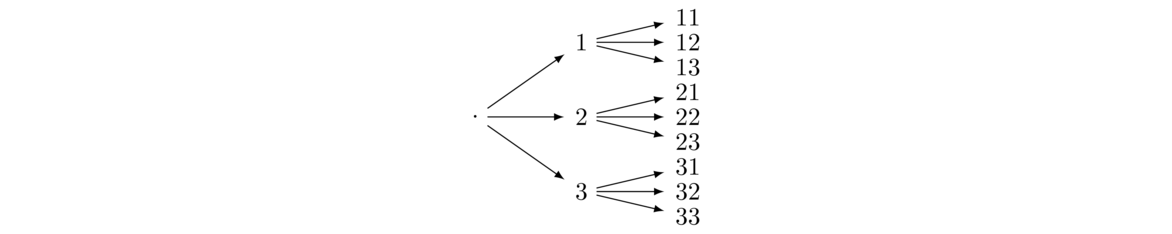

One way to understand how an NFA works is to think in terms of a tree diagram. Every time we have a choice, we create a branch in the tree. For instance, here is a tree showing all the possible paths that the input string 1101 can take through the NFA above.

As we see, there are four possible paths. One of them does lead to an accepting state, so the string 1101 is accepted.

We could draw a similar tree diagram for a DFA, but it would be boring. A DFA, being deterministic, leaves no room for choices or branching. Its tree diagram would be a single straight-line path.

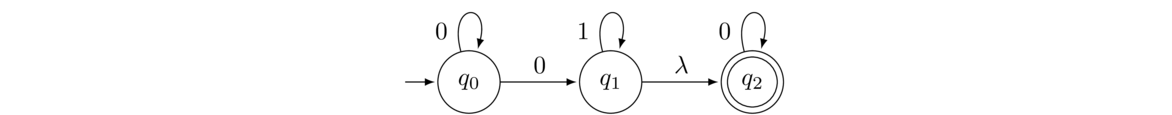

Here is another example NFA.

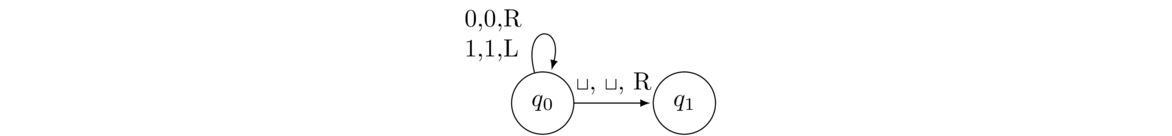

Consider the input string 00. There are again a number of paths that this string can take through the NFA. One possible path through this NFA is q0 -0→ q1 -λ→ q2 -0→ q2. That is, we can choose to move directly to q1 with the first 0. Then we can take the λ transition (free move) to go to q2 without using that second 0. Then at q2 we can use that 0 to loop. Since we end at the accepting state, the string 00 is accepted. So just to reiterate, think of a λ transition as a free move, where we can move to another state without using up a symbol from the input string.

Nondeterminism

Nondeterminism is a different way of thinking about computing than we are used to. Sometimes people describe an NFA as trying all possible paths in parallel, looking for one that ends in an accepting state.

One way to see the difference between DFAs and NFAs is to think about how a program that simulates DFAs would be different from one that simulates NFAs. To simulate a DFA, our program would have a variable for the current state and a table of transitions. That variable would start at the initial state, and as we read symbols from the input string, we would consult the table and change the state accordingly.

To simulate a nondeterministic machine, we would do something similar, except that every time we come to a choice, where there are two or more transitions to choose from, we would spawn off a new thread. If any of the threads ends in an accepting state, then the input string is accepted. This parallelism allows NFAs to be in some sense faster than DFAs.
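An alternative to spawning threads, and a common way to simulate an NFA in practice, is to keep track of the set of all states the machine could currently be in. Here is a minimal sketch of that approach for the NFA that accepts strings ending in 1. It assumes the transitions described earlier (q0 loops on 0 and 1, and a 1 can also move q0 to q1), and it does not handle λ transitions. The names are made up for this illustration.

```python
# (state, symbol) -> set of possible next states.
# A missing entry means the only option is the implicit junk state.
NFA = {
    ("q0", "0"): {"q0"},
    ("q0", "1"): {"q0", "q1"},
}
START = "q0"
ACCEPTING = {"q1"}

def accepts(s):
    """Track every state the NFA could be in after each symbol."""
    current = {START}
    for symbol in s:
        following = set()
        for state in current:
            following |= NFA.get((state, symbol), set())
        current = following
    # Accepted if at least one possible path ended in an accepting state.
    return bool(current & ACCEPTING)

for s in ["11", "1101", "10", ""]:
    print(repr(s), accepts(s))
```

Compared with a DFA simulator, the only real change is that the single "current state" variable has become a set of states.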

Constructing NFAs

Here we will construct some NFAs to accept a given language. Building NFAs requires a bit of a different thought process from building DFAs. Here are the examples we will be doing. It is worth trying them before looking at the answers.

- All strings containing the substring 11001.

- All strings whose third-to-last symbol is 1.

- All strings that either end in 1 or have an odd number of symbols.

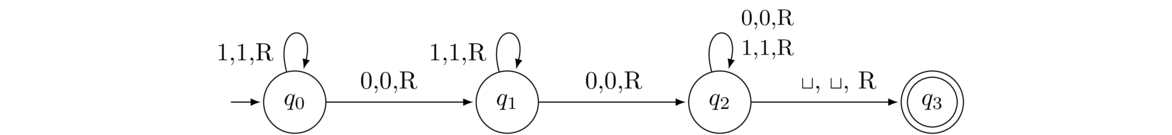

- All strings containing the substring 11001.

We earlier constructed a DFA for this language. The NFA solution is a fair bit simpler. The states are the same, but we don't worry about all the backwards transitions. Instead we think of the initial state as chewing up all the symbols of the input string that it needs to until we (magically) get to the point where the 11001 substring starts. Then we march through all the states, arriving at the accepting state, where we stay for the rest of the string.

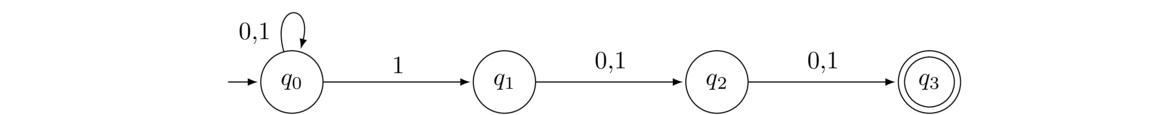

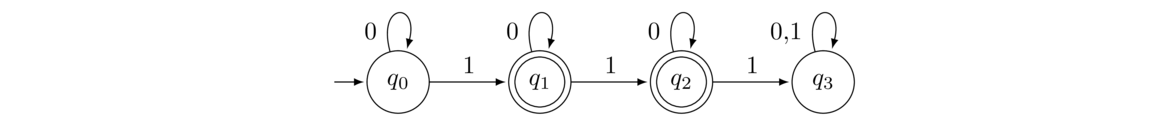

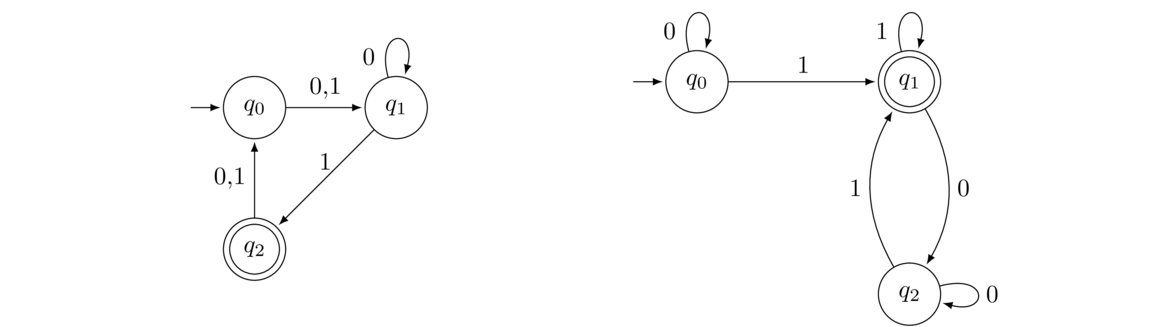

- All strings whose third-to-last symbol is 1.

We think of the initial state as chewing up all the symbols of the input string we need until we get to the third-to-last symbol. If it is a 1, we can use the 1 transition to q1 and then use the final two symbols to get to the accepting state q3.

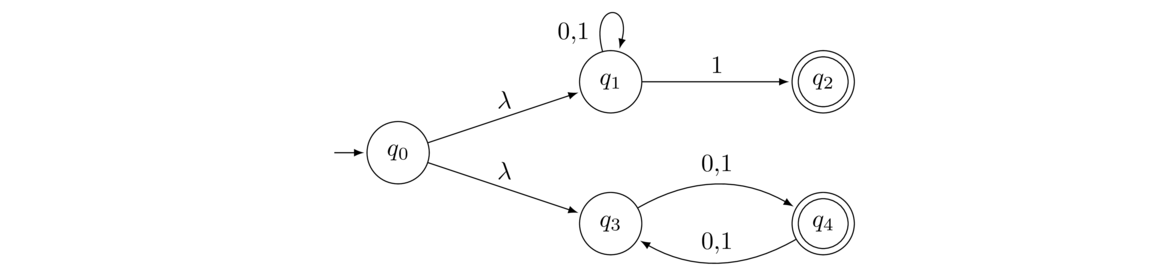

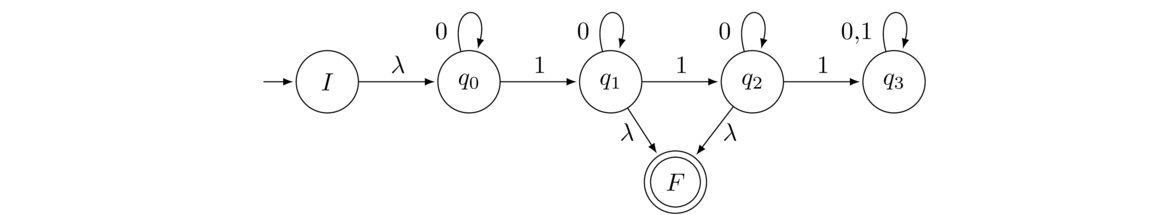

- All strings that either end in 1 or have an odd number of symbols.

The trick to this problem is to create NFAs or DFAs for strings that end in 1 and for strings with an odd number of symbols. Then we connect the two via the λ transitions from the start state. This gives the NFA the option of choosing either of the two parts, which gives us the “or” part of the problem.
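Here is a sketch of how this construction might be simulated, including the λ transitions. The state names (`s`, `a0`, `a1`, `b0`, `b1`) are hypothetical and need not match the figure: the `a` states form the part for strings ending in 1, and the `b` states form the part for strings with an odd number of symbols. The empty Python string is used as the label for λ.

```python
from itertools import product

LAMBDA = ""  # label used here for lambda transitions

DELTA = {
    ("s", LAMBDA): {"a0", "b0"},                    # free moves into either part
    ("a0", "0"): {"a0"}, ("a0", "1"): {"a0", "a1"}, # ends in 1
    ("b0", "0"): {"b1"}, ("b0", "1"): {"b1"},       # odd number of symbols
    ("b1", "0"): {"b0"}, ("b1", "1"): {"b0"},
}
ACCEPTING = {"a1", "b1"}

def closure(states):
    """All states reachable from `states` using only lambda transitions."""
    seen = set(states)
    stack = list(states)
    while stack:
        q = stack.pop()
        for r in DELTA.get((q, LAMBDA), set()):
            if r not in seen:
                seen.add(r)
                stack.append(r)
    return seen

def accepts(s):
    current = closure({"s"})
    for symbol in s:
        moved = set()
        for q in current:
            moved |= DELTA.get((q, symbol), set())
        current = closure(moved)   # take any free moves after each symbol
    return bool(current & ACCEPTING)

def spec(s):
    return s.endswith("1") or len(s) % 2 == 1

agree = all(
    accepts("".join(t)) == spec("".join(t))
    for n in range(9)
    for t in product("01", repeat=n)
)
print(agree)
```

The final check compares the NFA with the plain-English description on every string of length up to 8, which is a sanity check rather than a proof.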

Formal definition of an NFA

The definition of an NFA is the same as that of a DFA except that the transition function δ is defined differently.

For DFAs, the transition function is defined as δ: Q × Σ → Q. Each possible input (state, symbol) pair is assigned an output state. With an NFA, δ is defined as

δ: Q × (Σ ∪ {λ}) → P(Q). The difference is that we add λ as a possible transition, and the output, instead of being a state in Q, is an element of P(Q), the power set of Q. In other words, the output is a set of states, allowing multiple possibilities (or none) for a given symbol.

One thing to note in particular is that every DFA is an NFA.

Equivalence of NFAs and DFAs

Question: are there any languages we cannot construct a DFA to accept? Answer: yes, there are many. For instance, it is not possible to construct a DFA that accepts the language 0ⁿ1ⁿ, which consists of all strings of a number of 0s followed by the same number of 1s. A DFA is not able to remember exactly how many 0s it has seen in order to compare with the number of 1s. Some other things that turn out to be impossible are the language of all palindromes (strings that are the same forward and backward, like 110101011 or 10011001), and all strings whose number of 0s is a perfect square. We will see a little later why these languages cannot be done with DFAs.

The next question we might ask is how much more powerful NFAs are than DFAs. In particular, what languages can we build an NFA to accept that we can't build a DFA to accept? The somewhat surprising answer is nothing. NFAs make it easier to build an automaton to accept a given language, but they don't allow us to do anything that we couldn't already do with a DFA. It's analogous to building a house with power tools versus hand tools—power tools make it easier to build a house, but you can still build a house with hand tools.

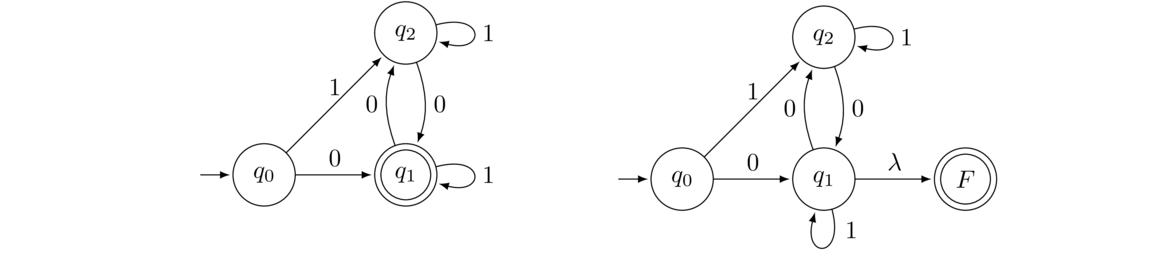

To show that NFAs are no more powerful than DFAs, we will show that given an NFA it is possible to construct a DFA that accepts the same language as the NFA. The trick to doing this is something called the powerset construction. It's probably easiest to follow by starting with an example.

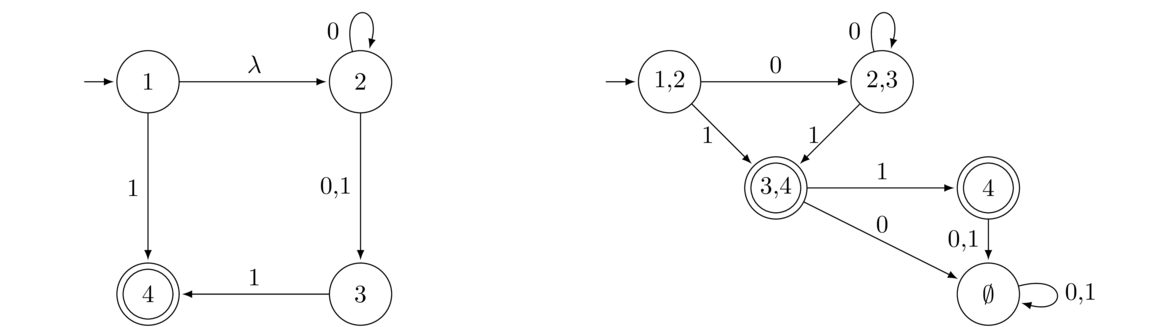

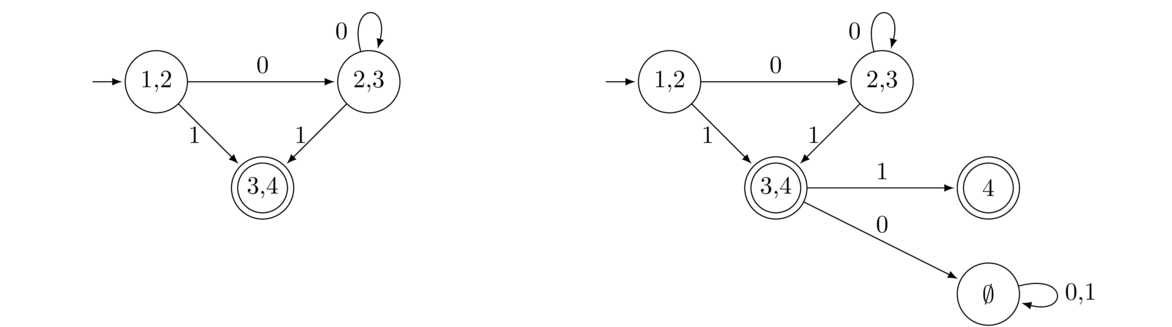

Shown below on the left is an NFA and on the right is a DFA that accepts the same language.

Each state of the DFA is labeled with a subset of the states of the NFA. The DFA's initial state, labeled with the subset {1,2}, represents all the states the NFA can be in before any symbols have been read. The 1 comes from the fact that the initial state is 1 and the 2 comes from the fact that we can move to State 2 via a λ move without reading a symbol from the input string.

The 0 transition from that initial state goes to a state labeled with {2,3}. The reason for this is that if we are in States 1 or 2 of the NFA and we get a 0, then States 2 and 3 are all the possible states we can end up in.

This same idea can be used to build up the rest of the DFA. To determine where a transition with symbol x from a given DFA state S will go, we look at all the NFA states that are in the label of S and see in what NFA states we would end up if we take an x transition from them. The x transition from S goes to a DFA state labeled with a set of all those NFA states. If any of those states is an accepting state, then the DFA state we create will be accepting. The overall idea of this process is that each state of the DFA represents all the possible states the NFA can be in at various points in reading the input string.

Here is a step-by-step breakdown of the process of building the DFA: We start building the DFA by thinking about all the states of the NFA that we can be in at the start. This includes the initial state and anything that can be reached from it via a λ transition. For this NFA those are States 1 and 2. Therefore, the initial state of the DFA we are constructing will be a state with the label 1,2. This is shown below on the left.

Next, we need to know where our initial state will go with 0 and 1 transitions. To do this, we look at all the places we can end up if we start in States 1 or 2 of the NFA and get a 0 or a 1. We can make a table like below to help.

| NFA state | with a 0 | with a 1 |
| --- | --- | --- |
| 1 | junk | 4 |
| 2 | 2 or 3 | 3 |

Putting this together, the 0 transition from the 1,2 state in the DFA will need to go to a new state labeled 2,3 (ignore the junk here). Similarly, the 1 transition from the 1,2 state in the DFA will go to a new state labeled 3,4. Note that since State 4 is accepting in the NFA and the new State 3,4 contains 4, we will make State 3,4 accepting in the DFA. All of this is shown above on the right.

Let's now figure out where the 0 and 1 transitions from the 2,3 state should go. We make the following table looking at where we can go from States 2 or 3 of the NFA:

| NFA state | on 0 | on 1 |
|---|---|---|
| 2 | 2 or 3 | 3 |
| 3 | junk | 4 |

So we consolidate this into having 2,3 loop back onto itself with a 0 transition and go to the 3,4 state with a 1 transition (a 1 takes State 2 to State 3 and State 3 to State 4). See below on the left.

Next, let's look at where the transitions out of State 3,4 must go.

| NFA state | on 0 | on 1 |
|---|---|---|
| 3 | junk | 4 |
| 4 | junk | junk |

Notice that we can go nowhere in the NFA but the implicit junk state with a 0 transition. In other words, there are no real states that we can get to via a 0 from States 3 or 4. This leads us to create a new state labeled ∅ that will act as a junk state in the DFA. Next, as far as the 1 transition goes, the only possibility coming out of States 3 and 4 is to go to 4. See above on the right.

The last step of the process is to figure out the transitions out of the new State 4. There are no transitions out of it in the NFA, so both 0 and 1 will go to the junk State ∅ in the DFA. See above at the start of the problem for the finished DFA.

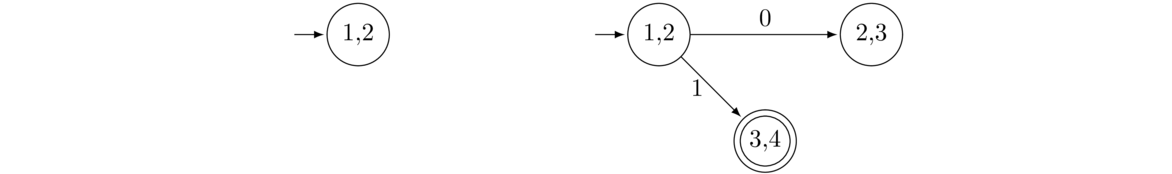

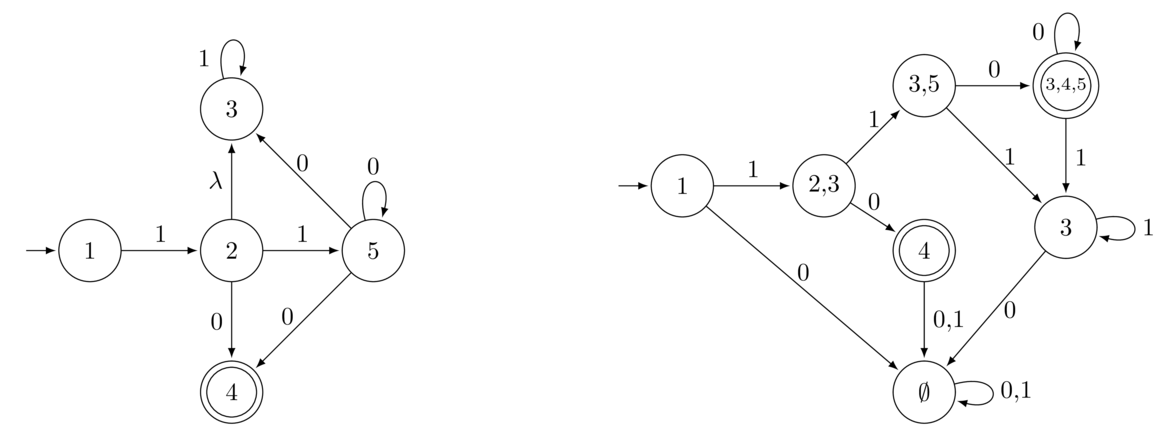

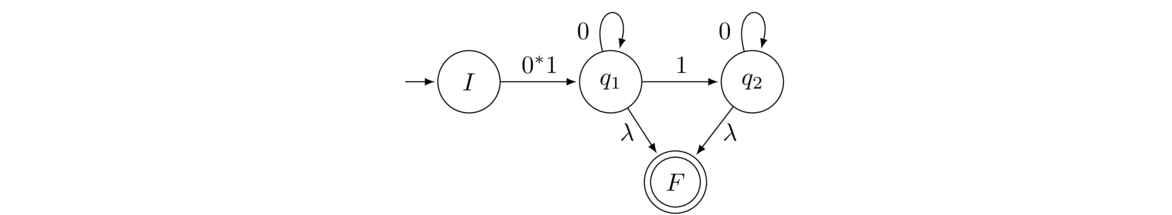

Here is a second example where the λ transition plays a bit more of a role.

To start, the only state we can be in initially is State 1, so that is our starting state in the DFA. From there, we look at the possibilities from State 1. If we get a 0, we end up in the implicit junk state. If we get a 1, we can end up in States 2 or 3. The reason State 3 is a possibility is that once we get to State 2, we can take the free move (λ) up to State 3. In general, when accounting for λ transitions, we need to be consistent about when we use them, and the rule we will follow is that we only take them at the end of a move (not the beginning). We will leave out the rest of the details about constructing the DFA for this problem in order to not get too bogged down. It's a good exercise to work out the rest of the DFA and make sure you get the same thing as above.

General rules for converting an NFA to a DFA

Here is a brief rundown of the process.

- The initial state of the DFA will be labeled with the initial state of the NFA along with anything reachable from it via a λ transition.

- To figure out the transition from a state S of the DFA with the symbol x, look at all the states in the label of S. For each of those states, look at the x transitions from that state in the NFA. Collect all of the states we can get to from S via an x transition, along with any states we can get to from those states via a λ transition. Create a new state in the DFA whose label is all of the states just collected (unless that state was already created), and add an x transition from S to that state.

- If in the step above, the only state collected is a junk state, then create a new state with the label ∅ (unless it has already been created) and add an x transition from S to that state. This new state acts as a junk state and all possible transitions from that state should loop back onto itself.

- A state in the DFA will be set as accepting if any of the states in its label is an accepting state in the NFA.
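The rules above can be sketched in code. The following is a minimal illustration, not a definitive implementation: the NFA is the one from the first worked example as read off its transition tables, and the dictionary layout, the use of `""` for a λ move, and the function names are all assumptions made for this sketch. It also assumes State 4 is the only accepting state of that NFA.

```python
from collections import deque

# NFA from the first worked example, as read off the tables in the text.
# Keys are (state, symbol); "" stands for a λ move. Missing keys mean "junk".
NFA_MOVES = {
    (1, ""): {2},
    (1, "1"): {4},
    (2, "0"): {2, 3},
    (2, "1"): {3},
    (3, "1"): {4},
}
NFA_START = 1
NFA_ACCEPTING = {4}  # assumption: State 4 is the only accepting state
ALPHABET = ["0", "1"]


def lambda_closure(states):
    """All states reachable from `states` using only λ moves."""
    closure = set(states)
    stack = list(states)
    while stack:
        s = stack.pop()
        for t in NFA_MOVES.get((s, ""), set()):
            if t not in closure:
                closure.add(t)
                stack.append(t)
    return frozenset(closure)


def nfa_to_dfa():
    """Return (start, transitions, accepting) for an equivalent DFA."""
    start = lambda_closure({NFA_START})
    transitions = {}
    seen = {start}
    queue = deque([start])
    while queue:
        label = queue.popleft()
        for x in ALPHABET:
            targets = set()
            for s in label:
                targets |= NFA_MOVES.get((s, x), set())
            # λ moves are taken at the end of a move; the empty set is the junk state.
            new_label = lambda_closure(targets)
            transitions[(label, x)] = new_label
            if new_label not in seen:
                seen.add(new_label)
                queue.append(new_label)
    # A DFA state is accepting if its label contains an accepting NFA state.
    accepting = {label for label in seen if label & NFA_ACCEPTING}
    return start, transitions, accepting


start, transitions, accepting = nfa_to_dfa()
for (label, x), target in sorted(transitions.items(), key=lambda kv: (sorted(kv[0][0]), kv[0][1])):
    print(sorted(label), x, "->", sorted(target))
```

Each DFA state here is a `frozenset` of NFA states, so the label ∅ is simply the empty set, and its transitions loop back to itself automatically.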

General Questions about DFAs and NFAs

In this section we will ask some general questions about DFAs and NFAs.

Complements

If we can build a DFA to accept a language L, can we build one that accepts the complement of L? That is, can we build a DFA that accepts the exact opposite of what a given DFA accepts?

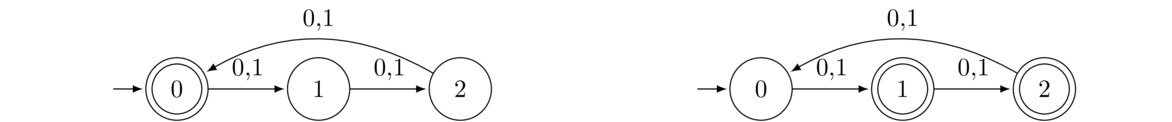

The answer is yes, and the way to do it is pretty simple: flip-flop what is accepting and what isn't. For instance, shown below on the left is a DFA accepting all strings whose number of symbols is a multiple of 3. On the right is its complement, a DFA accepting all strings whose number of symbols is not a multiple of 3.

Note that this doesn't work to complement an NFA. This is because acceptance for an NFA is more complicated than for a DFA. If you want to complement an NFA, first convert it to a DFA using the process of the preceding section and then flip-flop the accepting states.
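As a small illustration of the flip-flop idea, here is a sketch for the multiple-of-3 example. The state names 0, 1, 2 (the length mod 3) and the helper `accepts` are assumptions made for this sketch; the figure may label its states differently.

```python
def accepts(moves, start, accepting, string):
    """Run a DFA on `string` and report whether it ends in an accepting state."""
    state = start
    for symbol in string:
        state = moves[(state, symbol)]
    return state in accepting


# DFA for "number of symbols is a multiple of 3": the state is the length mod 3.
STATES = {0, 1, 2}
MOVES = {(s, x): (s + 1) % 3 for s in STATES for x in "01"}
START = 0
ACCEPTING = {0}

# The complement keeps every state and transition; only the accepting set flips.
COMPLEMENT_ACCEPTING = STATES - ACCEPTING

for w in ["", "01", "010", "1111"]:
    print(repr(w),
          accepts(MOVES, START, ACCEPTING, w),
          accepts(MOVES, START, COMPLEMENT_ACCEPTING, w))
```

For every input, exactly one of the two machines accepts, which is what it means for one to be the complement of the other.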

Unions

If we can build NFAs to accept languages L and M, can we build one to accept L ∪ M? Yes. For instance, suppose D is a DFA for all strings with at least two 0s and E is a DFA for all strings with an even number of symbols. Here are both machines:

Here is a machine accepting the union:

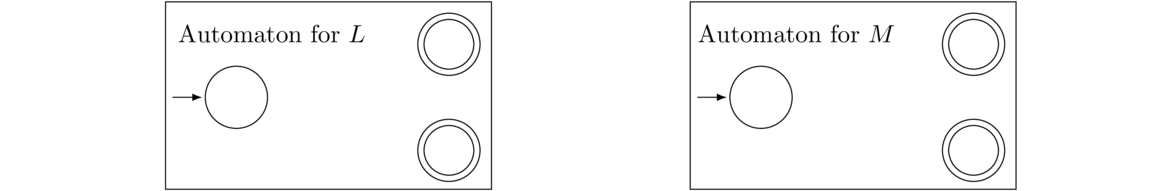

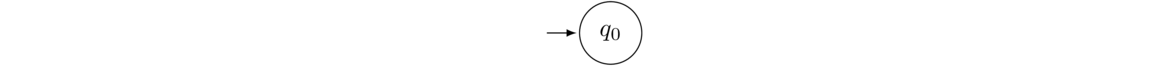

This simple approach works in general to give us an NFA that accepts the union of any two NFAs (or DFAs). Suppose we have two NFAs, as pictured below, that accept languages L and M.

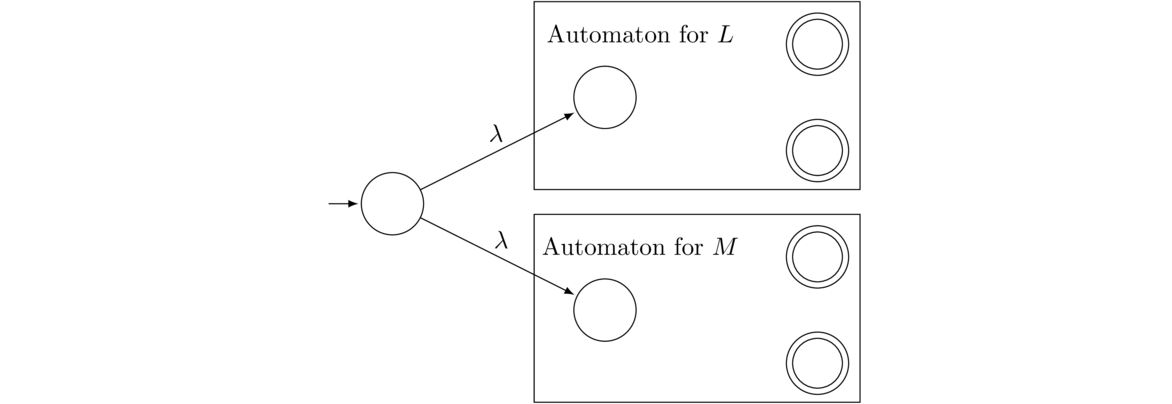

We can create an NFA that accepts L ∪ M by creating a new initial state and creating a λ transition to the initial states of the two NFAs. Those two states cease being initial in the new NFA. Everything else stays the same. See below:

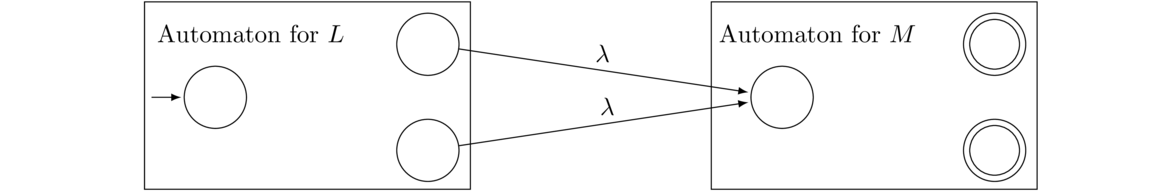

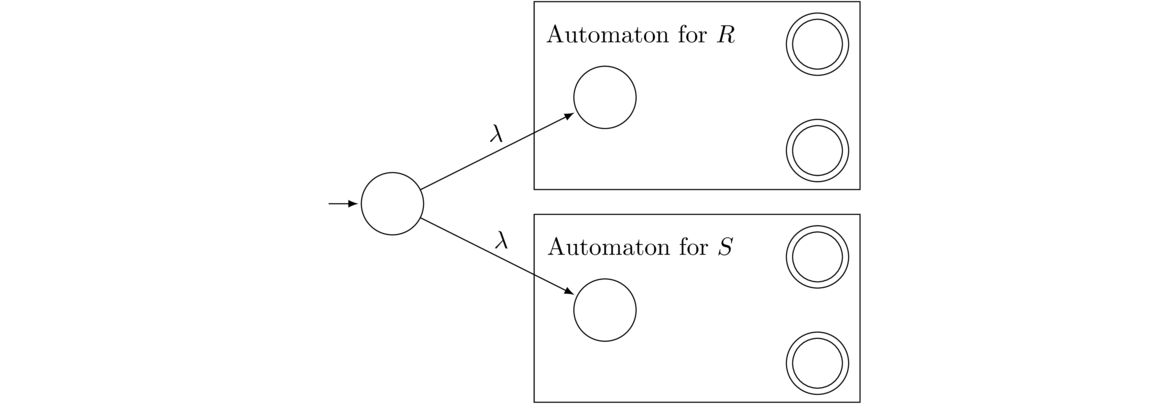

Concatenation

Given NFAs that accept languages L and M, it is possible to construct an NFA that accepts the concatenation LM. The idea is to feed the result of the L NFA into the M NFA. We do this by taking the machine for L and adding λ transitions from its accepting states into the initial state of M. The accepting states of L will lose their accepting status in the machine for LM, and the initial state for M is no longer initial. Everything else stays the same. The construction is illustrated below:

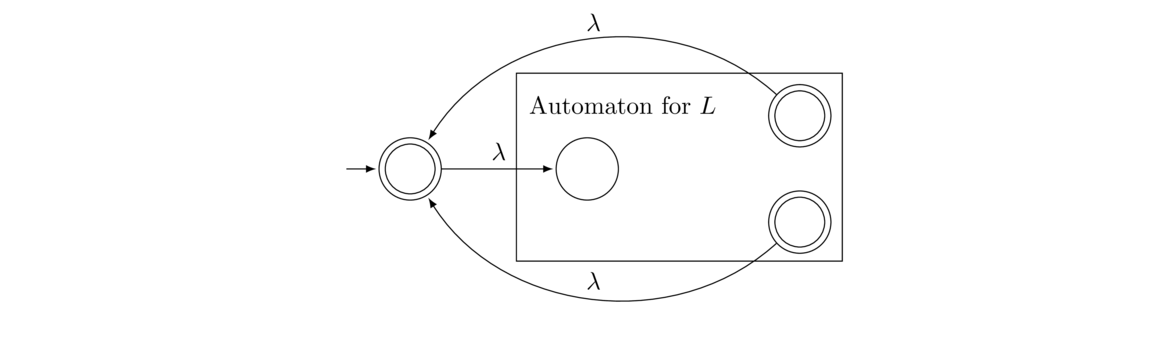

Kleene star

Given an NFA that accepts a language L, is it possible to create an NFA that accepts L*? Recall that L* is, roughly speaking, all the strings we can build from the strings of L.

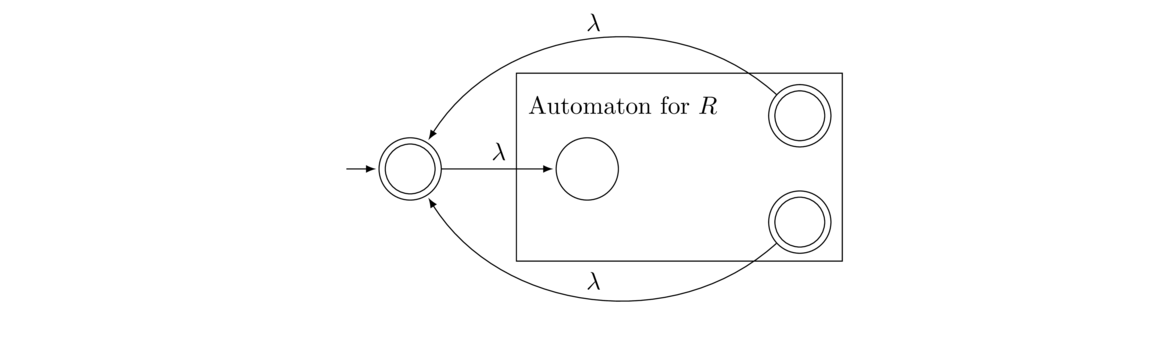

The answer is yes, and the trick is to feed the accepting states of L back to the initial state with λ transitions. However, we also have to make sure that we accept the empty string, which is a part of L*. It would be tempting to make the initial state of L accepting, which would accomplish our goal. But that could also have unintended consequences if some states have transitions into the initial state. In that case, some strings could end up getting accepted that shouldn't be. It's a good exercise to find an example for why this won't work. So to avoid this problem, we create a new initial state that is also accepting and feeds into the old initial state. The whole construction is shown below.

Intersections

We saw above that there is a relatively straightforward construction that accepts L ∪ M given machines that accept L and M. What about the intersection L ∩ M? There is no corresponding simple NFA construction, but there is a nice way to do this for DFAs.

The trick to doing this can be seen in an earlier example we had about building a DFA to accept all strings with at least three 0s and at least two 1s (Example 18 of Section 2.2). For that example, we ended up building a grid of states, where each state keeps track of both how many zeroes and how many ones had been seen so far.

Given two DFAs D and E, we create a new DFA whose states are D × E, the Cartesian product. So every possible pair of states, the first from D and second from E, will be the states of the intersection DFA. A state in the intersection DFA will be accepting if and only if both parts of the pair are accepting in their respective DFAs.

Transitions are determined by looking at each part separately. For example, to determine the transition for 0 from the state (d1,e1), suppose that in D a 0 takes us from d1 to d2, and that in E a 0 takes us from e1 to e4. Then the 0 transition from (d1,e1) in the intersection will go to (d2,e4).

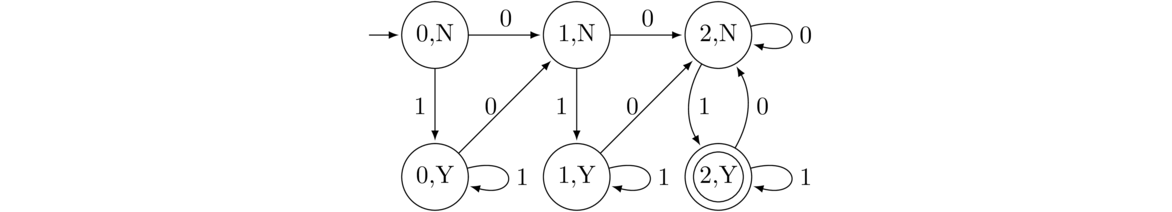

Here is an example to make all of this clear. Suppose D is a DFA for all strings with at least two 0s and E is a DFA for all strings that end in 1. Here are both machines:

Here is the DFA for the intersection:

One DFA has states 0, 1, and 2, and the other has states N and Y. Thus there are 3 × 2 = 6 states, gotten by pairing up the states from the two DFAs. The only accepting state is (2,Y) since it is the only one in which both pieces (2 and Y in this case) are accepting in their respective DFAs. As an example of how the transitions work, let's look at state (0,N). In the three-state machine, a 0 from State 0 transitions us to State 1, and in the two-state machine, a 0 transition from State N loops from State N to itself. Thus, in the intersection, the 0 transition from (0,N) will go to (1,N).

We could easily turn this into a DFA for the union L ∪ M by changing what states are accepting. The intersection's accepting states are all pairs where both states in the pair are accepting in their respective DFAs. For the union, on the other hand, we just need one or the other of the states to be accepting.

Note that this approach to intersections will not work in general for NFAs. We would have to first convert the NFA into a DFA and then apply this construction.
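Here is a minimal sketch of the product construction for the example above. The transition tables for D and E are reconstructed from the description (state names 0, 1, 2 and N, Y come from the text; the remaining transitions are what those languages require), and the dictionary layout and the `mode` parameter are assumptions made for this sketch.

```python
from itertools import product

ALPHABET = "01"

# D: at least two 0s. States count the 0s seen so far, capped at 2.
D = {
    "states": [0, 1, 2],
    "start": 0,
    "accepting": {2},
    "moves": {(0, "0"): 1, (1, "0"): 2, (2, "0"): 2,
              (0, "1"): 0, (1, "1"): 1, (2, "1"): 2},
}

# E: ends in 1. State Y means the last symbol read was a 1; N means it was not.
E = {
    "states": ["N", "Y"],
    "start": "N",
    "accepting": {"Y"},
    "moves": {("N", "0"): "N", ("Y", "0"): "N",
              ("N", "1"): "Y", ("Y", "1"): "Y"},
}


def product_dfa(d, e, mode="intersection"):
    """Build the DFA whose states are pairs (state of d, state of e)."""
    states = list(product(d["states"], e["states"]))
    # Each component of the pair moves according to its own DFA.
    moves = {
        ((p, q), x): (d["moves"][(p, x)], e["moves"][(q, x)])
        for (p, q) in states
        for x in ALPHABET
    }
    if mode == "intersection":
        accepting = {(p, q) for (p, q) in states
                     if p in d["accepting"] and q in e["accepting"]}
    else:  # "union": one or the other is enough
        accepting = {(p, q) for (p, q) in states
                     if p in d["accepting"] or q in e["accepting"]}
    return {"states": states, "start": (d["start"], e["start"]),
            "accepting": accepting, "moves": moves}


both = product_dfa(D, E)
either = product_dfa(D, E, mode="union")
print(both["accepting"])                # {(2, 'Y')}
print(both["moves"][((0, "N"), "0")])   # (1, 'N')
```

Switching `mode` changes only the accepting set, which mirrors the remark that the same grid of states serves for both the intersection and the union.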

Other things

There are many other questions we can ask and answer about NFAs and DFAs. For instance, a nice exercise is the following: given an automaton that accepts L, construct an automaton that accepts the reverses of all the strings of L.

Here are some questions we can ask about an automaton.

- Does it accept any strings at all?

- Does it accept every possible string from Σ*?

- Does it accept an infinite or a finite language?

- Given some other automaton, does it accept the same language as this one?

- Given some other automaton, is its language a subset of this one's language?

All of these are answerable. To give a taste of how some of these work, note that an automaton accepts an infinite language exactly when there is a cycle (in the graph-theoretic sense) that can be reached from the initial state and from which an accepting state can be reached (for an NFA, the cycle must read at least one symbol). And we can tell if two automata accept the same language by constructing the intersection of each one with the complement of the other and checking that neither of those automata accepts anything at all.

In fact, most interesting things we might ask about NFAs and DFAs can be answered with little work. As we build more sophisticated types of machines, we will find that this no longer holds: most of the interesting questions are unanswerable in general.
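To make the subset and equality questions concrete, here is a rough sketch for DFAs. It explores the pairs of states that two DFAs can be in after reading the same string, which is the reachable part of the product construction. The helper `length_mod_dfa` and the dictionary layout are assumptions made for this sketch, chosen only to have some small machines to compare.

```python
from collections import deque

ALPHABET = "01"


def length_mod_dfa(m, accepting):
    """DFA over {0,1} whose state is (number of symbols read) mod m."""
    return {
        "start": 0,
        "accepting": set(accepting),
        "moves": {(s, x): (s + 1) % m for s in range(m) for x in ALPHABET},
    }


def reachable_pairs(d, e):
    """All pairs (p, q) that d and e can be in after reading the same string."""
    start = (d["start"], e["start"])
    seen = {start}
    queue = deque([start])
    while queue:
        p, q = queue.popleft()
        for x in ALPHABET:
            nxt = (d["moves"][(p, x)], e["moves"][(q, x)])
            if nxt not in seen:
                seen.add(nxt)
                queue.append(nxt)
    return seen


def is_subset(d, e):
    """True if L(d) is a subset of L(e): d intersected with the complement of e is empty."""
    return not any(p in d["accepting"] and q not in e["accepting"]
                   for p, q in reachable_pairs(d, e))


def same_language(d, e):
    """True if d and e accept exactly the same strings."""
    return is_subset(d, e) and is_subset(e, d)


even_a = length_mod_dfa(2, {0})
even_b = length_mod_dfa(4, {0, 2})      # a different machine for even lengths
mult_of_4 = length_mod_dfa(4, {0})
print(same_language(even_a, even_b))    # True
print(is_subset(mult_of_4, even_a))     # True
print(is_subset(even_a, mult_of_4))     # False
```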

Regular Languages

Regular Expressions

We start with a definition. A regular language is a language that we can construct some NFA (or DFA) to accept. Regular languages are nicely described by a particular type of notation known as a regular expression (regex for short). You may have used regular expressions before in a programming language. Those regular expressions were inspired by the type of regular expressions that we will consider here. These regular expressions are considerably simpler than the ones used in modern programming languages.

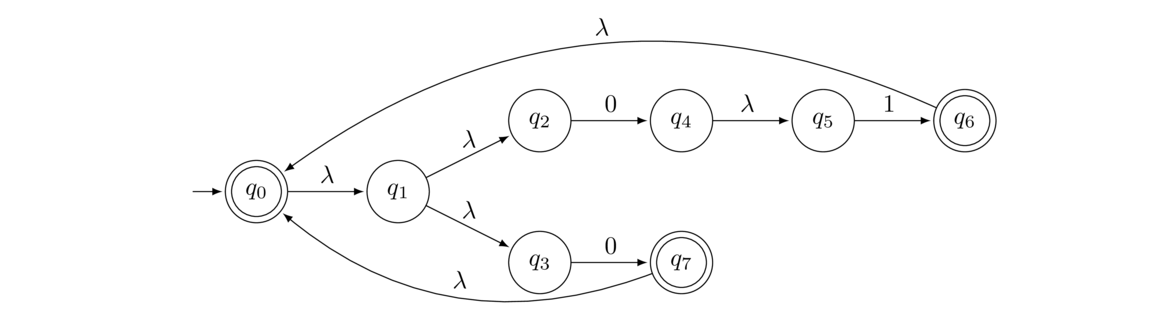

Here is an example regex: 0*1(0+1). This stands for any number of 0s, followed by a 1, followed by a 0 or 1. Here is an NFA for the language described by this regex:

Regular expressions are closely related to NFAs. A regular expression is basically a convenient notation for describing regular languages.

Here is a description of what a regular expression over an alphabet Σ can consist of:

- Any symbol from Σ.

- The empty string, λ.

- The empty set, ∅ (this is not used very often).

- The + operator, which is used for the union of two regexes.

- Concatenation, obtained by placing one regex immediately after another.

- The * operator, which is used like the * operator on languages.

- Parentheses, which are used for clarity and order of operations.

Example regular expressions

Here are some small examples. Assume Σ = {0,1} for all of these.

- 01+110 — This describes the language consisting only of the strings 01 or 110, and nothing else.

- (0+1)1 — This is a 0 or a 1 followed by a 1. In particular, it is the strings 01 and 11.

- (0+1)(0+1) — This is a 0 or a 1 followed by another 0 or 1. In particular, it is the strings 00, 01, 10, and 11.

- (0+λ)1 — This is a 0 or the empty string followed by a 1, namely the strings 01 and 1.

- 0* — This is all strings of 0s, including no 0s at all. It is λ, 0, 00, 000, 0000, etc.

- (01)* — This is zero or more copies of 01. In particular, it is λ, 01, 0101, 010101, etc.

- (0+1)* — This is all possible strings of 0s and 1s.

- (0+1)(0+1)* — This is a 0 or 1 followed by any string of 0s and 1s. In other words, it is all strings of length one or more.

- 0*10* — This is any number of 0s, followed by a single 1, followed by any number of 0s.

- 0*+1* — This is any string of all 0s or any string of all 1s.

- λ + 0(0+1)* + 1(0+1)(0+1)* — This is the empty string, or a 0 followed by any string of symbols, or a 1 followed by at least one symbol. In short, it is all strings except a single 1.

Note: The order of operations is that * is done first, followed by concatenation, followed by +. This is very similar to how the order of operations works with ordinary arithmetic, thinking of * as exponentiation, concatenation as multiplication, and + as addition. Parentheses can be used to force something to be done first.

Constructing regular expressions

Just like with constructing automata, constructing regexes is more art than science. However, there are certain tricks that come up repeatedly that we will see. Here are some examples. It's worth trying to do these on your own before looking at the answers. Assume Σ = {0,1} unless otherwise noted.

- The language consisting of only the strings 111, 0110, and 10101.

- All strings starting with 0.

- All strings ending with 1.

- All strings containing the substring 101.

- All strings with exactly one 1.

- All strings with at most one 1.

- All strings with at most two 1s.

- All strings with at least two 1s.

- All strings of length at most 2.

- All strings that begin and end with a repeated symbol.

- Using Σ = {0}, all strings with an even number of 0s.

- Using Σ = {0}, all strings with an odd number of 0s.

- All strings with an even number of 0s.

- All strings with no consecutive 0s.

Below are the solutions. Note that these are not the only possible solutions.

- The language consisting of only the strings 111, 0110, and 10101.

Solution: 111 + 0110 + 10101 — Any finite set like this can be done using the + operator.

- All strings starting with 0.

Solution: 0(0+1)* — Remember that (0+1)* is the way to get any string of 0s and 1s. So to do this problem, we start with a 0 and follow the 0 with (0+1)* to indicate a 0 followed by anything.

- All strings ending with 1.

Solution: (0+1)*1 — This is a lot like the previous problem.

- All strings containing the substring 101.

Solution: (0+1)*101(0+1)* — We can have any string, followed by 101, followed by any string. Note the similarity to how we earlier constructed an NFA for a substring.

- All strings with exactly one 1.

Solution: 0*10* — The string might start with some 0s or not. Using 0* accomplishes this. Then comes the required 1. Then the string can end with some 0s or not.

- All strings with at most one 1.

Solution: 0*(λ + 1)0* — This is like the above, but in place of 1, we use (λ + 1), which allows us to either take a 1 or nothing at all. Note also the flexibility in the star operator: if we choose λ from the (λ+1) part of the regex, we could end up with an expression like 0*0*, but since the star operator allows the possibility of none at all, 0*0* is the same as 0*.

- All strings with at most two 1s.

Solution: 0*(λ + 1)0*(λ + 1)0* — This is a lot like the previous example, but a little more complicated.

- All strings with at least two 1s.

Solution: 0*10*1(0+1)* — We can start with some 0s or not (this is 0*). Then we need a 1. There then could be some 0s or not, and after that we get our second required 1. After that, we can have anything at all.

- All strings of length at most 2.

Solution: λ + (0+1) + (0+1)(0+1) — We have this broken into λ (length 0), (0+1) (length 1), or (0+1)(0+1) (length 2). An alternate solution is (λ+0+1)(λ+0+1).

- All strings that begin and end with a repeated symbol.

Solution: (00+11)(0+1)*(00+11) — The (00+11) at the start allows us to start with a double symbol. Then we can have anything in the middle, and (00+11) at the end allows us to end with a double symbol.

- Using Σ = {0}, all strings with an even number of 0s.

Solution: (00)* — We force the 0s to come in pairs, which makes there be an even number of 0s.

- Using Σ = {0}, all strings with an odd number of 0s.

Solution: 0(00)* — Every odd number is an even number plus 1, so we take our even number solution and add an additional 0.

- All strings with an even number of 0s (with Σ = {0,1}).

Solution: (1*01*01*)* + 1* — What makes this harder than the Σ = {0} case is that 1s can come anywhere in the string, like in the string 0101100 containing four 0s. We can build on the (00)* idea, but we need to get the 1s in there somehow. Using (1*01*01*)* accomplishes this. Basically, every 0 is paired with another 0, and there can be some 1s intervening, along with some before that first 0 of the pair and after the second 0 of the pair. We also need to union with 1* because the first part of the expression doesn't account for strings with no 0s.

- All strings with no consecutive 0s.

Solution: 1*(011*)*(0+λ) — Every 0 needs to have a 1 following it, except for a 0 at the end of the string. Using (011*)* forces each 0 (except a 0 as the last symbol) to be followed by at least one 1. We then add 1* at the start to allow the string to possibly start with 1s. For a 0 as the last symbol, we have (0+λ) to allow the possibility of ending with a 0 or not.
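One way to gain confidence in solutions like these is to test them by brute force against a direct description of the language. The sketch below does this with Python's `re` module for a few of the solutions above. The translation function `to_python` is an assumption of this sketch: it rewrites the notation of these notes (+ for union, λ for the empty string) into Python's syntax (| for union, an empty alternative for λ). Checking all strings up to a fixed length is evidence, not a proof.

```python
import re
from itertools import product


def to_python(regex):
    """Translate the notation of the notes: + is union, λ is the empty string."""
    return regex.replace(" ", "").replace("λ", "").replace("+", "|")


def all_strings(max_len, alphabet="01"):
    """Every string over the alphabet of length 0 through max_len."""
    for n in range(max_len + 1):
        for symbols in product(alphabet, repeat=n):
            yield "".join(symbols)


# (regex from the solutions, direct test for membership in the language)
CHECKS = [
    ("0(0+1)*", lambda s: s.startswith("0")),
    ("(0+1)*101(0+1)*", lambda s: "101" in s),
    ("0*(λ + 1)0*", lambda s: s.count("1") <= 1),
    ("0*10*1(0+1)*", lambda s: s.count("1") >= 2),
    ("(1*01*01*)* + 1*", lambda s: s.count("0") % 2 == 0),
    ("1*(011*)*(0+λ)", lambda s: "00" not in s),
]


def mismatches(regex, predicate, max_len=8):
    """Strings on which the regex and the direct test disagree."""
    pattern = re.compile(to_python(regex))
    return [s for s in all_strings(max_len)
            if bool(pattern.fullmatch(s)) != predicate(s)]


for regex, predicate in CHECKS:
    print(regex, "disagreements:", mismatches(regex, predicate))
```

Note the use of `fullmatch`: the regexes in these notes describe whole strings, whereas Python's `search` and `match` would accept a match on only part of the input.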

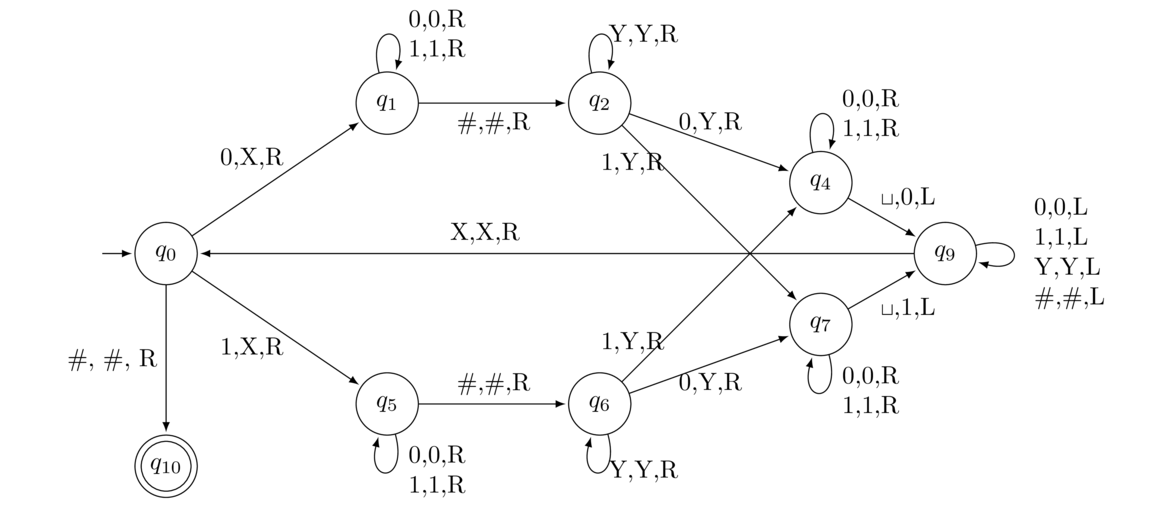

Converting Regular Expressions to NFAs

There is a close relationship between regexes and NFAs. Recall that regexes can consist of the following: symbols from the alphabet, λ, ∅, R+S, RS, or R*, where R and S are themselves regexes. Below are the NFAs corresponding to all six of these. Assume that Σ = {0,1}. Notice the similarity in the last three to the NFA constructions for the union, concatenation, and star operation from Section 2.5.

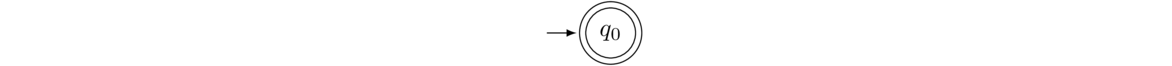

- An NFA for the regex 0.

- An NFA for the regex λ.

- An NFA for the regex ∅.

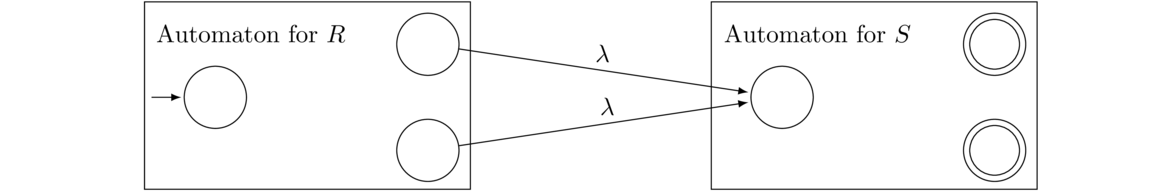

- An NFA for the regex R+S.

- An NFA for the regex RS.

- An NFA for the regex R*.

We can mechanically use these rules to convert any regex into an NFA. For example, here is an NFA for the regex (01+0)*.

Mechanically applying the rules can lead to somewhat bloated NFAs, like the one above. By hand, it's often possible to reason out something simpler. However, a benefit of the mechanical process is that it lends itself to being programmed. In fact, JFLAP has this algorithm (as well as many others) programmed in. It's worth taking a look at the software just because it's pretty nice, and it's helpful for checking your work.
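To give a feel for how the mechanical process can be programmed, here is a rough sketch. It uses a common textbook variant of the constructions (often called Thompson's construction) in which every piece has exactly one start state and one accepting state, so the details differ slightly from the figures above. The class and method names are assumptions made for this sketch, and this is not how JFLAP itself is implemented.

```python
import itertools


class NFABuilder:
    """Builds NFA fragments; each fragment is a (start, accept) pair of states."""

    def __init__(self):
        self.moves = {}                # (state, symbol) -> set of states; "" is λ
        self._ids = itertools.count()

    def _new(self):
        return next(self._ids)

    def _add(self, src, symbol, dst):
        self.moves.setdefault((src, symbol), set()).add(dst)

    def symbol(self, a):
        s, t = self._new(), self._new()
        self._add(s, a, t)
        return s, t

    def union(self, r, s):
        start, accept = self._new(), self._new()
        for frag_start, frag_accept in (r, s):
            self._add(start, "", frag_start)
            self._add(frag_accept, "", accept)
        return start, accept

    def concat(self, r, s):
        self._add(r[1], "", s[0])      # feed the result of r into s
        return r[0], s[1]

    def star(self, r):
        start, accept = self._new(), self._new()
        self._add(start, "", r[0])     # enter the loop body
        self._add(start, "", accept)   # or skip it, which accepts λ
        self._add(r[1], "", r[0])      # go around again
        self._add(r[1], "", accept)    # or leave the loop
        return start, accept

    def _closure(self, states):
        closure, stack = set(states), list(states)
        while stack:
            for t in self.moves.get((stack.pop(), ""), set()):
                if t not in closure:
                    closure.add(t)
                    stack.append(t)
        return closure

    def accepts(self, frag, string):
        """Simulate the fragment as an NFA on the given string."""
        current = self._closure({frag[0]})
        for ch in string:
            step = set()
            for s in current:
                step |= self.moves.get((s, ch), set())
            current = self._closure(step)
        return frag[1] in current


b = NFABuilder()
# (01+0)* built from the inside out.
regex_nfa = b.star(b.union(b.concat(b.symbol("0"), b.symbol("1")), b.symbol("0")))
for w in ["", "0", "01", "0010", "1", "011"]:
    print(repr(w), b.accepts(regex_nfa, w))
```

The resulting NFA has more states and λ moves than a hand-built one would, which is the bloat mentioned above.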

Converting DFAs to Regular Expressions

We have just seen how to convert a regex to an NFA. Earlier we saw how to convert an NFA to a DFA. Now we will close the loop by showing how to convert a DFA to a regex. This shows that all three objects — DFAs, NFAs, and regexes — are equivalent. That is, they are three different ways of representing the same thing.

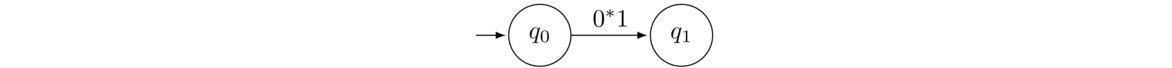

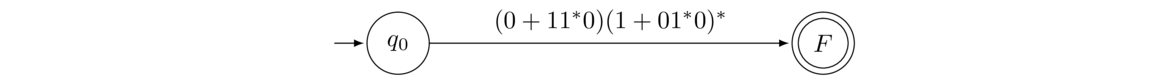

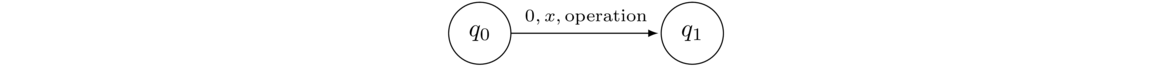

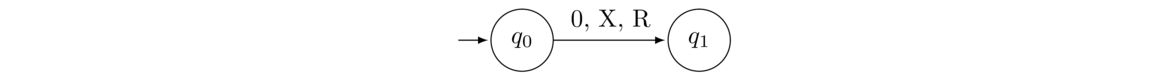

To convert DFAs to regexes, we use something called a generalized NFA (GNFA). This is an NFA whose transitions are labeled with regexes instead of single symbols. For instance, the GNFA below means that we move from State 1 to State 2 whenever we read any sequence of 0s followed by a 1.

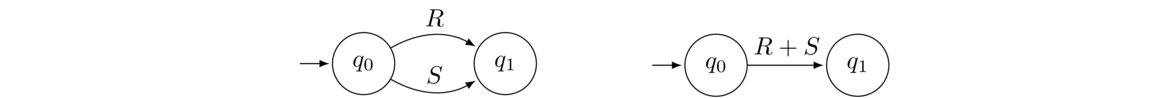

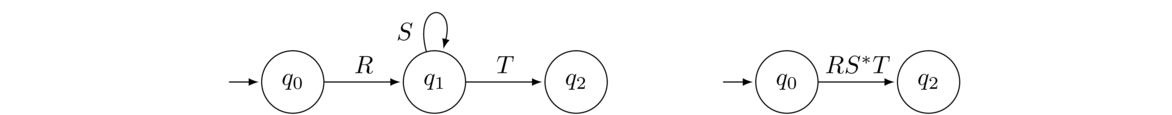

There is a pretty logical process for converting a DFA into a GNFA that accepts the same language. There are two key pieces, shown below:

- Transitions between the same two states of a GNFA can be combined using the or (+) regex operation, like below (assume that R and S are both regexes):

This applies to loops as well.

- The GNFA shown on the left can be reduced to the one on the right by bypassing the state in the middle.

The idea is that as we move in the left GNFA from q0 to q2, we get R, then we can loop for as long as we like picking up S's on q1, and then we get a T as we move to q2. This is the same as the regex RS*T.

We can combine these operations in a process to turn any DFA into a regex. Here is the process:

- If the initial state of the DFA has any transitions coming into it, then create a new initial state with a λ transition to the old initial state.

- If any accepting state has a transition going out of it to another state, or if there are multiple accepting states, then create a new accepting state with λ transitions coming from the (now no longer) accepting states into the new accepting state.

- Repeatedly bypass states and combine transitions, as described earlier, until only the initial state and final state remain. The regex on the transition between those two states is a regex that accepts the same language as the original DFA. States can be bypassed in any order.

Examples

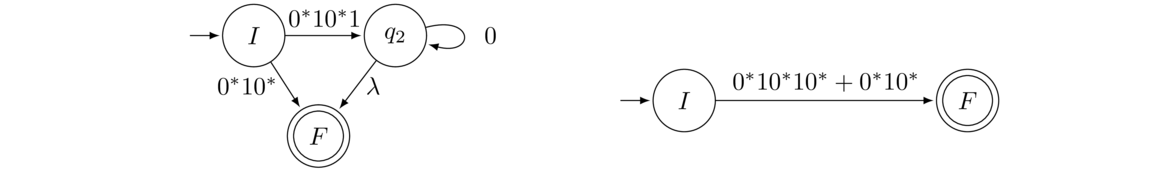

Convert the DFA below on the left into a regex.

To start, the initial state has no transitions into it, so we don't need to add a new initial state. However, the final state does have a transition out of it, so we add a new final state, as shown above on the right.

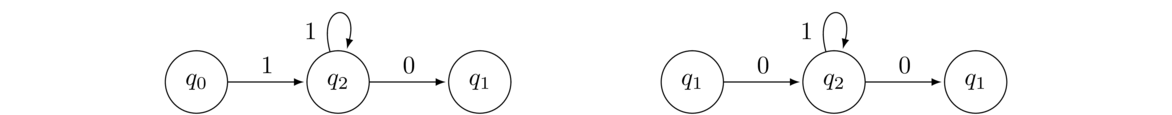

Now we start bypassing vertices. We can do this in any order. Let's start by bypassing q2. To bypass it, we look for all paths of the form x → q2 → y, where x and y can be any states besides q2. There are two such paths: q0 → q2 → q1 and q1 → q2 → q1. The first path generates the regex 11*0 and the second generates the regex 01*0. These paths are shown below.

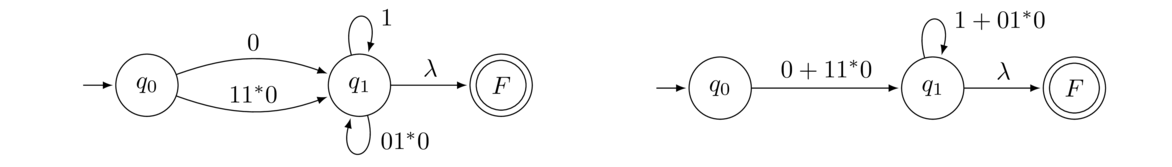

The resulting GNFA is shown below on the left. We then combine the two paths from q0 to q1 and the two loops on q1, as shown on the right.

Next, let's bypass q1. The result is shown below.

Only the initial and accepting states are left, so we have our answer: (0+11*0)(1+01*0)*
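The bypass-and-combine steps of this example can be sketched in code. The transitions below are reconstructed from the paths described in the example (the figure itself may be laid out differently), and the helper names `group`, `star`, and `bypass`, as well as the dictionary of edges, are assumptions made for this sketch. The simple parenthesization rules are enough for this example but may add redundant parentheses in others.

```python
def group(r):
    """Prepare r for concatenation: drop λ and parenthesize unions."""
    if r == "λ":
        return ""
    return f"({r})" if "+" in r else r


def star(r):
    """Regex for zero or more copies of the loop label r (None means no loop)."""
    if r is None or r == "λ":
        return ""
    return f"{r}*" if len(r) == 1 else f"({r})*"


def bypass(edges, q):
    """Remove state q from the GNFA, replacing each path x -> q -> y by R S* T."""
    loop = edges.pop((q, q), None)
    incoming = {x: r for (x, y), r in edges.items() if y == q}
    outgoing = {y: t for (x, y), t in edges.items() if x == q}
    for x in incoming:
        del edges[(x, q)]
    for y in outgoing:
        del edges[(q, y)]
    for x, r in incoming.items():
        for y, t in outgoing.items():
            path = (group(r) + star(loop) + group(t)) or "λ"
            # Combine with any existing x -> y transition using +.
            edges[(x, y)] = f"{edges[(x, y)]}+{path}" if (x, y) in edges else path


# GNFA edges after adding the new accepting state F (reconstructed from the text).
edges = {
    ("q0", "q1"): "0", ("q0", "q2"): "1",
    ("q1", "q1"): "1", ("q1", "q2"): "0",
    ("q2", "q2"): "1", ("q2", "q1"): "0",
    ("q1", "F"): "λ",
}
for state in ["q2", "q1"]:
    bypass(edges, state)
print(edges[("q0", "F")])  # (0+11*0)(1+01*0)*
```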

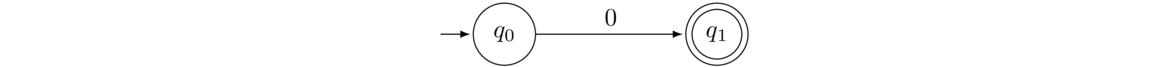

Convert the DFA below on the left into a regex.

The start state has a transition (a loop) coming into it, and there are multiple accepting states, so add a new start state and a new accepting state, like below.

Now we can start bypassing states. Right away, we can bypass q3 easily. There are no paths of the form x → q3 → y that pass through it (it's a junk state), so we can safely remove it. Let's then bypass q0. The only path through it is I → q0 → q1. The result of bypassing it (and removing q3) is shown below.

Next, let's remove q1. There are paths I → q1 → q2 and I → q1 → F that pass through q1. The result of bypassing q1 is shown below on the left. After that, we bypass q2 and combine the two transitions from I to F to get our final solution, shown at right.

Therefore, the regex matching our initial DFA is 0*10*10*+0*10*.

Non-Regular Languages

Not all languages are regular. One example is the language 0^n1^n of all strings with some number of 0s followed by the same number of 1s. Another example is the language of all palindromes, which are strings that read the same forward as backward (like 0110 or 10101).

If we want to show that a language is not regular, then according to the definition, we would need an argument to explain why it would be impossible to construct an NFA (or equivalently a DFA or regex) that accepts the language. The most common technique for doing this is the Pumping Lemma.

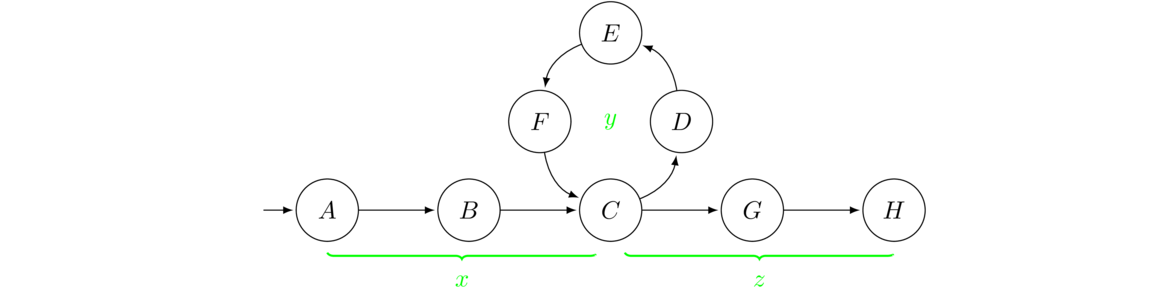

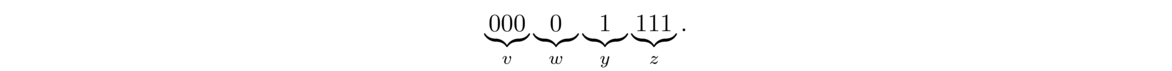

Here is the main idea behind the Pumping Lemma: If you feed an NFA an input string that is longer than the number of states in the automaton, then by the pigeonhole principle, some state will have to be visited more than once. That is, there will be a “loop” in the sequence of states that the string takes through the automaton. See the figure below.

The figure above shows the sequence of states a certain input string might travel through. Imagine that the string is broken up into xyz, where x is the part that takes the machine from A to C, y is the part that takes the machine from C back to C along the loop, and z is the part that takes the machine from C to H. The string xyz is in the language accepted by the machine, as is the string xy^2z, which comes from taking the loop twice. This is called “pumping up” the string xyz to obtain a new string in the language.

There is no restriction on how many times we take the loop, so we can pump the string up to xy^kz for any k > 0, and we can even “pump it down” to xy^0z (which is xz) by skipping the loop entirely.

We can use this idea to show the language of all strings of the form 0^n1^n is not regular. These are all strings that consist of a block of 0s followed by a block of the same number of 1s. Suppose there exists a DFA for it. Let k be the number of states in the DFA, and look at the string 0^(k+1)1^(k+1), which is accepted by the DFA. The first part of the string, the 0^(k+1) part, has more 0s than there are states in the machine, so there must be a cycle, and the cycle must only involve 0s. We can then pump up that cycle to get another string that is accepted. However, when we pump up the cycle, we end up getting more 0s, giving us a string that has more 0s than 1s. But this is not a string in the language, so we have a contradiction. The DFA can't possibly exist because it leads to an impossible situation.

We can formalize all of this into a technique for showing some languages are not regular. We use this pumping property: given any string in the language whose length is longer than the number of states in an NFA for that language, we can find a way to break it into xyz such that xy^kz is also in the language. This is called the Pumping Lemma. Here is a rigorous statement of it.

Pumping Lemma. If L is a regular language, then there is an integer n such that any string in L of length at least n can be broken into xyz with |xy| ≤ n and |y| > 0 such that xy^kz is also in L for all k ≥ 0.

Note the restrictions on the lengths of xy and y given above. The restriction |xy| ≤ n says that the “loop” has to happen within the first n symbols of the string, and the restriction |y| > 0 says there must be a (nonempty) loop.

The Pumping Lemma gives us a property that all regular languages must satisfy. If a language does not satisfy this property, then it is not regular. This is how we usually use the Pumping Lemma — we find a (long enough) string in the language that cannot be “pumped up”.

The first time through, it can be a little tricky to understand exactly how to use the Pumping Lemma, so it is often formulated as a game, like below:

- First, your opponent picks n.

- Then you pick a string s of length at least n that is in the language.

- Your opponent breaks s into xyz however they want, as long as the breakdown satisfies that |xy| ≤ n and |y| > 0.

- Then you show that no matter what breakdown they picked, there is a k ≥ 0 such that xy^kz is not in the language.

Here are a few key points to remember:

- The opponent chooses n, not you.

- Your string must be of length at least n and must be in the language.

- You don't get to pick the breakdown into xyz. Your opponent does. You need a logical argument that shows why all possible breakdowns the opponent chooses will fail.

In terms of playing this game, try to choose s so that the opponent is forced into picking an x and y that are advantageous to you. Also, in the last step, a lot of the time k = 2 or k = 0 is what works. Here are some examples.

Show the language of all strings of the form 0^n1^n is not regular.

First, let n be given. We choose s = 0^n1^n. Then suppose s is broken into xyz with |xy| ≤ n and |y| > 0. Because |xy| ≤ n, and s starts with the substring 0^n, we must have that x and y both consist of all 0s. Therefore, since |y| > 0, xy^2 will consist of more 0s than xy does. Hence xy^2z will be of the form 0^k1^n with k > n, which is not in the language. Thus the language fails the pumping property and cannot be regular.

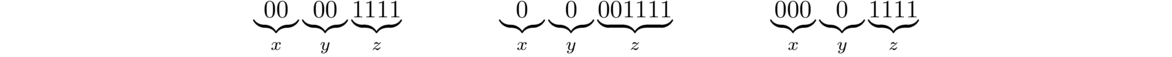

As an example of what we did above, consider n = 4. In that case, we pick s = 00001111. There are a variety of breakdowns our opponent could use, as shown below.

The key is that since |xy| ≤ 4, we are guaranteed that x and y will lie completely within the first block of 0s. And since |y| > 0, we are guaranteed that y has at least one 0. Thus when we look at xy^2, we know it will contain more 0s than xy. So xy^2z will have more 0s than 1s and won't be of the right form.

If the opponent, for instance, chooses the breakdown above on the left, then xy^2 will become 000000 and xy^2z becomes 0000001111, which is no longer of the proper form for strings in the language.
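For a fixed n, the opponent has only finitely many breakdowns, so the n = 4 case can be checked exhaustively. The sketch below does that; the function names are assumptions made for this illustration. Checking one value of n is not a proof (the written argument above covers every n), but it shows concretely what "every breakdown fails" means.

```python
def in_language(s):
    """True if s has the form 0^m 1^m for some m >= 0."""
    half = len(s) // 2
    return len(s) % 2 == 0 and s == "0" * half + "1" * half


def breakdowns(s, n):
    """Every split s = xyz available to the opponent: |xy| <= n and |y| > 0."""
    for xy_len in range(1, min(n, len(s)) + 1):
        for x_len in range(xy_len):
            yield s[:x_len], s[x_len:xy_len], s[xy_len:]


def pump(x, y, z, k):
    """The string x y^k z."""
    return x + y * k + z


n = 4
s = "0" * n + "1" * n
splits = list(breakdowns(s, n))
print(len(splits))  # 10
for x, y, z in splits:
    # Pumping up with k = 2 always leaves the language, whatever the split.
    print(repr(x), repr(y), repr(z), "->", pump(x, y, z, 2), in_language(pump(x, y, z, 2)))
```

Pumping down with k = 0 works just as well here, since removing y leaves fewer 0s than 1s.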

Show that the language of all palindromes on Σ = {0,1} is not regular.

First, let n be given. We choose s = 0^n10^n. This is a palindrome of length at least n. Now suppose s is broken into xyz with |xy| ≤ n and |y| > 0. Since |xy| ≤ n, we must have x and y contained in the initial substring 0^n of s. Hence x and y consist of all 0s. Therefore, since |y| > 0, the string xy^2z will be of the form 0^k10^n with k > n. This is not a palindrome, so it is not in the language. Therefore, the pumping property fails and the language is not regular.

Show that the language on Σ = {0} of all strings of the form 0^p, where p is prime, is not regular.

First, let n be given. We choose s = 0^p, where p is a prime greater than n. Now suppose s is broken into xyz with |xy| ≤ n and |y| > 0. Consider the string xy^(p+1)z, where we pump up the string p+1 times. We can think of it as consisting of x followed by p copies of y, followed by y again, followed by z. So it contains all of the symbols of s along with p additional copies of y. Thus we have

|xy^(p+1)z| = |xyz| + p|y| = p + p|y| = p(1 + |y|).

The number p(1 + |y|) cannot be prime: it is the product of p and 1 + |y|, and both of these are greater than 1 because |y| > 0. So xy^(p+1)z is not in the language. Thus the pumping property fails, and the language is not regular.

An interesting thing happens if we try to show that the language of all strings of the form 0^c, where c is composite (a non-prime), is not regular. Any attempts to use the Pumping Lemma will fail. Yet the language is still not regular. So the Pumping Lemma is not the be-all and end-all of showing things are not regular. However, we can indirectly use the Pumping Lemma. Recall that if we can construct a DFA that accepts a language, then we can easily construct one that accepts the language's complement by flipping the accepting and non-accepting states. So if we could build a DFA for composites, then we could build one for primes by this flip-flop process. But we just showed that primes are not regular, which means there is no DFA that accepts primes. Thus, it would be impossible to build one for composites.

Just to examine the Pumping Lemma a little further, suppose we were to try to use it to show that the language given by the regex 0*1* is not regular. This language is obviously regular (since it is given by a regular expression), so let's see where things would break down. Suppose n is given and we choose s = 0^n1^n. Suppose the opponent chooses the breakdown where x is empty, y is the first symbol of the string, and z is everything else. No matter how we pump the string up into xy^kz, we always get a string that fits the regex 0*1*. We aren't able to get something not in the language. So our Pumping Lemma attempt does not work.

What is and isn't regular

Anything that we can describe by a regular expression is a regular language. This includes any finite language, and many other things. Regular languages do not include anything that requires sophisticated counting or keeping track of too much. For instance, 0^n1^n requires that we somehow keep count of the number of 0s we have seen, which isn't possible for a finite automaton. Similarly, palindromes require us to remember an arbitrarily large number of previous symbols to compare them with later symbols, which is also impossible for a finite automaton.

We have also seen that a finite automaton can't tell if a string has a prime length. Generally, finite automata are not powerful enough to handle things like primes, powers of two, or even perfect squares. They can handle linear things, like telling if a length is odd or a multiple of 3, but nothing beyond that.

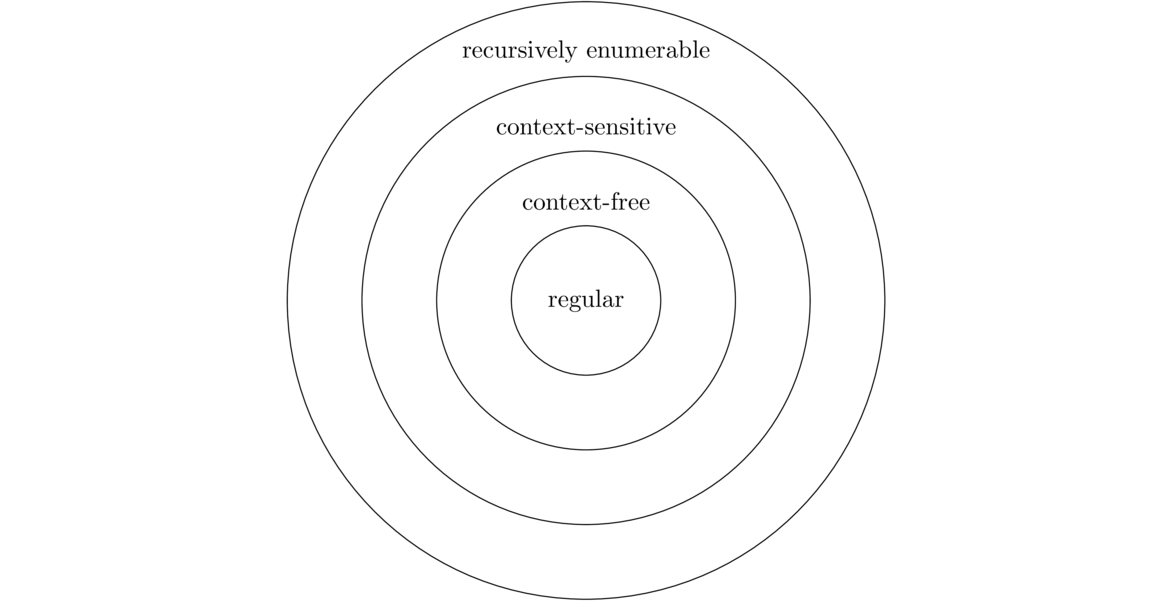

Context-Free Grammars

Definitions and Notations

A grammar is a way of describing a language. Grammars have four components:

- A finite set of items called terminals.

- A finite set of items called variables or non-terminals.

- A specific variable S that is the start variable.

- A finite set of rules called productions that specify substitutions, where a string of variables and terminals is replaced by another string of variables and terminals.

The grammars we will be considering here are context-free grammars, where all the productions involve replacing a single variable by a string of variables and terminals. Here is an example context-free grammar:

A → aB | λ

B → bBb | BB | ab

Let's identify the different parts of this grammar:

- In these notes, our variables will always be capital letters, and our terminals will always be lowercase letters or numbers. So in the grammar above, A and B are the variables, while a and b are the terminals. The empty string λ is also a terminal.

- The production A → aB | λ gives the possible things we can replace A with. Namely, we can replace it with aB or with the empty string. The | symbol in a production is read as “or”. It allows us to specify multiple rules in the same line. We could also separate this into two rules A → aB and A → λ.

- The start variable is A. The start variable is always the one on the left side of the first production.

For any grammar, the language generated by the grammar is all the strings of terminals it is possible to get by beginning with the start variable and repeatedly applying the productions (i.e. making substitutions allowed by the productions.)

Here is an example of using the grammar to generate a string. Starting with A in the grammar above, we can apply the rule A → aB. Then we can apply the rule B → BB to turn aB into aBB. Then we can apply the rule B → ab to the first B to get aabB. After that, we can apply the rule B → bBb to get aabbBb. Finally, we can apply the rule B → ab to get the string aabbabb. This is one of the many strings in the language generated by this grammar.

We can display the sequence of substitutions above like below (new substitutions shown in bold):

This is called a derivation. When doing derivations, there can sometimes be a choice between two variables as to which one to expand first, like above where we have aBB. Often it doesn't matter, but sometimes it helps to have a standard way of doing things. A leftmost derivation is one such standard, where we always choose the variable farthest left as the one to expand first.
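The derivation of aabbabb described earlier can be replayed mechanically. The Python sketch below is one way to do it; the helper name `apply_leftmost` and the list-of-pairs encoding of the rule choices are assumptions made for this illustration. It relies on the convention in these notes that variables are capital letters.

```python
def apply_leftmost(form, variable, replacement):
    """Replace the leftmost occurrence of `variable` in `form`."""
    i = form.index(variable)
    return form[:i] + replacement + form[i + 1:]


# The rule choices from the derivation in the text, in order.
steps = [("A", "aB"), ("B", "BB"), ("B", "ab"), ("B", "bBb"), ("B", "ab")]

form = "A"
history = [form]
for variable, replacement in steps:
    form = apply_leftmost(form, variable, replacement)
    history.append(form)

print(" => ".join(history))
```

Since each step expands the leftmost variable, this particular derivation is a leftmost derivation.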

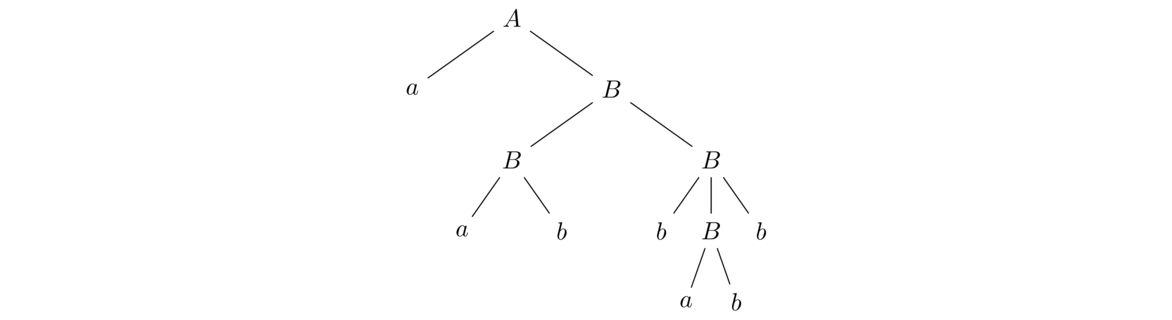

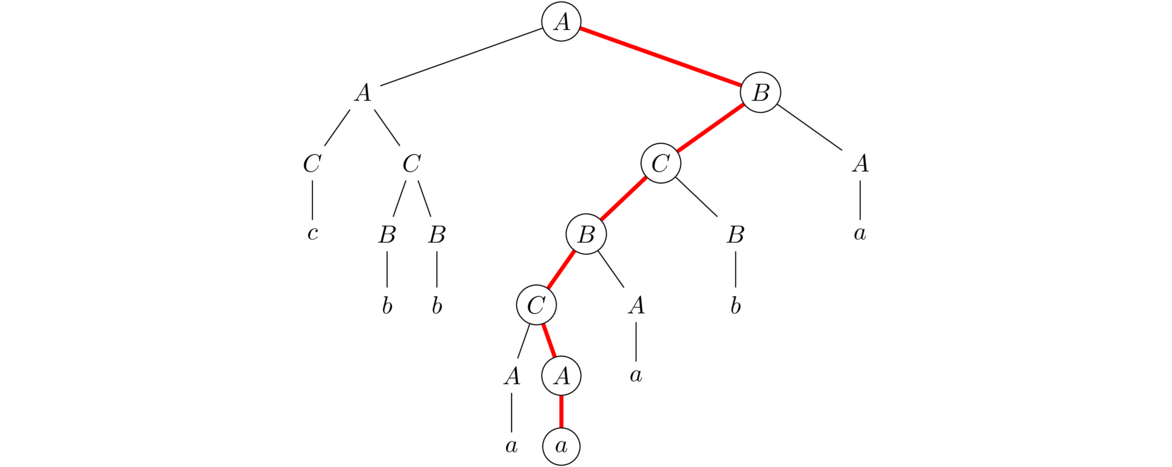

A second way of displaying a sequence of substitutions is to use a parse tree. Here is a parse tree for the string derived above.

The string this derives can be found by reading the terminals from the ends of the tree (its leaves) from left to right (technically in a preorder traversal).

There may be multiple possible parse trees for a given string. If that's the case, the grammar is called ambiguous.

Examples

Finding the language generated by a grammar

- Find the language generated by this grammar:

A → aA | λ

One approach is to start with the start variable and see what we can get to. Starting with A, we can apply A → aA to get aA. We can apply it again to get aaA. If we apply it a third time, we get aaaA. We can keep doing this for as long as we like, and we can stop by choosing A → λ at any point. So we see that this grammar generates strings of any number of a's, including none at all. It fits the regular expression a*.

Notice the recursion in the grammar's definition. The variable A is defined in terms of itself. The rule A → λ acts as a base case to stop the recursion.
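The same recursion can be written as a short Python function. This is just a sketch to show the parallel; the function name `derive_a_string` is made up here, and the parameter n stands for how many times we choose A → aA before stopping.

```python
def derive_a_string(n):
    """Mimic n uses of A -> aA followed by one use of A -> λ."""
    if n == 0:
        return ""                         # base case: A -> λ
    return "a" + derive_a_string(n - 1)   # recursive case: A -> aA


print([derive_a_string(n) for n in range(4)])
```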

- Find the language generated by this grammar:

S → AB

A → aA | λ

B → bB | b

Notice that the grammar for A is the same as the grammar from the previous problem. The grammar for B is nearly the same. The B → b production, however, does not allow the possibility of no b's at all. We must have at least one b.

The first production, S → AB, means that all strings generated by this grammar will consist of something generated by A followed by something generated by B. Thus, the language generated by this grammar is all strings of the form a*bb*; that is, the language is all strings consisting of any number of a's followed by at least one b.

- Find the language generated by this grammar:

S → A | B

A → aA | λ

B → bB | b

This is almost the same grammar as above, except that S → AB has been replaced with S → A | B. Remember that | means “or”, so the language generated by this grammar is all strings that consist either of any number of a's or of one or more b's. As a regular expression, this is a*+bb*.

- Find the language generated by this grammar:

S → 0S1 | λ

Let's look at a typical derivation:

S ⇒ 0S1 ⇒ 00S11 ⇒ 000S111 ⇒ 000111

We see that every iteration of the process generates another 0 and another 1. So the language generated by this grammar is all strings of the form 0n1n for n ≥ 0.

Recall that 0n1n is not a regular language, so this example shows that context-free grammars can do things that NFAs and DFAs can't.
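One way to see the extra power is to write a membership test that follows the grammar directly. The Python sketch below undoes the production S → 0S1 from the outside in; the function name `in_0n1n` is made up for this illustration. The recursion can nest arbitrarily deep, which is a kind of memory a finite automaton does not have.

```python
def in_0n1n(s):
    """Check membership by undoing S -> 0S1 from the outside in."""
    if s == "":
        return True                      # matches S -> λ
    if len(s) >= 2 and s[0] == "0" and s[-1] == "1":
        return in_0n1n(s[1:-1])          # peel off one use of S -> 0S1
    return False


for w in ["", "01", "000111", "001", "0101"]:
    print(repr(w), in_0n1n(w))
```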

- Find the language generated by this grammar:

S → aSa | bSb | a | b | λ

Let's look at a typical derivation:

S ⇒ aSa ⇒ aaSaa ⇒ aabSbaa ⇒ aabbaa

We see that each a comes with a matching a on the other side of the string and likewise with each b. This forces the string to read the same backwards as forwards. This grammar generates all palindromes.

- Find the language generated by this grammar, where the symbols ( and ) are the terminals of the grammar:

S → SS | (S) | λ

This is an important grammar. It generates all strings of balanced parentheses. The production S → (S) allows for nesting of parentheses, and the production S → SS allows expressions like ()(), where one set of parentheses closes and then another starts.
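For comparison, here is a common way to test for balanced parentheses in code. It does not follow the grammar; instead it keeps a running count of how many parentheses are currently open. This is a sketch, and the function name `is_balanced` is just a name chosen here.

```python
def is_balanced(s):
    """Return True if s is a string of balanced parentheses."""
    depth = 0
    for ch in s:
        if ch == "(":
            depth += 1
        elif ch == ")":
            depth -= 1
            if depth < 0:        # a ")" with no matching "(" before it
                return False
        else:
            return False         # only ( and ) are terminals here
    return depth == 0


for w in ["", "()()", "(())", ")(", "(()"]:
    print(repr(w), is_balanced(w))
```

The counter can grow without bound, which hints at why this language is beyond what a finite automaton can handle.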

Creating grammars

Now let's try creating grammars to generate given languages. Here are the problems first. It's a good idea to try them before looking at the answers. Assume Σ = {a,b} unless otherwise noted.

- All strings of a's containing at least three a's.

- All strings of a's and b's.

- All strings with exactly three a's.

- All strings with at most three a's.

- All strings with at least three a's.

- All strings containing the substring ab.

- All strings of the form ambncn for m,n ≥ 0.

- All strings of the form ambn with m > n ≥ 0.

- All strings of the form ambncm+n with m,n ≥ 0.

- All strings of the form akbmcn with n = m or n = k and k,m ≥ 0.

- All strings where the number of a's and b's are equal.

Here are the solutions.

- All strings of a's containing at least three a's.

A → aA | aaa

The grammar we did earlier, A → aA | λ, is a useful thing to keep in mind. Here we modify it so that instead of stopping the recursion at an empty string, we stop at aaa, which forces there to always be at least three a's.

- All strings of a's and b's.

S → aS | bS | λ

The idea behind this is that at each step of the string generation, we can recursively add either an a or a b. So this grammar builds up strings one symbol at a time. For instance, to derive the string abba, we would do S ⇒ aS ⇒ abS ⇒ abbS ⇒ abbaS ⇒ abba.

- All strings with exactly three a's.

S → BaBaBaB

B → bB | λ

We use the B production to get a (possibly empty) string of b's. Then we imagine the string as being constructed of three a's, with each preceded and followed by a (possibly empty) string of b's.

- All strings with at most three a's.

S → BABABAB

A → a | λ

B → bB | λ

This is similar to the above, but instead of using the terminal a in the S production, we use A, which has the option of being a or empty, allowing us to possibly not use an a.

- All strings with at least three a's.

S → TaTaTaT

T → aT | bT | λ

This is somewhat like the previous two examples. In the S production, we force there to be three a's. Then before, after, and in between those a's, we have a T, which allows any string at all.

- All strings containing the substring ab.

S → TabT

T → aT | bT | λ

This is similar to the previous example in that we force the string to contain ab, while the rest of the string can be anything at all.

- All strings of the form ambncn for m,n ≥ 0.

S → AT

A → aA | λ

T → bTc | λ

All strings in this language consist of a string of a's followed by a string containing a bunch of b's followed by the same number of c's. We have a variable A that produces a string of a's and a variable T that produces bncn. We join them together with the production S → AT.

- All strings of the form ambn with m > n ≥ 0.

S → AT

A → aA | a

T → aTb | λ

This is similar to the previous example. The strings in this language can be thought of as consisting of some nonzero number of a's followed by a string of the form anbn.

- All strings of the form ambncm+n with m,n ≥ 0.

One approach would be to try the following.

S → aSc | bSc | λ

Every time we get an a or a b, we also get a c. This allows the number of a's and b's combined to equal the number of c's. However, there is the possibility for the a's and b's to come out of order, as in the derivation S ⇒ aSc ⇒ abScc ⇒ abaSccc ⇒ abaccc. To fix this problem, do the following:

S → aSc | T | λ

T → bTc | λ

It's still true that every time we get an a or a b, we also get a c. However, now we can't get any b's until we are done getting a's.
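A quick way to check a grammar like this is to list every short string it generates. The Python sketch below does a breadth-first search over leftmost derivations. The function `language_up_to`, the dictionary encoding of a grammar, and the length cutoff used for pruning are all assumptions made for this illustration; the cutoff is a crude guard that is fine for these two grammars but is not a general-purpose algorithm.

```python
from collections import deque


def language_up_to(grammar, start, max_len):
    """Return all terminal strings of length <= max_len derivable from start.

    grammar maps each variable (an uppercase letter) to a list of
    replacement strings; "" stands for the empty string.
    """
    seen = {start}
    queue = deque([start])
    results = set()
    while queue:
        form = queue.popleft()
        # Find the leftmost variable, if there is one.
        pos = next((i for i, ch in enumerate(form) if ch.isupper()), None)
        if pos is None:
            results.add(form)
            continue
        for replacement in grammar[form[pos]]:
            new_form = form[:pos] + replacement + form[pos + 1:]
            terminals = sum(1 for ch in new_form if not ch.isupper())
            # Prune forms that already have too many terminals or have
            # grown too long (a crude guard, adequate for these grammars).
            if terminals <= max_len and len(new_form) <= 2 * max_len + 2:
                if new_form not in seen:
                    seen.add(new_form)
                    queue.append(new_form)
    return results


naive = {"S": ["aSc", "bSc", ""]}
fixed = {"S": ["aSc", "T", ""], "T": ["bTc", ""]}

print("abaccc" in language_up_to(naive, "S", 6))
print("abaccc" in language_up_to(fixed, "S", 6))
print(sorted(language_up_to(fixed, "S", 4), key=lambda w: (len(w), w)))
```

The out-of-order string abaccc shows up for the first grammar but not for the second.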

- All strings of the form akbmcn with n = m or n = k and k,m ≥ 0.

S → AT | U

A → aA | λ

T → bTc | λ

U → aUc | B

B → bB | λ

The key here is to break it into two parts: n = m or n = k. The S → AT production handles the n = m case by generating strings that start with some number of a's followed by an equal number of b's and c's. Then the S → U production handles the n = k case by using U → aUc to balance the a's and c's and using U → B to allow us to insert the b's once we are done with the a's and c's.

- All strings where the number of a's and b's are equal.

S → SS | aSb | bSa | λ

The productions S → aSb and S → bSa guarantee that whenever we get an a, we get a b elsewhere in the string and vice-versa. However, these productions alone will not allow for a string that starts and ends with the same letter like abba. The production S → SS allows for this by allowing two derivations to essentially happen side-by-side, like in the parse tree below for abba.

A longer string like abbaabba could be generated by applying the S → SS production multiple times.
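As a sanity check on this grammar, the Python sketch below tests whether a string is derivable by trying each production in turn, and then compares the answer against a direct count of a's and b's for every string of length at most 8. The function name `derivable` and the length limit are choices made for this illustration; a check like this is evidence, not a proof.

```python
from functools import lru_cache
from itertools import product


@lru_cache(maxsize=None)
def derivable(s):
    """Can S -> SS | aSb | bSa | λ derive the string s?"""
    if s == "":
        return True                                   # S -> λ
    if len(s) >= 2 and {s[0], s[-1]} == {"a", "b"}:
        if derivable(s[1:-1]):                        # S -> aSb or S -> bSa
            return True
    # S -> SS: try every split into two non-empty pieces.
    return any(derivable(s[:i]) and derivable(s[i:]) for i in range(1, len(s)))


# Compare against a direct count on every string of length at most 8.
mismatches = [
    w
    for n in range(9)
    for w in map("".join, product("ab", repeat=n))
    if derivable(w) != (w.count("a") == w.count("b"))
]
print(mismatches)        # an empty list means the two tests agree
```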

A few practical grammar examples

One very practical application of grammars is describing real-world languages, such as programming languages. Most programming languages are defined by a grammar. There are automated tools available that read the grammar and generate C or Java code that reads strings and parses them to determine if they are in the language, as well as breaking them into their component parts. This greatly simplifies the process of creating a new programming language. Here are a few more examples.

Here is a grammar for some simple arithmetic expressions.

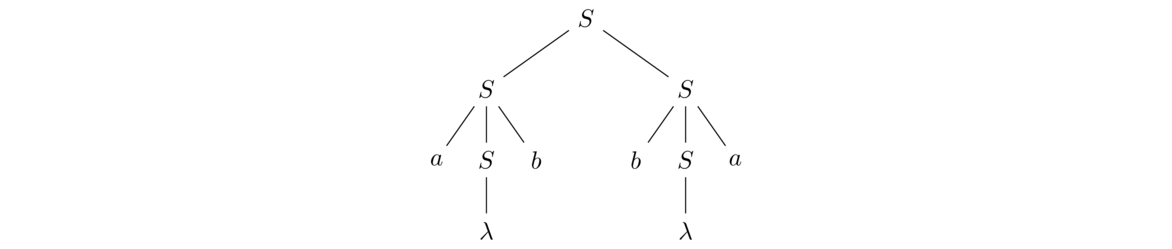

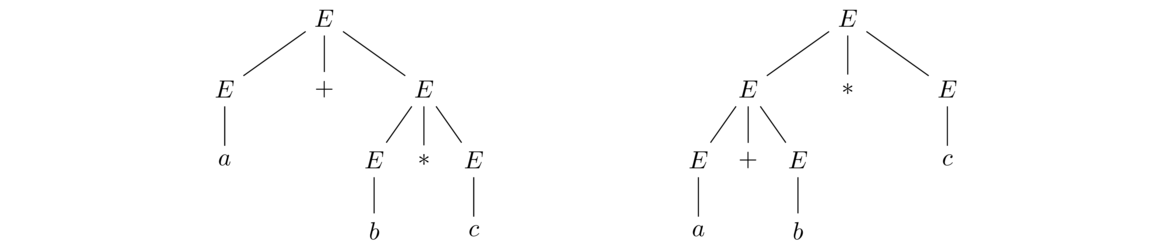

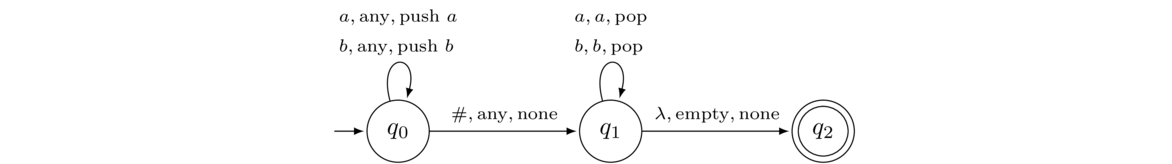

E → E+E | E*E | a | b | c

This grammar generates expressions like a+b+c or a*b+b*a. It's not hard to expand this into a grammar for all common arithmetic expressions. However, one problem with this grammar is that it is ambiguous. For example, the string a*b+c has two possible parse trees, as shown below.

The two parse trees correspond to interpreting the expression as (a*b)+c or a*(b+c). This type of ambiguity is not desirable in a programming language. We would like there to be one and only one way to derive a particular string. It wouldn't be good if we entered 2+3*4 into a programming language and could get either 20 or 14, depending on the compiler's mood. There are ways to patch up this grammar to remove the ambiguity. Here is one approach:

E → E+M | M

M → M*B | B

B → a | b | c

The idea here is we have separated addition and multiplication into different rules. There is a lot more to creating grammars for expressions like this. If you're interested, see any text on programming language theory.
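To make the difference between the two grammars concrete, the Python sketch below counts how many parse trees a string has under each one. The function names are made up for this illustration, and the counting works by trying every position where the last + or * in a production could sit. It only handles the single-letter operands a, b, and c from the grammars above.

```python
LETTERS = ("a", "b", "c")


def trees_ambiguous(s):
    """Count parse trees of s under E -> E+E | E*E | a | b | c."""
    if s in LETTERS:
        return 1
    return sum(
        trees_ambiguous(s[:i]) * trees_ambiguous(s[i + 1:])
        for i, ch in enumerate(s)
        if ch in "+*"
    )


def trees_B(s):
    """Count parse trees of s from B in B -> a | b | c."""
    return 1 if s in LETTERS else 0


def trees_M(s):
    """Count parse trees of s from M in M -> M*B | B."""
    total = trees_B(s)
    for i, ch in enumerate(s):
        if ch == "*":
            total += trees_M(s[:i]) * trees_B(s[i + 1:])
    return total


def trees_E(s):
    """Count parse trees of s from E in E -> E+M | M."""
    total = trees_M(s)
    for i, ch in enumerate(s):
        if ch == "+":
            total += trees_E(s[:i]) * trees_M(s[i + 1:])
    return total


for w in ["a*b+c", "a+b+c", "a*b+b*a"]:
    print(w, trees_ambiguous(w), trees_E(w))
```

A count greater than 1 in the first column means the string is ambiguous under the original grammar, while the patched grammar gives each of these strings exactly one tree.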

One interesting note about ambiguity is that there are some languages for which any grammar for them will have to be ambiguous, with no way to remove the ambiguity. The standard example of this is the language of all strings akbmcn with k = m or m = n, where k,m,n ≥ 0.

Here is another practical grammar. It generates all valid real numbers in decimal form.

S → -N | N

N → D.D | .D | D

D → ED | E

E → 0 | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9

In constructing this, the idea is to break things into their component parts. The production for S has two options to allow for negatives or positives. The next production covers the different ways a decimal point can be involved. The last two productions are used to create an arbitrary string of digits.
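This language happens to be regular, so the same breakdown can be written as a regular expression. The Python sketch below is one possible translation; the pattern and the helper name `is_decimal` are written for this illustration. Like the grammar, it rejects strings such as 5. that end in a decimal point.

```python
import re

# -?      optional minus sign          (S -> -N | N)
# [0-9]+  one or more digits           (D -> ED | E)
# the three alternatives mirror        (N -> D.D | .D | D)
DECIMAL = re.compile(r"-?(?:[0-9]+\.[0-9]+|\.[0-9]+|[0-9]+)")


def is_decimal(s):
    """Return True if s fits the decimal-number grammar."""
    return DECIMAL.fullmatch(s) is not None


for w in ["3.14", "-.5", "42", "5.", "--1", ""]:
    print(repr(w), is_decimal(w))
```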

Below is a grammar for a very simple programming language. For clarity, the grammar's variable names are multiple letters long. To tell where a variable name starts and ends, we use ⟨ and ⟩.

⟨ Expr ⟩ → ⟨ Loop ⟩ | ⟨ Print ⟩

⟨ Loop ⟩ → for ⟨ Var ⟩ = ⟨ Int ⟩ to ⟨ Int ⟩ ⟨ Block ⟩

⟨ Var ⟩ → a | b | c | d | e

⟨ Int ⟩ → ⟨ Digit ⟩ ⟨ Int ⟩ | ⟨ Digit ⟩

⟨ Digit ⟩ → 0 | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9

⟨ Block ⟩ → { ⟨ ExprList ⟩ }

⟨ ExprList ⟩ → ⟨ ExprList ⟩ ⟨ Expr ⟩ | λ

⟨ Print ⟩ → print "⟨ String ⟩ "

⟨ String ⟩ → ⟨ String ⟩ ⟨ Symbol ⟩ | λ

⟨ Symbol ⟩ → a | b | c | d | e | 0 | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9

This grammar produces a programming language that allows simple for loops and print statements. Here is an example string in this language (with whitespace added for clarity):

for a = 1 to 5 {

for b = 2 to 20 {

print "123"

}

}

Grammars and Regular Languages

Converting NFAs into context-free grammars

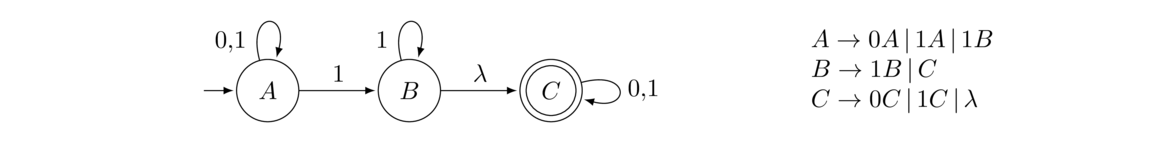

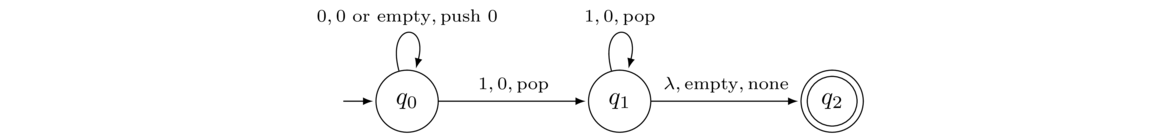

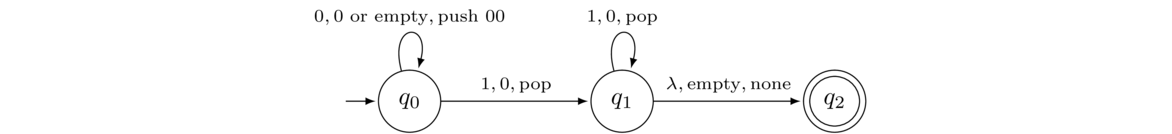

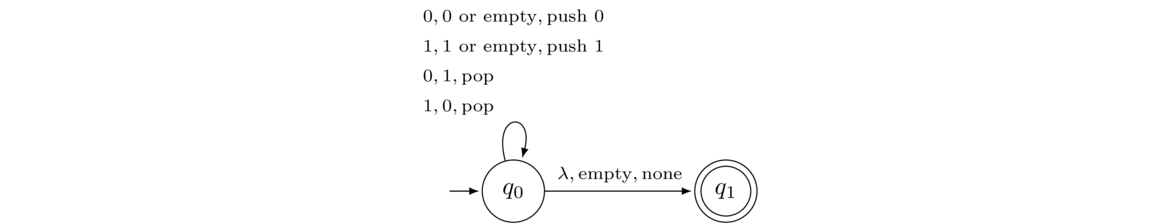

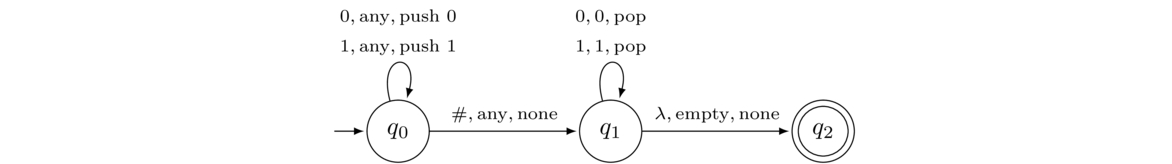

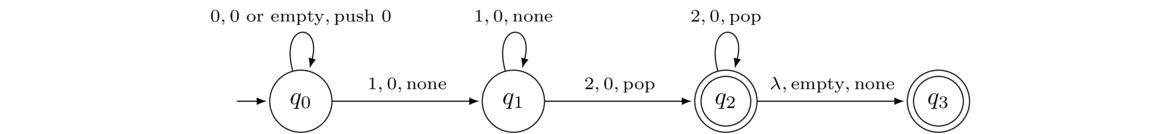

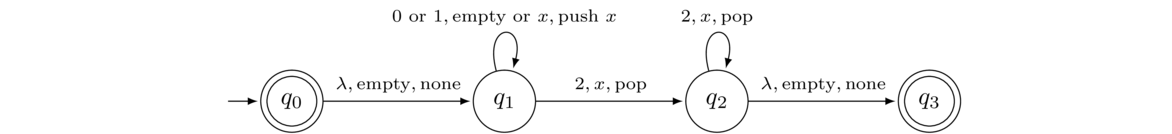

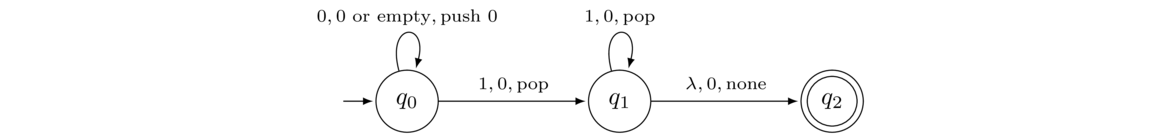

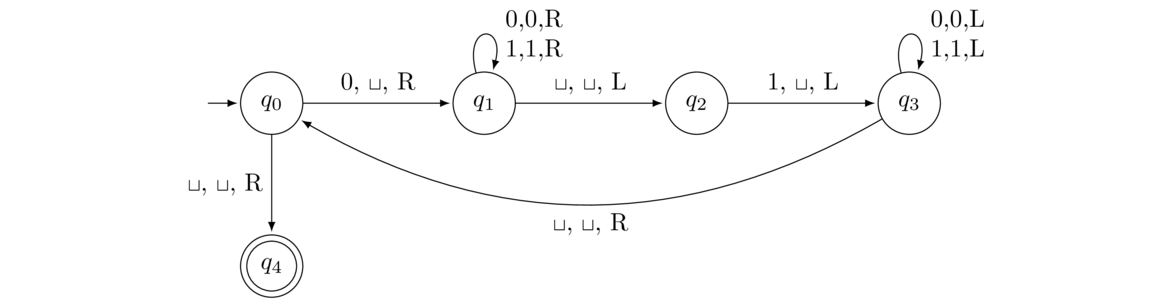

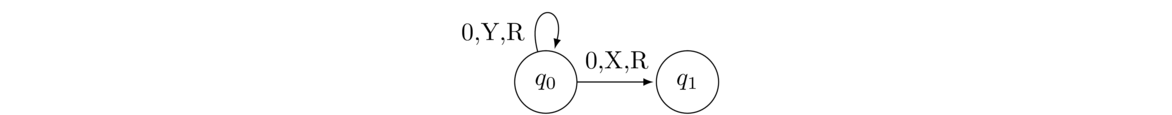

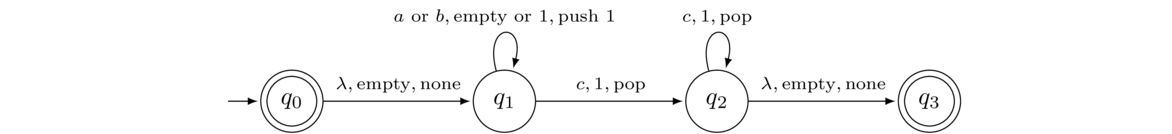

Many of the example grammars we saw were for regular languages. It turns out to be possible to create a context-free grammar for any regular language. The procedure is a straightforward conversion of an NFA to a context-free grammar. On the left below is an NFA, and on the right is a grammar that generates the same language as the one accepted by the NFA.

Each state becomes a variable in the grammar. The start state becomes the start variable. A transition from state A to state B on the symbol 1 becomes the production A → 1B. A λ transition from B to C becomes the production B → C. Any final state gets a λ production.

Tracing through a derivation in the grammar exactly corresponds to moving through the NFA. For instance, the derivation A ⇒ 0A ⇒ 01B ⇒ 01C ⇒ 01 corresponds to moving from A to A to B to C in the NFA.
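The conversion rules are mechanical enough to write as a short Python function. In the sketch below, the function name `nfa_to_grammar` and the triple encoding of transitions are assumptions for this illustration. The example NFA is a hypothetical one chosen only to be consistent with the derivation A ⇒ 0A ⇒ 01B ⇒ 01C ⇒ 01 above; it is not necessarily the NFA from the figure.

```python
def nfa_to_grammar(transitions, final_states):
    """Turn NFA transitions into productions.

    transitions is a list of (state, symbol, next_state) triples, with ""
    as the symbol for a λ transition. The result maps each variable to a
    list of right-hand sides, where "" stands for λ.
    """
    grammar = {}
    for state, symbol, next_state in transitions:
        grammar.setdefault(state, []).append(symbol + next_state)
    for state in final_states:
        grammar.setdefault(state, []).append("")
    return grammar


# Hypothetical NFA: A loops on 0, goes to B on 1, B has a λ move to C, C is final.
transitions = [("A", "0", "A"), ("A", "1", "B"), ("B", "", "C")]
grammar = nfa_to_grammar(transitions, ["C"])

for variable, options in grammar.items():
    print(variable, "->", " | ".join(option or "λ" for option in options))
```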

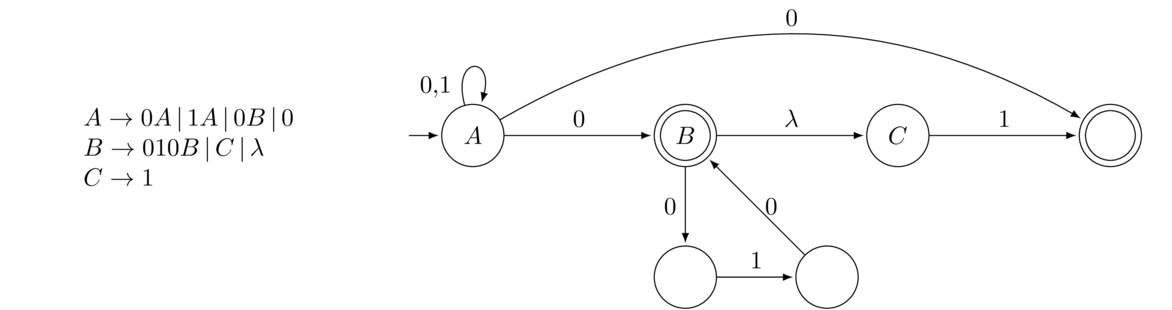

Converting certain context-free grammars into NFAs

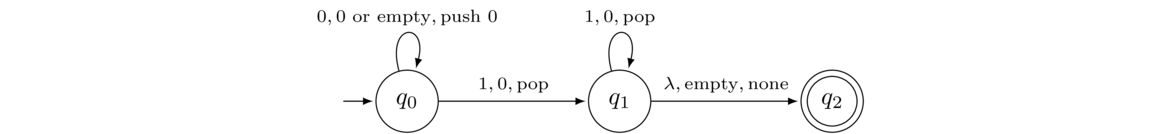

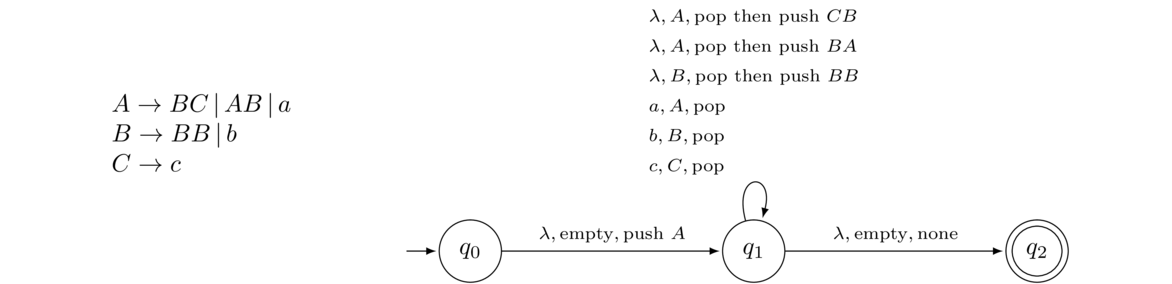

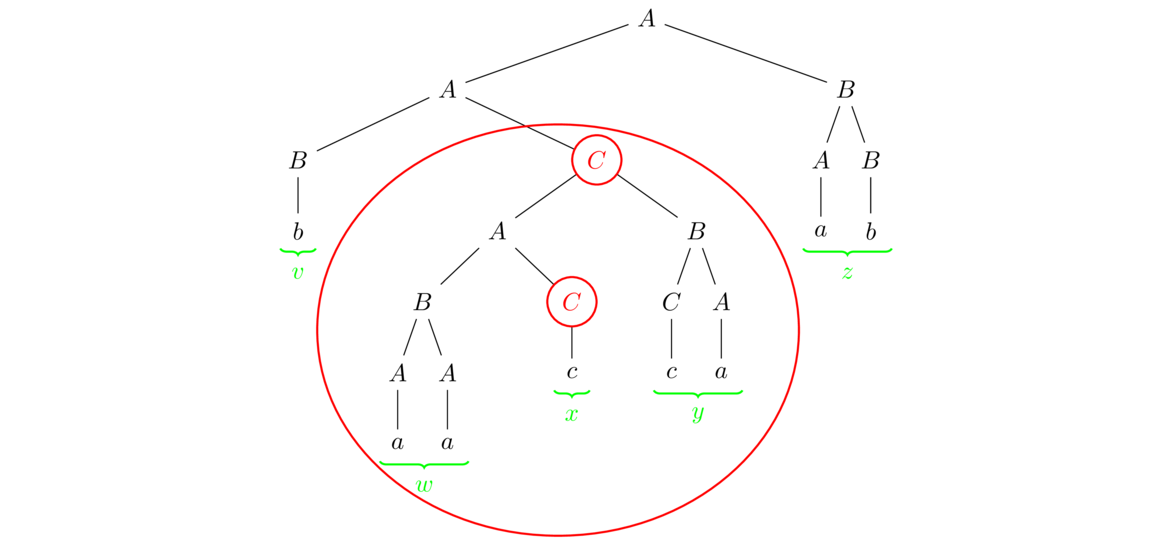

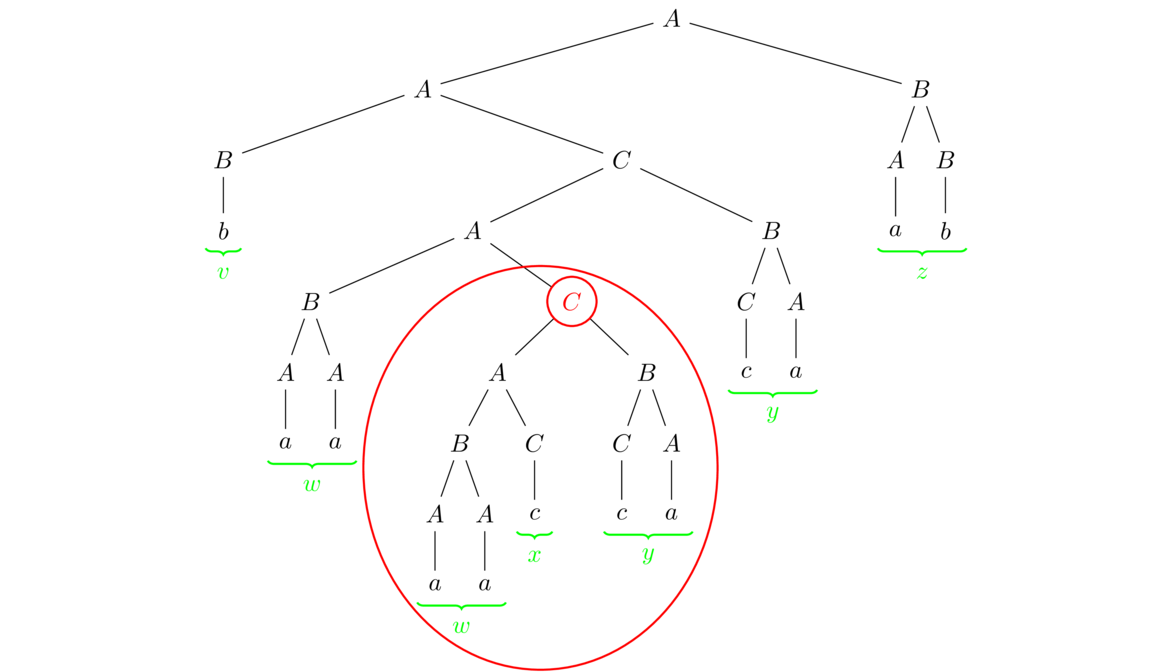

We have seen that some context-free grammars generate languages that are not regular. The standard example is S → 0S1 | λ, which generates the language 0n1n. So we can't convert every context-free grammar to an NFA. But we can convert certain grammars that are known as right-linear grammars.