An Intuitive Guide to Numerical Methods

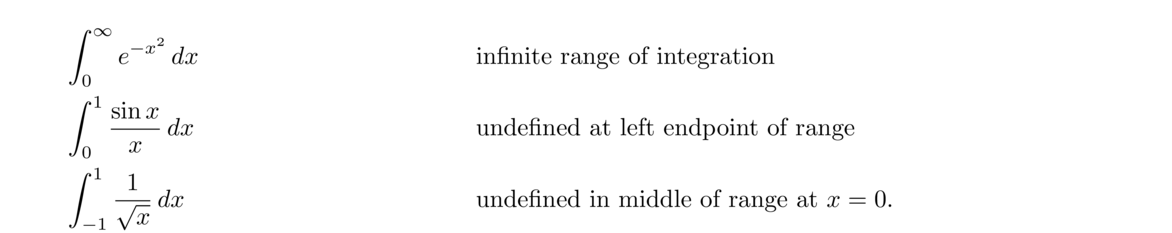

© 2019 Brian Heinold Here is a pdf version of the book.

One thing that is missing here is linear algebra. Most of my students hadn't yet taken linear algebra, so it wasn't something I covered.

I have included Python code for many of the methods. I find that sometimes it is easier for me to understand how a numerical method works by looking at a program. Python is easy to read, being almost like pseudocode (with the added benefit that it actually runs). However, everything has been coded for clarity, not for efficiency or robustness. In real-life there are lots of edge cases that need to be checked, but checking them clutters the code, and that would defeat the purpose of using the code to illustrate the method. In short, do not use the code in this book for anything important.

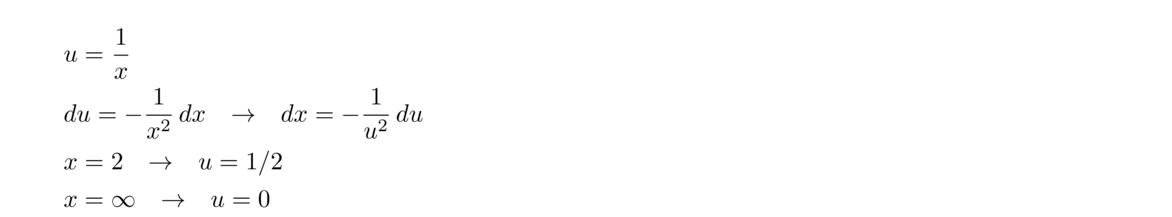

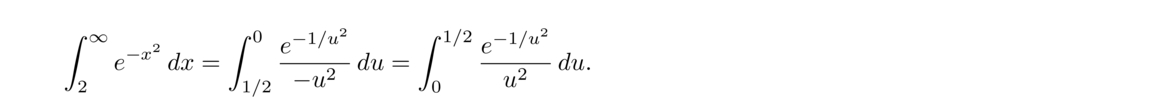

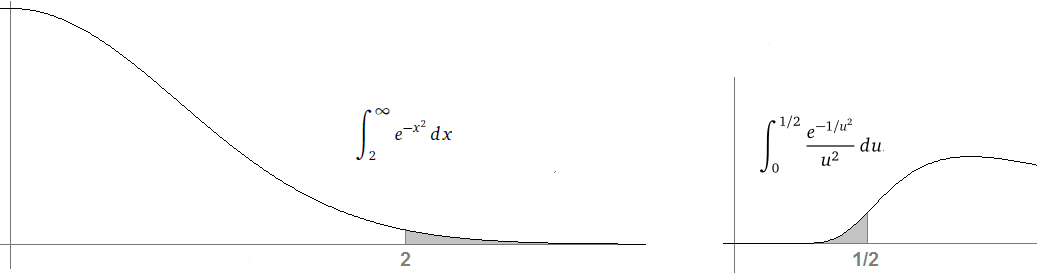

There are a few hundred exercises, mostly of my own creation. They are all grouped together in Chapter 7.

If you see anything wrong (including typos), please send me a note at heinold@msmary.edu.

Equations like 3x+4 = 7 or x2–2x+9 = 0 can be solved using high school algebra. Equations like 2x3+4x2+3x+1 = 0 or x4+x+1 = 0 can be solved by some more complicated algebra.

But the equation x5–x+1 = 0 cannot be solved algebraically. There are no algebraic techniques that will give us an answer in terms of anything familiar. If we need a solution to an equation like this, we use a numerical method, a technique that will give us an approximate solution.

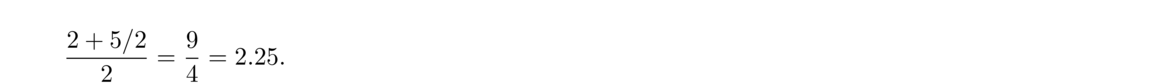

Here is a simple example of a numerical method that estimates √5: Start with a guess for √5, say x = 2 (since √4 = 2 and 5 is pretty close to 4). Then compute

This is what a numerical method is—a process that produces approximate solutions to some problem. The process is often repeated like this, where approximations are plugged into the method to generate newer, better approximations. Often a method can be run over and over until a desired amount of accuracy is obtained.

It is important to study how well the method performs. There are a number of questions to ask: Does it always work? How quickly does it work? How well does it work on a computer?

Now, in case a method to find the square root seems silly to you, consider the following: How would you find the square root of a number without a calculator? Notice that this method allows us to estimate the square root using only the basic operations of addition and division. So you could do it by hand if you wanted to. In fact it's called the Babylonian method, or Hero's method, as it was used in Babylon and ancient Greece. It used to be taught in school until calculators came along.**Calculators themselves use numerical methods to find square roots. Many calculators use efficient numerical methods to compute ex and ln x and use the following identity to obtain √x from ex and ln x:

Most numerical methods are implemented on computers and calculators, so we need to understand a little about how computers and calculators do their computations.

Computer hardware cannot not represent most real numbers exactly. For instance, π has an infinite, non-repeating decimal expansion that would require an infinite amount of space to store. Most computers use something called floating-point arithmetic, specifically the IEEE 754 standard. We'll just give a brief overview of how things work here, leaving out a lot of details.

The most common way to store real numbers uses 8 bytes (64 bits). It helps to think of the number as written in scientific notation. For instance, write 25.394 as 2.5394 × 102. In this expression, 2.5394 is called the mantissa. The 2 in 102 is the exponent. Note that instead of a base 10 exponent, computers, which work in binary, use a base 2 exponent. For instance, .3 is written as 1.2 × 2–2.

Of the 64 bits, 53 are used to store the mantissa, 11 are for the exponent, and 1 bit is used for the sign (+ or –). These numbers actually add to 65, not 64, as a special trick allows an extra bit of info for the mantissa. The 53 bits for the mantissa allow for about 15 digits of precision. The 11 bits for the exponent allow numbers to range from as small as 10–307 to as large as 10308.

The precision limits mean that we cannot distinguish 123.000000000000007 from 123.000000000000008. However, we would be able to distinguish .000000000000000007 and .000000000000000008 because those numbers would be stored as 7 × 10–18 and 8 × 10–18. The first set of numbers requires more than 15 digits of precision, while the second pair requires very little precision.

Also, doing something like 1000000000 + .00000004 will just result in 1000000000 as the 4 falls beyond the precision limit.

When reading stuff about numerical methods, you will often come across the term machine epsilon. It is defined as the difference between 1 and the closest floating-point number larger than 1. In the 64-bit system described above, that value is about 2.22 × 10–16. Machine epsilon is a good estimate of the limit of accuracy of floating-point arithmetic. If the difference between two real numbers is less than machine epsilon, it is possible that the computer would not be able to tell them apart.

One interesting thing is that many numbers, like .1 or .2, cannot be represented exactly using IEEE 754. The reason for this is that computers store numbers in binary (base 2), whereas our number system is decimal (base 10), and fractions behave differently in the two bases. For instance, converting .2 to binary yields .00110011001100…, an endlessly repeating expansion. Since there are only 53 bits available for the mantissa, any repeating representation has to be cut off somewhere.**It's interesting to note that the only decimal fractions that have terminating binary expansions are those whose denominators are powers of 2. In base 10, the only terminating expansions come from denominators that are of the form 2j5k. This is because the only proper divisors of 10 are 2 and 5. This is called roundoff error. If you convert the cut-off representation back to decimal, you actually get .19999999999999998.

If you write a lot of computer programs, you may have run into this problem when printing numbers. For example, consider the following simple Python program:

We would expect it to print out .3, but in fact, because of roundoff errors in both .2 and .1, the result is 0.30000000000000004.

Actually, if we want to see exactly how a number such as .1 is represented in floating-point, we can do the following:

The result is 0.1000000000000000055511151231257827021181583404541015625000000000000000.

This number is the closest IEEE 754 floating-point number to .1. Specifically, we can write .1 as .8 × 2–3, and in binary, .8 is the has a repeating binary expansion .110011001100…. That expansion gets cut off after 53 bits and that eventually leads to the strange digits that show up around the 18th decimal place.

One big problem with this is that these small roundoff errors can accumulate, or propagate. For instance, consider the following Python program:

Here is something else that can go wrong: Try computing the following:

The exact answer should be .1, but the program gives 0.11102230246251565, only correct to one decimal place. The problem here is that we are subtracting two numbers that are very close to each other. This causes all the incorrect digits beyond the 15th place in the representation of .1 to essentially take over the calculation. Subtraction of very close numbers can cause serious problems for numerical methods and it is usually necessary to rework the method, often using algebra, to eliminate some subtractions.

This is sometimes called the Golden rule of numerical analysis: don't subtract nearly equal numbers.

The 64-bit storage method above is called IEEE 754 double-precision (this is where the data type double in many programming languages gets its name). There is also 32-bit single-precision and 128-bit quadruple-precision specified in the IEEE 754 standard.

Single-precision is the float data type in many programming languages. It uses 24 bits for the mantissa and 8 for the exponent, giving about 6 digits of precision and values as small as 10–128 to as large as 10127. The advantages of single-precision over double-precision are that it uses half as much memory and computations with single-precision numbers often run a little more quickly. On the other hand, for many applications these considerations are not too important.

Quadruple-precision is implemented by some programming languages.**There is also an 80-bit extended precision method on x86 processors that is accessible via the long double type in C and some other languages. One big difference between it and single- and double-precision is that the latter are usually implemented in hardware, while the former is usually implemented in software. Implementing in software, rather than having specially designed circuitry to do computations, results in a significant slowdown.

For most scientific calculations, like the ones we are interested in a numerical methods course, double-precision will be what we use. Support for it is built directly into hardware and most programming languages. If we need more precision, quadruple-precision and arbitrary precision are available in libraries for most programming languages. For instance, Java's BigDecimal class and Python's Decimal class can represent real numbers to very high precision. The drawback to these is that they are implemented in software and thus are much slower than a native double-precision type. For many numerical methods this slowdown makes the methods too slow to use.

See the article, “What Every Computer Scientist Should Know About Floating-Point Arithmetic” at http://docs.oracle.com/cd/E19957-01/806-3568/ncg_goldberg.html for more details about floating-point arithmetic.

Solving equations is of fundamental importance. Often many problems at their core come down to solving equations. For instance, one of the most useful parts of calculus is maximizing and minimizing things, and this usually comes down to setting the derivative to 0 and solving.

As noted earlier, there are many equations, such as x5–x+1 = 0, which cannot be solved exactly in terms of familiar functions. The next several sections are about how to find approximate solutions to equations.

The bisection method is one of the simpler root-finding methods. If you were to put someone in a room and tell them not to come out until they come up with a root-finding method, the bisection method is probably the one they would come up with.

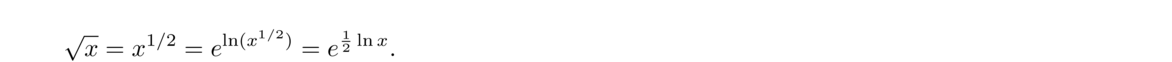

Here is the idea of how it works. Suppose we want to find a root of f(x) = 1–2x–x5. First notice that f(0) = 1, which is above the x-axis and f(1) = –2, which is below the axis. For the function to get from 1 to –2, it must cross the axis somewhere, and that point is the root.**This is common sense, but it also comes from the Intermediate Value Theorem. So we know a root lies somewhere between x = 0 and x = 1. See the figure below.

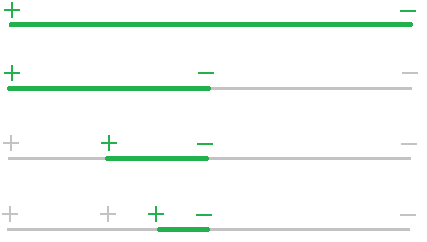

Next we look at the midpoint of 0 and 1, which is x = .5 (i.e., we bisect the interval) and check the value of the function there. It turns out that f(.5) = –0.03125. Since this is negative and f(0) = 1 is positive, we know that the function must cross the axis (and thus have a root) between x = 0 and x = .5. We can then bisect again, by looking at the value of f(.25), which turns out to be around .499. Since this is positive and f(.5) is negative, we know the root must be between x = .25 and x = .5. We can keep up this bisection procedure as long as we like, and we will eventually zero in on the location of the root. Shown below is a graphical representation of this process.

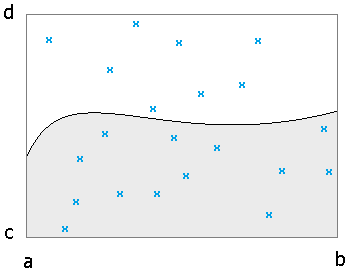

In general, here is how the bisection method works: We start with values a and b such that f(a) and f(b) have opposite signs. If f is continuous, then we are guaranteed that a root lies between a and b. We say that a and b bracket a root.

We then compute f(m), where m = (a+b)/2. If f(m) and f(b) are of opposite signs, we set a = m; if f(m) and f(a) are of opposite signs, we set b = m. In the unlikely event that f(m) = 0, we stop because we have found a root. We then repeat the process again and again until a desired accuracy is reached. Here is how we might code this in Python:

For simplicity, we have ignored the possibility that f(m) = 0. Notice also that an easy way to check if f(a) and f(m) are of opposite signs is to check if f(a)f(m) < 0. Here is how we might call this function to do 20 bisection steps to estimate a root of x2–2:

In Python, lambda creates an anonymous function. It's basically a quick way to create a function that we can pass to our bisection function.

Let a0 and b0 denote the starting values of a and b. At each step we cut the previous interval exactly in half, so after n steps the interval has length (b0–a0)/2n. At any step, our best estimate for the root is the midpoint of the interval, which is no more than half the length of the interval away from the root, meaning that after n steps, the error is no more than (b0–a0)/2n+1.

For example, if a0 = 0, b0 = 1, and we perform n = 10 iterations, we would be within 1/211 ≈ .00049 of the actual root.

If we have a certain error tolerance ε that we want to achieve, we can solve (b0–a0)/2n+1 = ε for n to get

In the world of numerical methods for solving equations, the bisection method is very reliable, but very slow. We essentially get about one new correct decimal place for every three iterations (since log2(10) ≈ 3). Some of the methods we will consider below can double and even triple the number of correct digits at every step. Some can do even better. But this performance comes at the price of reliability. The bisection method, however slow it may be, will usually work.

However, it will miss the root of something like x2 because that function is never negative. It can also be fooled around vertical asymptotes, where a function may change from positive to negative but have no root, such as with 1/x near x = 0.

The false position method is a lot like the bisection method. The difference is that instead of choosing the midpoint of a and b, we draw a line between (a, f(a)) and (b, f(b)) and see where it crosses the axis. In particular, the crossing point can be found by finding the equation of the line, which is y–f(a) = f(b)–f(a)b–a(x–a), and setting y = 0 to get its intersection with the x axis. Solving and simplifying, we get

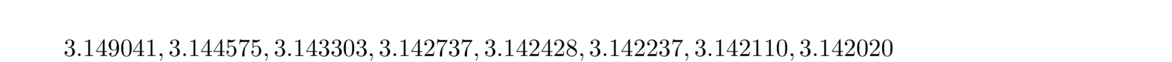

Try the following experiment: Pick any number. Take the cosine of it. Then take the cosine of your result. Then take the cosine of that. Keep doing this for a while. On a TI calculator, you can automate this process by typing in your number, pressing enter, then entering cos(Ans) and repeatedly pressing enter. Here's a Python program to do this along with its output:

We can see that the numbers seem to be settling down around .739. If we were to carry this out for a few dozen more iterations, we would eventually get stuck at (to 15 decimal places) 0.7390851332151607. And we will eventually settle in on this number no matter what our initial value of x is.

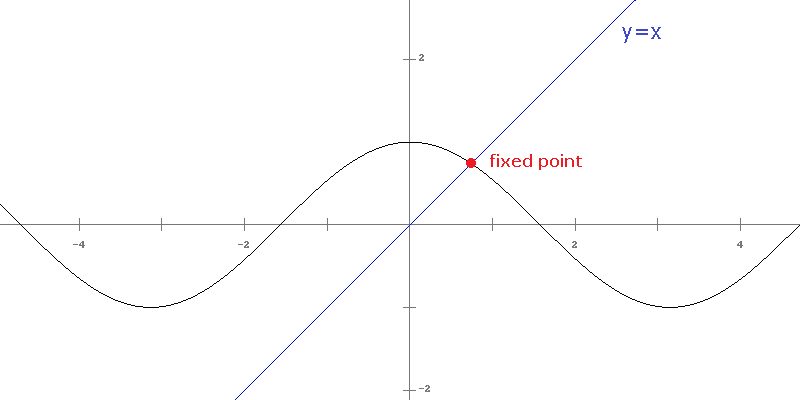

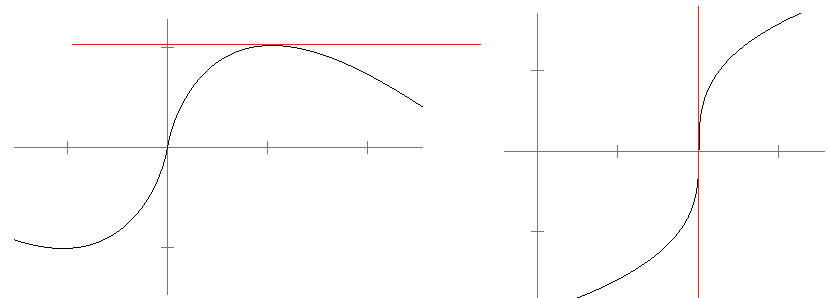

What is special about this value, .739…, is that it is a fixed point of cosine. It is a value which is unchanged by the cosine function; i.e., cos(.739…) = .739…. Formally, a fixed point of a function g(x) is a value p such that g(p) = p. Graphically, it's a point where the graph crosses the line y = x. See the figure below:

Some fixed points have the property that they attract nearby points towards them in the sort of iteration we did above. That is, if we start with some initial value x0, and compute g(x0), g(g(x0)), g(g(g(x0))), etc., those values will approach the fixed point. The point .739… is an attracting fixed point of cos x.

Other fixed points have the opposite property. They repel nearby points, so that it is very hard to converge to a repelling fixed point. For instance, g(x) = 2 cos x has a repelling fixed point at p = 1.02985…. If we start with a value, say x = 1, and iterate, computing g(1), g(g(1)), etc., we get 1.08, .94, 1.17, .76, 1.44, numbers which are drifting pretty quickly away from the fixed point.

Here is a way to picture fixed points: Imagine a pendulum rocking back and forth. This system has two fixed points. There is a fixed point where the pendulum is hanging straight down. This is an attracting state as the pendulum naturally wants to come to rest in that position. If you give it a small push from that point, it will soon return back to that point. There is also a repelling fixed point when the pendulum is pointing straight up. At that point you can theoretically balance the pendulum, if you could position it just right, and that fixed point is repelling because just the slighted push will move the pendulum from that point. Phenomena in physics, weather, economics, and many other fields have natural interpretations in terms of fixed points.

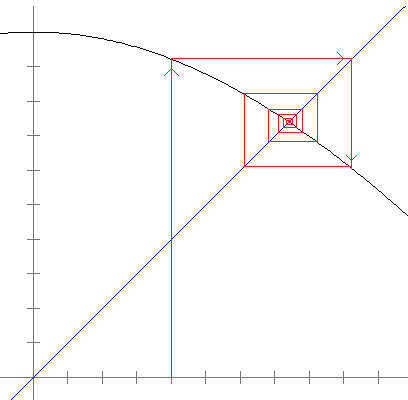

We can visualize the fact that the fixed point of cos x is attracting by using something called a cobweb diagram. Here is one:

In the diagram above, the function shown is g(x) = cos(x), and we start with x0 = .4. We then plug that into the function to get g(.4) = .9210…. This is represented in the cobweb diagram by the line that goes from the x-axis to the cosine graph. We then compute g(g(.4)) (which is g(.9210…)). What we are doing here is essentially taking the previous y-value, turning it into an x-value, and plugging that into the function. Turning the y-value into an x-value is represented graphically by the horizontal line that goes from our previous location on the graph to the line y = x. Then plugging in that value into the function is represented by the vertical line that goes down to the graph of the function.

We then repeat the process by going over to y = x, then to y = cos(x), then over to y = x, then to y = cos(x), etc. We see that the iteration is eventually sucked into the fixed point.

Here is an example of a repelling fixed point, iterating g(x) = 1–2x–x5.

The key to whether a fixed point p is attracting or repelling turns out to be the value of g′(p), the slope of the graph at the fixed point. If |g′(p)| < 1 then the fixed point is attracting. If |g′(p)| > 1, the fixed point is repelling.**This result is sometimes called the Banach fixed point theorem and can be formally proved using the Mean Value Theorem. Graphically, if the function is relatively flat near the fixed point, the lines in the cobweb diagram will be drawn in towards the fixed point, whereas if the function is steeply sloped, the lines will be pushed away. If |g′(p)| = 1, a more sophisticated analysis is needed to determine the point's behavior, as it can be (slowly) attracting, (slowly) repelling, possibly both, or divergent.

The value of |g′(p)| also determines the speed at which the iterates converge towards the fixed point. The closer |g′(p)| is to 0, the faster the iterates will converge. A very flat graph, for instance, will draw the cobweb diagram quickly into the fixed point.

One caveat here is that an attracting fixed point is only guaranteed to attract nearby points to it, and the definition of “nearby” will vary from function to function. In the case of cosine, “nearby” includes all of ℝ, whereas with other functions “nearby” might mean only on a very small interval.

The process described above, where we compute g(x0), g(g(x0)), g(g(g(x0))), … is called fixed point iteration. It can be used to find an (attracting) fixed point of f. We can use this to find roots of equations if we rewrite the equation as a fixed point problem.

For instance, suppose we want to solve 1–2x–x5 = 0. We can rewrite this as a fixed point problem by moving the 2x over and dividing by 2 to get (1–x5)/2 = x. It is now of the form g(x) = x for g(x) = (1–x5)/2. We can then iterate this just like we iterated cos(x) earlier.

We start by picking a value, preferably one close to a root of 1–2x–x5. Looking at a graph of the function, x0 = .5 seems like a good choice. We then iterate. Here is result of the first 20 iterations:

We see that the iterates pretty quickly settle down to about .486389035934543.

On a TI calculator, you can get the above (possibly to less decimal places) by entering in .5, pressing enter, then entering in There are a couple of things to note here about the results. First, the value of |g′(p)| is about .14, which is pretty small, so the convergence is relatively fast. We add about one new correct digit with every iteration. We can see in the figure below that the graph is pretty flat around the fixed point. If you try drawing a cobweb diagram, you will see that the iteration gets pulled into the fixed point very quickly.

Second, the starting value matters. If our starting value is larger than about 1.25 or smaller than -1.25, we are in the steeply sloped portion of the graph, and the iterates will be pushed off to infinity. We are essentially outside the realm of influence of the fixed point.

Third, the way we rewrote 1–2x–x5 = 0 was not the only way to do so. For instance, we could instead move the x5 term over and take the fifth root of both sides to get 5√1–2x = x. However, this would be a bad choice, as the derivative of this function at the fixed point is about -7, which makes the point repelling. A different option would be to write 1 = 2x+x5, factor out an x and solve to get 1x4+2 = x. For this iteration, the derivative at the fixed point is about –.11, so this is a good choice.

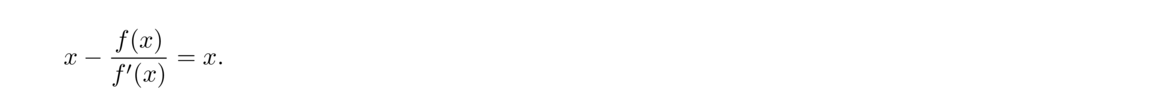

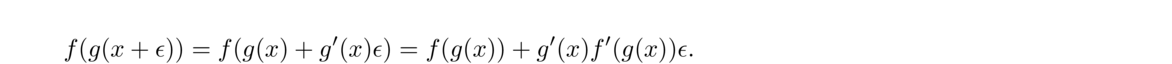

Here is a clever way to turn f(x) = 0 into a fixed point problem: divide both sides of the equation by –f′(x) and then add x to both sides. This gives

Iterating x–f(x)f′(x) to find a root of f is known as Newton's method.**It is also called the Newton-Raphson method. As an example, suppose we want a root of f(x) = 1–2x–x5. To use Newton's method, we first compute f′(x) = –2–4x5. So we will be iterating

After just a few iterations, Newton's method has already found as many digits as possible in double-precision floating-point arithmetic.

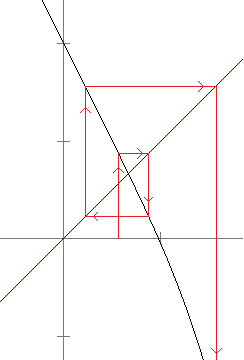

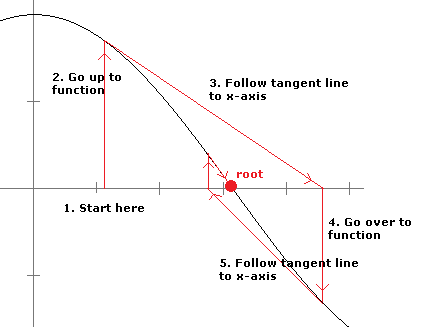

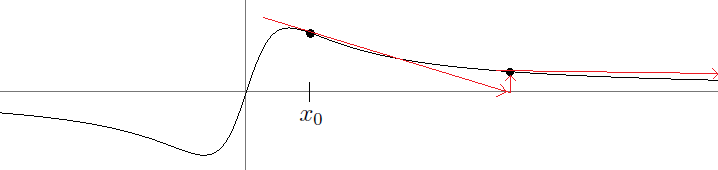

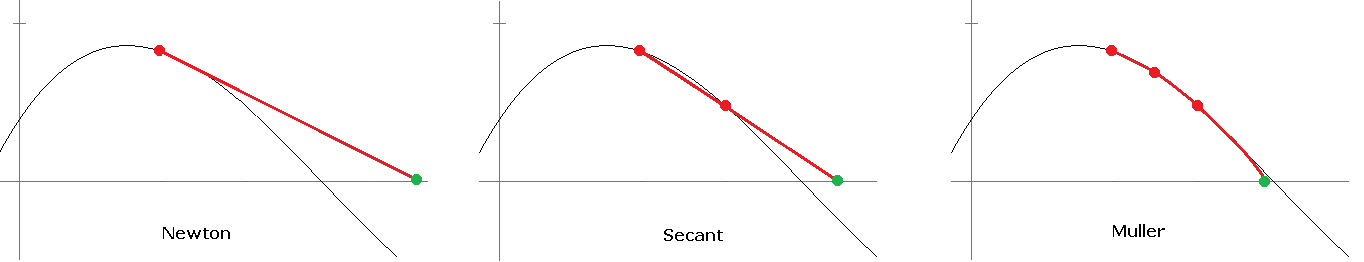

To get this on a TI calculator, put in .5, press enter, then put in The figure below shows a nice way to picture how Newton's method works. The main idea is that if x0 is sufficiently close to a root of f, then the tangent line to the graph at (x0, f(x0)) will cross the x-axis at a point closer to the root than x0.

What we do is start with an initial guess for the root and draw a line up to the graph of the function. Then we follow the tangent line down to the x-axis. From there, we draw a line up to the graph of the function and another tangent line down to the x-axis. We keep repeating this process until we (hopefully) get close to a root. It's a kind of slanted and translated version of a cobweb diagram.

This geometric process helps motivate the formula we derived for Newton's method. If x0 is our initial guess, then (x0, f(x0)) is the point where the vertical line meets the graph. We then draw the tangent line to the graph. The equation of this line is y–f(x0) = f′(x0)(x–x0). This line crosses the x-axis when y = 0, so plugging y = 0 and solving for x, we get that the new x value is x0–f(x0)f′(x0).

There are a few things that can go wrong with Newton's method. One thing is that f′(x) might be 0 at a point that is being evaluated. In that case the tangent line will be horizontal and will never meet the x-axis (note that the denominator in the formula will be 0). See below on the left. Another possibility can be seen with f(x) = 3√x–1. Its derivative is infinite at its root, x = 1, which has the effect of pushing tangent lines away from the root. See below on the right.

Also, just like with fixed-point iteration, if we choose a starting value that is too far from the root, the slopes of the function might push us away from the root, never to come back, like in the figure below:

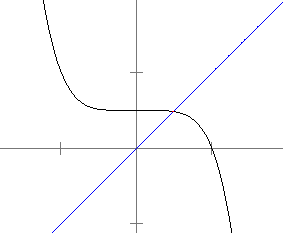

There are stranger things that can happen. Consider using Newton's method to find a root of f(x) = 3√(3–4x)/x.

After a fair amount of simplification, the formula x–f(x)f′(x) for this function becomes 4x(1–x). Now, a numerical method is not really needed here because it is easy to see that the function has a root at x = 3/4 (and this is its only root). But it's interesting to see how the method behaves. One thing to note is that the derivative goes vertical at x = 3/4, so we would expect Newton's method to have some trouble. But the trouble it has is surprising.

Here are the first 36 values we get if we use a starting value of .4. There is no hint of this settling down on a value, and indeed it won't ever settle down on a value.

But things are even stranger. Here are the first 10 iterations for two very close starting values, .400 and .401:

We see these values very quickly diverge from each other. This is called sensitive dependence on initial conditions. Even if our starting values were vanishingly close, say only 10–20 apart, it would only take several dozen iterations for them to start to diverge. This is a hallmark of chaos. Basically, Newton's method is hopeless here.

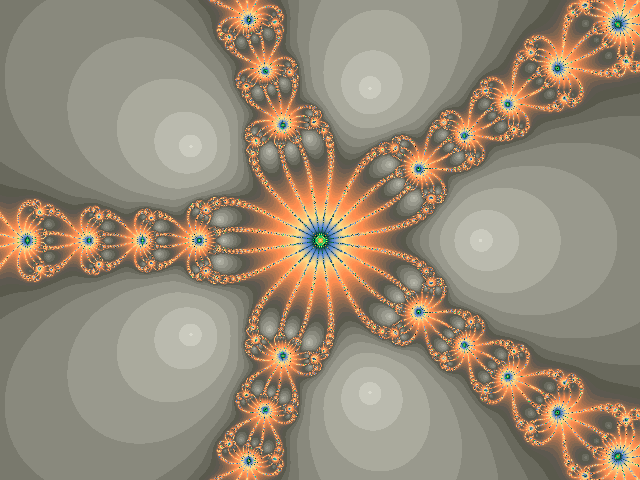

Finally, to show a little more of the interesting behavior of Newton's method, the figure below looks at iterating Newton's method on x5–1 with starting values that could be real or complex numbers. Each point in the picture corresponds to a different starting value, and the color of the point is based on the number of iterations it takes until the iterates get within 10–5 of each other.

The five light areas correspond to the five roots of x5–1 (there is one real root at x = 1 and four complex roots). Starting values close to those roots get sucked into those roots pretty quickly. However, points in between the two roots bounce back and forth rather chaotically before finally getting trapped by one of the roots. The end result is an extremely intricate picture. It is actually a fractal in that one could continuously zoom in on that figure and see more and more intricate detail that retains the same chaining structure as in the zoomed-out figure.

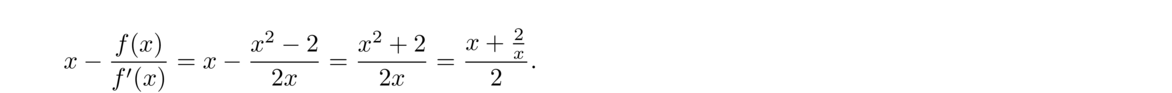

We can use Newton's method to derive a method for estimating square roots. The trick is to find an appropriate function to apply Newton's method to. The simplest possibility is f(x) = x2–2. Its roots are ±√2. We have f′(x) = 2x and so the Newton's method formula is

The numerical methods we have examined thus far try to approximate a root r of a function f by computing approximations x1, x2, …, xn, which are hopefully getting closer and closer to r. We can measure the error at step i with |xi–r|, which is how far apart the approximation is from the root.

Suppose we are using FPI to estimate a solution of x3+x–1 = 0. To four decimal places, a solution turns out to be x = .6823. One way to rewrite the problem as a fixed-point problem is to write x(x2+1)–1 = 0 and solve for x to get x = 1/(x2+1). Let's start with x0 = 1 and iterate. Below are the iterates x1 through x10 along with their corresponding errors (how far off they are from .6823):

The third column is the ratio of successive errors. If we look carefully at the errors, it looks like each error is roughly 2/3 of the previous error, and the ratio ei+1/ei bears that out. In fact, after enough iterations it ends up being true that ei+1 ≈ .635 ei. When the errors go down by a nearly constant factor at each step like this, we have what is called linear convergence.

For some methods, like Newton's method, the error can go down at a faster than constant rate. For instance, if we apply Newton's method to the same problem as above, starting with x0 = 1, we get the following sequence of errors:

The errors are going down at a faster than linear rate. It turns out that they are going down roughly according to the rule ei+1 ≈ .854 e2i. This is an example of quadratic convergence.

Formally, if limi → ∞ei+1ei = s and 0 < s < 1, the method is said to converge linearly.**If s = 0, the convergence will be faster than linear. If limi → ∞ei+1e2i = s and 0 < s < 1, the method is said to converge quadratically. Cubic, quartic, etc. convergence are defined similarly. The exponent in the denominator is the key quantity of interest. It is called the order of convergence. (The order of linear convergence is 1, the order of quadratic convergence is 2, etc.)

An order of convergence greater than 1 is referred to as superlinear.

Let's examine the difference between linear and quadratic convergence: Suppose we have linear convergence of the form ei+1 ≈ .1ei. Each error is about 10% of the previous, so that if for example, e1 = .1, then we have

We add about one new decimal place of accuracy with each iteration. If we had a larger constant, say ei+1 = .5ei, then the errors would go down more slowly, but we would still add about one new decimal place of accuracy every couple of iterations.

On the other hand, suppose we have quadratic convergence of the form ei+1 ≈ e2i. If we start with e1 = .1, then we have

So we actually double the number of correct decimal places of accuracy at each step. So there is a big difference between linear and quadratic convergence. For the linear convergence described above, it would take 1000 iterations to get 1000 decimal places of accuracy, whereas for the quadratic convergence described above, it would take about 10 iterations (since 210 = 1024).

Similarly, cubic convergence roughly triples the number of correct decimal places at each step, quartic quadruples it, etc.

Suppose we are solving f(x) = 0 and that f′ and f″ exist and are continuous. Then the following hold:

In the above, “close to r” formally means that there is some interval around r for which if the initial guess falls in that interval, then the iterates will converge to r. The formal term for this is local convergence. The size of that interval varies with the function.

In summary, if a few assumptions are met, then we know that FPI converges linearly with rate |g′(r)|, bisection converges linearly with rate .5, and Newton's method converges quadratically (at simple roots).

And those assumptions can't entirely be ignored. For instance, f(x) = √x has a root at x = 0, and f″(0) does not exist. For this function, Newton's method turns out to converge very slowly to the root at 0, not quadratically.

There are generalizations of Newton's method that converge even more quickly. For instance, there is Halley's method, which involves iterating

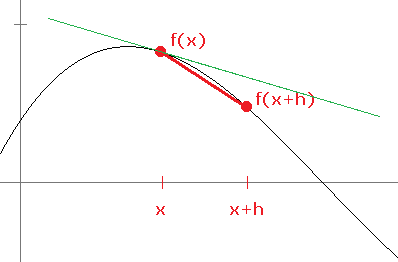

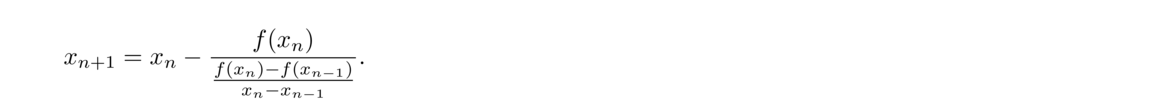

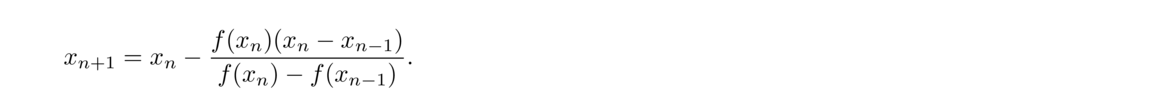

One weakness of Newton's method is that it requires derivatives. This is usually not a problem, but for certain functions, getting and using the derivative might be inconvenient, difficult, or computationally expensive. The secant method uses the same ideas from Newton's method, but it approximates the derivative (slope of the tangent line) with the slope of a secant line.

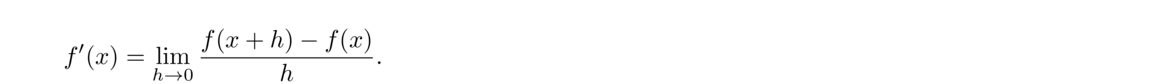

Remember that the derivative of a function f at a point x is the slope of the tangent line at that point. The definition of the derivative, given by f′(x) = limh → 0f(x+h)–f(x)x+h, is gotten by approximating the slope of that tangent line by the slopes of secant lines between x and a nearby point x+h. As h gets smaller, x+h gets closer to x and the secant lines start to look more like tangent lines. In the figure below a secant line is shown in red and the tangent line in green.

The formula we iterate for Newton's method is

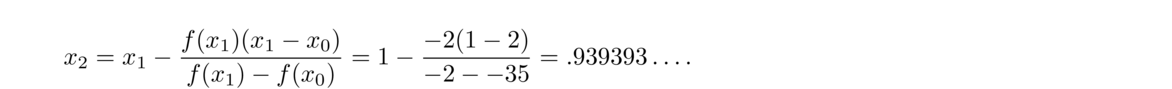

As an example, let's use the secant method to estimate a root of f(x) = 1–2x–x5. Since the formula needs two previous iterations, we will need two starting values. For simplicity, we'll pick x0 = 2 and x1 = 1. We have f(x0) = –35 and f(x1) = –2. We then get

We can see that this converges quickly, but not as quickly as Newton's method. By approximating the derivative, we lose some efficiency. Whereas the order of convergence of Newton's method is usually 2, the order of convergence of the secant method is usually the golden ratio, φ = 1.618….**Note we are only guaranteed converge if we are sufficiently close to the root. We also need the function to be relatively smooth—specifically, f″ must exist and be continuous. Also, the root must be a simple root (i.e. of multiplicity 1). Function are pretty flat near a multiple root, which makes it slow for tangent and secant lines to approach the root.

Here is a simple implementation of the secant method in Python:

Here is how we might call this to estimate a solution to x5–x+1 = 0 correct to within 10–10:

In the function, a and b are used for xn–1 and xn in the formula. The loop runs until either f(b) is 0 or the difference between two successive iterations is less than some (small) tolerance. The default tolerance is 10–10, but the caller can specify a different tolerance. The assignment line sets Our implementation is simple, but it is not very efficient or robust. For one thing, we recompute f(b) (which is f(xn)) at each step, even though we already computed it when we did f(a) (which is f(xn–1) at the previous step. A smarter approach would be to save the value. Also, it is quite possible for us to get caught in an infinite loop, especially if our starting values are poorly chosen. See Numerical Recipes for a faster and safer implementation.

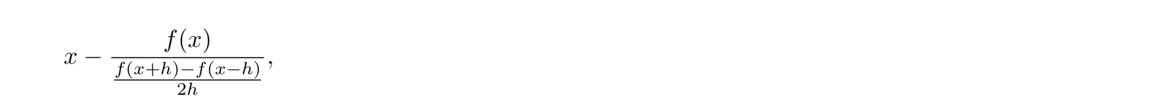

A close relative of Newton's method and the secant method is to iterate the formula below:

Muller's method is a generalization of the secant method. Recall that Newton's method involves following the tangent line down to the x-axis. That point where the line crosses the axis will often be closer to the root than the previous point. The secant method is similar except that it follows a secant line down to the axis. What Muller's method does is it follows a parabola down to the axis. Whereas a secant line approximates the function via two points, a parabola uses three points, which can give a closer approximation.

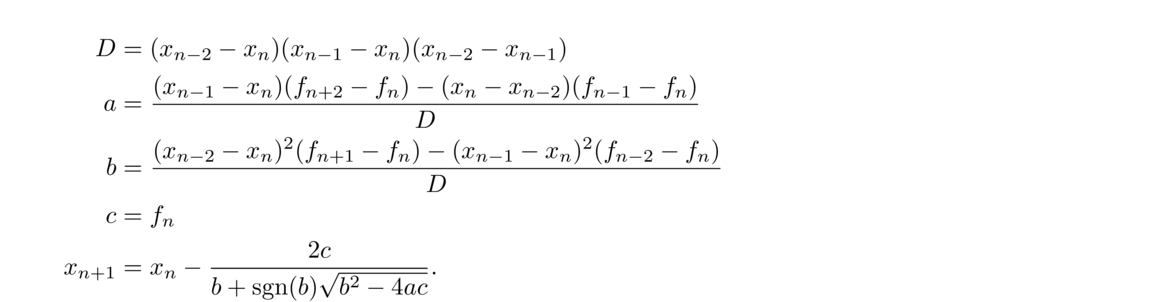

Here is the formula for Muller's (where fi denotes f(xi) for any integer i):

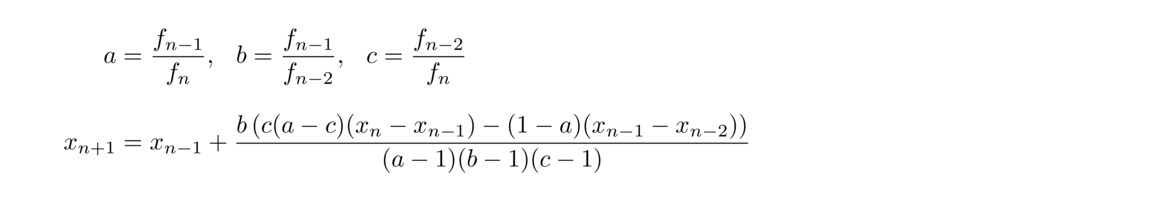

Inverse quadratic interpolation is a relative of Muller's method. Like Muller's, it uses a parabola, but it approximates f–1 instead of f. This is essentially the same as approximating f by the inverse of a quadratic function. After some work (which we omit), we get the following iteration:

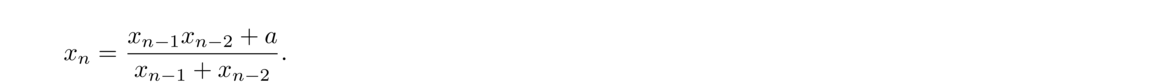

Both Muller's method and inverse quadratic interpolation have an order of convergence of about 1.839.**Like with the secant method, this result holds for starting values near a simple root, and the function must be relatively smooth. Note also that the order of the secant method, 1.618…, is the golden ratio, related to the Fibonacci numbers. The order of Muller's and IQI, 1.839…, is the tribonacci constant, related to the tribonacci numbers, defined by the recurrence xn+1 = xn + xn–1 + xn–2. That number is the solution to x3 = x2+x+1, whereas the golden ratio is the solution to x2 = x+1.

Recall that we have a and b with f(a) and f(b) of opposite signs, like in the bisection method, we say a root is bracketed by a and b. That is, the root is contained inside that interval.

Brent's method is one of the most widely used root-finding methods. You start by giving it a bracketing interval. It then uses a combination of the bisection method and inverse quadratic interpolation to find a root in that interval. In simple terms, Brent's method uses inverse quadratic interpolation unless it senses something is going awry (like an iterate leaving the bracketing interval, for example), then it switches to the safe bisection method for a bit before trying inverse quadratic interpolation again. In practice, it's a little more complicated than this. There are a number of special cases built into the algorithm. It has been carefully designed to ensure quick, guaranteed convergence in a variety of situations. It converges at least as fast as the bisection method and most of the time is better, converging superlinearly.

There are enough special cases to consider that the description of the algorithm becomes a bit messy. So we won't present it here, but a web search for Brent's method will turn up a variety of resources.

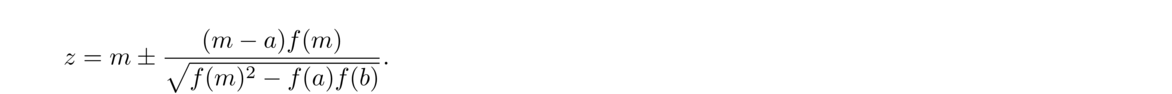

Ridder's method is a variation on bisection/false position that is easy to implement and works fairly well. Numerical Recipes recommends it as an alternative to Brent's method in many situations.

The method starts out with a and b, like in the bisection method, where one of f(a) and f(b) is positive and the other is negative. We then compute the midpoint m = (a+b)/2 and the expression

This equation gives us an improvement over the bisection method, which uses m as its estimate, leading to quadratic convergence. It is, however, slower than Newton's method, as it requires two new function evaluations at each step as opposed to only one for Newton's method. But it is more reliable than Newton's method.

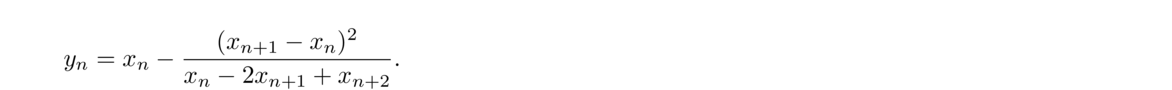

There is an algorithm called Aitken's Δ2 method that can take a slowly converging sequence of iterates and speeds things up. Given a sequence {xn} create a new sequence {yn} defined as below:

Treating this as an equation and solving for r gives the formula above (after a fair bit of algebra). Here is a Python function that displays an FPI sequence and the sped up version side by side.

Here are the first few iterations we get from iterating cos x.

The fixed point of cos x is .73908 to five decimal places and we can see that after 10 iterations, the sped-up version is correct to that point. On the other hand, it takes the regular FPI about 20 more iterations to get to that point (at which point the sped-up version is already correct to 12 places).

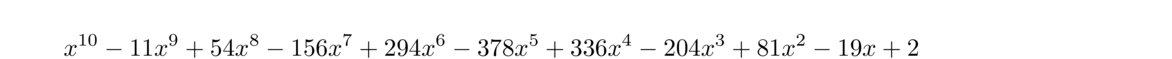

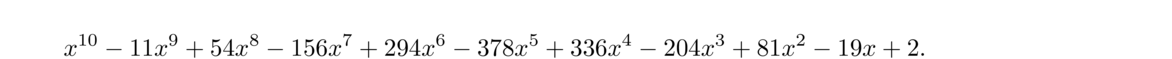

The root-finding methods considered in previous sections can be used to find roots of all sorts of functions. But probably the most important and useful functions are polynomials. There are a few methods that are designed specifically for finding roots of polynomials. Because they are specifically tailored to one type of function, namely polynomials, they can be faster than a method that has to work for any type of function. An important method is Laguerre's method. Here is how it works to find a root of a polynomial p:

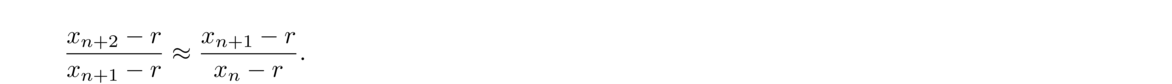

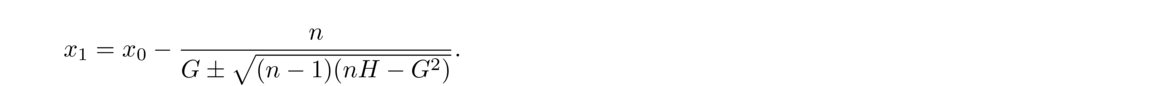

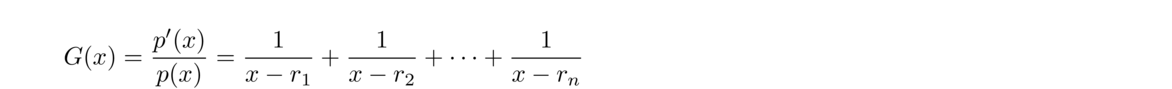

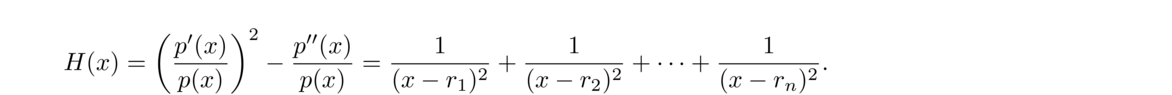

Pick a starting value x0. Compute G = p′(x0)p(x0) and H = G2–p″(x0)p(x0). Letting n be the degree of the polynomial, compute

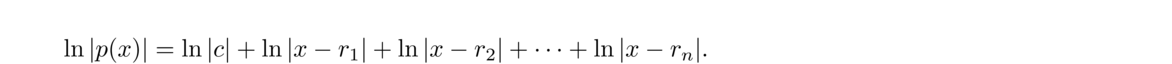

Laguerre's method actually comes from writing the polynomial in factored form p(x) = c(x–r1)(x–r2)…(x–rn). If we take the logarithm of both sides and use the multiplicative property of logarithms, we get

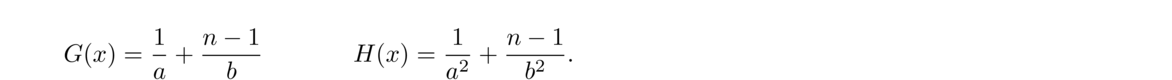

Now, for a given value of x, |x–ri| represents the distance between x and ri. Suppose we are trying to estimate r1. Once we get close to r1, it might be true that all the other roots will seem far away by comparison, and so in some sense, they are all the same distance away, at least in comparison to the distance from r1. Laguerre's method suggests that we pretend this is true and assume |x–r2| = |x–r3| = … = |x–rn|. If we do this, the expressions for G and H above simplify considerably, and we get an equation that we can solve. In particular, let a = |x–r1| and b = |x–r2| = |x–r3| = … = |x–rn|. Then the expressions for G and H simplify into

Laguerre's method works surprisingly well, usually converging to a root and doing so cubically.

One useful fact is that we can use a simple algorithm to compute p(x0), p′(x0), and p″(x0) all at once. The idea is that if we use long or synthetic division to compute p(x)/(x–x0), we are essentially rewriting p(x) as (x–x0)q(x)+r for some polynomial q and integer r (the quotient and remainder). Plugging in x = x0 gives p(x0) = r. Taking a derivative of this and plugging in x0 also gives p′(x0) = q(x0). So division actually gives both the function value and derivative at once. We can then apply this process to q(x) to get p″(x0). Here is a Python function that does this. The polynomial is given as a list of its coefficients (with the constant term first and the coefficient of the highest term last).

There are many other methods for finding roots of polynomials. One important one is the Jenkins-Traub method, which is used in a number of software packages. It is quite a bit more complicated than Laguerre's method, so we won't describe it here. But it is fast and reliable.

There is also a linear-algebraic approach that involves constructing a matrix, called the companion matrix, from the polynomial, and using a numerical method to find its eigenvalues (which coincide with the roots of the polynomial). This is essentially the inverse of what you learn in an introductory linear algebra class, where you solve polynomials to find eigenvalues. Here we are going in the reverse direction and taking advantage of some fast eigenvalue algorithms.

Another approach is to use a root isolation method to find small disjoint intervals, each containing one of the roots and then to apply a method, such as Newton's, to zero in on it. One popular, recent algorithm uses a result called Vincent's theorem is isolate the roots. Finally, there is the Lindsey-Fox method, uses the Fast Fourier Transform to compute the roots.

Some of the methods above, like the bisection method, will only find real roots. Others, like Newton's method or the secant method can find complex roots if the starting values are complex. For instance, starting Newton's method with x0 = .5+.5i will lead us to the root x = –.5+√32i for f(x) = x3–1. Muller's method can find complex roots, even if the starting value is real, owing to the square root in its formula. Another method, called Bairstow's method can find complex roots using only real arithmetic.

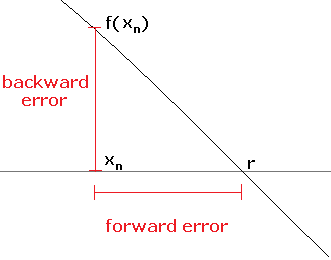

There are a couple of ways to measure how accurate an approximation is. One way, assuming we know what the actual root is, would be to compute the difference between the root r and the approximation xn, namely, |xn–r|. This is called the forward error. In practice, we don't usually have the exact root (since that is what we are trying to find). For some methods, we can derive estimates of the error. For example, for the bisection method we have |xn–r| < |a0–b0|/2n+1.

If the difference between successive iterates, |xn – xn–1| is less than some tolerance ε, then we can be pretty sure (but unfortunately not certain) that the iterates are within ε of the actual root. This is often used to determine when to stop the iteration. For example, here is how we might code this stopping condition in Python:

Sometimes it is better to compute the relative error: |xn–xn–1xn–1|.

Another way to measure accuracy is the backward error. For a given iterate xn, the backward error is |f(xn)|. For example, suppose we are looking for a root of f(x) = x2–2 and we have an approximation xn = 1.414. The backward error here is |f(1.414)| = 0.000604. We know that at the exact root r, f(r) = 0, and the backward error is measuring how far away we are from that.

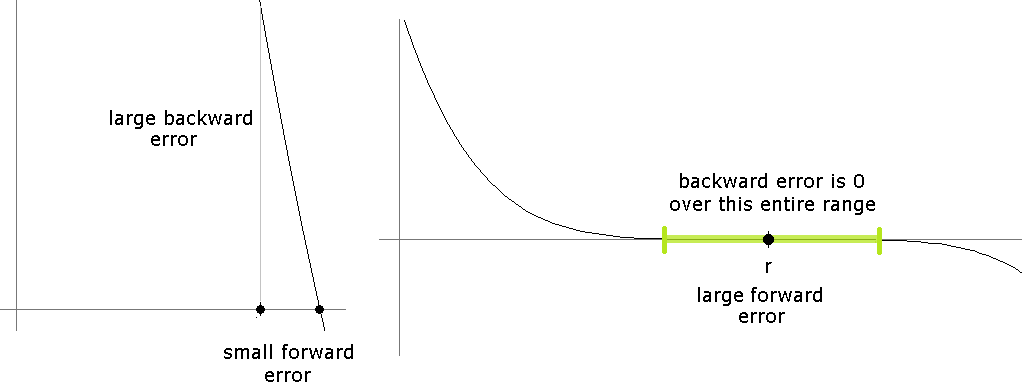

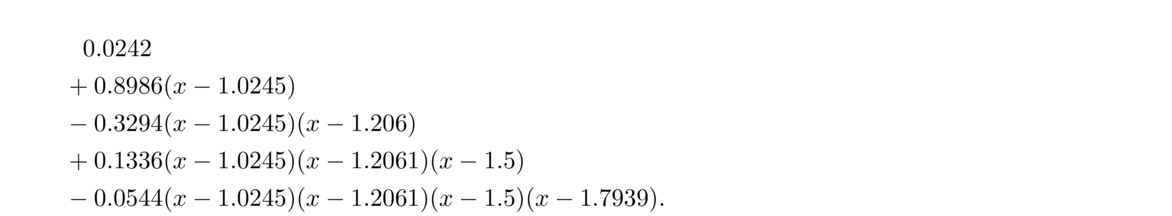

The forward error is essentially the error in the x-direction, while the backward error is the error in the y-direction.**Some authors use a different terminology, using the terms forward and backward in the opposite way that we do here. See the figure below:

We can also use backward error as a stopping condition.

If a function is almost vertical near a root, the forward error will not be a good measure as we could be quite near a root, but f(xn) will still be far from 0. On the other hand, if a function is almost horizontal near a root, the backward error will not be a good measure as f(xn) will be close to 0 even for values far from the root. See the figure below.

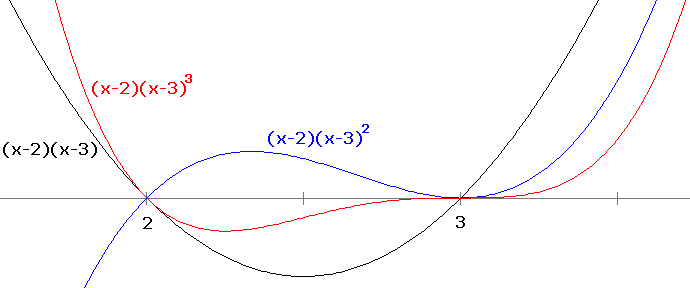

There are two types of roots: simple roots and multiple roots. For a polynomial, we can tell whether a root is simple or multiple by looking at its factored form. Those roots that are raised to the power of 1 are simple and the others are multiple. For instance, in (x–3)4(x–5)2(x–6), 6 is a simple root, while 3 and 5 are multiple roots. The definition of simple and multiple roots that works for functions in general involves the derivative: namely, a root r is simple if f′(r)≠ 0 and multiple otherwise. The multiplicity of the root is the smallest m such that f(m)(r)≠ 0. Graphically, the higher the multiplicity of a root, the flatter the graph is around that root. Shown below are the graphs of (x–2)(x–3), (x–2)(x–3)2, and (x–2)(x–3)3.

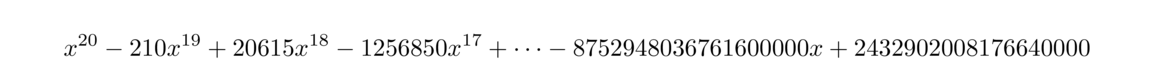

When the graph is flat near a root, it means that it will be hard to distinguish the function values of nearby points from 0, making it hard for a numerical method to get close to the root. For example, consider f(x) = (x–1)5. In practice, we might encounter this polynomial in its expanded form, x5–5x4+10x3–10x2+5x–1. Suppose we try Newton's method on this with a starting value of 1.01. After about 20 iterations, the iterates stabilize around 1.0011417013971329. We are only correct to two decimal places. If we use a computer (using double-precision floating-point) to evaluate the function at this point, we get f(1.0011417013971329) = 0 (the actual value should be about 1.94 × 10–15, but floating-point issues have made it indistinguishable from 0). For x-values in this range and closer, the value of f(x) is less than machine epsilon away from 0. For instance, f(1.000001) = 10–30, so there is no chance of distinguishing that from 0 in double-precision floating point.

The lesson is that the root-finding methods we have considered can have serious problems at multiple roots. More sophisticated approaches or more precision can overcome these problems.

James Wilkinson (who investigated it), examined what happened if the second coefficient was changed from –210 to –210.0000001192, which was a change on the order of machine epsilon on the machine he was working on. He discovered that even this small change moved the roots quite a bit, with the root at 20 moving to roughly 20.85 and the roots at 16 and 17 actually moving to roughly 16.73±2.81i.

Such a problem is sometimes called ill-conditioned. An ill-conditioned problem is one in which a small change to the problem can create a big change in the result. On the other hand, a well-conditioned problem is one where small changes have small effects.

The methods we have talked about will not work well if the problem is ill-conditioned. The problem will have to be approached from a different angle or else higher precision will be needed.

At this point, it's good to remind ourselves of why this stuff is important. The reason is that a ton of real-life problems require solutions to equations that are too hard to solve analytically. Numerical methods allow us to approximate solutions to such equations, often to whatever amount of accuracy is needed for the problem at hand.

It's also worth going back over the methods. The bisection method is essentially a binary search for the root that is probably the first method someone would come up with on their own, and the surprising thing is that it is one of the most reliable methods, albeit a slow one. It is a basis for faster approaches like Brent's method and Ridder's method.

Fixed point iteration is not the most practical method to use to find roots since it requires some work to rewrite the problem as a fixed point problem, but an understanding of FPI is useful for further studies of things like dynamical systems.

Newton's method is one of the most important root-finding methods. It works by following the tangent line down to the axis, then back up to the function and back down the tangent line, etc. It is fast, converging quadratically for a simple root. However, there is a good chance that Newton's method will fail if the starting point is not sufficiently close to the root. Often a reliable method is used to get relatively close to a root, and then a few iterations of Newton's method are used to quickly add some more precision.

Related to Newton's method is the secant method, which, instead of following tangent lines, follows secant lines built from the points of the previous two iterations. It is a little slower than Newton's method (order 1.618 vs. 2), but it does not require any derivatives. Building off of the secant method are Muller's method, which uses a parabola in place of a secant line, and inverse quadratic interpolation, which uses an inverse quadratic function in place of a secant line. These methods have order around 1.839. Brent's method, which is used in some software packages, uses a combination of IQI and bisection as a compromise between speed and reliability. Ridder's method is another reliable and fast algorithm that has the benefit of being much simpler than Brent's method. If we restrict ourselves to polynomials, there are a number of other, more efficient, approaches we can take.

We have just presented the most fundamental numerical root-finding methods here. They are prerequisite for understanding the more sophisticated methods, of which there are many, as root-finding is an active and important field of research. Hopefully, you will find the ideas behind the methods helpful in other, completely different contexts.

Many existing software packages (such as Mathematica, Matlab, Maple, Sage, etc.) have root-finding methods. The material covered here should hopefully give you an idea of what sorts of things these software packages might be using, as well as their limitations.

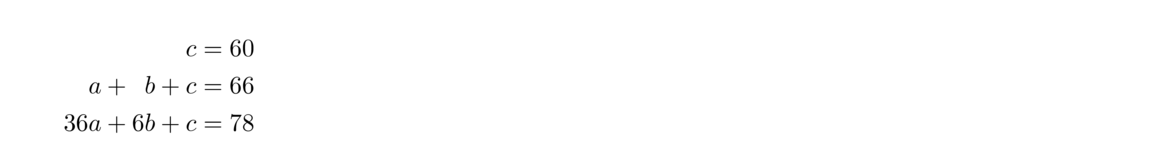

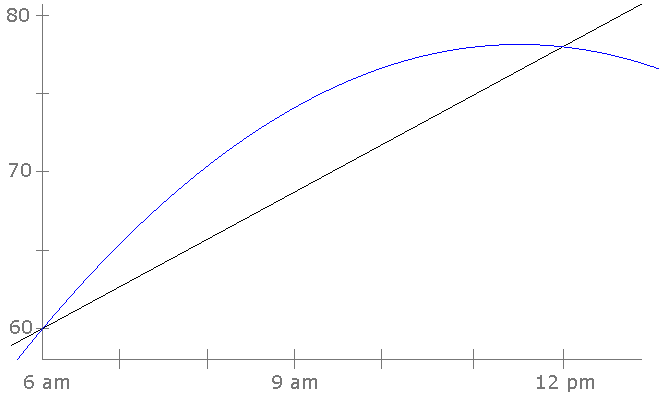

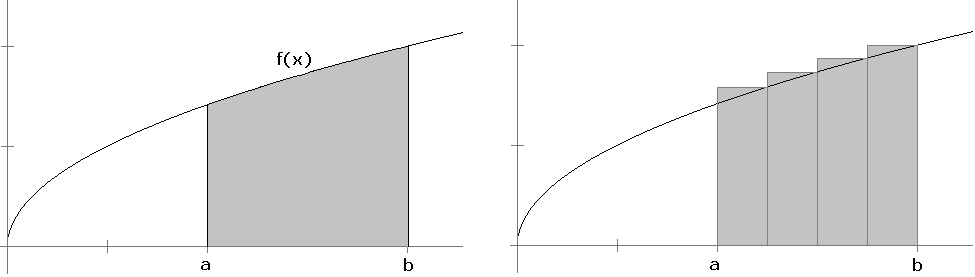

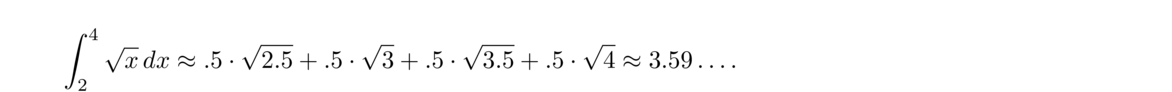

Very roughly speaking, interpolation is what you use when you have a few data points and want to use them to estimate the values between those data points. For example, suppose one day the temperature at 6 am is 60° and at noon it is 78°. What was the temperature at 10 am? We can't know for sure, but we can make a guess. If we assume the temperature rose at a constant rate, then 18° in 6 hours corresponds to a 3° rise per hour, which would give us a 10 am estimate of 60° + 4 · 3° = 72°. What we just did here is called linear interpolation: if we have a function whose value we know at two points and we assume it grows at a constant rate, we can use that to make guesses for function values between the two points.

However, a linear model is often just a rough approximation to real life. Suppose in the temperature example that we had another data point, namely that it was 66° at 7 am. How do we best make use of this new data point? The geometric fact that is useful here is that while two points determine a unique line, three points determine a unique parabola. So we will try to find the parabola that goes through the three data points.

One way to do this is to assume the equation is of the form ax2+bx+c and find the coefficients a, b, and c. Take 6 am as x = 0, 7 am as x = 1, and noon as x = 6, and plug these values in to get the following system of equations:

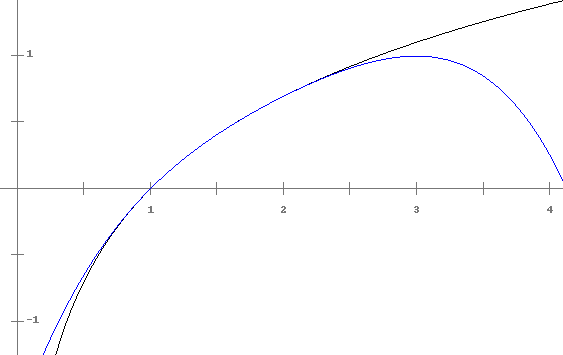

We can solve this system using basic algebra or linear algebra to get a = –3/5, b = 33/5, and c = 60. So the formula we get is –35x2+335x+60, and plugging in x = 4, we get that at 10 am the temperature is predicted to be 76.8°. The linear and quadratic models are shown below.

Notice that beyond noon, the models lose accuracy. The quadratic model predicts temperature will start dropping at noon, while linear model predicts the temperature will never stop growing. The “inter” in interpolation means “between” and these models may not be accurate outside of the data range.

The geometric facts we implicitly used in the example above are that two points determine a unique line (linear or degree 1 equation) and three points determine a unique parabola (quadratic or degree 2 equation). This fact extends to more points. For instance, four points determine a unique cubic (degree 3) equation, and in general, n points determine a unique degree n–1 polynomial.

So, given n points, (x1, y1), (x2, y2), …, (xn, yn), we want to find a formula for the unique polynomial of degree n–1 that passes directly through all of the points. One approach is a system of equations approach like the one we used above. This is fine if n is small, but for large values of n, the coefficients get very large leading to an ill-conditioned problem.**There are numerical methods for finding solutions of systems of linear equations, and large coefficients can cause problems similar to the ones we saw with polynomial root-finding.

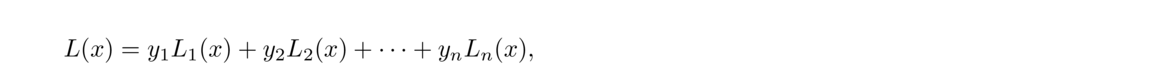

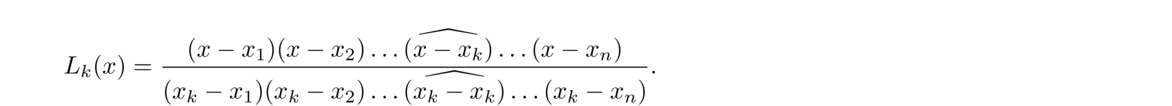

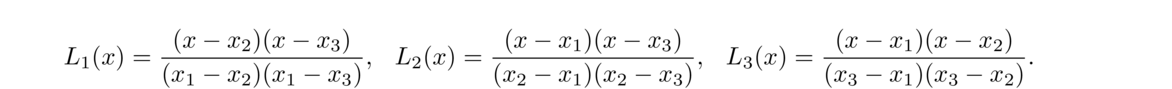

A different approach is the Lagrange formula for the interpolating polynomial. It is given by

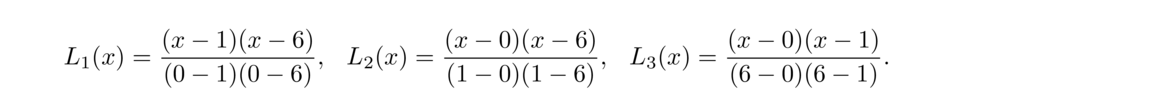

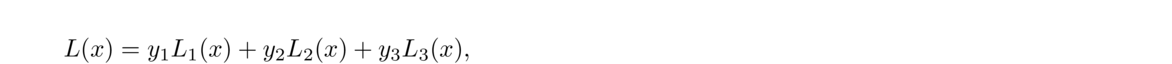

As an example, let's use this formula on the temperature data, (0, 60), (1, 66), (6, 78), from the previous section. To start, L1(x), L2(x), and L3(x) are given by

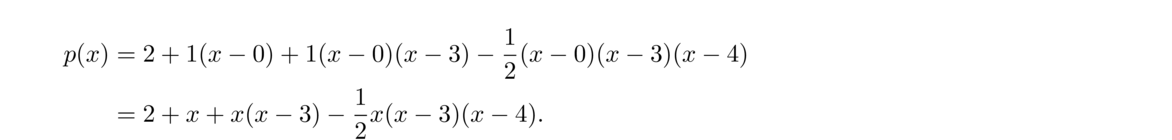

Newton's divided differences is a method for finding the interpolating polynomial for collection of data points. It's probably easiest to start with some examples. Suppose we have data points (0, 2), (2, 2), and (3, 4). We create the following table:

The first two columns are the x- and y-values. The next column's values are essentially slopes, computed by subtracting the two adjacent y-values and dividing by the corresponding x-values. The last column is computed by subtracting the adjacent values in the previous column and dividing the result by the difference 3–0 from the column of x-values. In this column, we subtract x-values that are two rows apart.

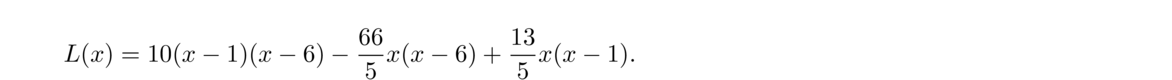

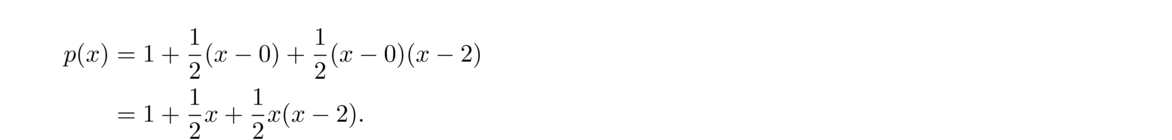

We then create the polynomial like below:

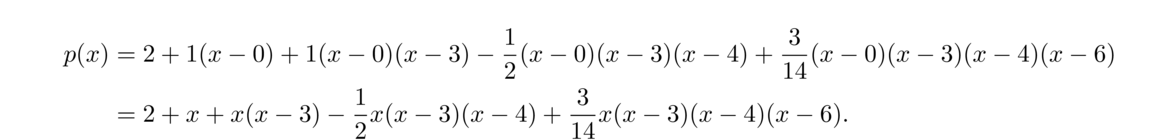

Let's try another example using the points (0, 2), (3, 5), (4, 10) and (6, 8). Here is the table:

Notice that in the third column, the x-values that are subtracted come from rows that are two apart in the first column. In the fourth column, the x-values come from rows that are three apart. We then create the polynomial from the x-coordinates and the boxed entries:

Here is the polynomial we get:

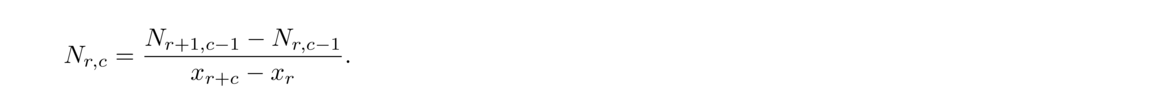

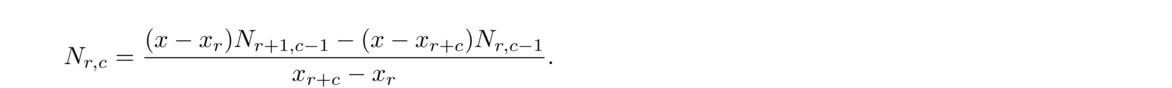

Here is a succinct way to describe the process: Assume the data points are (x0, y0), (x1, y1), …, (xn–1, yn–1). We can denote the table entry at row r, column c (to the right of the line) by Nr, c. The entries of the first column are the y-values, i.e., Ni, 0 = yi, and the other entries are given by

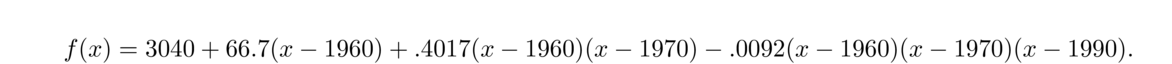

Let's interpolate to estimate the population in 1980 and compare it to the actual value. Using Newton's divided differences, we get the following:

This gives

Related to Newton's divided differences is Neville's algorithm, which uses a similar table approach to compute the interpolating polynomial at a specific point. The entries in the table are computed a little differently, and the end result is just the value at the specific point, not the coefficients of the polynomial. This approach is numerically a bit more accurate than using Newton's divided differences to find the coefficients and then plugging in the value. Here is a small example to give an idea of how it works. Suppose we have data points (1, 11), (3, 15), (4, 20), and (5, 22), and we want to interpolate at x = 2. We create the following table:

The value of the interpolating polynomial at 2 is 10.75, the last entry in the table. The denominators work just like with Newton's divided differences. The numerators are similar in that they work with the two adjacent values from the previous column, but there are additional terms involving the point we are interpolating at and the x-values.

We can formally describe the entries in the table in a similar way to what we did with Newton's divided differences. Assume the data points are (x0, y0), (x1, y1), …, (xn–1, yn–1). We can denote the table entry at row r, column c (to the right of the line) by Nr, c. The entries of the first column are the y-values, i.e., Ni, 0 = yi, and the other entries are given by the following, where x is the value we are evaluating the interpolating polynomial at:

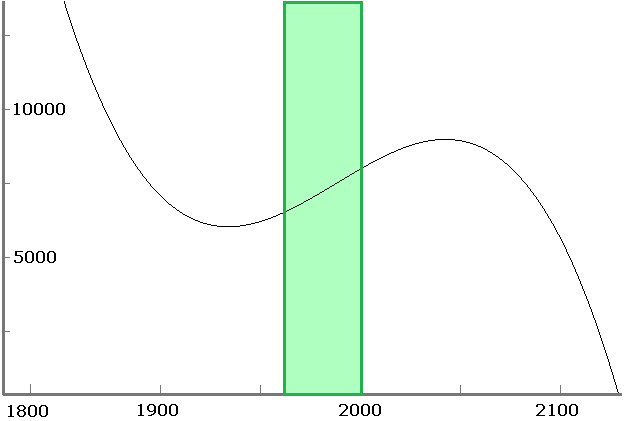

At http://www.census.gov/population/international/data/worldpop/table_history.php there is a table of the estimated world population by year going back several thousand years. Suppose we take the data from 1000 to 1900 (several dozen data points) and use it to interpolate to years that are not in the table. Using Newton's divided differences we get the polynomial that is graphed below.**The units for x-values are 100s of years from 1000 AD. For instance x = 2.5 corresponds to 1250. We could have used the actual years as x-values but that would make the coefficients of the polynomials extremely small. Rescaling so that the units are closer to zero helps keep the coefficients reasonable.

The thing to notice is the bad behavior between x = 0 and x = 3 (corresponding to years 1000 through 1200). In the middle of its range, the polynomial is well behaved, but around the edges there are problems. For instance, the interpolating polynomial gives a population of almost 20000 million people (20 billion) around 1025 and a negative population around 1250.

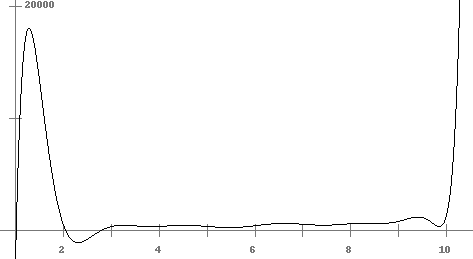

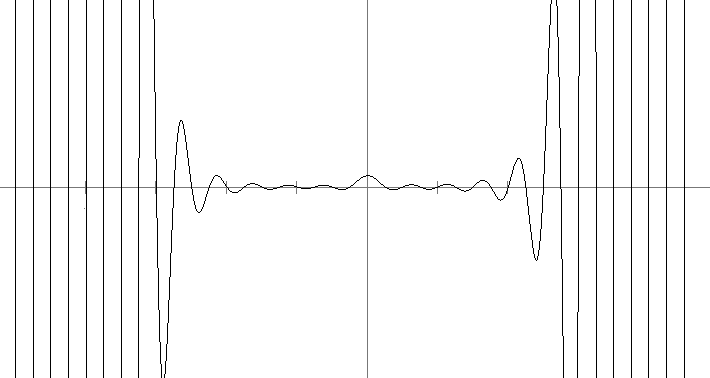

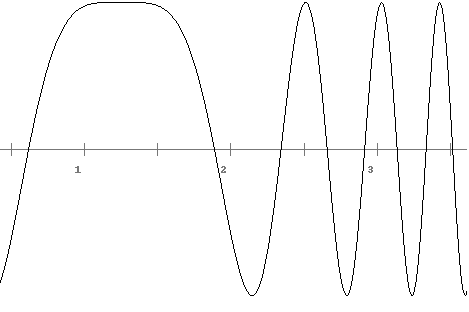

This is a common problem and is known as the Runge phenomenon. Basically, polynomials want to bounce up and down between points. We can control the oscillations in the middle of the range, but the edge can cause problems. For example, suppose we try to interpolate a function that is 0 everywhere except that f(0) = 1. If we use 20 evenly spaced points between -5 and 5, we get the following awful result:

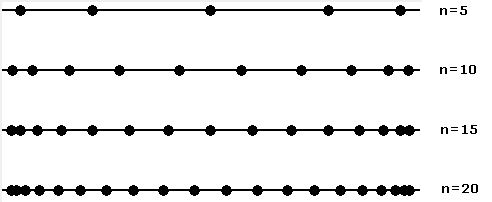

The solution to this problem is to pick our points so that they are not evenly spaced.**In fact, evenly spaced points are usually one of the worst choices, which is unfortunate since a lot of real data is collected in that way. We will use more points near the outside of our range and fewer inside. This will help keep the edges of the range under control. Here is an example of evenly-spaced points versus our improvement:

We will see exactly how to space those points shortly. First, we need to know a little about the maximum possible error we get from interpolation.

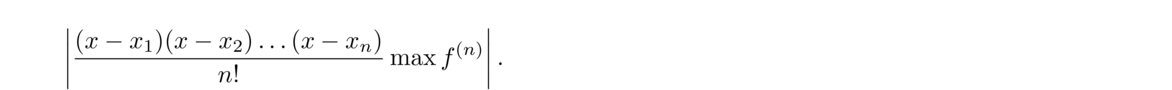

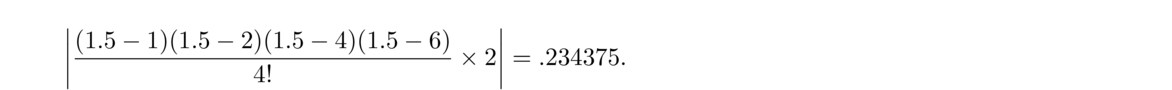

When interpolating we are actually trying to estimate some underlying function f. We usually don't know exactly what f is. However, we may have some idea about its behavior. If so, the following expression gives an upper bound for the error between the interpolated value at x and its actual value:

Here, f(n) refers to the nth derivative of f. The max is taken over the range that contains x and all the xi. Here is an example of how the formula works: suppose we know that function changes relatively slowly, namely that |f(n)(x)| < 2 for all n and all x. Suppose also that the points we are using have x-values at x = 1, 2, 4, and 6. The interpolation error at x = 1.5 must be less than

With a fixed number of points, the only part of the error term that we can control is the (x–x1)(x–x2)…(x–xn) part. We want to find a way to make this as small as possible. In particular, we want to minimize the worst-case scenario. That is, we want to know what points to choose so that (x–x1)(x–x2)…(x–xn) never gets too out of hand.

In terms of where exactly to space the interpolation points, the solution involves something called the Chebyshev polynomials. They will tell us exactly where to position our points to minimize the effect of the Runge phenomenon. In particular, they are the polynomials that minimize the (x–x1)(x–x2)…(x–xn) term in the error formula.

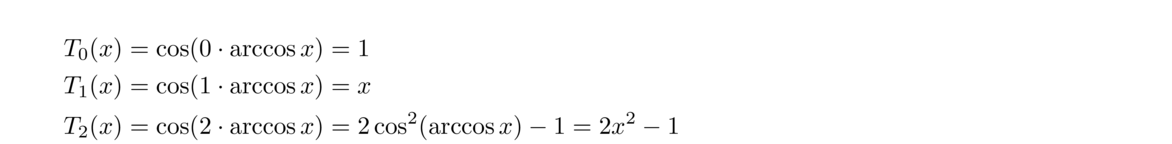

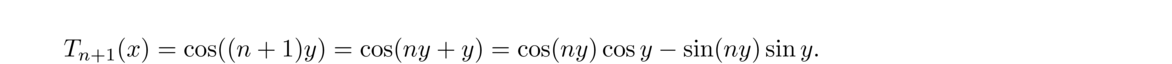

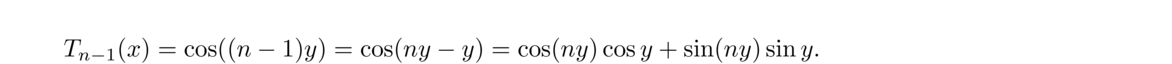

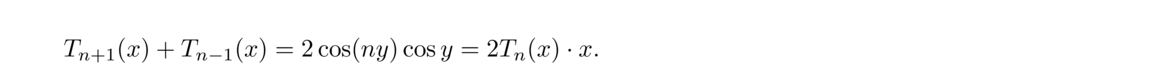

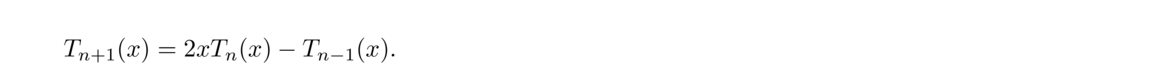

The nth Chebyshev polynomial is defined by

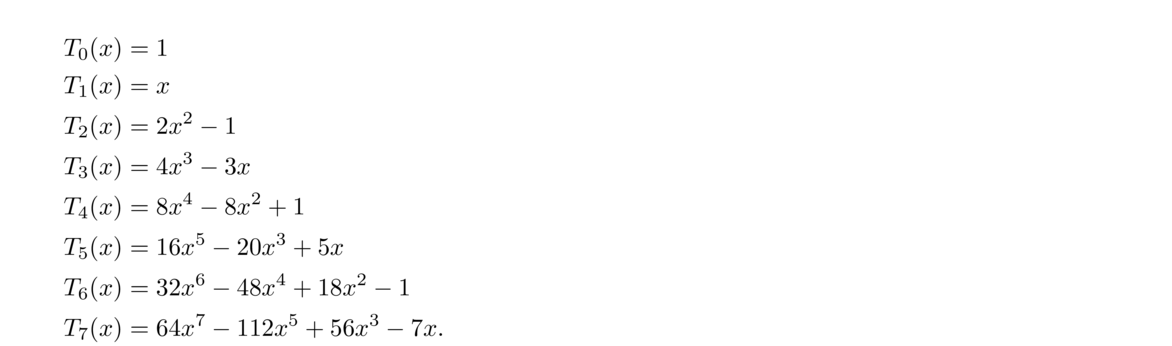

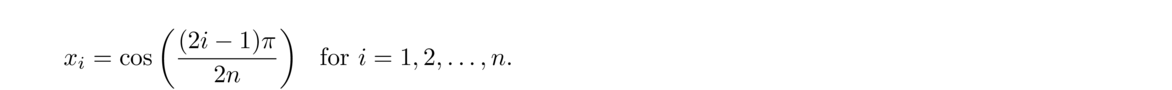

The roots of the Chebyshev polynomials (which we will call Chebyshev points tell us exactly where to best position the interpolation points in order to minimize interpolation error and the Runge effect.

Here are the points for a few values of n. We see that the points become more numerous as we reach the edge of the range.

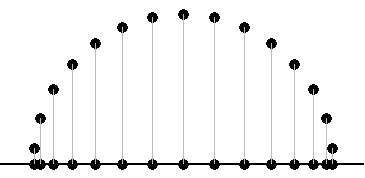

In fact, the points being cosines of linearly increasing angles means that they are equally spaced around a circle, like below:

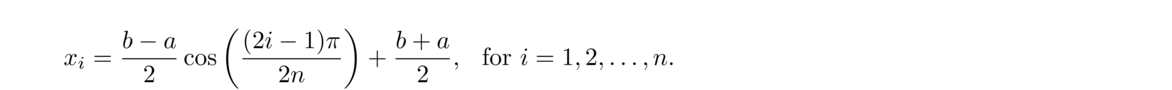

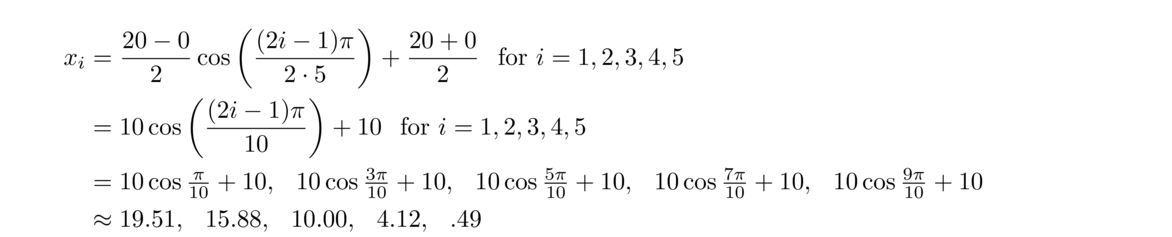

If we have a more general interval, [a, b], we have to scale and translate from [–1, 1]. The key is that to go from [–1, 1] to [a, b], we have to stretch or contract [–1, 1] by a factor of b–a1––1 = b–a2 and translate it so that its center moves to b+a2. Applying these transformations to the Chebyshev points, we get the following

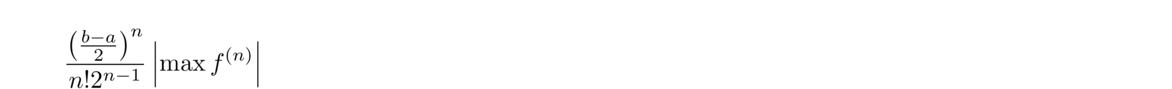

Plugging the Chebyshev points into the error formula, we are guaranteed that for any x ∈ [a, b] the interpolation error will be less than

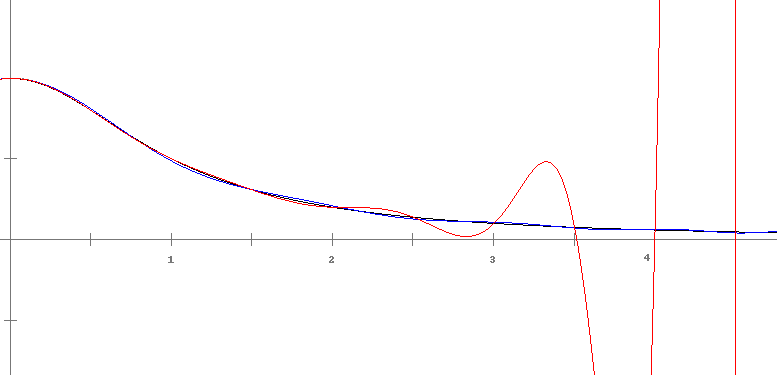

Below is a comparison of interpolation using 21 evenly-spaced nodes (in red) versus 21 Chebyshev nodes (in blue) to approximate the function f(x) = 1/(1+x2) on [–5, 5]. Just the interval [0, 5] is shown, as the other half is the similar. We see that the Runge phenomenon has been mostly eliminated by the Chebyshev nodes.

One use for interpolation is to approximate functions with polynomials. This is a useful thing because a polynomial approximation is often easier to work with than the original function.

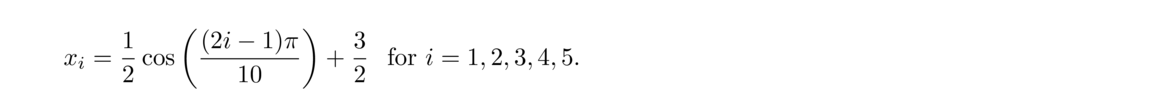

As an example, let's approximate ln x on the interval [1, 2] using five points. The Chebyshev points are

We can see that they match up pretty well on [1, 2]. Using the error formula to compute the maximum error over the range, we see that the error must be less than 4!/(5! · 24) = 1/80. (The 4! comes from finding the maximum of the fifth derivative of ln x.) But in fact, this is an overestimate. The maximum error actually happens at x = 1 and we can compute it to be roughly 7.94 × 10–5.

You might quibble that we actually needed to know some values of ln x to create the polynomial. This is true, but it's just a couple of values and they can be computed by some other means. Those couple of values are then used to estimate ln x over the entire interval. In fact, to within an error of about 7.94 × 10–5, we are representing the entire range of values the logarithm can take on in [1, 2] by just 9 numbers (the coefficients and interpolation points).

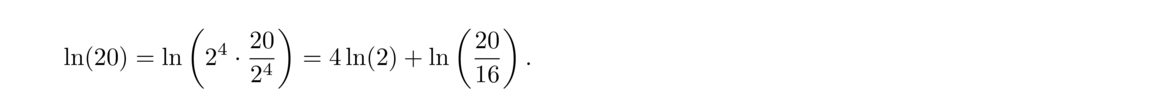

But we can actually use this to get ln x on its entire range, (0, ∞). Suppose we want to compute ln(20). If we keep dividing 20 by 2, eventually we will get to a value between 1 and 2. In fact, 20/24 is between 1 and 2. We then have

This technique works in general. Given any x ∈ (2, ∞), we repeatedly divide x by 2 until we get a value between 1 and 2. Say this takes d divisions. Then our estimate for ln(x) is d ln 2 + P(x/2d), where P is our interpolating polynomial.

A similar method can give us ln(x) for x ∈ (0, 1): namely, we repeatedly multiply x until we are between 1 and 2, and our estimate is P(2dx)–d ln 2.

The example above brings up the question of how exactly computers and calculators compute functions.

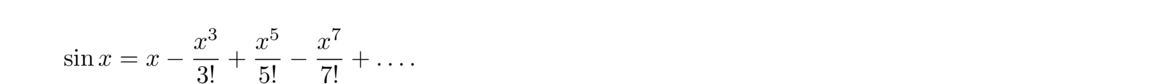

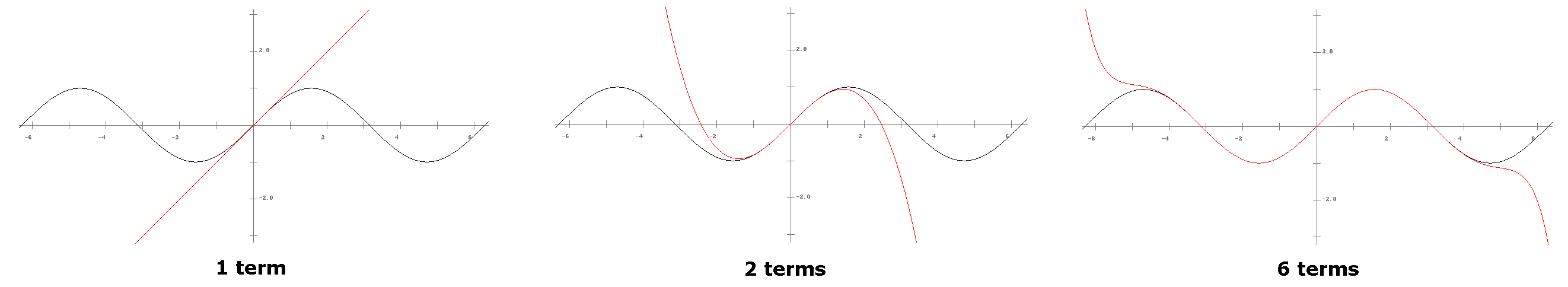

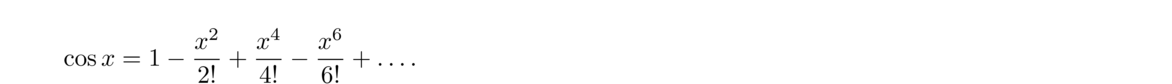

One simple way to approximate a function is to use its Taylor series. If a function is reasonably well-behaved, it can be written as an infinitely long polynomial. For instance, we can write

The first approximation, sin x ≈ x, is used quite often in practice. It is quite accurate for small angles.

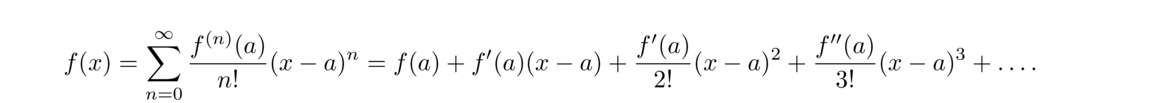

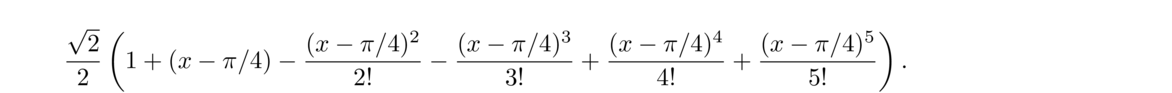

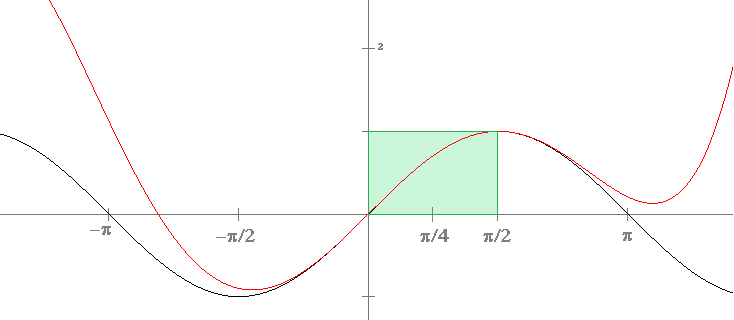

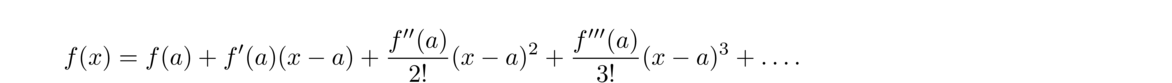

In general, the Taylor series of a function f(x) centered at a is

It is a very close approximation on the interval [0, π/2]. But this is all we need to get cos x for any real number x. Looking at figure above, we see that cos x between –π/2 and π is a mirror image of cos x between 0 and π/2. In fact, there is a trig identity that says cos(π/2+x) = cos(π/2–x). So, since we know cos x on [0, π/2], we can use that to get cos x on [π/2, π]. Similar use of trig identities can then get us cos x for any other x.

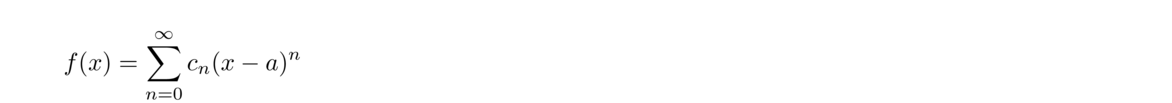

Taylor series are simple, but they often aren't the best approximation to use. One way to think of Taylor series is we are trying to write a function using terms of the form (x–a)n. Basically, we are given a function f(x) and we are trying to write

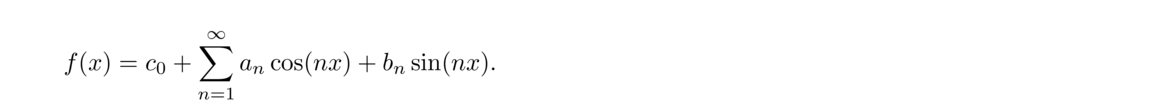

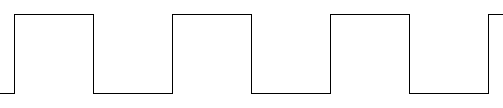

For example, the function shown partially below is called a square wave.

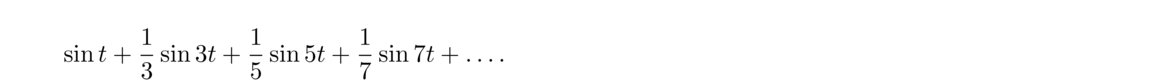

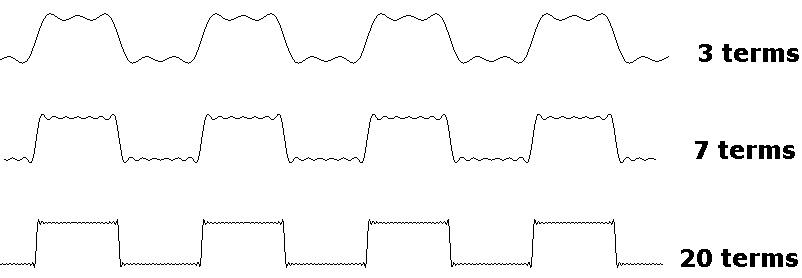

Choosing the frequency and amplitude of the wave to make things work out, the Fourier series turns out to be

Fourier series are used extensively in signal processing.

One of the most accurate approaches of all is simply to apply Newton's Divided Differences to the Chebyshev points, (xi, f(xi)), where xi = b–a2 cos((2i–1)π2n) + b+a2 for i = 1, 2, …, n.

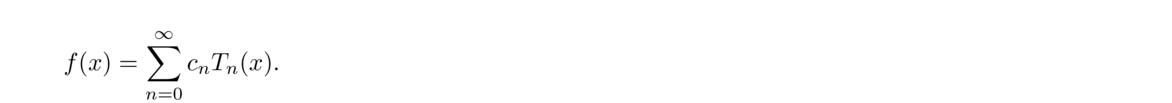

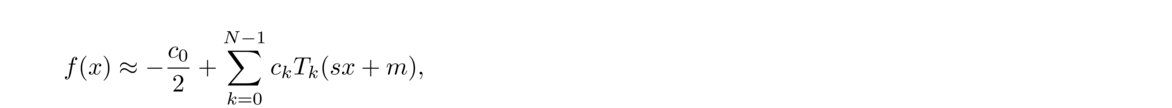

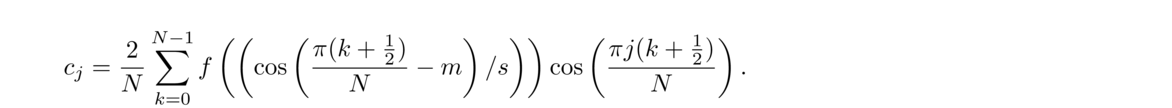

Another way of looking at this is that we are trying to write the function in terms of the Chebyshev polynomials, namely

At the CPU level, many chips use something called the CORDIC algorithm to compute trig functions, logarithms, and exponentials. It approximates these functions using only addition, subtraction, multiplication and division by 2, and small tables of precomputed function values. Multiplication and division by 2 at the CPU level correspond to just shifting bits left or right, which is something that can be done very efficiently.

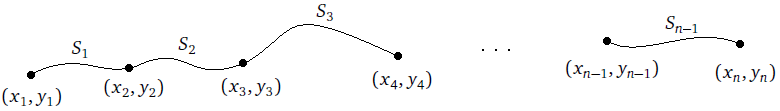

One problem with using a single polynomial to interpolate a large collection of points is that polynomials want to oscillate, and that oscillation can lead to unacceptable errors. We can use the Cheybshev nodes to space the points to reduce this error, but real-life constraints often don't allow us to choose our data points, like with census data, which comes in ten-year increments.

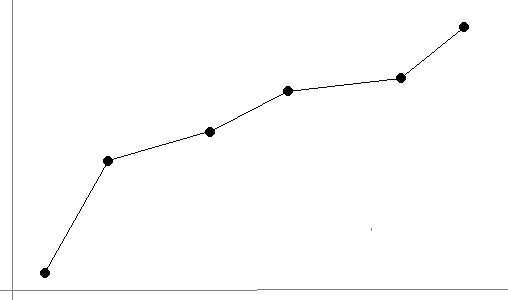

A simple solution to this problem is piecewise linear interpolation, which is where we connect the points by straight lines, like in the figure below:

One benefit of this approach is that it is easy to do. Given two points, (x1, y1) and (x2, y2), the equation of the line between them is y = y1 + y2–y1x2–x1(x–x1).

The interpolation error for piecewise linear interpolation is on the order of h2, where h is the maximum of xi+1–xi over all the points, i.e., the size of the largest gap in the x-direction between the data points. So unlike polynomial interpolation, which can suffer from the Runge phenomenon if large numbers of equally spaced points are used, piecewise linear interpolation benefits when more points are used. In theory, by choosing a fine enough mesh of points, we can bring the error below any desired threshold.

By doing a bit more work, we can improve the error term of piecewise linear interpolation from h2 to h4 and also get a piecewise interpolating function that is free from sharp turns. Sharp turns are occasionally a problem for physical applications, where smoothness (differentiability) is needed.

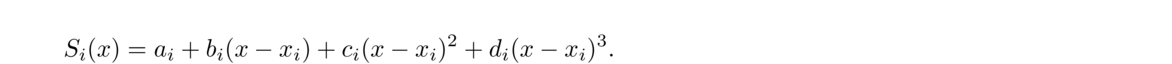

Instead of using lines to approximate between (xi, yi) and (xi+1, yi+1), we will use cubic equations of the form

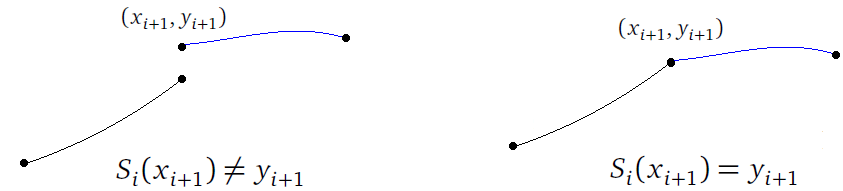

If we have n points, then we will have n–1 equations, and we will need to determine the coefficients ai, bi, ci, and di for each equation. However, right away we can determine that ai = yi for each i, since the constant term ai tells the height that curve Si starts at, and that must be the y-coordinate of the corresponding data value, (xi, yi). So this leaves bi, ci, and di to be determined for each curve, a total of 3(n–1) coefficients. To uniquely determine these coefficients, we will want to find a system of 3(n–1) equations that we can solve to get the coefficients.

We will first require for each i = 1, 2, … n that Si(xi+1) = yi+1. This says that at each data point, the curves on either side must meet up without a jump. This implies that the overall combined function is continuous. See the figure below.

This gives us a total of n–1 equations to use in determining the coefficients.

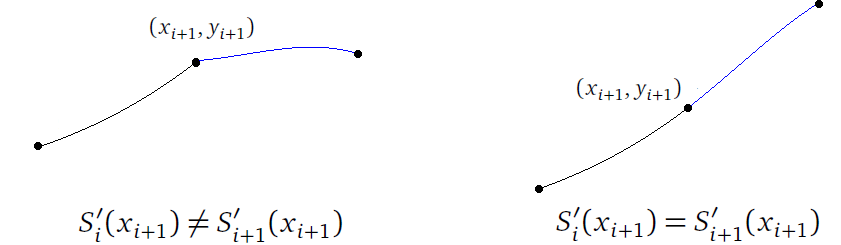

Next, for each i = 1, 2, … n, we require that S′i(xi+1) = S′i+1(xi+1). This says that where the individual curves come together, there can't be a sharp turn. The functions have to meet up smoothly. This implies that the overall function is differentiable. See the figure below.

This gives us n–2 more equations for determining the coefficients.

We further require for each i = 1, 2, … n, that S″i(xi+1) = S″i+1(xi+1). This adds a further smoothness condition at each data point that says that the curves have meet up very smoothly. This also gives us n–2 more equations for determining the coefficients.

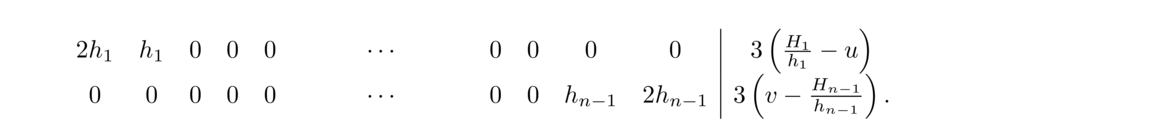

At this point, we have a total of (n–1)+(n–2)+(n–2) = 3n–5 equations to use for determining the coefficients, but we need 3n–3 equations. There are a few different ways that people approach this. The simplest approach is the so-called natural spline equations, which are S″1(x1) = 0 and S″n–1(xn) = 0. Geometrically, these say the overall curve has inflection points at its two endpoints.

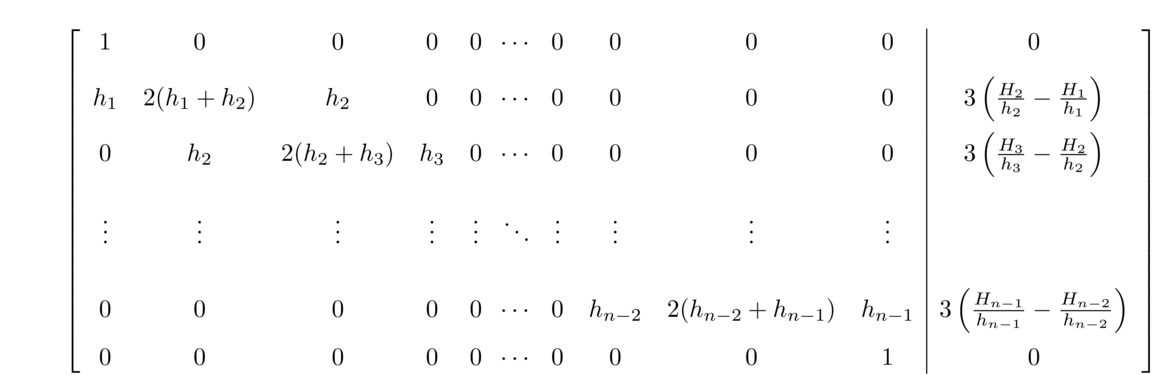

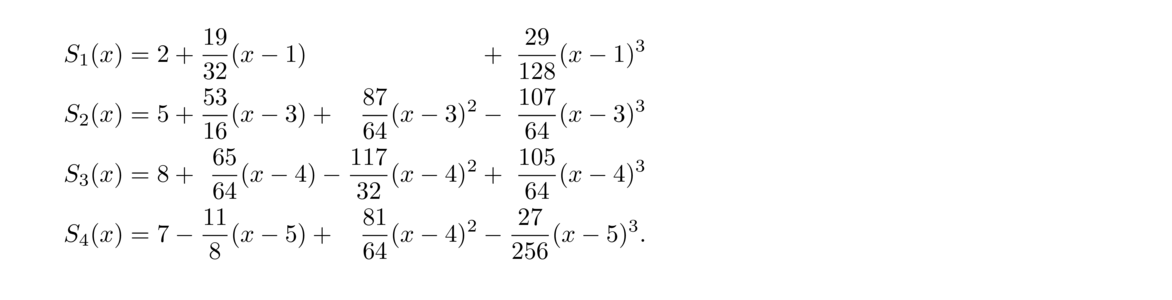

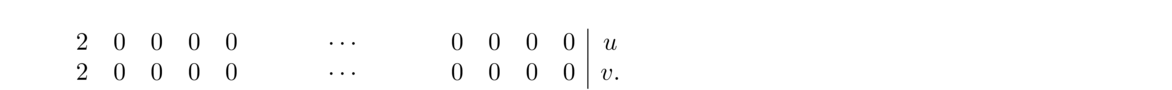

Systems of equations like this are usually solved with techniques from linear algebra. With some clever manipulations (which we will omit), we can turn this system into one that can be solved by efficient algorithms. The end result for a natural spline is the following**The derivation here follows Sauer's Numerical Analysis section 3.4 pretty closely.:

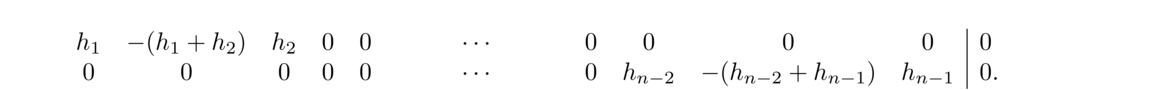

Set Hi = yi+1–yi and hi = xi+1–xi for i = 1, 2…, n–1. Then set up the following matrix.

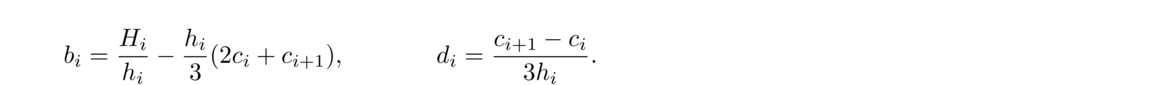

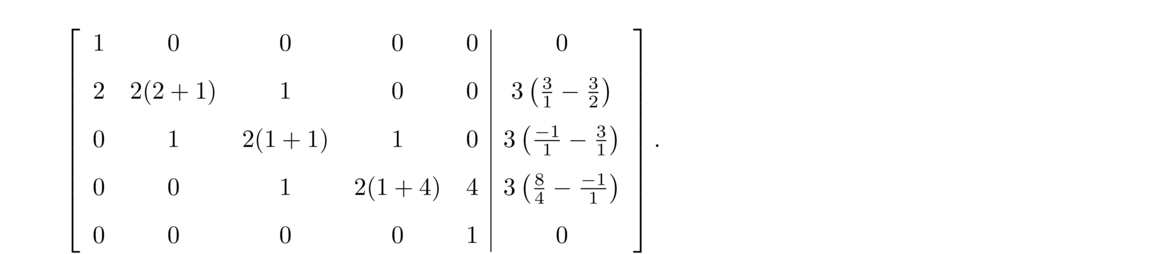

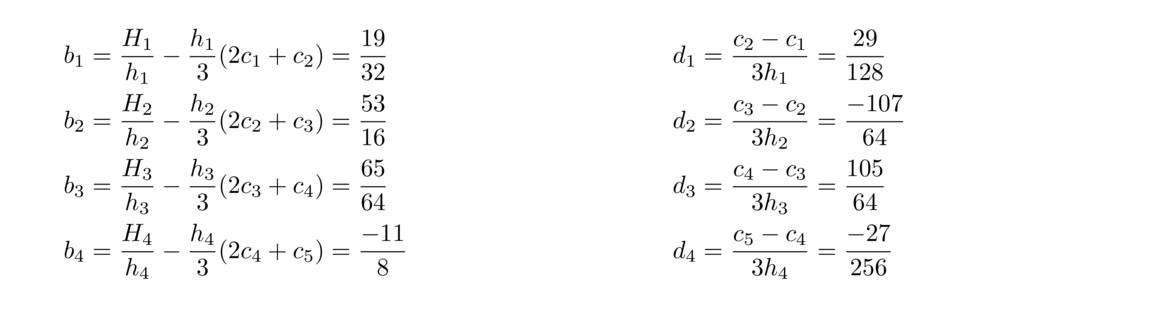

This matrix is tridiagonal (in each row there are only three nonzero entries, and those are centered around the diagonal). There are fast algorithms for solving tridiagonal matrix equations. The solutions to this system are c1, c2, …, cn. Once we have these, we can then get bi and di for i = 1, 2, … n–1 as follows:

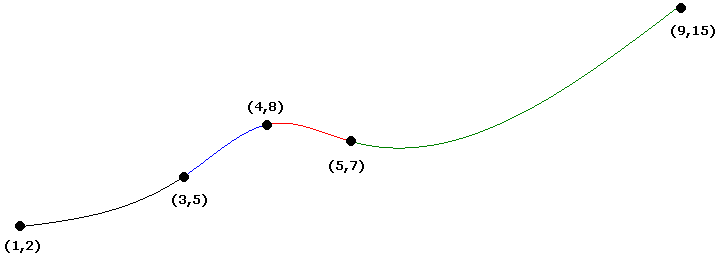

Suppose we want the natural cubic spline through (1, 2), (3, 5), (4, 8), (5, 7), and (9, 15).

The h's are the differences between the x-values, which are h1 = 2, h2 = 1, h3 = 1, and h4 = 4. The H's are the differences between the y-values, which are H1 = 3, H2 = 3, H3 = –1, and H4 = 8. We then put these into the following matrix:

Besides the natural cubic spline, there are a few other types. Recall that the natural spline involves setting S″1(x1) = 0 and S″n–1(xn) = 0. If we require these second derivatives to equal other values, say u and v, we have what is called a curvature-adjusted cubic spline (curvature being given by the second derivative). The procedure above works nearly the same, except that the first and last rows of the matrix become the following:

If the derivative of the function being interpolated is known at the endpoints of the range, we can incorporate that information to get a more accurate interpolation. This approach is called a clamped cubic spline. Assuming the values of the derivative at the two endpoints are u and v, we set S′(x1) = u and S′(xn) = v. After running through everything, the only changes are in the second and second-to-last rows of the matrix. They become:

One final approach that is recommended in the absence of any other information is the not-a-knot cubic spline. It is determined by setting S‴1(x2) = S‴2(x2) and S‴n–2(xn–1 = S‴n–1(xn–1). These are equivalent to requiring d1 = d2 and dn–2 = dn–1, which has the effect of forcing S1 and S2 to be identical as well as forcing Sn2 and Sn–1 to be identical. The only changes to the procedure are in the second and second-to-last rows:

The interpolation error for cubic splines is on the order of h4, where h is the maximum of xi+1–xi over all the points xi (i.e., the largest gap between the x coordinates of the interpolating points). Compare this with piecewise linear interpolation, whose interpolation error is on the order of h2.

Bézier curves were first developed around 1960 by mathematician Paul de Casteljau and engineer Pierre Bézier, working at competing French car companies. Nowadays, Bézier curves are very important in computer graphics. For example, the curve and path drawing tools in many graphics programs use Bézier curves. They are also used for animations in Adobe Flash and other programs to specify the path that an object will move along. Another important application for Bézier curves is in specifying the curves in modern fonts. We can also use Bézier curves in place of ordinary cubic curves in splines.

Before we define what a Bézier curve is, we need to review a little about parametric equations.

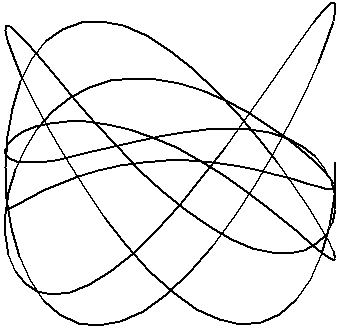

Maybe it represents a trace of the motion of an object under the influence of several forces, like a rock in the asteroid belt. Such a curve is not easily represented in the usual way. However, we can define it as follows:

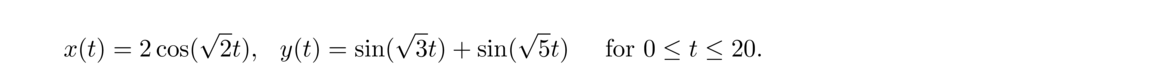

Parametric equations are useful for describing many curves. For instance, a circle is described by x = cos(t), y = sin t, 0 ≤ t ≤ 2π. A function of the form y = f(x) is described by the equations x = t, y = f(t). In three-dimensions, a helix (the shape of a spring and DNA) is given by x = cos t, y = sin t, z = t. There are also parametric surfaces, where the equations for x, y, and z are each functions of two variables. Various interesting shapes, like toruses and Möbius strips can be described simply by parametric surface equations. Here a few interesting curves that can be described by parametric equations.

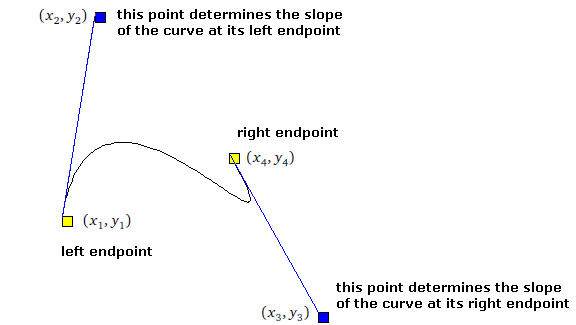

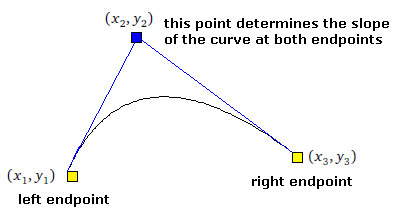

A cubic Bézier curve is determined by its two endpoints and two control points that are used to determine the slope at the endpoints. In the middle, the curve usually follows a cubic parametric equation. See the figure below:

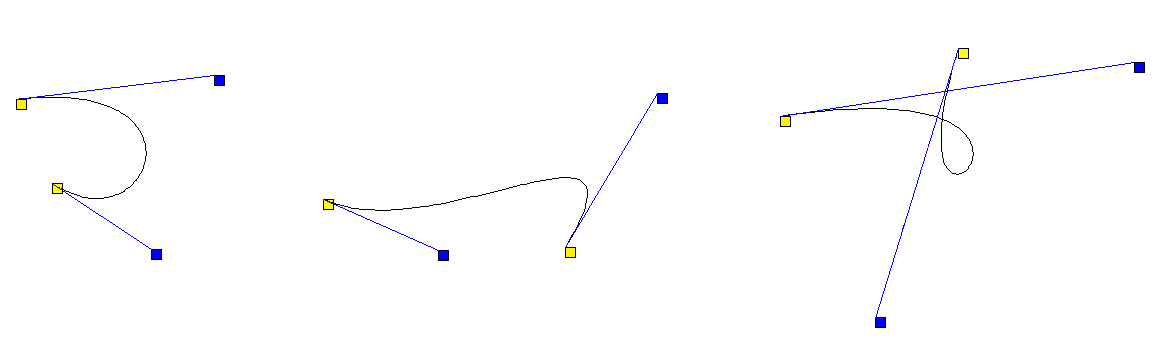

Here are a few more examples:

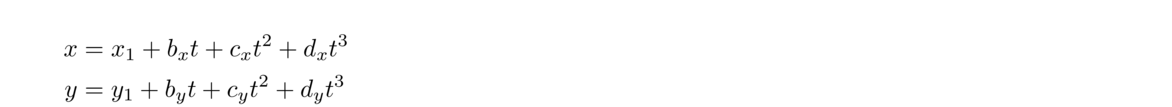

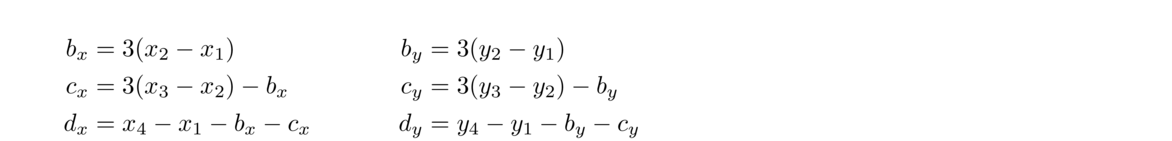

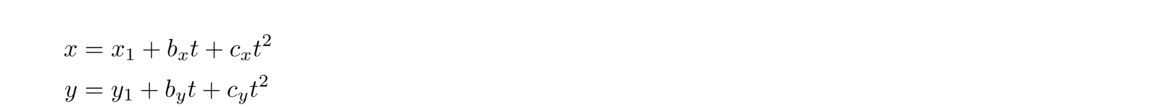

The parametric equations for a cubic Bézier curve are given below:

Sometimes quadratic Bézier curves are used. The parametric equations for these curves are quadratic and only one control point is used to control the slopes at both endpoints, as shown below:

The parametric equations for a quadratic Bézier curve are given below:

One can also define quartic and higher order Bézier curves, with more control points specifying the slope elsewhere on the curve, but, in practice, the added complexity is not worth the extra precision.

Just to summarize, the point of interpolation is that we have data that come from some underlying function, which we may or may not know. When we don't know the underlying function, we usually use interpolation to estimate the values of the function at points between the data points we have. If we do know the underlying function, interpolation is used to find a simple approximation to the function.

The first approach to interpolation we considered was finding a single polynomial goes through all the points. The Lagrange formula gives us a formula for that interpolating polynomial. It is useful in situations where an explicit formula for the coefficients is needed. Newton's divided differences is a process that finds the coefficients of the interpolating polynomial. A similar process, Neville's algorithm, finds the value of the polynomial at specific points without finding the coefficients.

When interpolating, sometimes we can choose the data points and sometimes we are working with an existing data set. If we can choose the points, and we want a single interpolating polynomial, then we should space the points out using the Chebyshev nodes.

Equally-spaced data points are actually one of the worst choices as they are most subject to the Runge phenomenon. And the more equally-spaced data points we have, the worse the Runge phenomenon can get. For this reason, if interpolating from an existing data set with a lot of points, spline interpolation would be preferred.

We also looked at Bézier curves which can be used for interpolation and have applications in computer graphics.

Another type of interpolation is Hermite interpolation, where if there is information about the derivative of the function being interpolated, a more accurate polynomial interpolation can be formed. There is also interpolation with trigonometric functions (such as with Fourier series), which has a number of important applications.

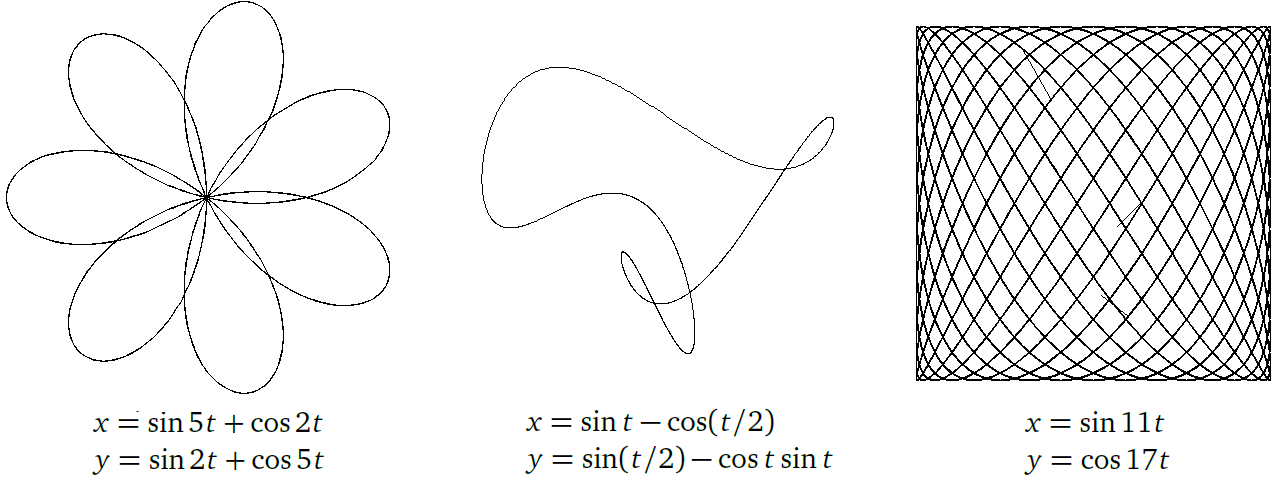

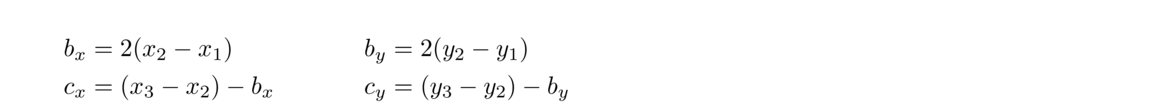

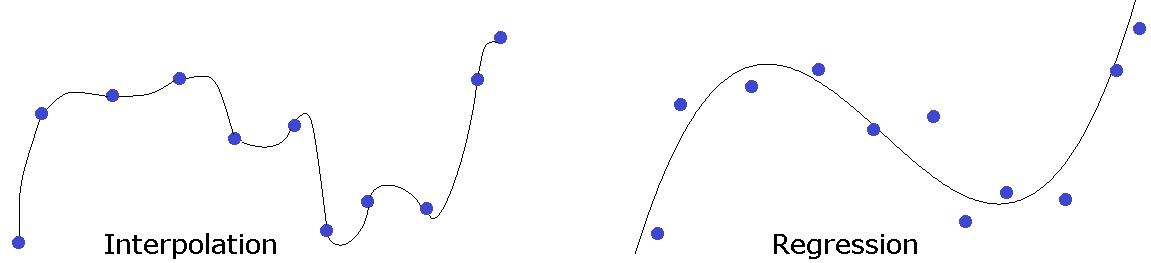

Finally, we note that interpolation is not the only approach to estimating a function. Regression (often using least-squares technique) involves finding a function that doesn't necessarily exactly pass through the data points, but passes near the points and minimizes the overall error. See the figure below.

Taking derivatives is something that is generally easy to do analytically. The rules from calculus are enough in theory to differentiate just about anything we have a formula for. But sometimes we don't have a formula, like if we are performing an experiment and just have data in a table. Also, some formulas can be long and computationally very expensive to differentiate. If these are part of an algorithm that needs to run quickly, it is often good enough to replace the full derivative with a quick numerical estimate. Numerical differentiation is also an important part of some methods for solving differential equations. And often in real applications, functions can be rather complex things that are defined by computer programs. Taking derivatives of such functions analytically might not be feasible.

The starting point for many methods is the definition of the derivative

In theory, a smaller values of h should give us a better approximations, but in practice, floating-point issues prevent that. It turns out that there are two competing errors here: the mathematical error we get from using a nonzero h, and floating-point error that comes from breaking the golden rule (we are subtracting nearly equal terms in the numerator).

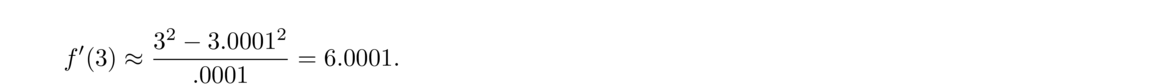

Here is a table of some h values and the approximate derivative of x2 at 3, computed in Python.

We see that the approximations get better for a while, peaking around h = 10–7 and then getting worse. As h gets smaller, our violation of the golden rule gets worse and worse. To see what is happening, here are the first 30 digits of the floating-point representation of (3+h)2, where h = 10–13:

9.000000000000600408611717284657

The last three “correct” digits are 6 0 0. Everything after that is an artifact of the floating-point representation. When we subtract 9 from this and divide by 10–13, all of the digits starting with the that 6 are “promoted” to the front, and we get 6.004086…, which is only correct to the second decimal place.

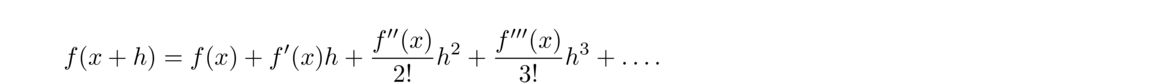

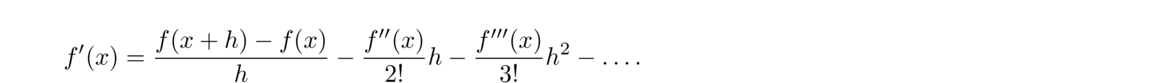

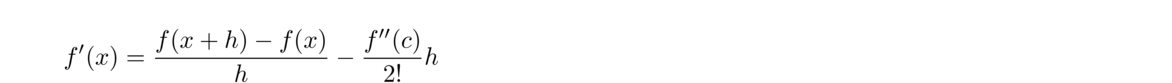

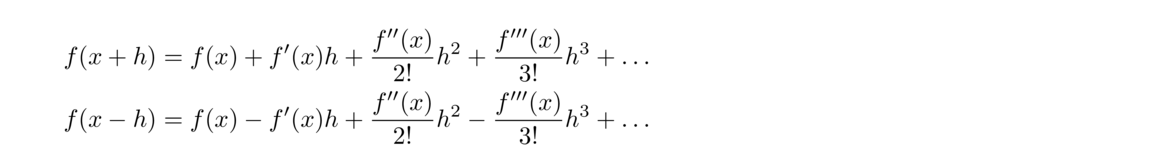

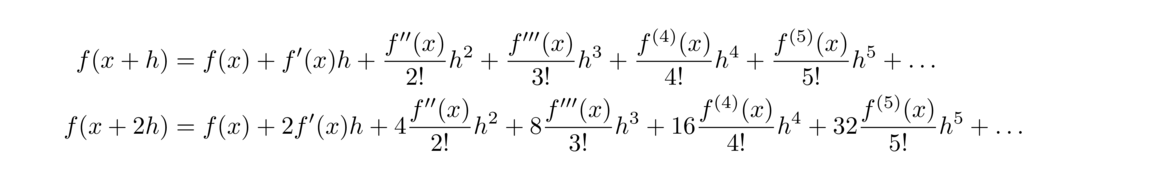

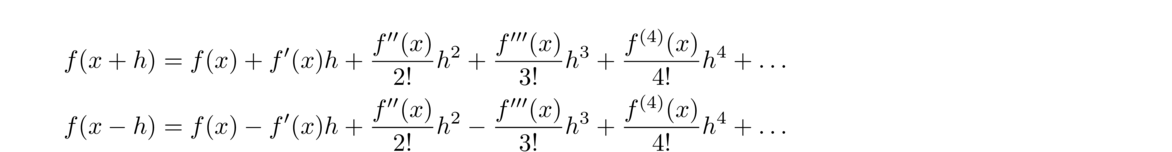

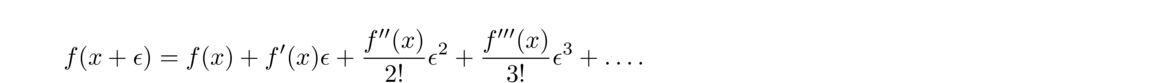

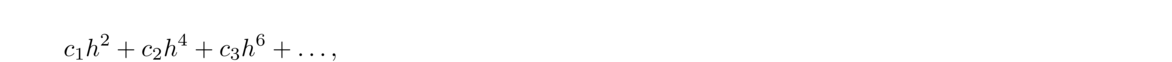

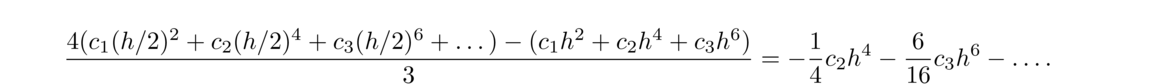

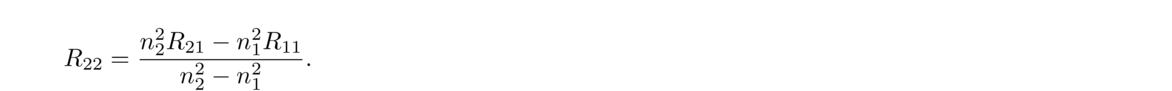

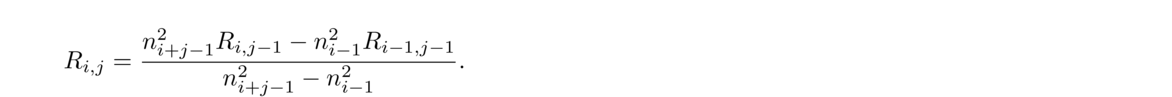

The mathematical error comes from the fact that we are approximating the slope of the tangent line with slopes of nearby secant lines. The exact derivative comes from the limit as h approaches 0, and the larger h is, the worse the mathematical error is. We can use Taylor series to figure out this error.

First, recall the Taylor series formula for f(x) centered at x = a is

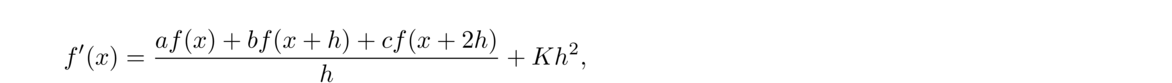

Just like with root-finding, a first order method is sometimes called linear, a second order quadratic, etc. The higher the order, the better, as the higher order allows us to get away with smaller values of h. For instance, all other things being equal, a second order method will get you twice as many correct decimal places for the same h as a first order method.

One the one hand, pure mathematics says to use the smallest h you possibly can in order to minimize the error. On the other hand, practical floating-point issues say that the smaller h is, the less accurate your results will be. This is a fight between two competing influences, and the optimal value of h lies somewhere in the middle. In particular, one can work out that for the forward difference formula, the optimal choice of h is x√ε, where ε is machine epsilon, which is roughly 2.2 × 10–16 for double-precision floating-point.

For instance, for estimating f′(x) at x = 3, we should use h = 3√2.2 × 10–16 ≈ 4 × 10–8. We see that this agrees with the table of approximate values we generated above.

In general, if for an nth order method, the optimal h turns out to be xn+1√ε.

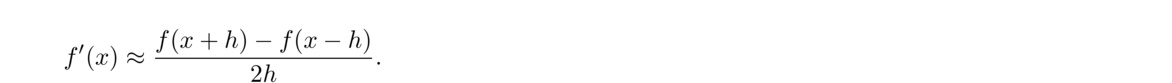

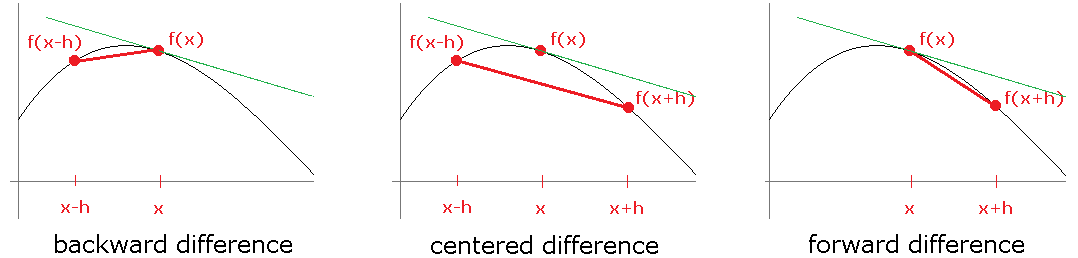

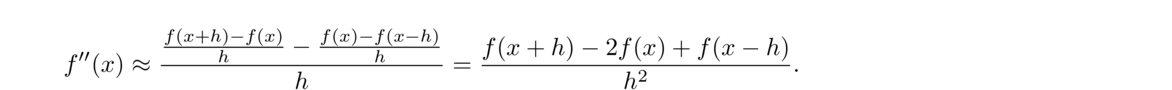

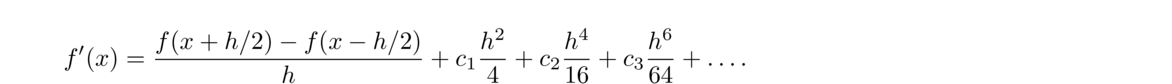

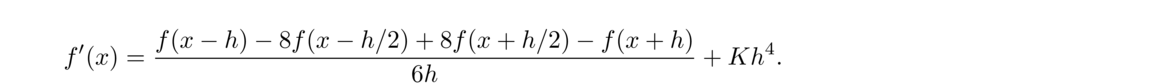

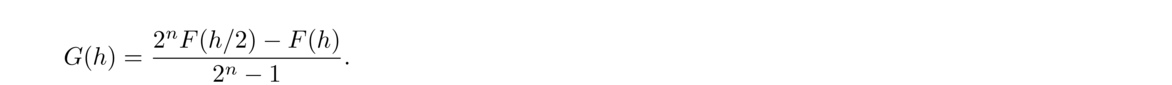

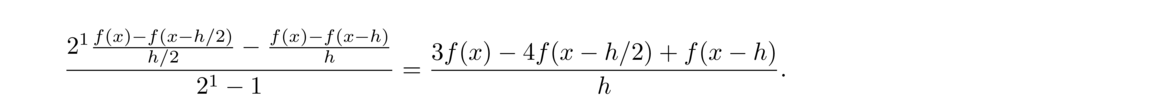

Thinking of f(x+h)–f(x)h as the slope of a secant line, we are led to another secant line approximation:

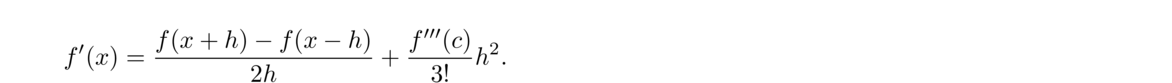

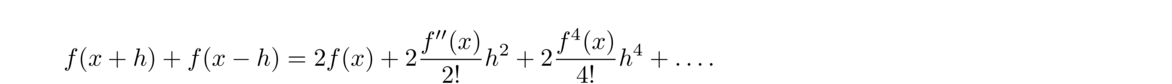

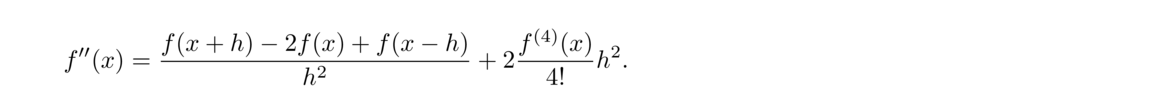

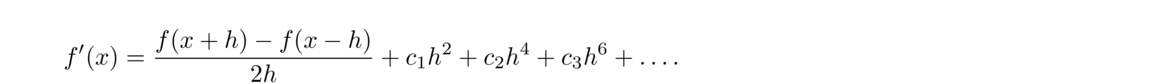

The centered difference formula is actually more accurate than the forward and backward ones. To see why, use Taylor series to write

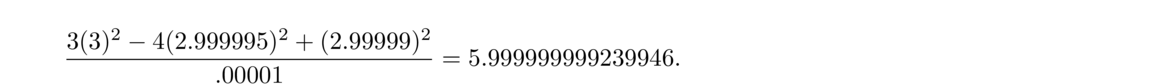

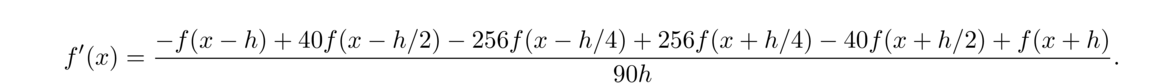

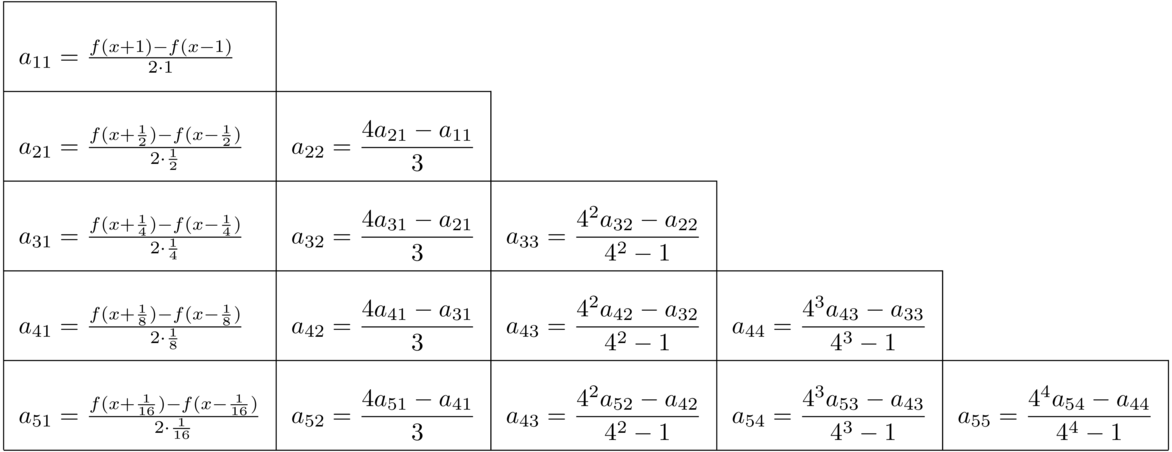

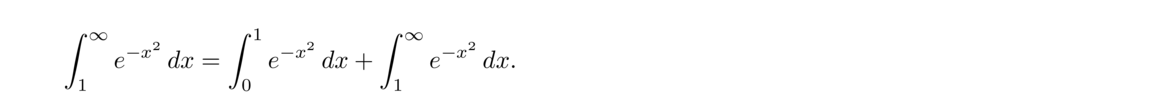

For the sake of comparison, here are the forward difference and centered difference methods applied to approximate the derivative of ex at x = 0 (which we know is equal to 1):