A Simple Introduction to Graph Theory

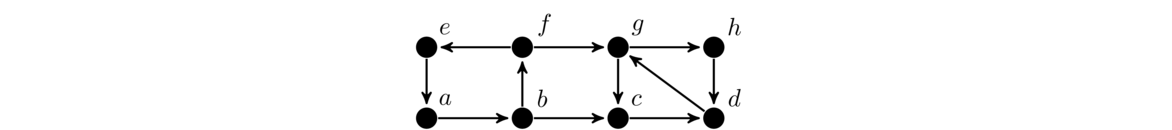

© 2019 Brian Heinold

Licensed under a Creative Commons Attribution-Noncommercial-Share Alike 4.0 Unported License


Preface

These are notes I wrote up for my graph theory class in 2016. They contain most of the topics typically found in a graph theory course. There are proofs of a lot of the results, but not of everything. I've designed these notes for students that don't have a lot of previous experience in math, so I spend some time explaining certain things in more detail than is typical. My writing style is also pretty informal. There are a number of exercises at the end.

If you see anything wrong (including typos), please send me a note at heinold@msmary.edu.

Basics

What is a graph?

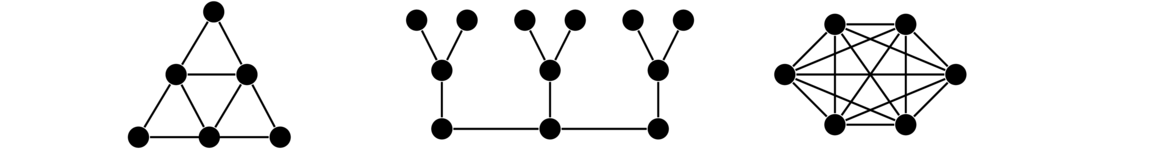

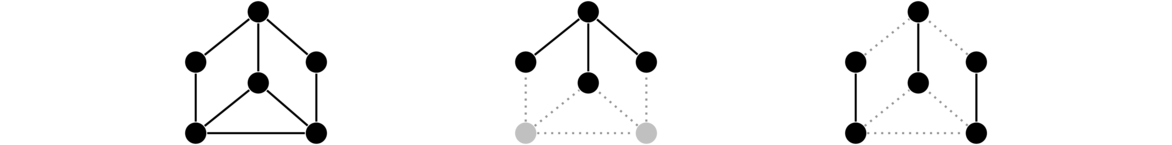

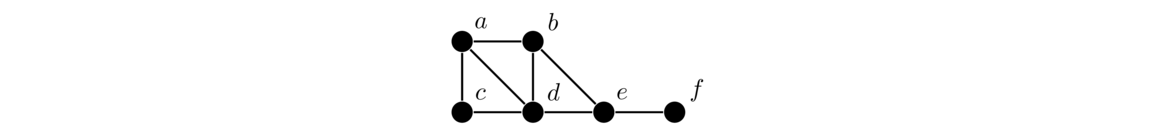

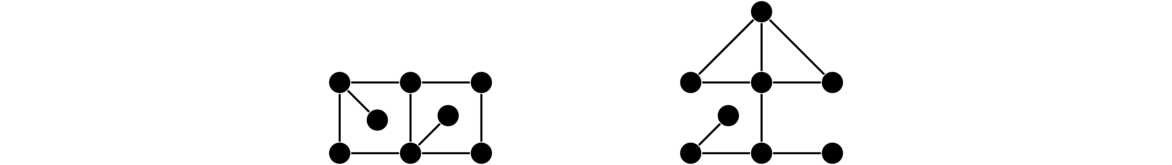

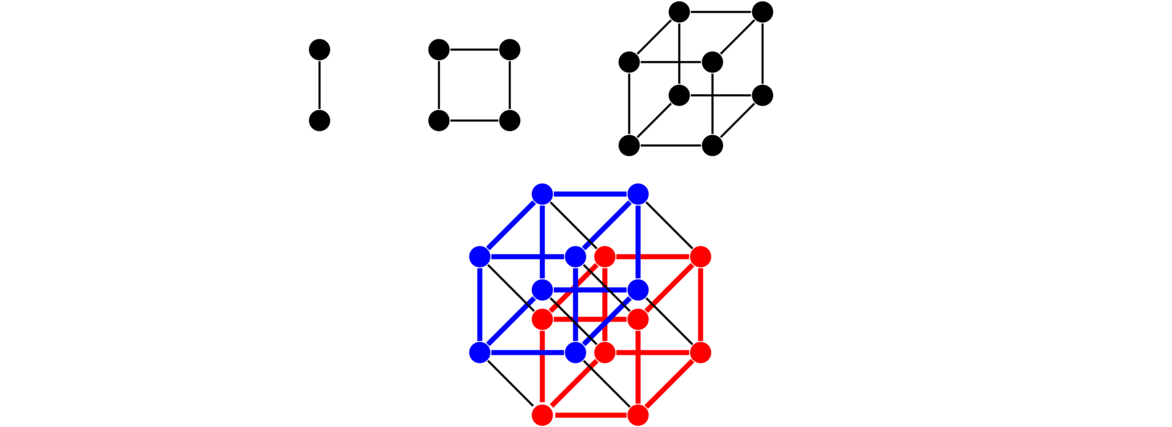

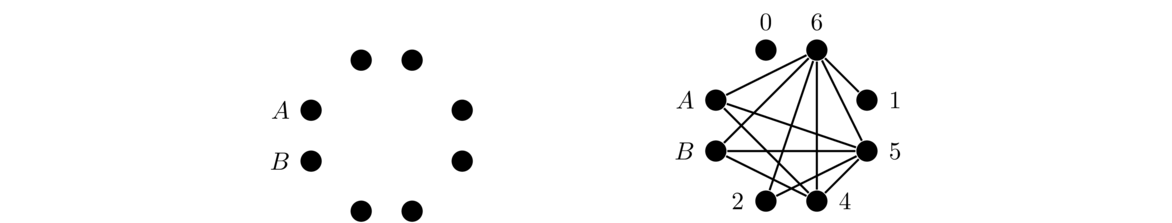

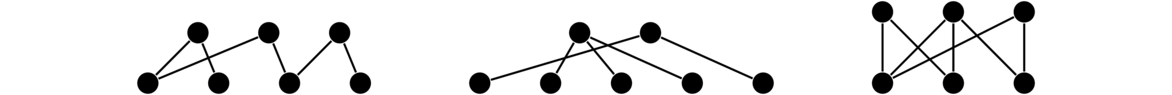

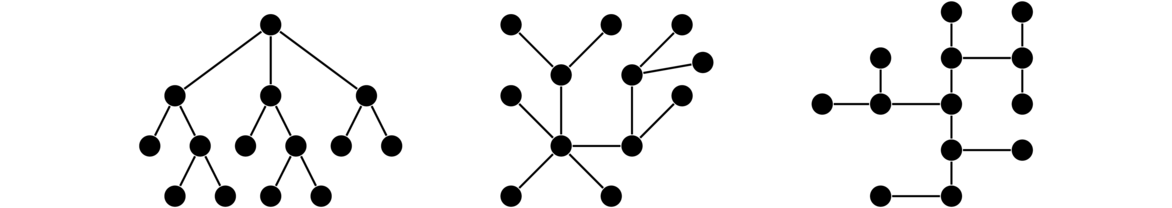

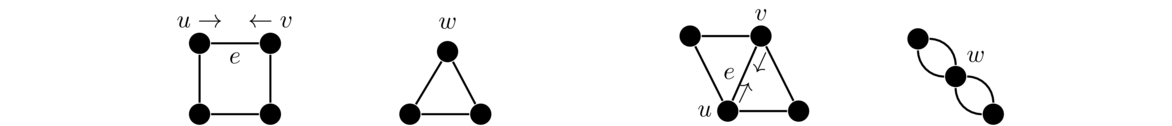

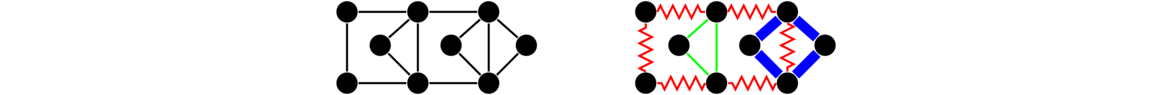

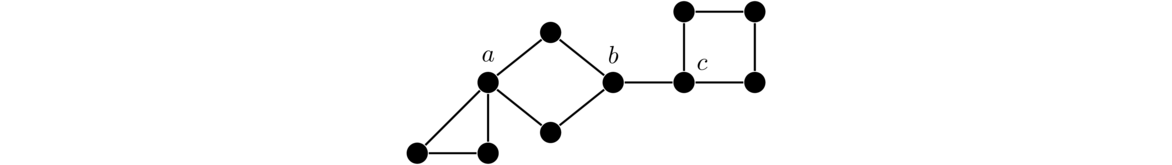

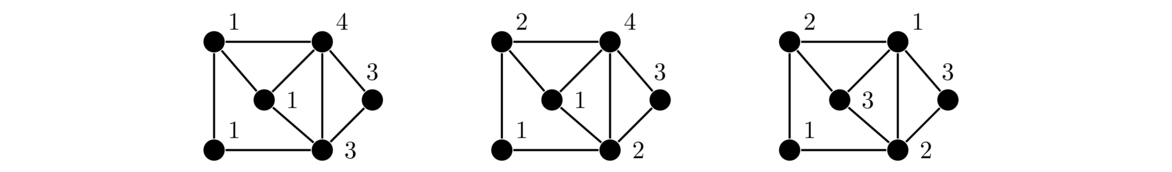

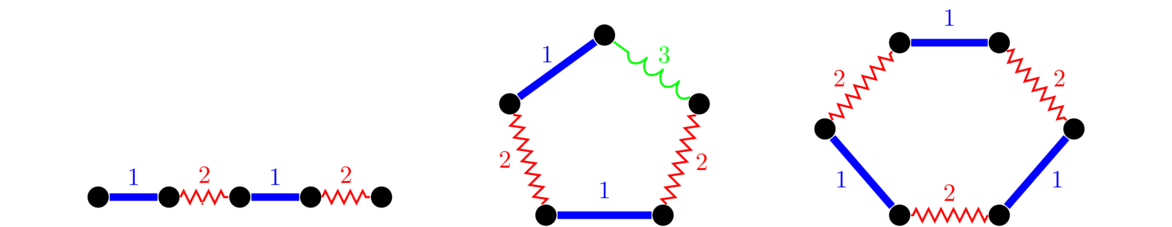

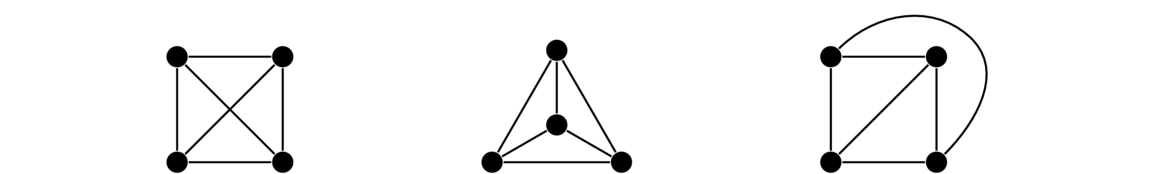

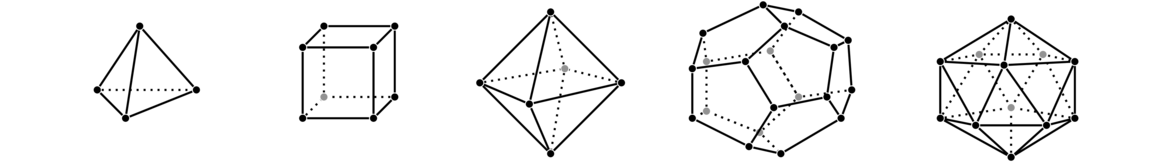

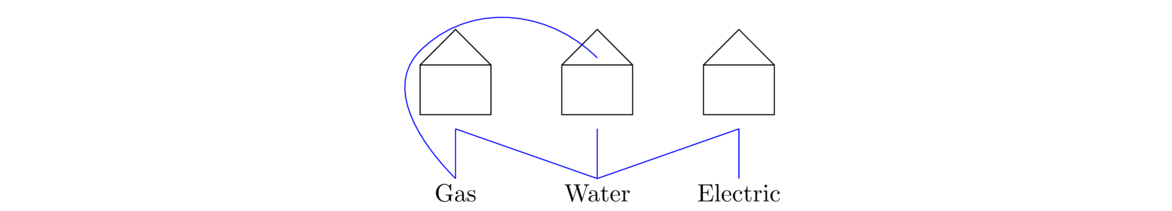

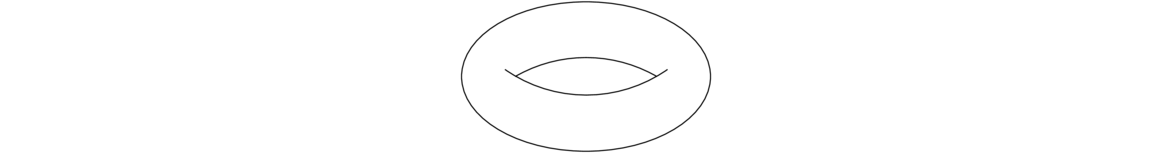

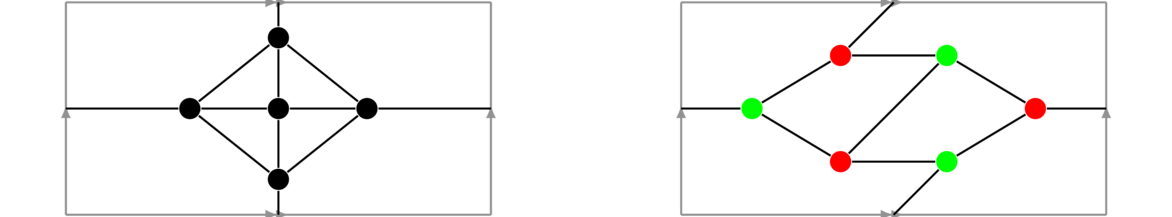

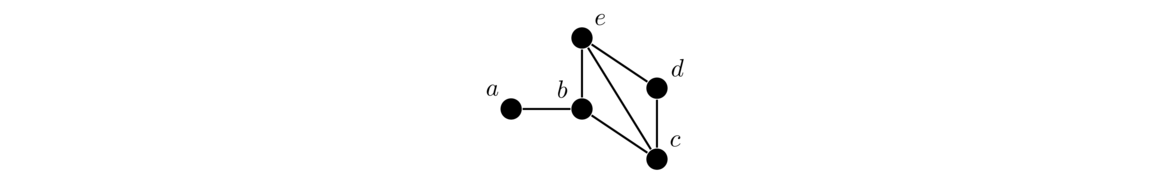

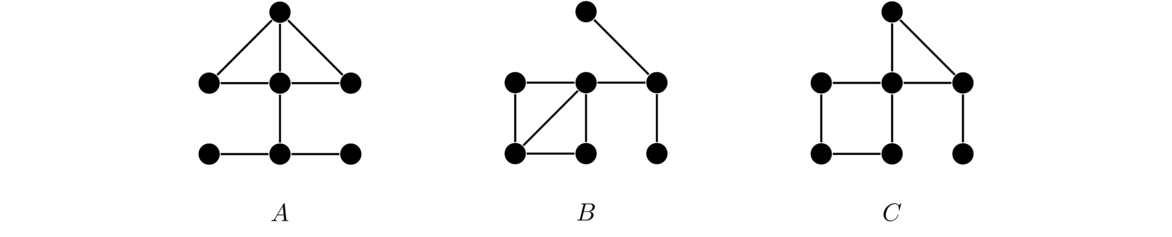

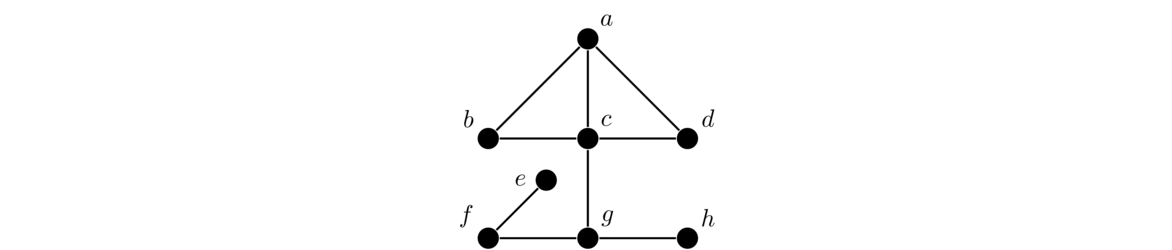

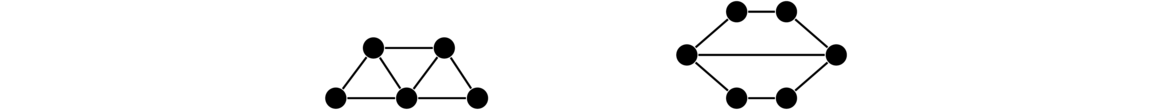

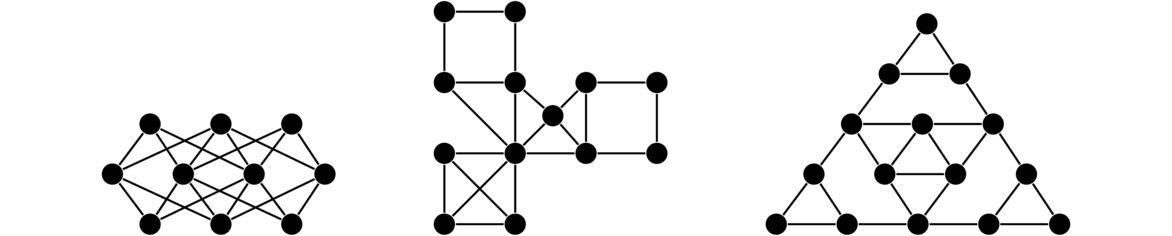

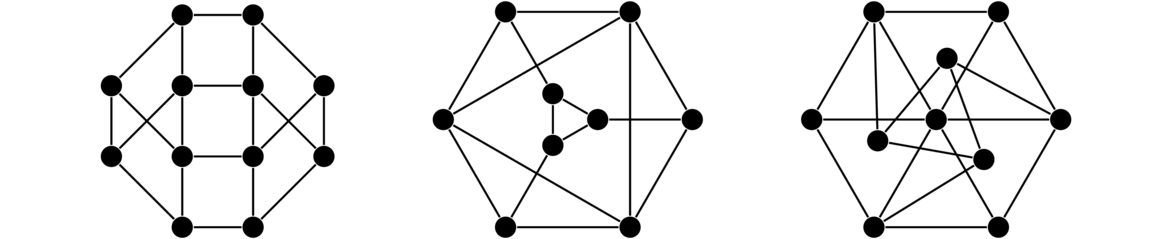

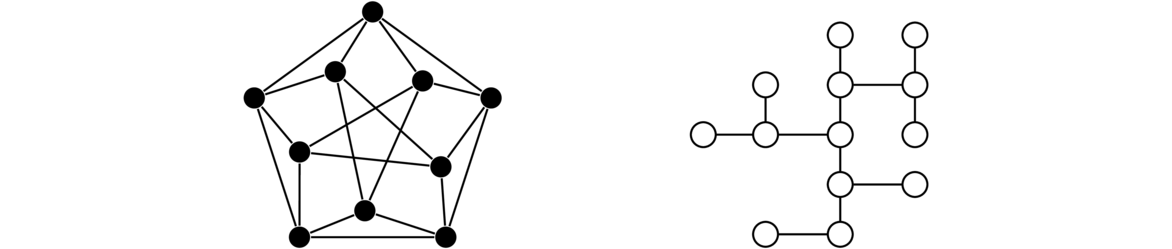

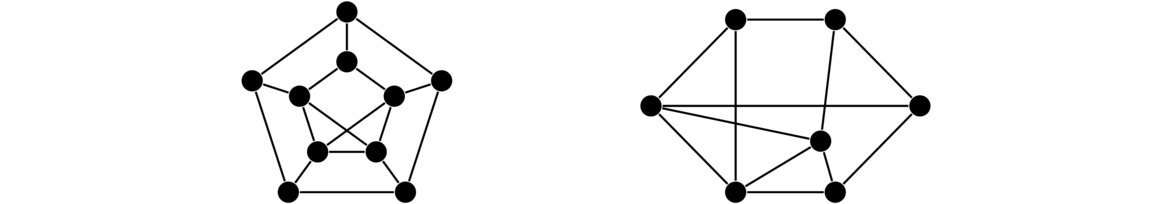

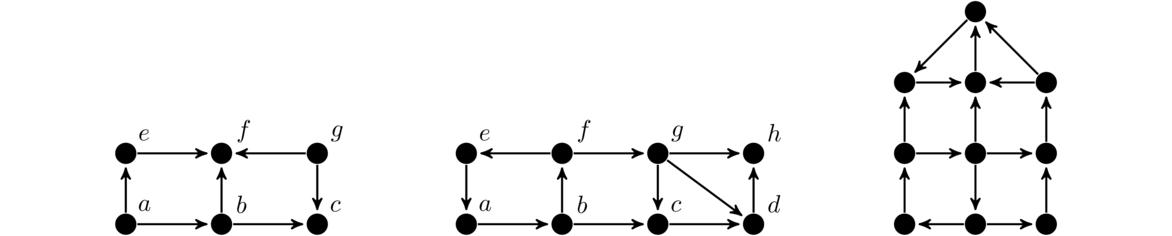

Graphs are networks of points and lines, like the ones shown below.

They are useful in modeling all sorts of real world things, as we will see, as well as being interesting in their own right. While the figure above hopefully gives us a reasonably good idea of what a graph is, it is good to give a formal definition to resolve any ambiguities.

So a graph is defined purely in terms of what its vertices (points) are, and which vertices are connected to which other vertices. Each edge (line) is defined by its two endpoints.

It doesn't matter how you draw the graph; all that matters is what is connected to what.

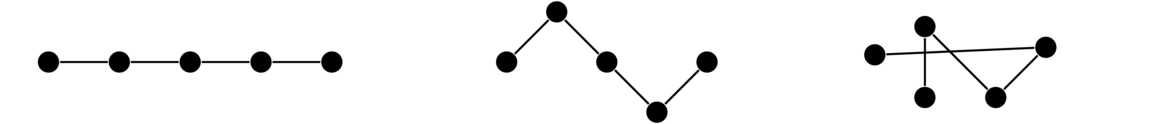

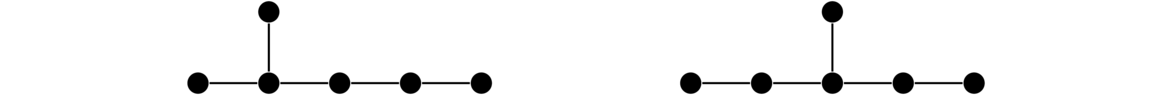

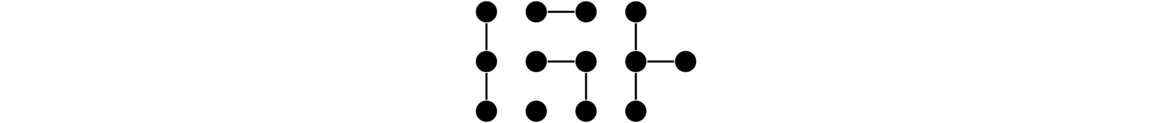

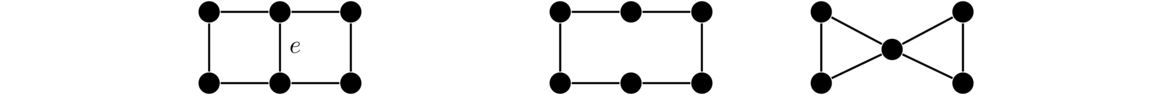

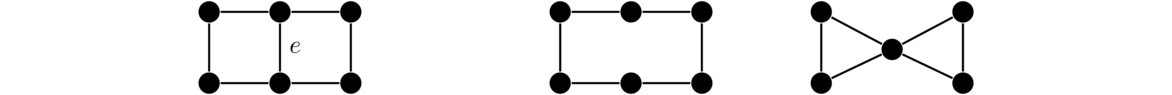

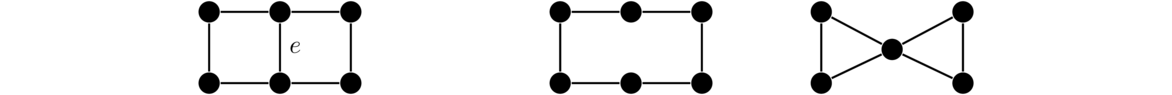

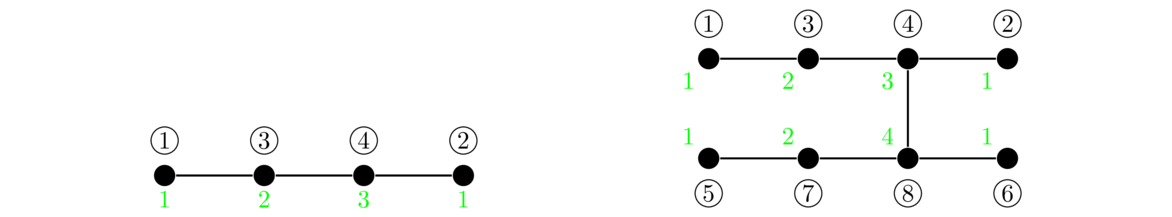

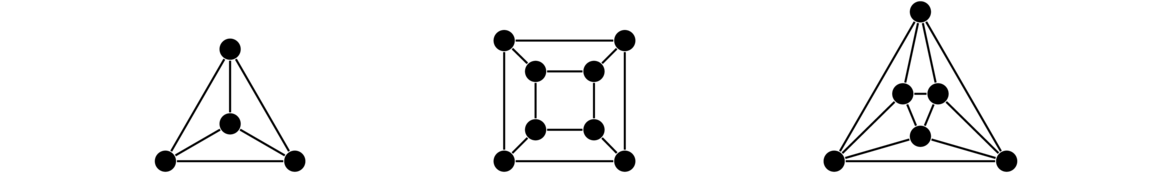

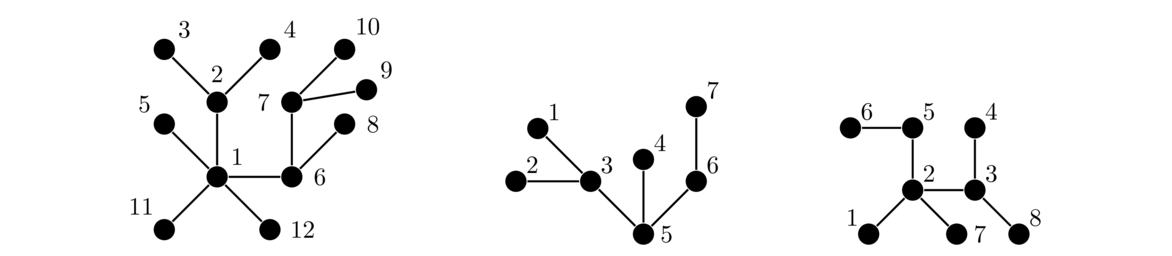

For instance, shown below are several ways of drawing the same graph. Notice that all three have the same structure of a string of five vertices connected in a row.

For the second and third examples above, we could imagine moving vertices around in order to “straighten out” the graph to look like the first example. Notice in the third example that edges are allowed to cross over each other. In fact, some graphs can't be drawn without some edges crossing. Edges are also allowed to curve. Remember that we are only concerned with which vertices are connected to which other ones. We don't care exactly how the edges are drawn.

In fact, we don't even have to draw a graph at all. The graph above can be simply defined by saying its vertices are the set V = {a,b,c,d,e} and its edges are the set E = {ab, bc, cd, de}. However, we will usually draw our graphs instead of defining them with sets like this.
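To make the set-based description concrete, here is a minimal Python sketch of the same five-vertex graph. Storing each edge as a two-element `frozenset`, and the helper name `is_edge`, are choices made for this illustration, not something the notes prescribe.

```python
# Vertex and edge sets of the five-vertex path described in the text.
V = {"a", "b", "c", "d", "e"}
# An edge is an unordered pair of vertices, so a two-element frozenset fits well.
E = {frozenset(pair) for pair in ["ab", "bc", "cd", "de"]}

def is_edge(u, v):
    """Return True if vertices u and v are joined by an edge."""
    return frozenset((u, v)) in E

print(is_edge("a", "b"))  # True
print(is_edge("b", "a"))  # True: the order of the endpoints does not matter
print(is_edge("a", "c"))  # False
```

Nothing in this representation records where the vertices sit on the page, which matches the point that only the connections matter.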

Important terminology and notation

The terms and notations below will show up repeatedly.

- We will usually denote vertices with single letters like u or v. We will denote edges with pairs of letters, like uv to denote the edge between vertices u and v. We will denote graphs with capital letters, like G or H.

- Two vertices that are connected by an edge are said to be adjacent.

- An edge is said to be incident on its two endpoints.

- The neighbors of a vertex are the vertices it is adjacent to.

- The degree of a vertex is the number of edges it is an endpoint of. The notation deg(v) denotes the degree of vertex v.

- The set of vertices of a graph G, called its vertex set, is denoted by V(G). Similarly, the edge set of a graph is denoted by E(G).

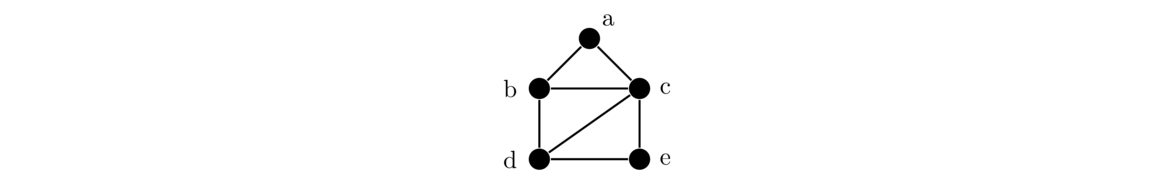

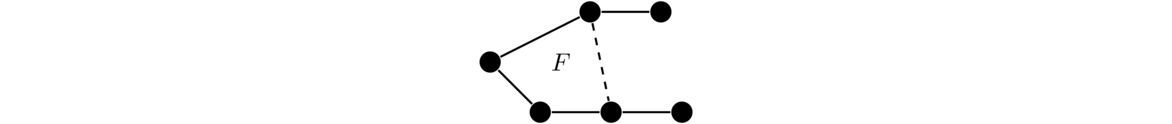

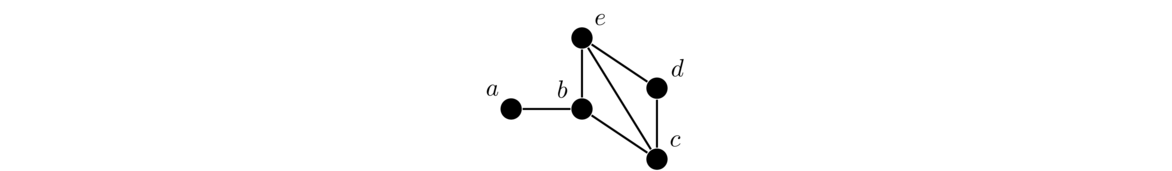

For example, in the graph below, the bottommost edge is between vertices d and e. We denote it as edge de. That edge is incident on d and e. Vertex d is adjacent to vertex e, as well as to vertices b and c. The neighbors of d are b, c, and e. And d has degree 3.
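The terminology above can be checked mechanically. The sketch below uses a hypothetical edge list: only the neighborhood of d (namely b, c, and e) is taken from the example, and the remaining edges are assumptions, since the figure is not reproduced here. The function names are also just illustrative.

```python
# Hypothetical edge list; only d's neighborhood matches the example in the text.
edges = ["ab", "ac", "bd", "cd", "de"]

def neighbors(v, edges):
    """Vertices adjacent to v in a simple graph given as a list of edges."""
    return {u for e in edges if v in e for u in e if u != v}

def degree(v, edges):
    """Number of edges that have v as an endpoint (simple graphs only)."""
    return sum(1 for e in edges if v in e)

print(sorted(neighbors("d", edges)))  # ['b', 'c', 'e']
print(degree("d", edges))             # 3
```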

Finally, in these notes, all graphs are assumed to be finite and nonempty. Infinite graphs are interesting, but add quite a few complications that we won't want to bother with here.

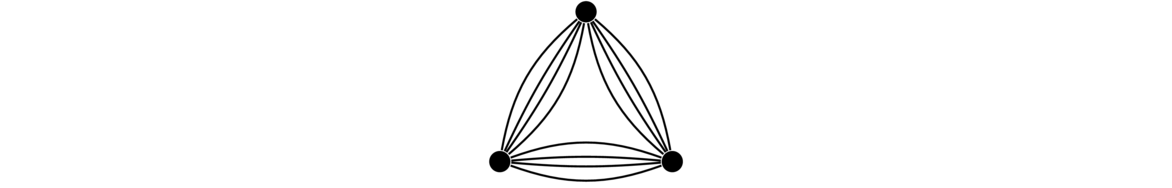

Multigraphs

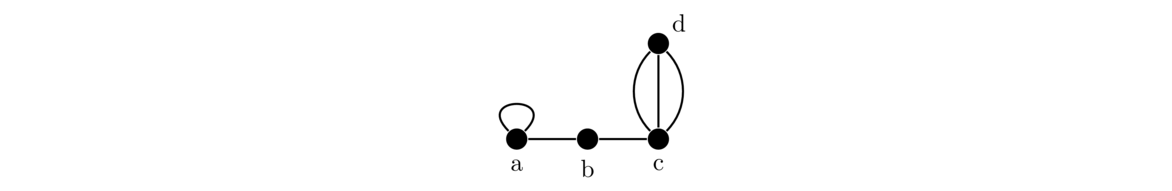

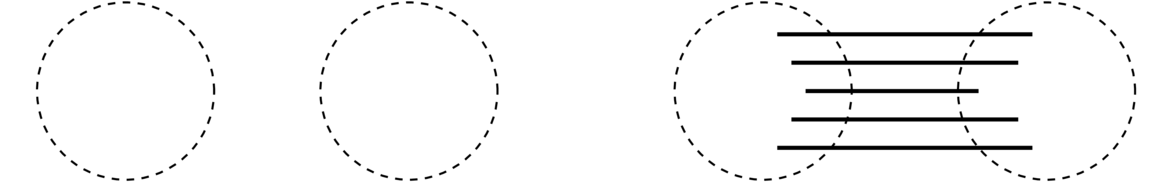

It is possible to have an edge from a vertex to itself. This is called a loop. It is also possible to have multiple edges between the same vertices. We use the term multiple edge to refer to edges that share the same endpoints. In the figure below, there is a loop at vertex a and multiple edges (three of them) between c and d.

Loops and multiple edges cause problems for certain things in graph theory, so we often don't want them. A graph which has no loops or multiple edges is called a simple graph. A graph which may have loops and multiple edges is called a multigraph. In these notes, we often will just refer to something as a graph, hoping it will be clear from the context whether loops and multiple edges are allowed. We will use the terms simple graph and multigraph when we need to be explicitly clear.

Note that loops and multiple edges don't fit our earlier definition of edges as being two-element subsets of the vertex set. Instead, a multigraph is defined as a triple consisting of a vertex set, an edge set, and a relation that assigns each edge two vertices, which may or may not be distinct.

Note also that loops count twice toward the degree of a vertex.
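Here is a small sketch of how degrees work out in a multigraph. The edge list is hypothetical: it has a loop at a and three edges between c and d, as in the example, but the other edges are assumptions. Edges are stored in a list (not a set) so that repeated edges are kept.

```python
# Hypothetical multigraph: a loop at a, and three parallel edges between c and d.
edges = [("a", "a"), ("a", "b"), ("b", "c"), ("c", "d"), ("c", "d"), ("c", "d")]

def degree(v, edges):
    """Count the edge ends at v; a loop (v, v) contributes 2."""
    return sum(e.count(v) for e in edges)

print(degree("a", edges))  # 3: the loop counts twice, plus the edge to b
print(degree("c", edges))  # 4
print(degree("d", edges))  # 3
```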

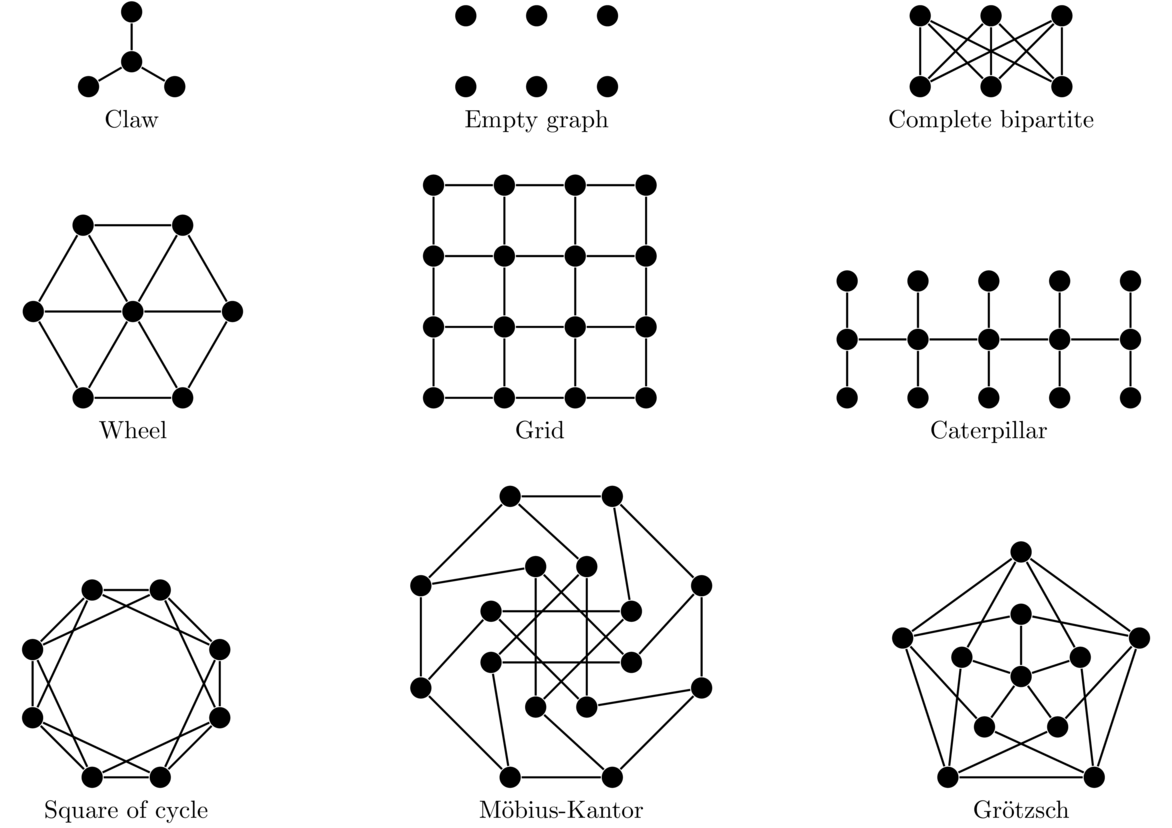

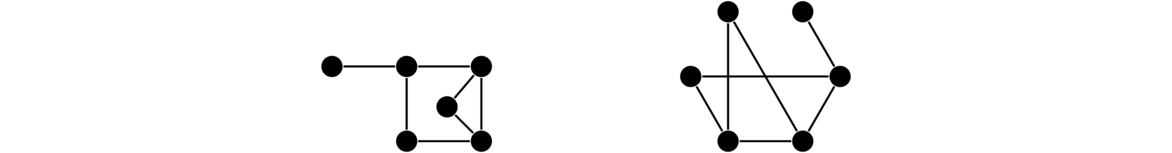

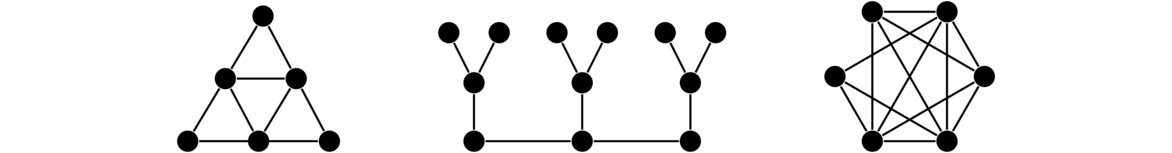

Common graphs

This section is a brief survey of some common types of graphs.

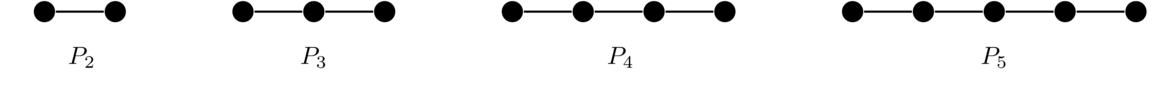

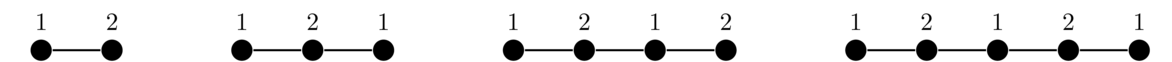

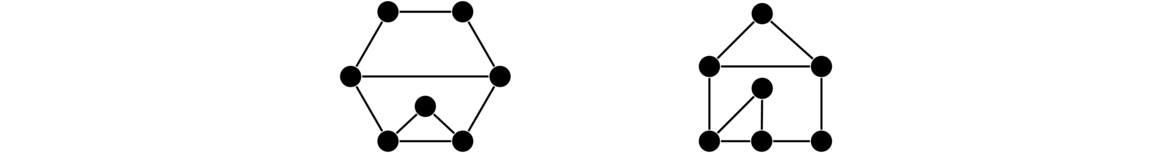

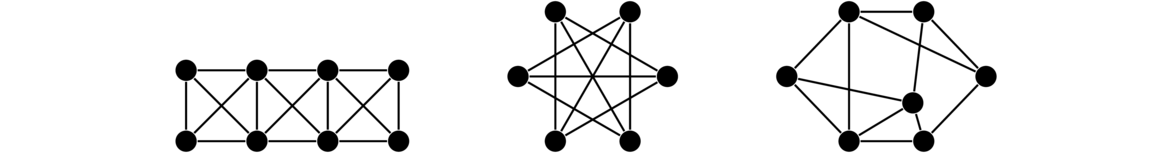

Paths

A graph whose vertices are arranged in a row, like in the examples below, is called a path graph (or often just called a path).

Formally, the path $P_n$ has vertex set $\{v_1, v_2, \dots, v_n\}$ and edge set $\{v_i v_{i+1} : i = 1, 2, \dots, n-1\}$.

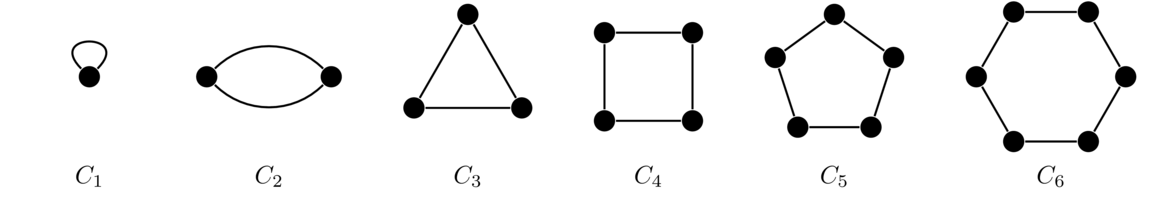

Cycles

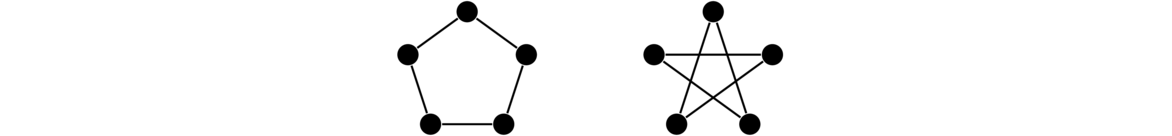

If we arrange vertices around a circle or polygon, like in the examples below, we have a cycle graph (often just called a cycle).

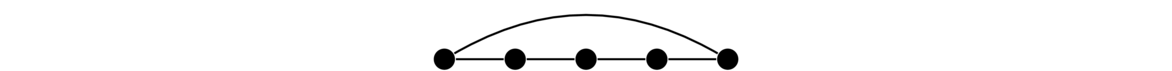

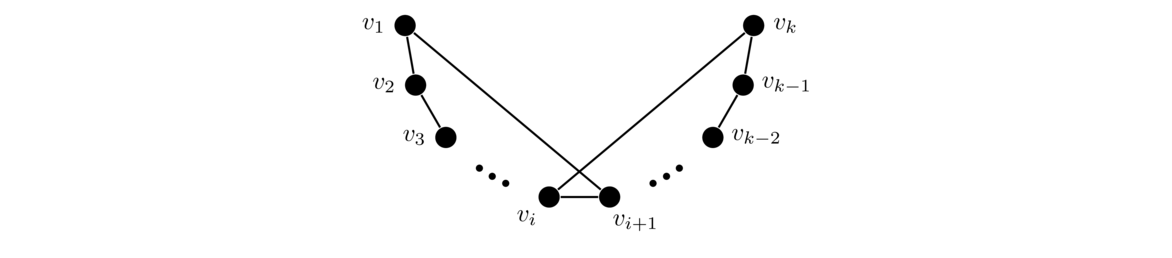

Another way to think of a cycle is as a path where the two ends of the path are connected up, as shown below.

Formally, the cycle $C_n$ has vertex set $\{v_1, v_2, \dots, v_n\}$ and edge set $\{v_i v_{i+1} : i = 1, 2, \dots, n-1\} \cup \{v_n v_1\}$.

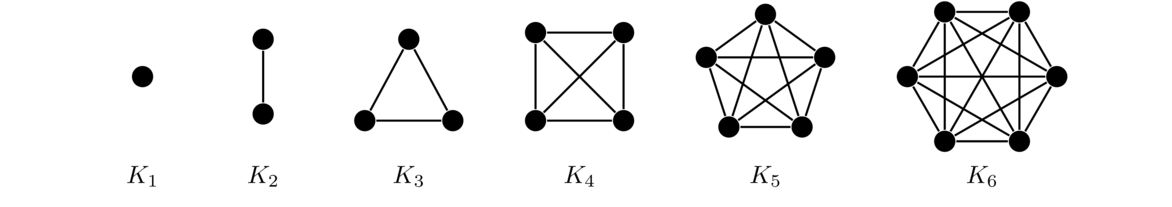

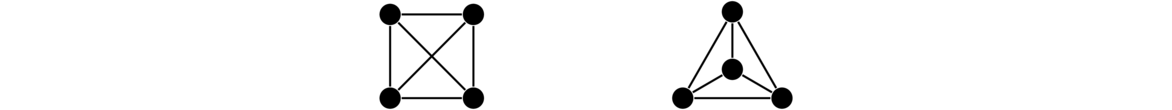

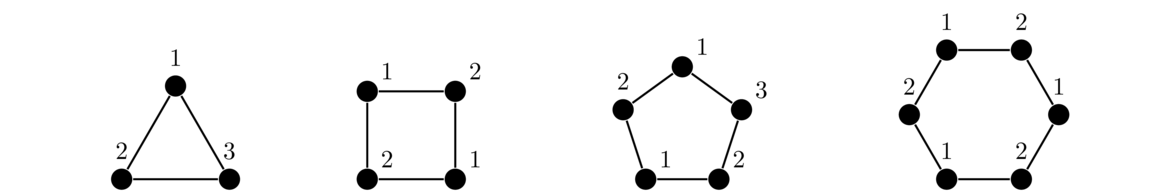

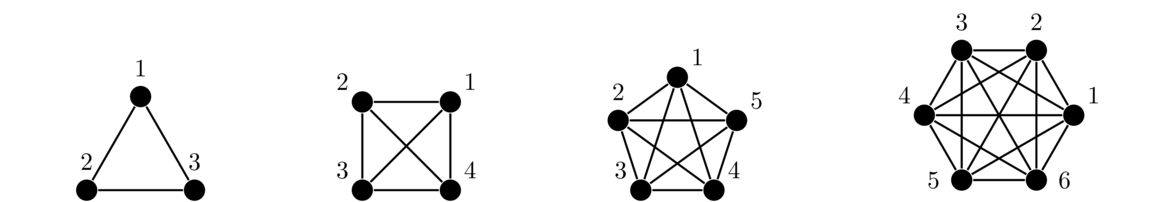

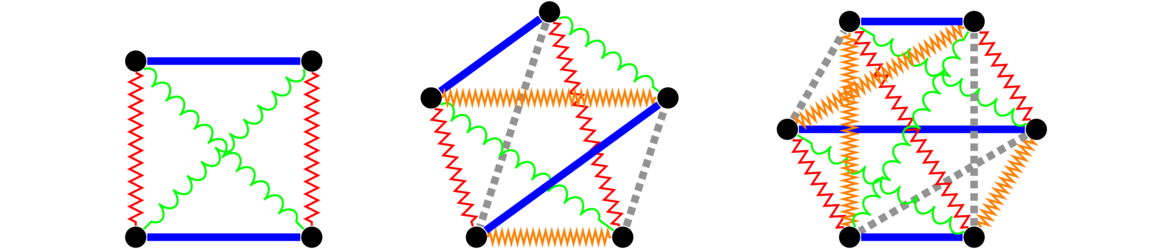

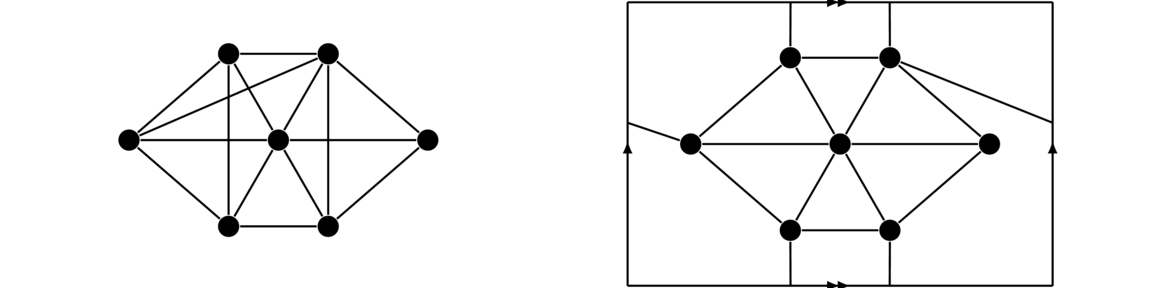

Complete graphs

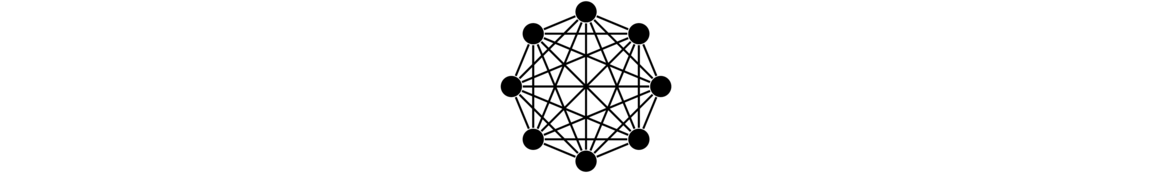

A complete graph is a simple graph in which every vertex is adjacent to every other vertex.

Formally, a complete graph $K_n$ has vertex set $\{v_1, v_2, \dots, v_n\}$ and edge set $\{v_i v_j : 1 \le i < j \le n\}$.

Note: Notice that some graphs can be called by multiple names. For instance, P2 and K2 are the same graph. Similarly, C3 and K3 are the same graph, often called a triangle.
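The formal definitions of paths, cycles, and complete graphs translate almost directly into code. In this sketch the vertices are the integers 1 through n and each function returns only the edge set. The function names are made up for the illustration, and `cycle_graph` assumes n is at least 3.

```python
from itertools import combinations

def path_graph(n):
    """Edges v_i v_{i+1} for i = 1, ..., n-1."""
    return {frozenset((i, i + 1)) for i in range(1, n)}

def cycle_graph(n):
    """A path on n vertices plus the closing edge v_n v_1 (assumes n >= 3)."""
    return path_graph(n) | {frozenset((n, 1))}

def complete_graph(n):
    """Every pair v_i v_j with i < j."""
    return {frozenset(pair) for pair in combinations(range(1, n + 1), 2)}

print(path_graph(2) == complete_graph(2))   # True: P2 and K2 are the same graph
print(cycle_graph(3) == complete_graph(3))  # True: C3 and K3 are the same graph
print(len(complete_graph(5)))               # 10
```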

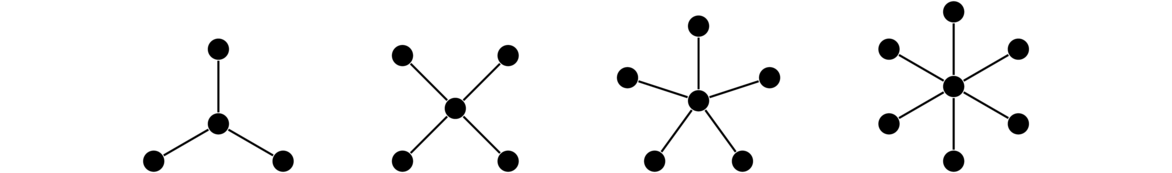

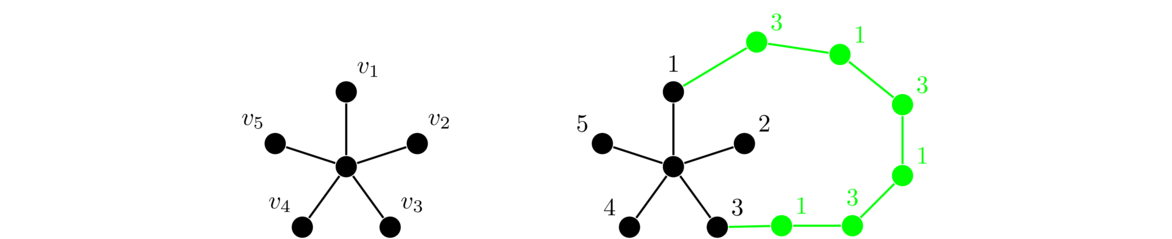

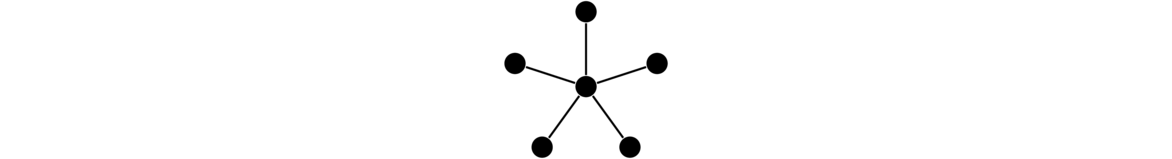

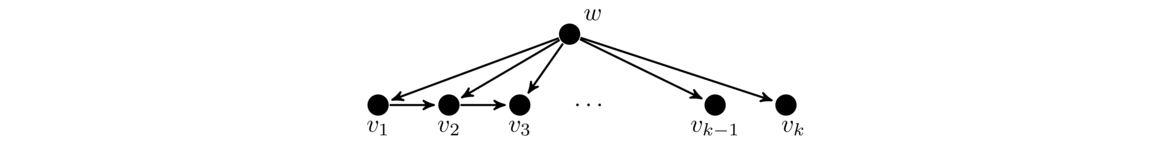

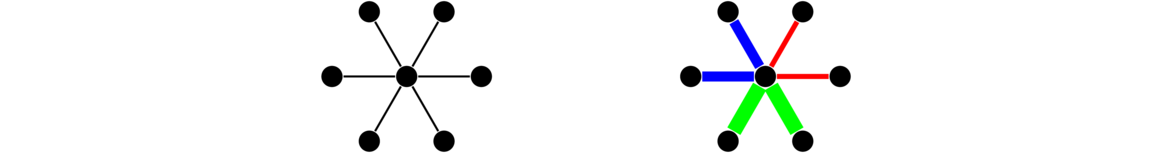

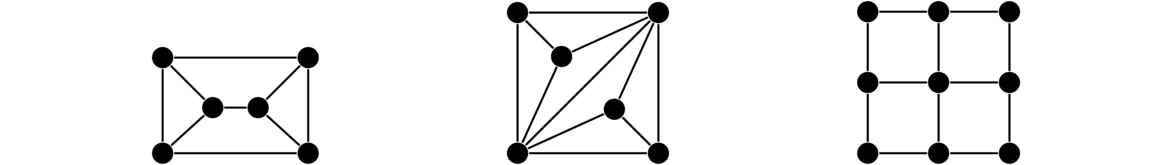

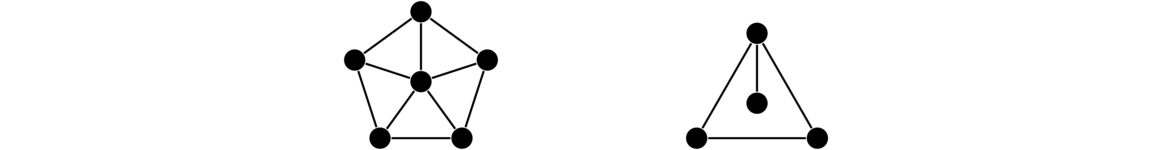

Stars

A star is a graph that consists of a central vertex and zero or more outer vertices of degree 1 all adjacent to the central vertex. There are no other edges or vertices in the graph. Some examples are shown below.

Formally, a star $S_n$ has vertex set $\{v_0\} \cup \{v_i : 1 \le i \le n\}$ and edge set $\{v_0 v_i : 1 \le i \le n\}$.

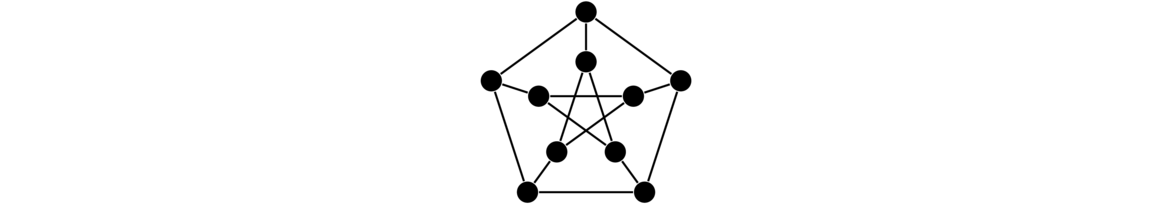

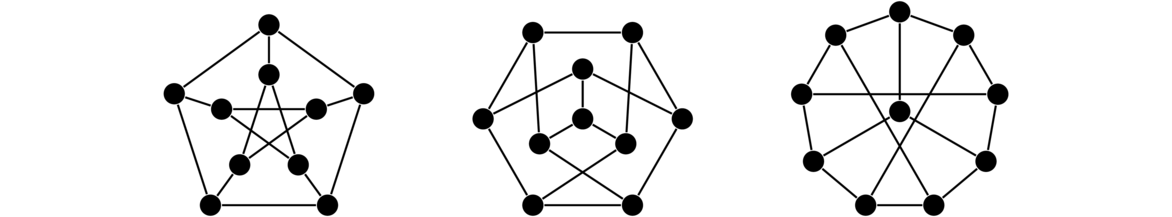

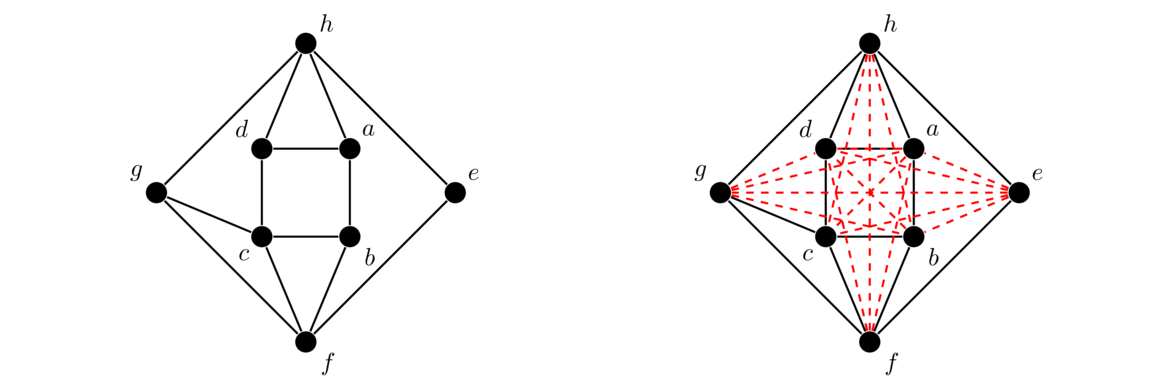

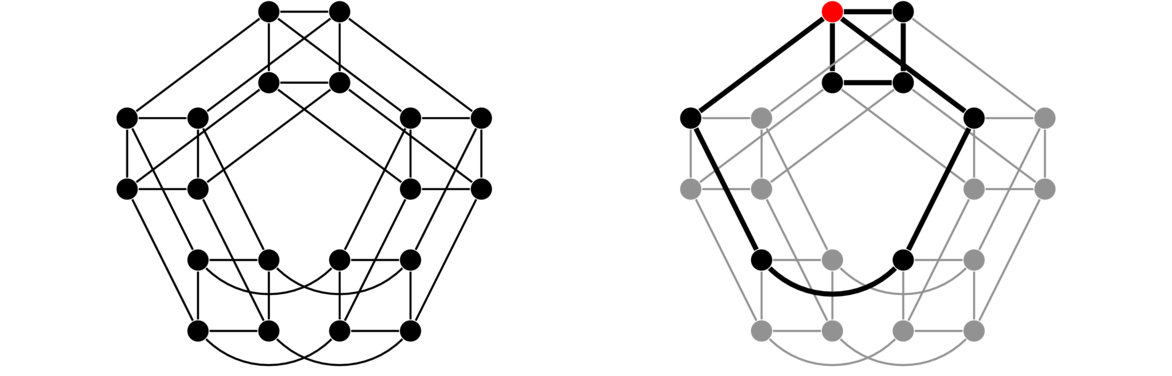

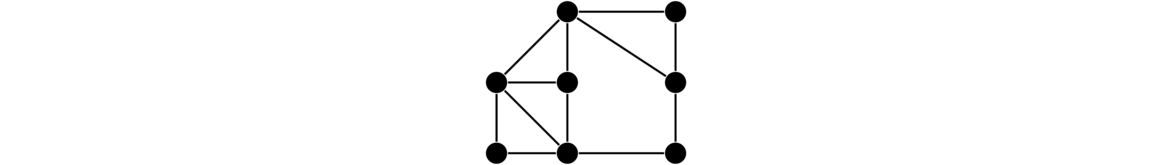

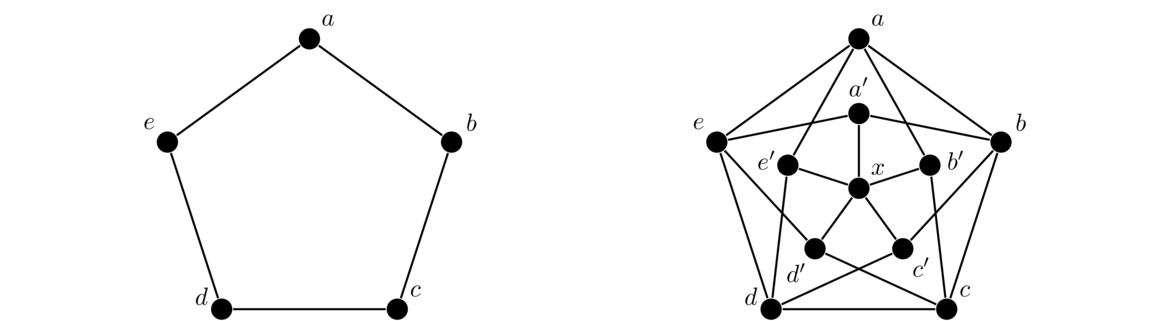

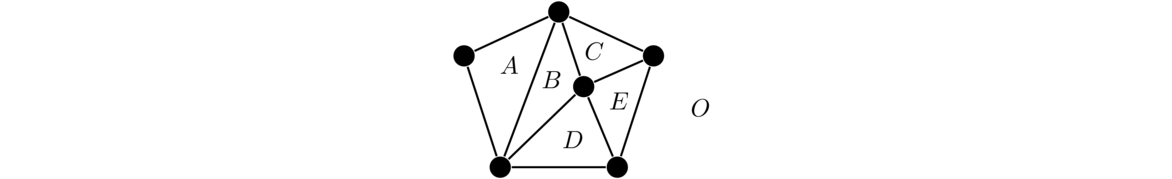

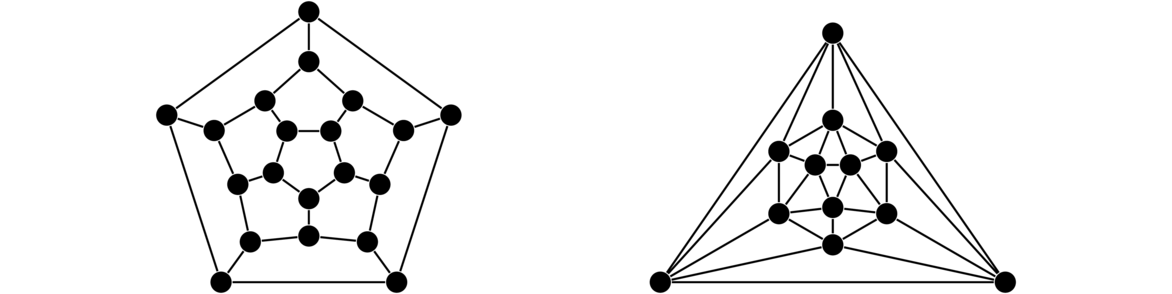

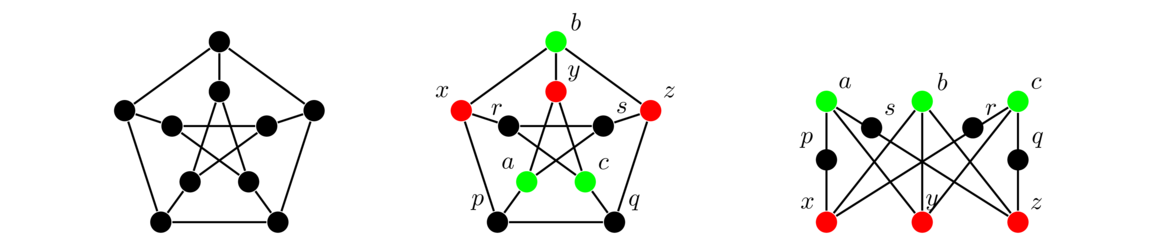

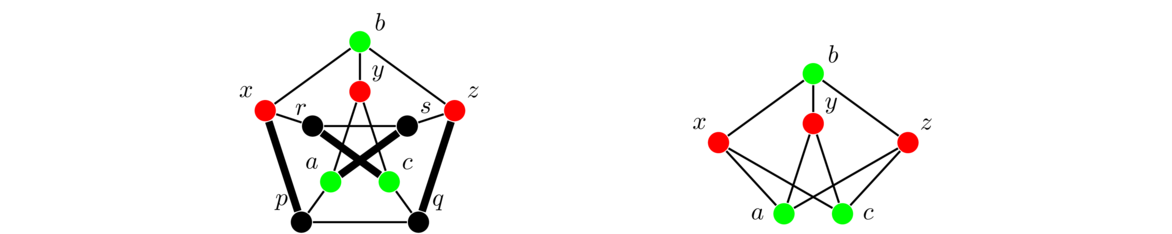

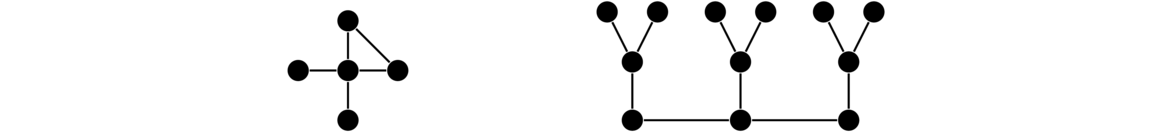

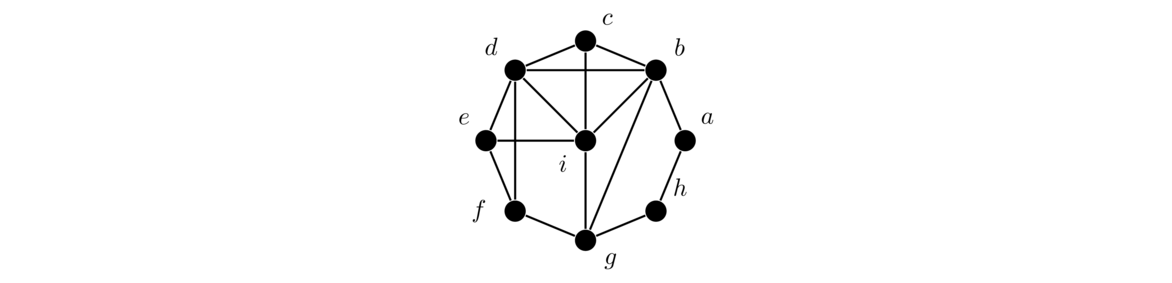

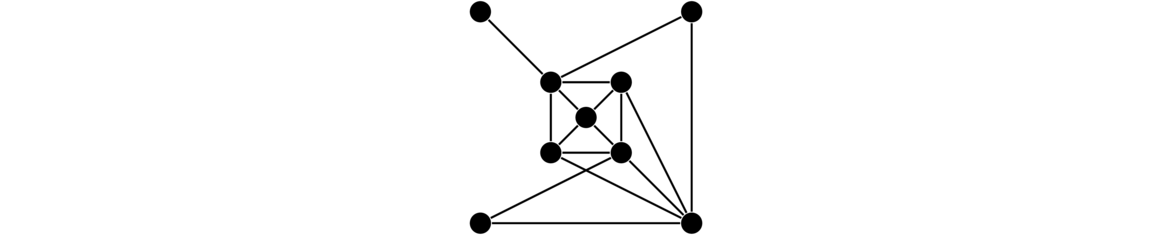

The Petersen graph

The Petersen graph is a very specific graph that shows up a lot in graph theory, often as a counterexample to various would-be theorems. Shown below, we see it consists of an inner and an outer cycle connected in kind of a twisted way.

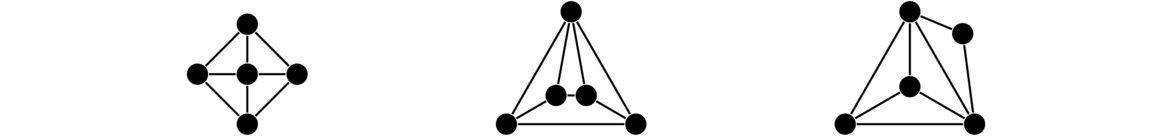

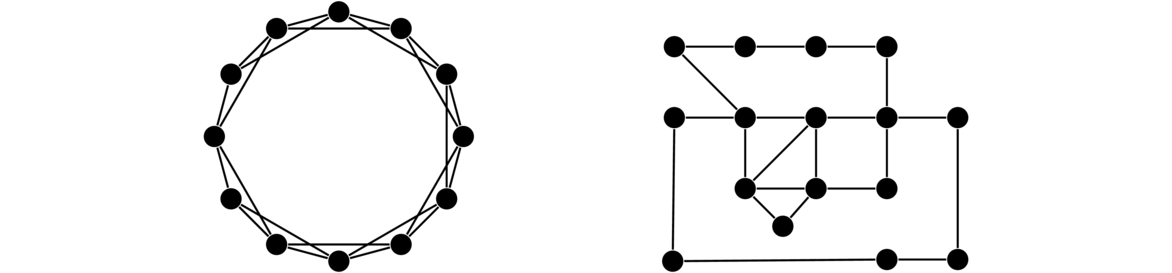

Regular graphs

A regular graph is one in which every vertex has the same degree. The term k-regular is used to denote a graph in which every vertex has degree k. There are many types of regular graphs. Shown below are a 2-regular, a 3-regular, and a 4-regular graph.

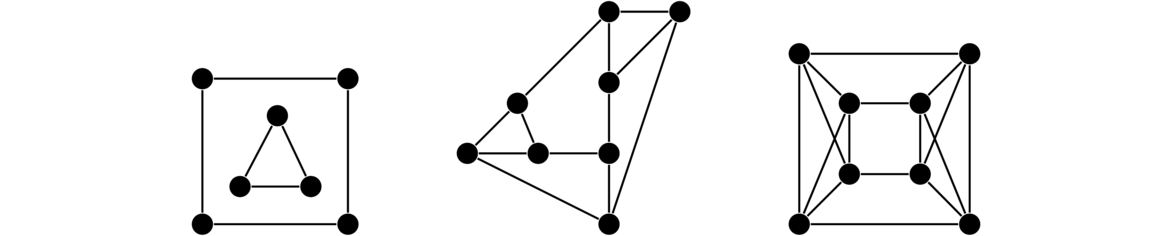

Others

There are various other classes of graphs. A few examples are shown below. Many have fun names like caterpillars and claws. It is not necessary to learn their names at this point.

Subgraphs

A subgraph is part of a graph, where we take some of its vertices and edges. Here is the formal definition.

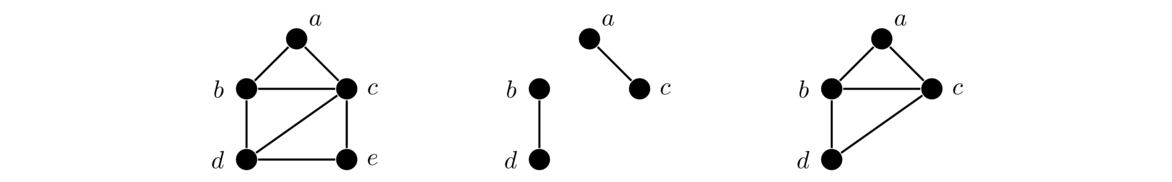

Shown below on the left is a graph. The two graphs to the right are subgraphs of it (with the vertices and edges that are not included in the subgraph shown in a lighter color; they are not part of the subgraph).

Note that if an edge is part of a subgraph, both of its endpoints must be. It doesn't make sense to have an edge without an endpoint.

Induced subgraphs

Often the subgraphs we will be interested in are induced subgraphs, where whenever two vertices are included, if there is an edge in the original graph between those two vertices, then that edge must be included in the subgraph as well.

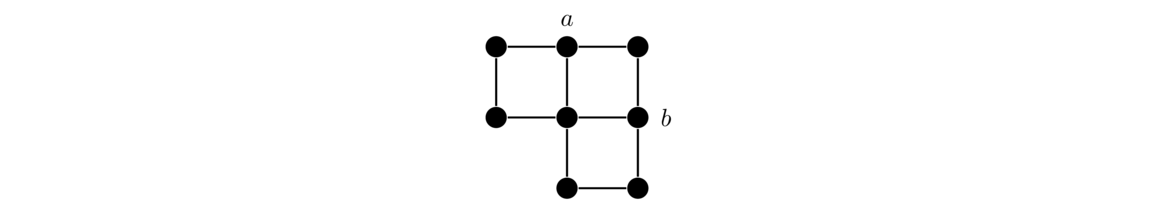

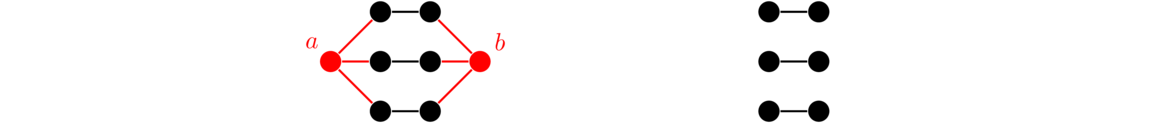

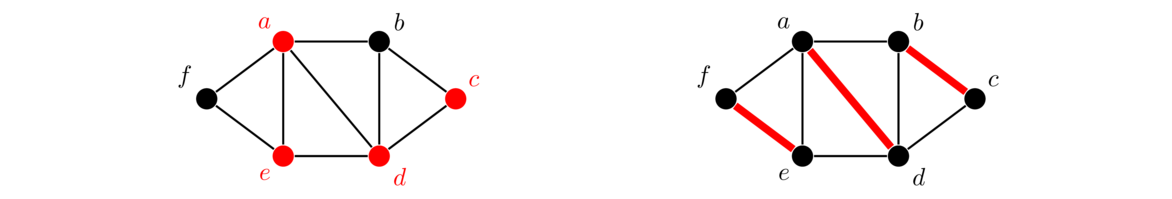

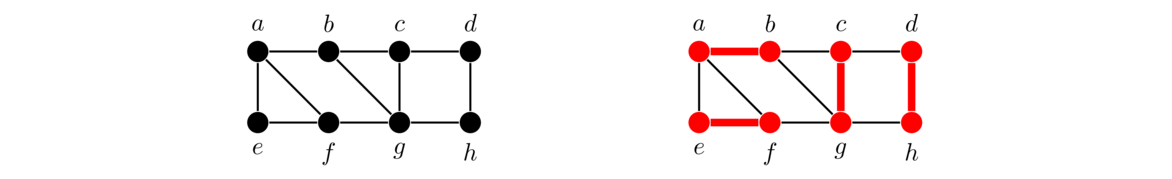

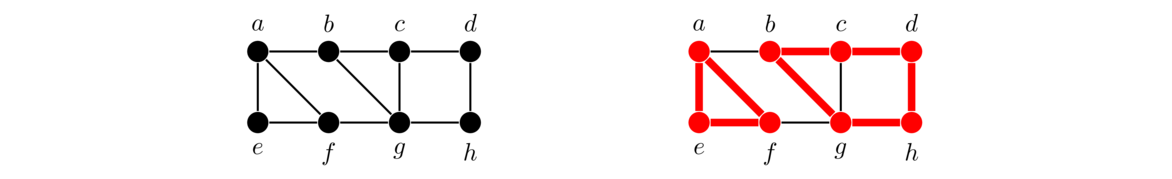

Below on the left we have a graph followed by two subgraphs of it. The first subgraph is not induced since it includes vertices a, b, c, and d, but not all of the edges from the original graph between those vertices, such as edge bc. The second subgraph is induced.
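One way to test whether a subgraph is induced is to compute the induced subgraph on its vertex set and compare. The graph below is a hypothetical stand-in for the one in the figure (a 4-cycle a, b, c, d with an extra vertex e attached to d); the function names are illustrative.

```python
def edge_set(pairs):
    return {frozenset(p) for p in pairs}

def induced_edges(E, S):
    """Edges of the original graph with both endpoints in the vertex set S."""
    return {e for e in E if e <= set(S)}

def is_induced(sub_vertices, sub_edges, E):
    """True if the subgraph contains every original edge between its vertices."""
    return sub_edges == induced_edges(E, sub_vertices)

# Hypothetical graph: the 4-cycle a-b-c-d-a plus the edge de.
E = edge_set(["ab", "bc", "cd", "da", "de"])

# Contains a, b, c, d but leaves out edge bc, so it is not induced.
print(is_induced("abcd", edge_set(["ab", "cd", "da"]), E))  # False
# Contains a, b, c and every original edge among them, so it is induced.
print(is_induced("abc", edge_set(["ab", "bc"]), E))         # True
```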

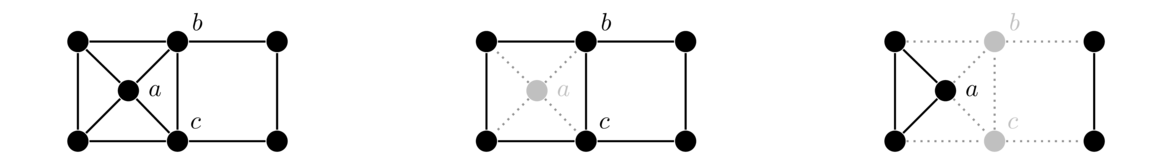

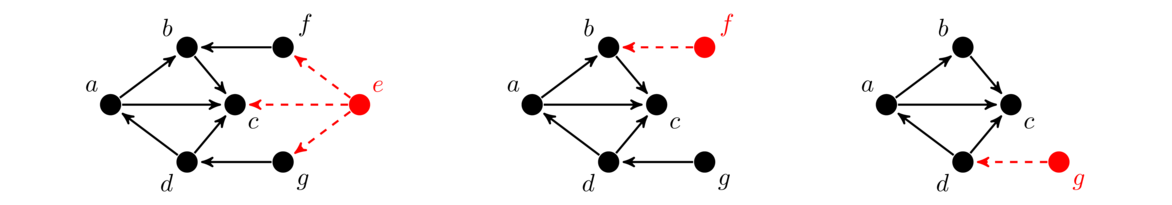

Removing vertices

Quite often, we will want to remove vertices from a graph. Whenever we do so, we must also remove any edges incident on those vertices. Shown below is a graph and two subgraphs obtained by removing vertices.

In the middle graph we have removed the vertex a, which means we have to remove its four edges. In the right graph, we have removed vertices b and c, which has the effect of breaking the graph into two pieces.

If G is a graph and S is a subset of its vertices, we will use the notation G-S for the graph obtained by removing the vertices of S. If S consists of just a single vertex v, we will write G-v instead. And if we are removing just a single edge e from a graph, we will denote that by G-e.
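Here is a rough sketch of the operation G-S, assuming the graph is stored as a dictionary that maps each vertex to its set of neighbors (a representation chosen for this example). Deleting a vertex also deletes every edge incident on it, which here means removing it from every neighbor set.

```python
def remove_vertices(adj, S):
    """Return G - S: drop the vertices in S and all edges incident on them."""
    S = set(S)
    return {v: nbrs - S for v, nbrs in adj.items() if v not in S}

# Hypothetical example: the path a - b - c - d.
adj = {"a": {"b"}, "b": {"a", "c"}, "c": {"b", "d"}, "d": {"c"}}

smaller = remove_vertices(adj, {"b"})
print(sorted(smaller))  # ['a', 'c', 'd']
print(smaller["a"])     # set(): a has lost its only neighbor
```

The original dictionary is left unchanged, so G and G-S can be compared side by side.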

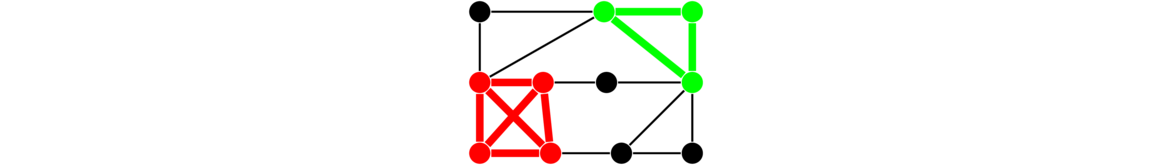

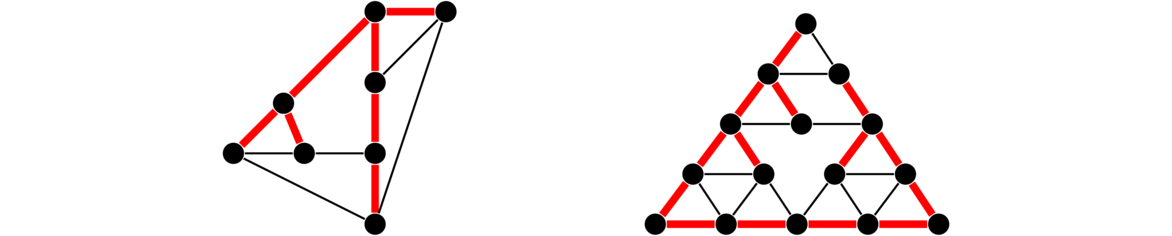

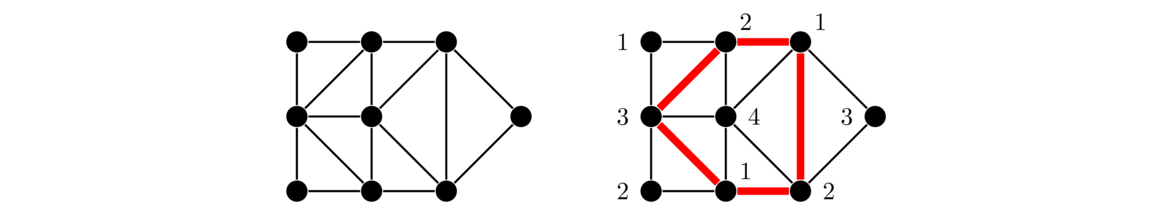

Special subgraphs

We will often be interested in paths and cycles in graphs. Any time we say a graph “contains a path” or “contains a cycle”, what we mean is that it has a subgraph that is a path or a cycle. Examples of a path and a cycle in a graph are highlighted below.

A subgraph that is a complete graph is called a clique. It is a subgraph in which every vertex in the subgraph is adjacent to every other vertex in the subgraph. For example, in the graph below we have a 4-clique in the lower left and a 3-clique (usually called a triangle) in the upper right.

One way to think about cliques is as follows: Suppose we have a graph representing a social network. The vertices represent people, with an edge between two vertices meaning that those people know each other. A clique in this graph is a group of people that all know each other.

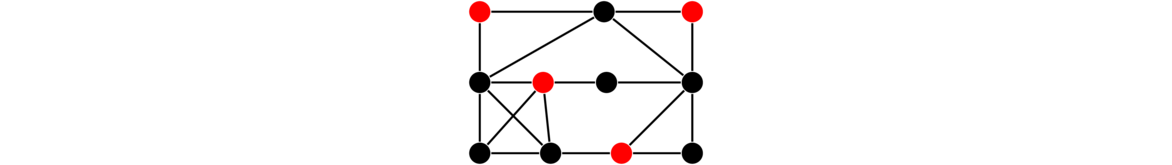

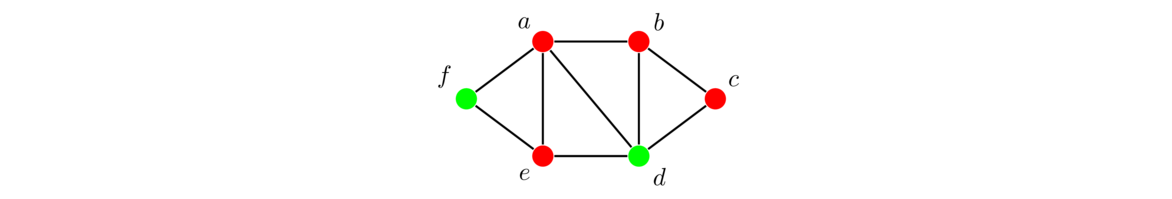

The opposite of a clique is an independent set. It is a collection of vertices in a graph in which no vertex is adjacent to any other. Keeping with the social network example, an independent set would be a collection of people that are all strangers to each other. An independent set is highlighted in the graph below.

Graph theorists are interested in the problem of finding the largest clique and largest independent set in a graph, both of which are difficult to find in large graphs.
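To get a feel for why these problems are hard, here is a brute-force sketch. Checking whether a given set is a clique or an independent set is easy, but the search below tries every subset of vertices, which takes exponential time and is only workable for very small graphs. The example graph and function names are made up for this illustration.

```python
from itertools import combinations

def is_clique(adj, S):
    """Every pair of vertices in S is adjacent."""
    return all(v in adj[u] for u, v in combinations(S, 2))

def is_independent(adj, S):
    """No pair of vertices in S is adjacent."""
    return all(v not in adj[u] for u, v in combinations(S, 2))

def largest_clique(adj):
    """Try subsets from largest to smallest; exponential time."""
    for k in range(len(adj), 0, -1):
        for S in combinations(sorted(adj), k):
            if is_clique(adj, S):
                return set(S)
    return set()

# Hypothetical graph: a triangle a, b, c with an extra vertex d attached to c.
adj = {"a": {"b", "c"}, "b": {"a", "c"}, "c": {"a", "b", "d"}, "d": {"c"}}

print(sorted(largest_clique(adj)))     # ['a', 'b', 'c']
print(is_independent(adj, {"a", "d"}))  # True
print(is_independent(adj, {"c", "d"}))  # False
```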

Connectedness

Shown below on the left is a connected graph and on the right a disconnected graph.

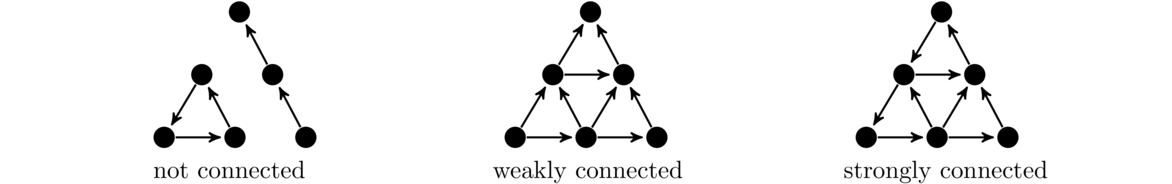

We see intuitively that a disconnected graph is in multiple “pieces,” but how would we define this rigorously? The key feature of a connected graph is that we can get from any vertex to any other. This is how we define connectivity. Formally, we have the following:

To reiterate—the defining feature of a connected graph is that it is possible to get from any vertex to any other. Later on, when we look at proofs, if we want to prove a graph is connected, we will need to show that no matter what vertices we pick, there must be a path between them.

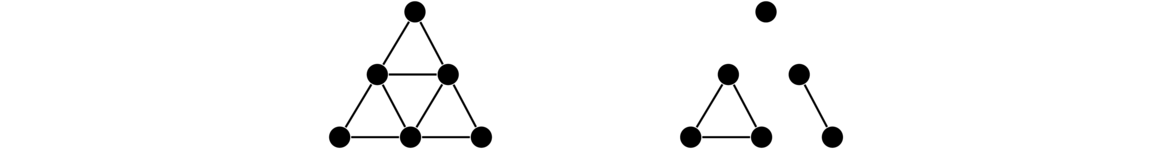

The pieces of a disconnected graph are called its components. For instance, in the disconnected graph above on the right, the three components are the triangle, the two vertices on the right connected by an edge, and the single vertex at the top.

Just like with connectivity, we hopefully have a clear idea of what a piece or component of a graph is, but how would we define it precisely? Before reading on, try to think about how you would define it.

One possible definition might be that a component is a connected subgraph. But that isn't quite right, as in the graph above on the right there are more than 3 connected subgraphs. For instance, the bottom part of the triangle is a connected subgraph but not a component. The trick is that a component is a connected subgraph that is as large as possible—that there is no other vertex that could be added to it and have it still remain connected.

Mathematicians use the term maximal for situations like this. A component is a maximal connected subgraph, one that cannot be made any larger and still remain connected. Here is the formal definition.

Another way to think about a component is that it is a connected subgraph that is not contained in any other connected subgraph.

Note that a connected graph has one component. Also, a component that consists of a single vertex is called an isolated vertex.
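Components can be found by starting at a vertex and collecting everything reachable from it, then repeating from any vertex not yet collected. The sketch below does this with a breadth-first search. The graph is a stand-in for the disconnected example described above (a triangle, two vertices joined by an edge, and an isolated vertex), with vertex labels chosen arbitrarily.

```python
from collections import deque

def components(adj):
    """Return the vertex sets of the components of a graph."""
    seen, comps = set(), []
    for start in adj:
        if start in seen:
            continue
        comp, queue = {start}, deque([start])
        while queue:
            u = queue.popleft()
            for w in adj[u]:
                if w not in comp:
                    comp.add(w)
                    queue.append(w)
        seen |= comp
        comps.append(comp)
    return comps

def is_connected(adj):
    return len(components(adj)) == 1

# Triangle 1, 2, 3; edge 4-5; isolated vertex 6.
adj = {1: {2, 3}, 2: {1, 3}, 3: {1, 2}, 4: {5}, 5: {4}, 6: set()}

print(len(components(adj)))  # 3
print(is_connected(adj))     # False
```

Each set returned is maximal in the sense described above: the search only stops when no further vertex can be reached.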

Other important terminology

Cut vertices and cut edges

Here are two important types of vertices and edges.

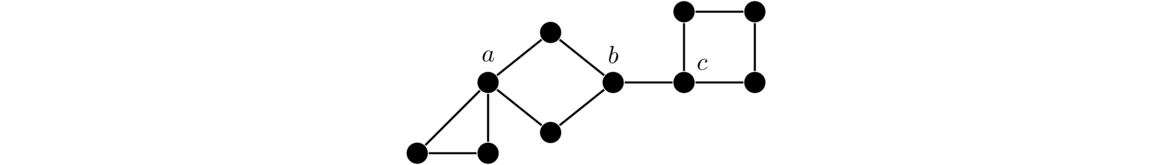

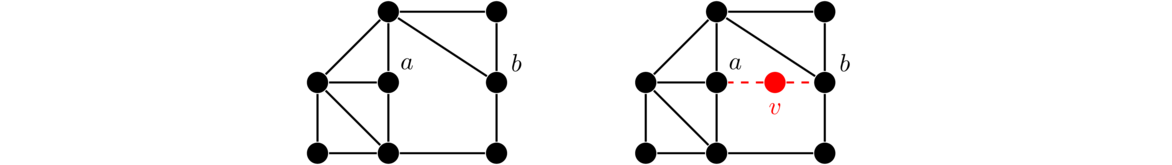

In particular, removing a cut vertex or a cut edge from a connected graph will disconnect the graph. For example, in the graph below on the left, a, b, and c are cut vertices, as deleting any one of them would disconnect the graph. Similarly, since deleting edge bc disconnects the graph, that makes bc a cut edge. There are no other cut vertices or cut edges in the graph.

Cut vertices and cut edges act like bottlenecks when it comes to connectivity. For instance, any path in the graph above from the leftmost to the rightmost vertices must pass through a, b, c, and the edge bc.

Removing a cut vertex could break a connected graph into two components or possibly more. For instance, removing the center of a star will break it into many components. Removing a cut edge can only break a connected graph into two components.
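A direct, if slow, way to find cut vertices and cut edges is to delete each one in turn and see whether the number of components goes up. That test is the working definition assumed in this sketch. The example graph (two triangles joined by a single edge) is hypothetical and is not the graph from the figure.

```python
def adjacency(edges):
    adj = {}
    for u, v in edges:
        adj.setdefault(u, set()).add(v)
        adj.setdefault(v, set()).add(u)
    return adj

def count_components(adj):
    seen, count = set(), 0
    for start in adj:
        if start in seen:
            continue
        count += 1
        seen.add(start)
        stack = [start]
        while stack:
            u = stack.pop()
            for w in adj[u]:
                if w not in seen:
                    seen.add(w)
                    stack.append(w)
    return count

def cut_vertices(adj):
    base = count_components(adj)
    result = set()
    for v in adj:
        rest = {u: nbrs - {v} for u, nbrs in adj.items() if u != v}
        if count_components(rest) > base:
            result.add(v)
    return result

def cut_edges(adj):
    base = count_components(adj)
    result = set()
    for u in adj:
        for v in adj[u]:
            rest = {x: set(nbrs) for x, nbrs in adj.items()}
            rest[u].discard(v)
            rest[v].discard(u)
            if count_components(rest) > base:
                result.add(frozenset((u, v)))
    return result

# Hypothetical graph: triangles 1,2,3 and 4,5,6 joined by the edge 3-4.
adj = adjacency([(1, 2), (2, 3), (1, 3), (3, 4), (4, 5), (5, 6), (4, 6)])

print(sorted(cut_vertices(adj)))              # [3, 4]
print([sorted(e) for e in cut_edges(adj)])    # [[3, 4]]
```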

Distance

In a graph, the distance between two vertices is a measure of how far apart they are.

For example, in the graph below, b, c, and d are at a distance of 1 from a. Vertex e is at a distance of 2 from a, and vertex f is at a distance of 3 from a.

Notice, for instance, that there are multiple paths from a to d, but the shortest path is of length 1, so that is the distance.
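Distances can be computed with a breadth-first search, which visits vertices in order of how far they are from the starting vertex. This sketch treats distance as the length of a shortest path, as in the example. The graph is hypothetical, chosen only so that the distances from a match the ones quoted above.

```python
from collections import deque

def distances_from(adj, source):
    """Shortest-path distance from source to every vertex reachable from it."""
    dist = {source: 0}
    queue = deque([source])
    while queue:
        u = queue.popleft()
        for w in adj[u]:
            if w not in dist:          # first visit gives the shortest distance
                dist[w] = dist[u] + 1
                queue.append(w)
    return dist

# Hypothetical graph with edges ab, ac, ad, cd, de, ef.
adj = {
    "a": {"b", "c", "d"}, "b": {"a"}, "c": {"a", "d"},
    "d": {"a", "c", "e"}, "e": {"d", "f"}, "f": {"e"},
}

print(sorted(distances_from(adj, "a").items()))
# [('a', 0), ('b', 1), ('c', 1), ('d', 1), ('e', 2), ('f', 3)]
```

Note that a-c-d is also a path from a to d, but the search records the shorter route of length 1.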

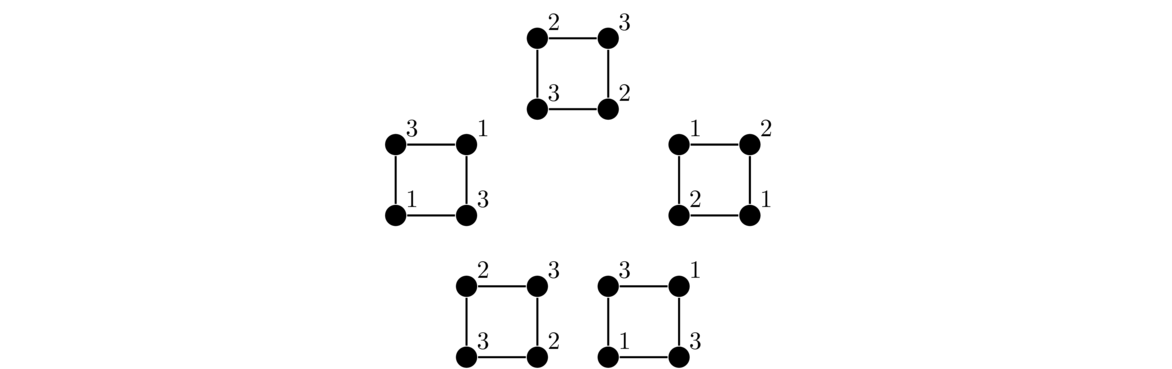

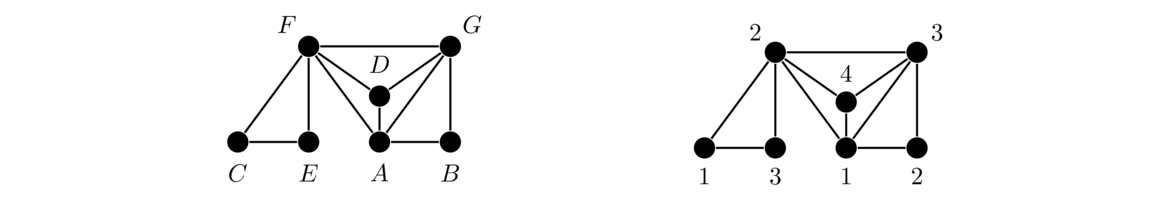

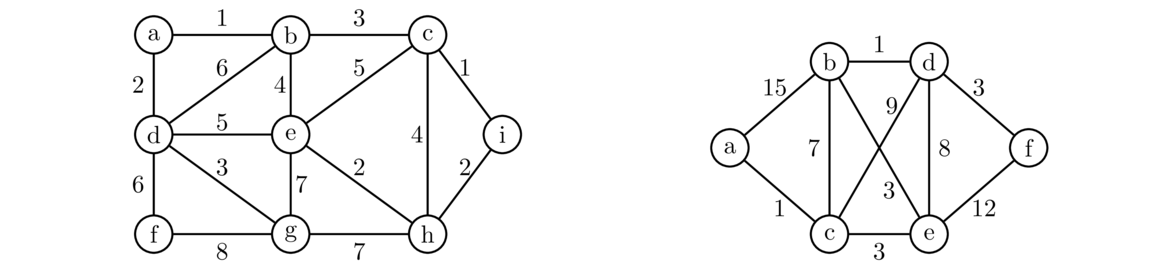

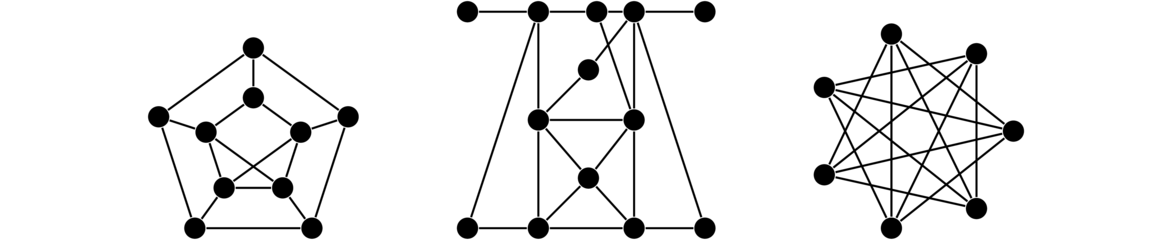

Graph isomorphism

The term isomorphism literally means “same shape.” It comes up in many different branches of math. In graph theory, when we say two graphs are isomorphic, we mean that they are really the same graph, just drawn or presented possibly differently. For example, the figure below shows two graphs which are isomorphic to each other.

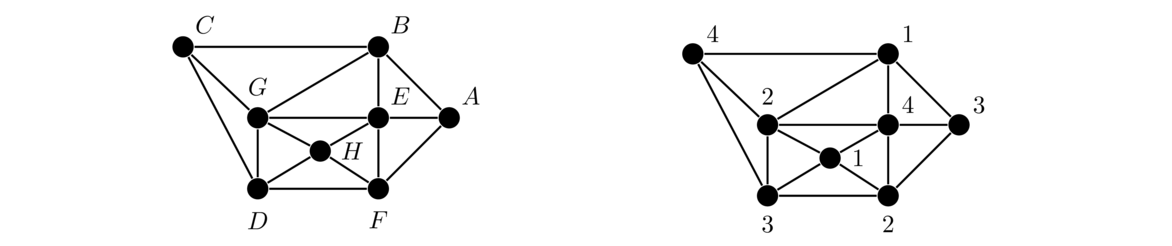

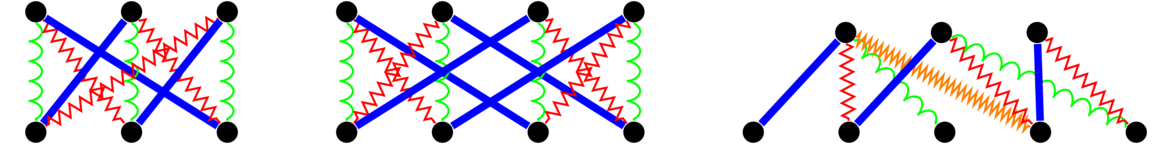

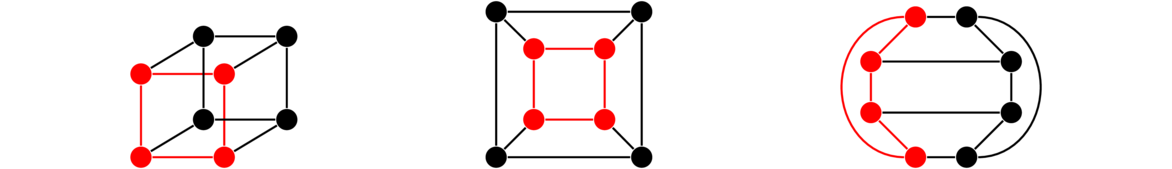

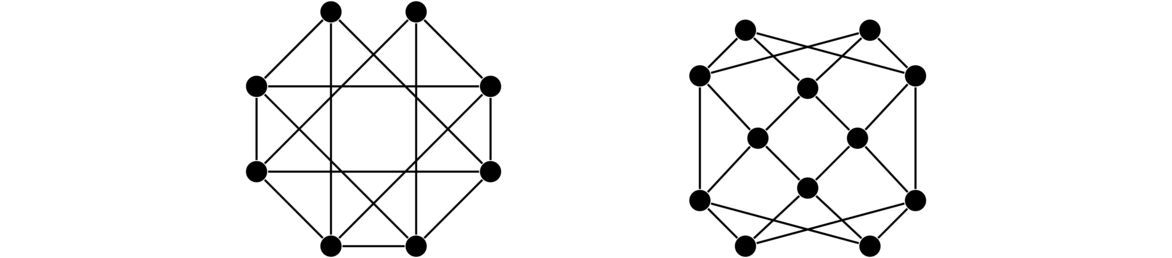

In both graphs, each vertex is adjacent to each other vertex, so these are two isomorphic copies of K4. For a simple example like this one, it's not too hard to picture morphing one graph into the other by sliding vertices around. But for graphs with many vertices, this approach is not feasible. It can actually be pretty tricky to show that two large graphs are isomorphic. For instance, the three graphs below are all isomorphic, and it is not at all trivial to see that.

Before we go any further, here is the formal definition:

In other words, for two graphs to be isomorphic, we need to be able to perfectly match up the vertices of one graph with the vertices of the other, so that whenever two vertices are adjacent in the first graph, the vertices they are matched up with must also be adjacent. And if those vertices are not adjacent in the original, the vertices they are matched up with must not be adjacent either.

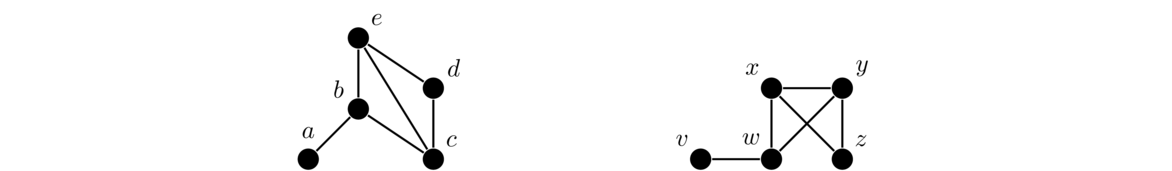

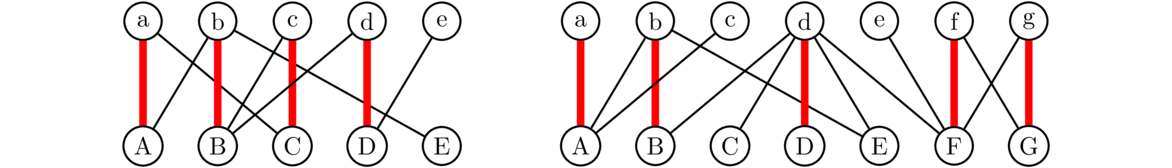

For example, we can use the definition to show the graphs below are isomorphic.

To do this, we match up the vertices as follows:

a ↔ v, b ↔ w, c ↔ x, d ↔ z, e ↔ y

Written in function notation, this matching is f(a) = v, f(b) = w, f(c) = x, f(d) = z, and f(e) = y. It is one-to-one and onto. We also need to check that all the edges work out. For instance, ab is an edge in the left graph. Since a and b are matched with v and w, we need vw to be an edge in the right graph, and it is. Similarly, there is no edge from a to e in the left graph. Since a and e are matched to v and y, we cannot have an edge in the right graph from v to y, and there is none. Though it's a little tedious, it's possible to check all the edges and nonedges in this way.

Showing two graphs are isomorphic can be tricky, especially if the graphs are large. If we just use the definition, there are many possible matchings we could try, and for each of those, checking that all the edges work out can take a while. There are, however, algorithms that work more quickly than this, and it is a big open question in the field of graph algorithms as to exactly how good these algorithms can be made.
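The "just use the definition" approach can be written out as a short brute-force search: try every matching of the vertices and check all edges and non-edges. The sketch below is only meant to show why this is slow (there are n! matchings to try); it is not one of the faster algorithms mentioned above. The example graphs and function names are made up for the illustration.

```python
from itertools import combinations, permutations

def edge_set(pairs):
    return {frozenset(p) for p in pairs}

def is_isomorphism(f, V1, E1, E2):
    """Check that f sends edges to edges and non-edges to non-edges."""
    return all(
        (frozenset((u, v)) in E1) == (frozenset((f[u], f[v])) in E2)
        for u, v in combinations(V1, 2)
    )

def find_isomorphism(V1, E1, V2, E2):
    """Return a matching of V1 with V2 that is an isomorphism, or None."""
    if len(V1) != len(V2) or len(E1) != len(E2):
        return None                      # quick rejections
    V1 = sorted(V1)
    for image in permutations(sorted(V2)):
        f = dict(zip(V1, image))
        if is_isomorphism(f, V1, E1, E2):
            return f
    return None

path1 = ("abcd", edge_set(["ab", "bc", "cd"]))   # path a - b - c - d
path2 = ("wxyz", edge_set(["wy", "yx", "xz"]))   # path w - y - x - z
star = ("wxyz", edge_set(["wx", "wy", "wz"]))    # star centered at w

print(find_isomorphism(*path1, *path2) is not None)  # True
print(find_isomorphism(*path1, *star))               # None
```

The path and the star have the same number of vertices and edges, so the quick rejections do not separate them; only the full search does.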

On the other hand, it is often pretty simple to show that two graphs are not isomorphic. We need to find a property that the two graphs don't share. Here is a list of a few properties one could use:

- If the two graphs have a different number of vertices or edges

- If the two graphs have different numbers of components

- If the vertex degrees are not exactly the same in each graph

- If the graphs don't contain exactly the same subgraphs

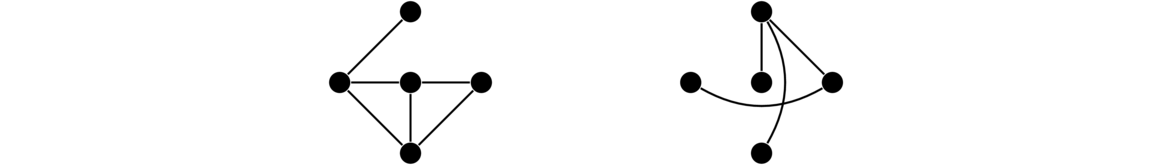

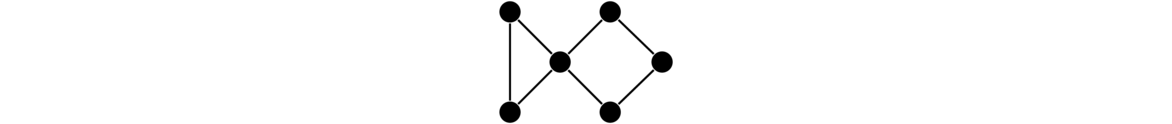

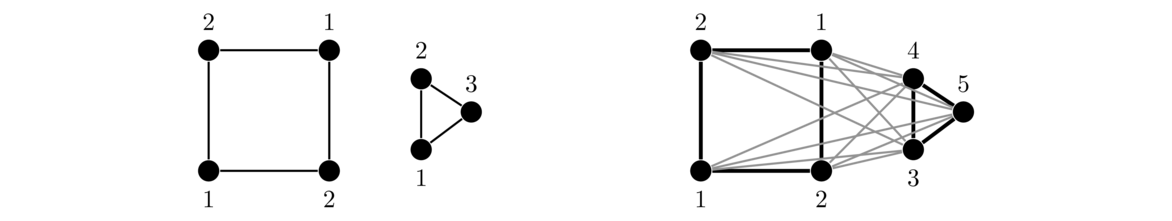

Here is an example. Both graphs below have the same number of vertices, both have the same number of edges, both have one component, and both consist of two vertices of degree 1, two vertices of degree 2, and four vertices of degree 3. So the first three properties are no help. However, the right graph contains a triangle, while the left graph does not, so the fourth property comes to our rescue.

There are many other possibilities besides the four properties above. Sometimes, it takes a little creativity. For example, the graphs below are not isomorphic. Note that they do both have the same number of vertices and edges, and in fact the degrees of all the vertices are the same in both graphs. However, the right graph contains a vertex of degree 3 adjacent to two vertices of degree 1, while that is not the case in the left graph. Another way to approach this is that the left graph contains two paths of length 5 (the straight-line path along the bottom, and one starting with the top vertex and then turning left along the path), while the right graph just contains one path of length 5.

New graphs from old graphs

This section explores a few ways of using graphs to create new graphs.

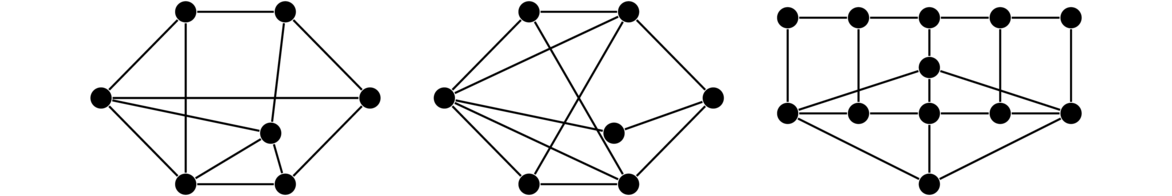

Complements

The complement of a graph G is a graph Ḡ (written as G with a bar over it) with the same vertex set as G, but whose edges are exactly the opposite of the edges in G. That is, uv is an edge of Ḡ if and only if uv is not an edge of G. Shown below are a graph and its complement.

One way to think of the complement is that the original plus the complement equals a complete graph. So we can get the complement by taking a complete graph and removing all the edges associated with the original graph.
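The "complete graph minus the original edges" description gives a one-line way to compute a complement. This is a minimal sketch for simple graphs, with edges stored as two-element frozensets; the example graph is a path chosen for illustration.

```python
from itertools import combinations

def complement(V, E):
    """All possible edges on V, minus the edges of the original graph."""
    return {frozenset(pair) for pair in combinations(V, 2)} - E

V = "abcd"
E = {frozenset(p) for p in ["ab", "bc", "cd"]}   # the path a - b - c - d

comp = complement(V, E)
print(sorted("".join(sorted(e)) for e in comp))  # ['ac', 'ad', 'bd']
print(len(E | comp))                             # 6, the number of edges in K4
```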

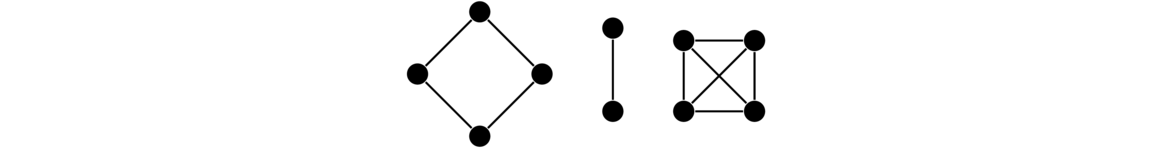

Union

The union of two graphs is obtained by taking copies of each graph and putting them together into one graph. Formally, given graphs G and H, their union G ∪ H has vertex set V(G ∪ H) = V(G) ∪ V(H) and edge set E(G ∪ H) = E(G) ∪ E(H). The same idea works with unions of more than two graphs. For instance, shown below is C4 ∪ K2 ∪ K4.

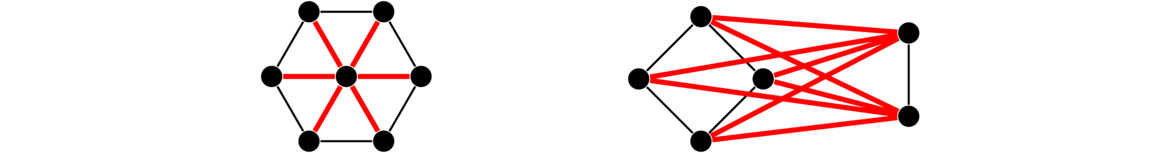

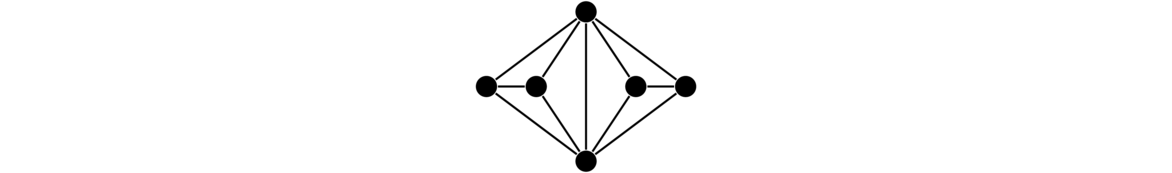

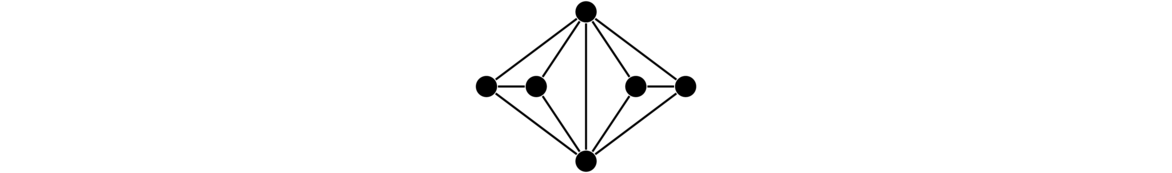

Join

The join of two graphs, G and H, is formed by taking a copy of G, a copy of H, and adding an edge from every vertex of G to every vertex of H.

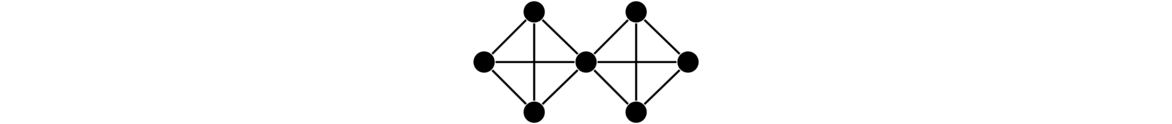

For example, shown below on the left is a type of graph called a wheel, which is K1 ∨ C6. It consists of a 6-cycle and a single vertex, with edges from that single vertex to every vertex of the cycle. On the right we have C4 ∨ K2.

Formally, the join G ∨ H has vertex set V(G ∨ H) = V(G) ∪ V(H) and edge set E(G ∨ H) = E(G) ∪ E(H) ∪ {gh : g ∈ V(G), h ∈ V(H)}.
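The formal definition of the join can be turned into code almost word for word. This sketch assumes the two graphs have disjoint vertex labels (so that they really are separate copies); the helper `cycle` and the vertex labels are made up for the example.

```python
def join(V1, E1, V2, E2):
    """Join of two graphs with disjoint vertex sets."""
    assert not (V1 & V2), "the two graphs must use different vertex labels"
    cross = {frozenset((g, h)) for g in V1 for h in V2}
    return V1 | V2, E1 | E2 | cross

def cycle(labels):
    n = len(labels)
    return set(labels), {frozenset((labels[i], labels[(i + 1) % n])) for i in range(n)}

# The wheel: a single vertex joined with a 6-cycle.
hub = ({"h"}, set())
V, E = join(*hub, *cycle(["c1", "c2", "c3", "c4", "c5", "c6"]))
print(len(V), len(E))                  # 7 12
print(sum(1 for e in E if "h" in e))   # 6: the hub is adjacent to every rim vertex

# A 4-cycle joined with K2.
K2 = ({"x", "y"}, {frozenset(("x", "y"))})
V, E = join(*cycle([1, 2, 3, 4]), *K2)
print(len(V), len(E))                  # 6 13
```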

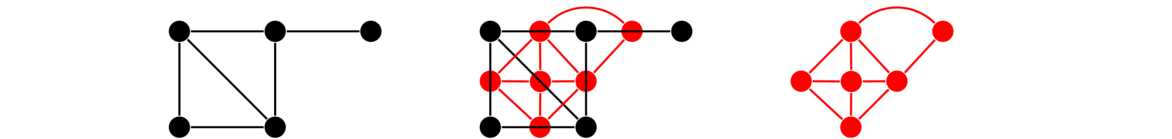

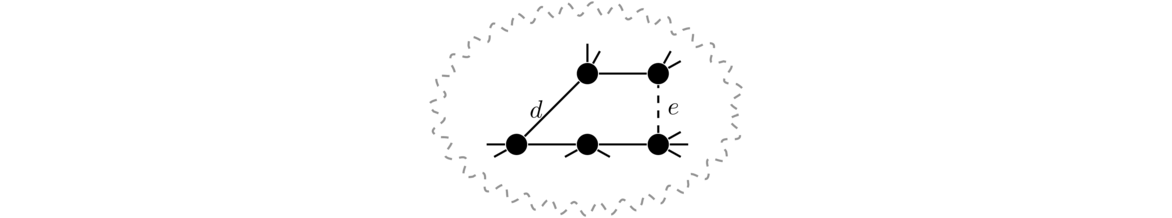

Line graph

The line graph, L(G), of a graph G is a graph that we create from the edges of G. Each edge of G becomes a vertex of L(G). Two vertices in L(G) are adjacent if and only if their corresponding edges in G share an endpoint. Shown below is an example of a graph and the construction of its line graph.

To create the line graph above, we start by making a vertex for each edge of the original graph. Then we create the edges of the line graph according to the rule above. For example, the top two edges of the original graph share an endpoint, so we will get an edge in the line graph between the vertices representing those edges. Similarly, the diagonal edge in the original shares endpoints with 4 other edges, so in the line graph it will have degree 4.

Sometimes questions about edges of G can be translated to questions about vertices of L(G).
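The line graph rule (two edges are adjacent when they share an endpoint) is easy to express with sets. In this sketch each vertex of L(G) is literally an edge of G, stored as a frozenset. The example graph, a square with one diagonal, is hypothetical and may differ from the one in the figure.

```python
from itertools import combinations

def line_graph(E):
    """Vertices are the edges of G; two are adjacent if they share an endpoint."""
    vertices = set(E)
    edges = {frozenset((e, f)) for e, f in combinations(E, 2) if e & f}
    return vertices, edges

# Hypothetical graph: the square a-b-c-d-a with the diagonal ac.
E = {frozenset(p) for p in ["ab", "bc", "cd", "da", "ac"]}
LV, LE = line_graph(E)

diagonal = frozenset("ac")
print(len(LV), len(LE))                      # 5 8
print(sum(1 for e in LE if diagonal in e))   # 4: the diagonal meets the other 4 edges
```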

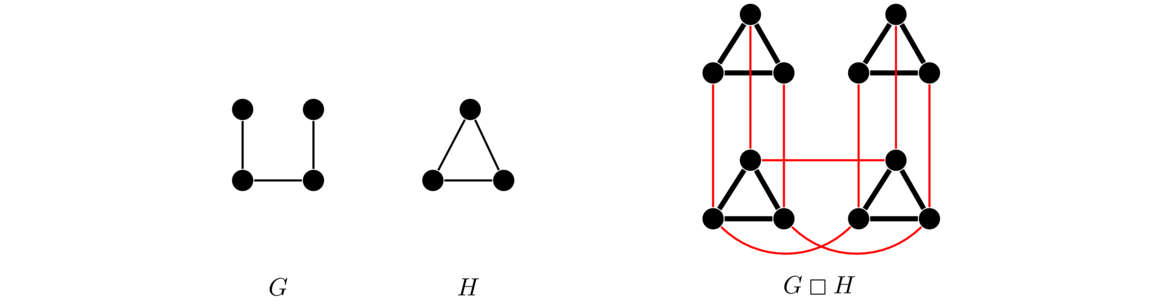

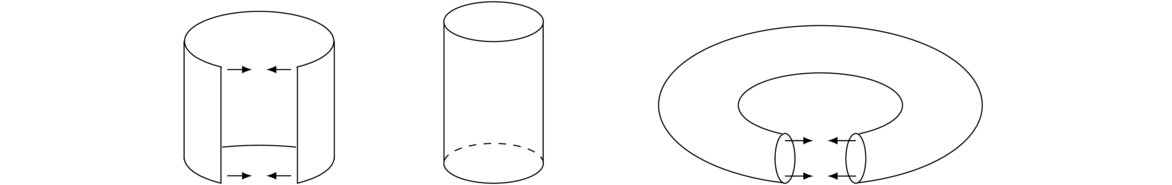

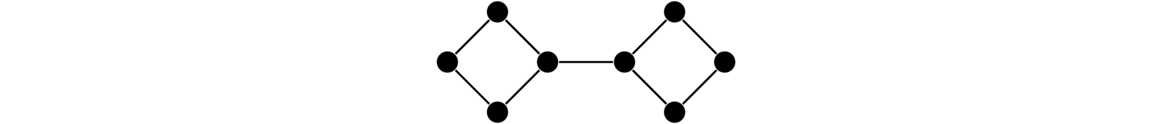

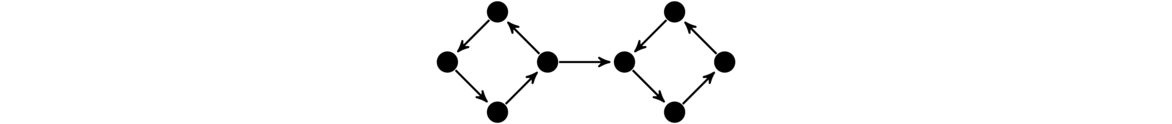

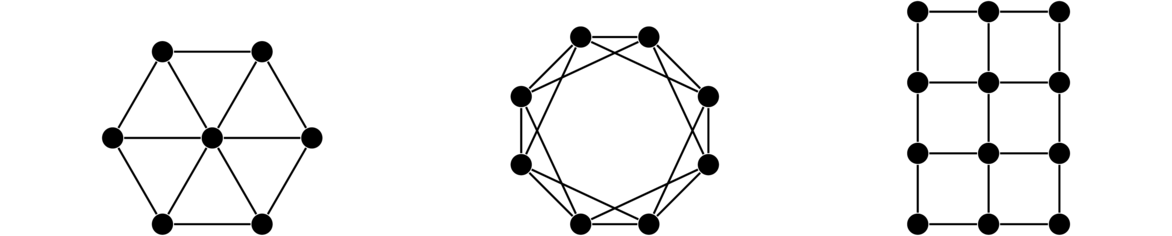

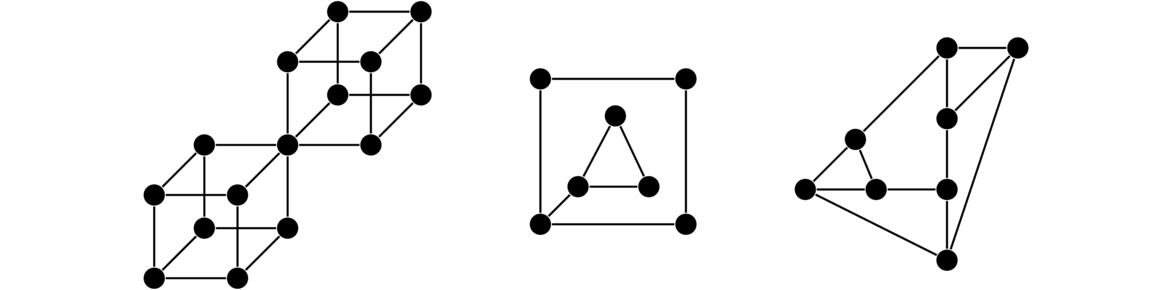

Cartesian product

The Cartesian product graph is related to the Cartesian product of sets. The basic idea is, starting with two graphs G and H, to get the Cartesian product G □ H, we replace each vertex of G with an entire copy of H. We then add edges between two copies provided there was an edge in G between the two vertices those copies replaced. And when we add edges between two copies, we only join each vertex in one copy to the identical vertex in the other copy. An example is shown below.

Notice how each of the four vertices of G is replaced with a copy of the triangle, H. Then wherever there is an edge in G, we connect up the triangles, and we do this by connecting up like vertices with like vertices.

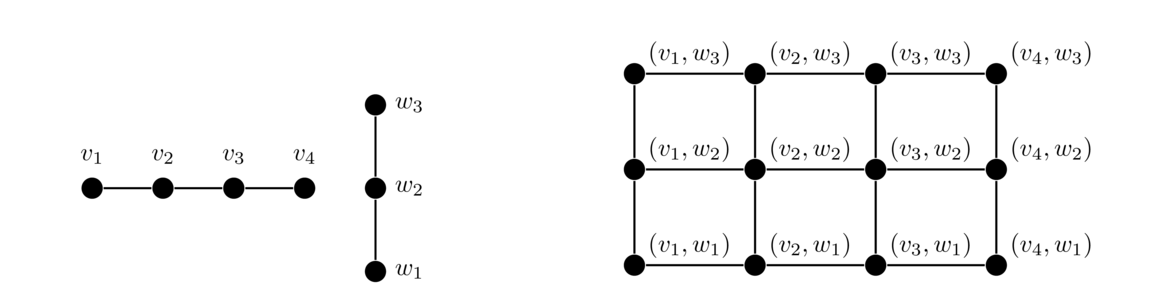

Formally, the vertex set of the Cartesian product G □ H is V(G) × V(H) (that is, all pairs (v, w), with v ∈ V(G) and w ∈ V(H)). The edges of the Cartesian product are as follows: for each v ∈ V(G), there is an edge between $(v, w_1)$ and $(v, w_2)$ if and only if $w_1 w_2 \in E(H)$; and for each w ∈ V(H), there is an edge between $(v_1, w)$ and $(v_2, w)$ if and only if $v_1 v_2 \in E(G)$.

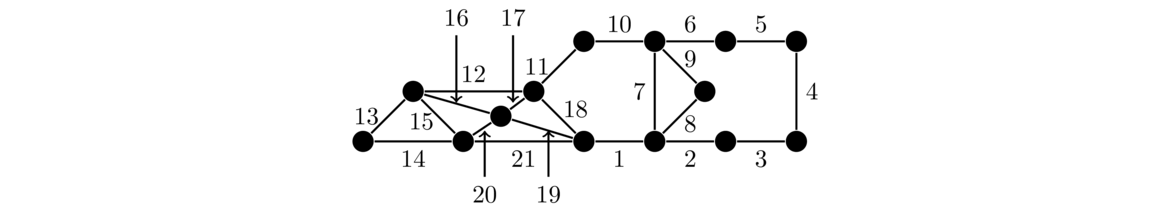

Here is another example of a Cartesian product: a grid graph. Here we have labeled the vertices with their “Cartesian coordinates.”

Hypercubes are important graphs defined by the Cartesian product. The first hypercube, Q1, is K2. The second hypercube, Q2, is Q1 □ K2, a square. The third hypercube, Q3, is Q2 □ K2, which gives a graph representing an ordinary cube. In general, $Q_n = Q_{n-1} \mathbin{\square} K_2$ for n ≥ 2. The first four are shown below. The last one is a drawing of a 4-dimensional cube on a flat plane. It is shown with the two copies of Q3 highlighted.

In general, each hypercube comes from two copies of the previous hypercube connected up according to the Cartesian product rules.

Note: Notice from the definition of the Cartesian product that G □ H is isomorphic to H □ G.
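Here is a sketch of the Cartesian product built straight from the formal definition, followed by the recursive construction of hypercubes. It assumes simple graphs with edges stored as two-element frozensets. The function names are illustrative, and the vertices of the larger hypercubes come out as nested pairs, which is fine for counting but not pretty to print.

```python
def cartesian_product(V1, E1, V2, E2):
    """Cartesian product of two simple graphs."""
    V = {(v, w) for v in V1 for w in V2}
    E = set()
    for v in V1:                      # a copy of the second graph for each v
        for e in E2:
            w1, w2 = tuple(e)
            E.add(frozenset(((v, w1), (v, w2))))
    for w in V2:                      # like vertices joined along edges of the first
        for e in E1:
            v1, v2 = tuple(e)
            E.add(frozenset(((v1, w), (v2, w))))
    return V, E

def hypercube(n):
    """Q_1 = K_2, and Q_n is Q_{n-1} times K_2 for n >= 2."""
    K2 = ({0, 1}, {frozenset((0, 1))})
    V, E = K2
    for _ in range(n - 1):
        V, E = cartesian_product(V, E, *K2)
    return V, E

for n in range(1, 5):
    V, E = hypercube(n)
    print(n, len(V), len(E))
# 1 2 1
# 2 4 4
# 3 8 12
# 4 16 32
```

The counts agree with the description above: each hypercube has twice as many vertices as the previous one, since it is made of two copies.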

Proofs, Constructions, Algorithms, and Applications

Basic results and proving things about graphs

In these notes, we will cover a number of facts about graphs. It's nice to be sure that something is true and to know why it is true, and that's what a mathematical proof is for. Our proofs, like many in math, are designed to convince a mathematical reader that a result is true. To keep things succinct, we don't always include every detail, relying on the reader to fill in small gaps. The proofs themselves will often consist more of words than of symbols.

The Degree sum formula and the Handshaking lemma

Here is the first result that many people learn in graph theory.

Note that if we want to be a bit more formal, we can state the result above as follows:

In particular, the degree sum must be even. This leads to the following consequence, called the Handshaking lemma.

The name “Handshaking lemma” comes from the following: Suppose some people at a party shake hands with others. Then there must be an even number of people who shook an odd number of hands. Here the people are vertices, with an edge between two vertices if the corresponding people shook hands.

To summarize: the sum of the degrees in any graph is twice the number of edges, and graphs with an odd number of odd-degree vertices are impossible. We will make use of these results repeatedly in these notes, so know them well.
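Both facts in the summary are easy to check numerically on any particular graph. The sketch below counts degrees by walking through the edge list, adding one to each endpoint, so a loop automatically counts twice. The edge list is a made-up example that includes a loop.

```python
from collections import Counter

def degrees(edges):
    """Degree of each vertex; every edge adds 1 to each of its two ends."""
    deg = Counter()
    for u, v in edges:
        deg[u] += 1
        deg[v] += 1
    return deg

# Hypothetical graph: a triangle a, b, c, an edge cd, and a loop at d.
edges = [("a", "b"), ("b", "c"), ("c", "a"), ("c", "d"), ("d", "d")]
deg = degrees(edges)

print(sum(deg.values()), 2 * len(edges))           # 10 10
print(sum(1 for d in deg.values() if d % 2 == 1))  # 2: an even number of odd-degree vertices
```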

An application of the Degree sum formula

Here is one use of the Degree sum formula.

The proof above, like the proof of the Handshaking lemma, is an example of a proof by contradiction. We start by assuming that the result is false, and end up contradicting something we know must be true. The conclusion then is that our result must be true. Proof by contradiction is a useful and powerful technique for proving things.

Another theorem about vertex degrees

Continuing with vertex degrees, here is another result that might be a little surprising.

Sometimes, with proofs like these, it helps to look at a specific example. Suppose we have n = 5 vertices. Then if the degrees are all different, the degrees must be 0, 1, 2, 3, and 4. That degree 4 vertex is adjacent to all of the others, including the degree 0 vertex, which isn't possible. So the degrees must be five integers in the range from 0 to 3 or five integers in the range from 1 to 4, meaning there will be a repeated degree.

Notice where in the proof the fact that the graph is simple is used—namely, in guaranteeing that we have to have a vertex adjacent to all others along with a vertex of degree 0. If we remove the requirement that the graph be simple and allow loops and multiple edges, then the result turns out to be false. It's a nice exercise to try to find counterexamples in that case.
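A check by computer is no substitute for the proof, but it can be reassuring. The sketch below generates every simple graph on 5 labeled vertices (there are 2^10 = 1024 of them, one for each subset of the 10 possible edges) and confirms that each has a repeated degree, which is the property discussed in the n = 5 example above. The function names are made up for this illustration.

```python
from itertools import combinations

def all_simple_graphs(n):
    """Yield the edge list of every simple graph on vertices 0, ..., n-1."""
    pairs = list(combinations(range(n), 2))
    for mask in range(2 ** len(pairs)):
        yield [p for i, p in enumerate(pairs) if (mask >> i) & 1]

def has_repeated_degree(n, edges):
    deg = [0] * n
    for u, v in edges:
        deg[u] += 1
        deg[v] += 1
    return len(set(deg)) < n

print(all(has_repeated_degree(5, g) for g in all_simple_graphs(5)))  # True
```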

Notice also that we use a proof by contradiction here again. Some mathematicians (but not this author) feel that proofs by contradiction are poor mathematical style and that direct proofs should be preferred. The proof above could be reworked into a direct proof using the pigeonhole principle. It's another nice exercise to try to do that.

A proof about connectedness

To get some more practice with proofs, here is another result, this one about connectedness.

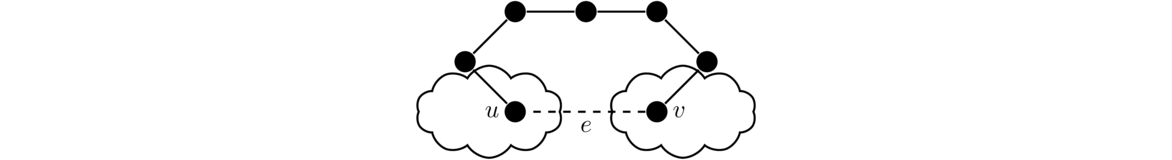

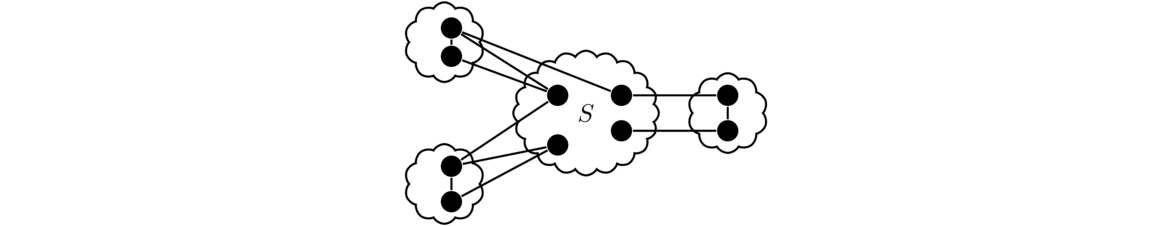

The proof is only a couple of lines, but a fair amount of thinking and work goes into producing those lines. To start, we go straight to the definition of connectedness. Specifically, to show the complement Ḡ is connected, we have to show there is a path in Ḡ between any two vertices. Let's call them u and v, as we did in the proof. Next, it helps to draw a picture. We know that G is disconnected, so we might try a picture like the one below.

The picture shows two components, though there might be more. We know that when we take the complement, any two components of the original will be joined together by all possible edges between vertices. So if u and v started out in different components, they would be adjacent in the complement. So that's one case taken care of. But now what if u and v are in the same component? We can't forget about that case.

If u and v are in the same component, then there is a path between them in G, but maybe they end up separated when we take the complement. This turns out not to be a problem. Recall that we have all possible edges between any two components, so we can create a two-step path from u to v by jumping from u to some vertex w in a different component and then back to v.

To summarize: We are trying to find a path between any two vertices u and v in the complement Ḡ. We have broken things into two cases: one where u and v are in different components of G, and one where u and v are in the same component of G. When doing proofs, it is often useful to break things into cases like this. And it is important to make sure that every possible case is covered.

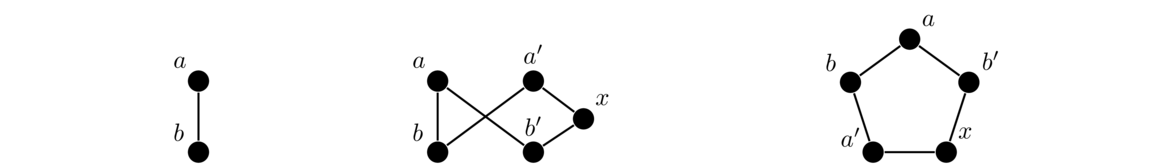

The theorem above says that the complement of a disconnected graph is connected. What if we try changing it around to ask if the complement of a connected graph is disconnected? It turns out not to be true in general. To show this we need a single counterexample, like the one below, showing C5 and its complement, both of which are connected.
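Both halves of this discussion can be tried out on small examples. The sketch below checks that one particular disconnected graph has a connected complement, and that the 5-cycle and its complement are both connected. The disconnected graph (a triangle, a separate edge, and an isolated vertex) and the function names are chosen for this illustration.

```python
from itertools import combinations

def complement(V, E):
    return {frozenset(pair) for pair in combinations(V, 2)} - E

def is_connected(V, E):
    """Search outward from one vertex and see whether everything is reached."""
    V = set(V)
    start = next(iter(V))
    seen, stack = {start}, [start]
    while stack:
        u = stack.pop()
        for w in V - seen:
            if frozenset((u, w)) in E:
                seen.add(w)
                stack.append(w)
    return seen == V

# A disconnected graph: triangle 1, 2, 3; edge 4-5; isolated vertex 6.
V = {1, 2, 3, 4, 5, 6}
E = {frozenset(p) for p in [(1, 2), (2, 3), (1, 3), (4, 5)]}
print(is_connected(V, E))                  # False
print(is_connected(V, complement(V, E)))   # True

# The counterexample to the converse: the 5-cycle and its complement.
V5 = set(range(5))
C5 = {frozenset((i, (i + 1) % 5)) for i in range(5)}
print(is_connected(V5, C5))                   # True
print(is_connected(V5, complement(V5, C5)))   # True
```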

In general, to prove something requires a logical argument, while to disprove something requires just a single counterexample.

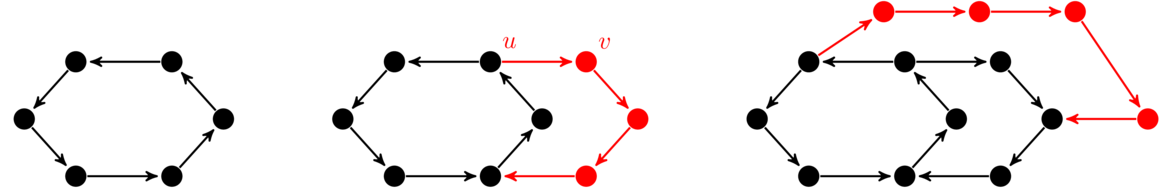

An if-and-only-if proof

Here is another statement, this one a biconditional (if-and-only-if statement).

It is essentially two statements in one, meaning there are two things to prove, namely:

- If e is a cut edge, then it is part of no cycle.

- If e is part of no cycle, then it is a cut edge.

It basically says that being a cut edge is equivalent to not being a part of a cycle. Those two concepts can be used interchangeably. Here is the proof of the theorem:

First, assume e is a cut edge. Then deleting it breaks the graph into more than one component, with u in one component and v in another. Suppose e were part of a cycle. Then going the other way along the cycle from u to v, avoiding edge e, would provide a path from u to v, meaning those vertices are in the same component, a contradiction. Thus e cannot be part of a cycle.

Next, assume e is part of no cycle. Then there cannot be any path between u and v other than along edge e, as otherwise we could combine that path along with e to create a cycle. Thus, if we delete e, there is no path from u to v, meaning u and v are in different components of G-e. So e is a cut edge.

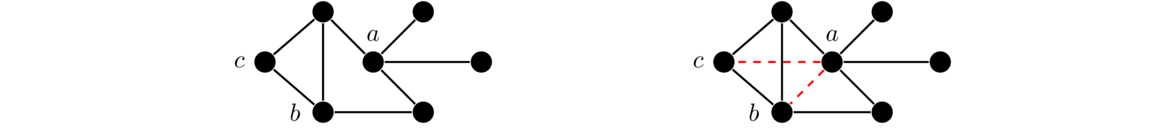

Often when trying to understand a proof like this, or in trying to come up with it yourself, it helps to draw a picture. Here is a picture to help with visualizing what happens in the proof.

Mathematical induction

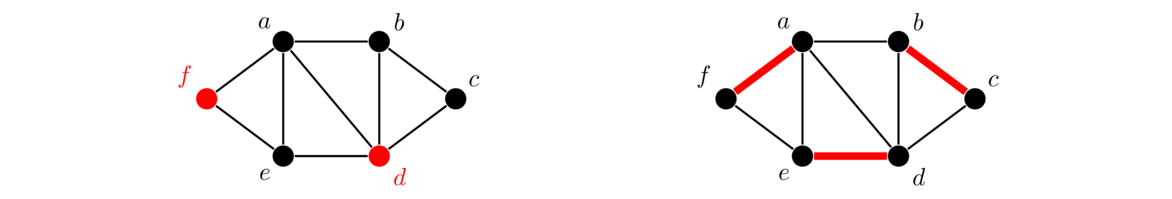

Consider the following problem: Alice and her husband Bob are at a party with 3 other couples. At the party some people shake hands with some others. No one shakes their own hand or the hand of their partner. Alice asks everyone else how many hands they each shook, and everyone gives a different answer. How many different hands did Bob shake?

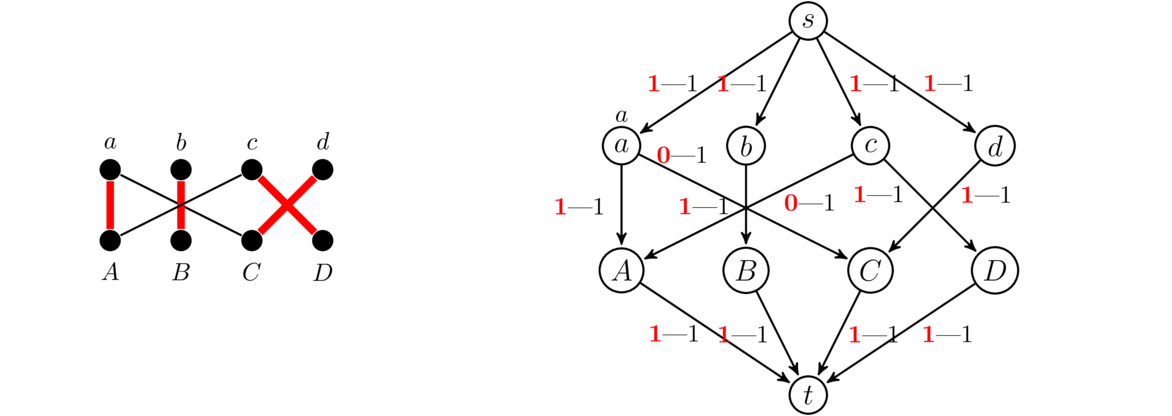

This seems like a bizarre question, like it shouldn't be answerable. Nevertheless, let's start by modeling it with a graph. The people become vertices and edges indicate handshakes. The degree of a vertex is how many hands that vertex's corresponding person shook. See below on the left for what our graph initially looks like.

There are 4 couples and 8 people total. Alice asks the other 7 people and gets 7 different answers. That means the answers must be the integers from 0 to 6. Let p0, p1, …, p6 be the names of the people that shook 0, 1, …, 6 hands, respectively.

Person p6 must have shaken hands with everyone at the party except p6 and the partner of p6. That means that the partner of p6 must be p0, since everyone else must have shaken at least one hand (namely the hand of p6).

It's important to note that p6 can't be Bob. Why? Since p6 shakes hands with everyone except themselves and their partner, that means there can't be any other vertex with degree 0 in the graph besides the partner of p6. If Bob were p6, then both p0 and Alice (who is not one of the pi) would have to shake 0 hands.

Now consider p5. That person shook hands with everyone except p5, the partner of p5, and p0. This means that p1 must be the partner of p5, since everyone else must have shaken at least two hands (namely the hands of p5 and p6). And by the same argument as above, p1 and p5 are not Alice and Bob.

An argument similar to the ones we have just looked at shows that p4 and p2 are partners and that they are not Alice and Bob. This means that Bob must be p3, having shaken 3 hands. The graph of this ends up looking like the one above on the right.

Suppose we generalize this to a party of n couples. How many hands must Bob have shaken in that case? The answer is n-1. We can show this by generalizing and formalizing the argument above using mathematical induction. As above, we model the party by a graph where the people are vertices and edges indicate handshakes.

The base case is a party with n = 1 couple, just Alice and Bob. Since they are partners, they don't shake each other's hands. So Bob shakes no hands, which is the same as n-1 with n = 1.

Now assume that at any party of n couples satisfying the setup of the problem, Bob shakes n-1 hands. Consider then a party of n+1 couples. We need to show that Bob shakes exactly n hands in this party.

There are 2n+2 people at this party, so Alice asks 2n+1 of them, and no one can shake more than 2n hands (everyone except themselves and their partner). Since the answers that Alice gets are all different, they must be the integers from 0 through 2n. Let p0, p1, …, p2n be the names of the people that shook 0, 1, …, 2n hands, respectively. Then p2n must have shaken hands with everyone else at the party except p2n and the partner of p2n. That is, vertex p2n is adjacent to all vertices of the graph except itself and one other vertex. That other person/vertex must be p0, since everyone else shook the hand of p2n and so shook at least one hand. So p2n and p0 are partners. As before, p0 and p2n cannot be Alice and Bob (or Bob and Alice).

Now remove p0 and p2n from the party/graph. Doing so leaves a graph corresponding to n couples, where the degree of each vertex has been reduced by exactly 1. This party/graph satisfies the induction hypothesis—namely, it is a party of n couples and everyone that Alice asks has a different degree because their degrees were all different in the original and we have subtracted precisely 1 from all of those degrees.

By the induction hypothesis, Bob must have shaken n-1 hands among this party. Therefore in the original party, Bob shook n hands, those being the n-1 in the smaller party along with the hand of p2n.
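It can help to see one concrete party that satisfies the setup for each n. The sketch below builds such a party and checks Bob's count; the construction and the labels 'hi' and 'lo' are assumptions made for this illustration, not something taken from the proof. Couple i consists of ('hi', i) and ('lo', i), and the 'hi' member of each couple shakes hands with both members of every later couple. The last couple plays the role of Alice and Bob.

```python
def handshake_party(n):
    """Return {person: set of people whose hand they shook} for n couples."""
    people = [(role, i) for i in range(n) for role in ('hi', 'lo')]
    shook = {p: set() for p in people}
    for i in range(n):
        for j in range(i + 1, n):
            # ('hi', i) shakes hands with both members of each later couple
            for other in (('hi', j), ('lo', j)):
                shook[('hi', i)].add(other)
                shook[other].add(('hi', i))
    return shook

for n in range(1, 6):
    party = handshake_party(n)
    alice, bob = ('hi', n - 1), ('lo', n - 1)
    answers = sorted(len(party[p]) for p in party if p != alice)
    assert answers == list(range(2 * n - 1))  # all different: 0, 1, ..., 2n-2
    print(n, len(party[bob]))                 # Bob's count is n-1 each time
```

This only exhibits one valid party per n; it is the induction argument that shows Bob's count is forced to be n-1 in every valid party.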

If you have never seen induction, then the preceding may well make no sense to you. Consider picking up a book on Discrete Math to learn some induction. But even if you have seen induction before, the preceding may seem odd. It almost seems like cheating, like we didn't really prove anything. The key here is the work that goes into setting the proof up. In particular, when we remove a couple from the graph to get to the smaller case, we can't remove just any couple. We need to remove a couple that preserves the parameters of the problem, especially the part about Alice getting all different answers. The hard work comes in planning things out. The finished product, the proof, ends up relatively short.

Constructions

Often we will want to build a graph that has certain properties. This section contains a few examples.

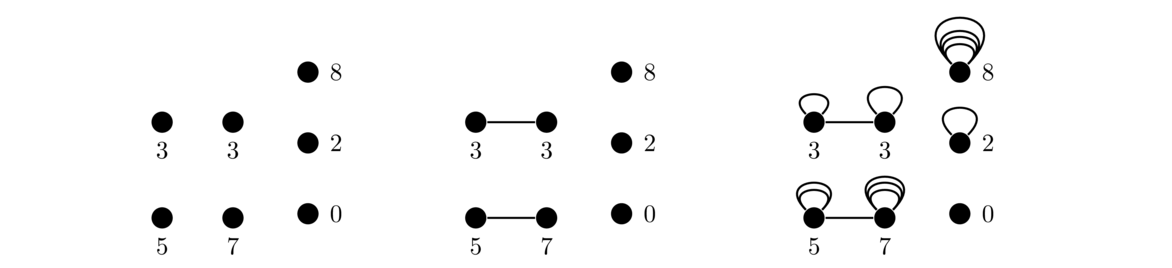

Constructing a multigraph with given degrees

The first question we will answer is this: given a sequence of nonnegative integers, is there a multigraph for which those integers are the degrees of its vertices? The answer is yes, provided the sequence satisfies the Handshaking lemma, namely that there must be an even number of odd integers in the sequence. We can show this by constructing the graph.

To see how it works, it helps to use an example. Let's take the sequence 0, 2, 3, 3, 5, 7, 8. Start by creating one vertex for each integer in the sequence. Then pair off the first two odd integers, 3 and 3, and add an edge between their corresponding vertices. Then do the same for the other two odd integers, 5 and 7. Adding the edges between pairs of odd degree vertices essentially takes care of the “odd part” of their degrees, leaving only an even number to worry about. We can use loops to take care of those even numbers since each loop adds 2 to a vertex's degree. See the figure below for the process.

This technique works in general. We start by pairing off the first two odd integers and adding an edge between their vertices, then pairing off the next two odd integers, etc. until we have worked through all the odd integers. It's possible to pair off all the odds since we know there must be an even number of them by the Handshaking lemma. After doing this, add loops to each vertex until its degree matches its sequence value.
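Here is a short sketch of this construction in code. It assumes the vertices are numbered 0, 1, 2, … in the order of the sequence and that the multigraph is returned as a list of edges, with a pair (i, i) standing for a loop; the function names are made up for this example.

```python
def multigraph_with_degrees(seq):
    """Return edges (i, j) of a multigraph whose vertex i has degree seq[i]."""
    odd = [i for i, d in enumerate(seq) if d % 2 == 1]
    if len(odd) % 2 == 1:
        raise ValueError('an odd number of odd degrees is impossible')
    edges = []
    remaining = list(seq)
    # Pair off the odd-degree vertices: first with second, third with fourth, ...
    for i, j in zip(odd[0::2], odd[1::2]):
        edges.append((i, j))
        remaining[i] -= 1
        remaining[j] -= 1
    # What is left at each vertex is even, so loops (worth 2 each) finish it.
    for i, d in enumerate(remaining):
        edges.extend([(i, i)] * (d // 2))
    return edges

def degrees(n, edges):
    """Degree of each vertex; a loop (i, i) adds 2 to the degree of i."""
    deg = [0] * n
    for i, j in edges:
        deg[i] += 1
        deg[j] += 1
    return deg

seq = [0, 2, 3, 3, 5, 7, 8]
edges = multigraph_with_degrees(seq)
print(degrees(len(seq), edges) == seq)  # True
```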

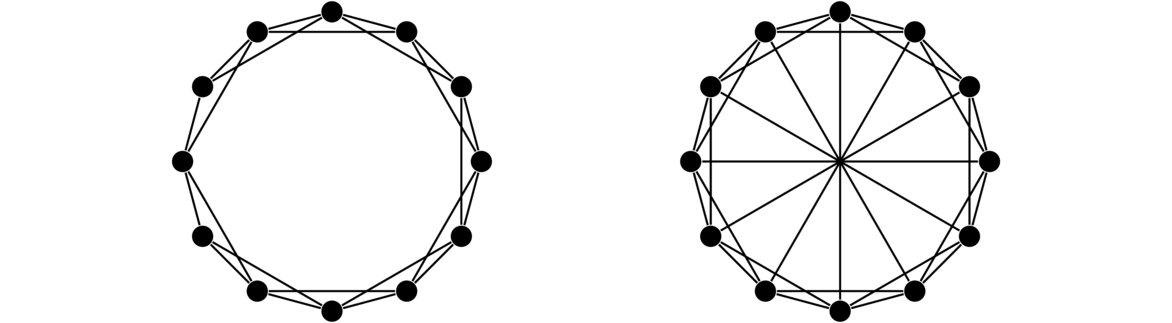

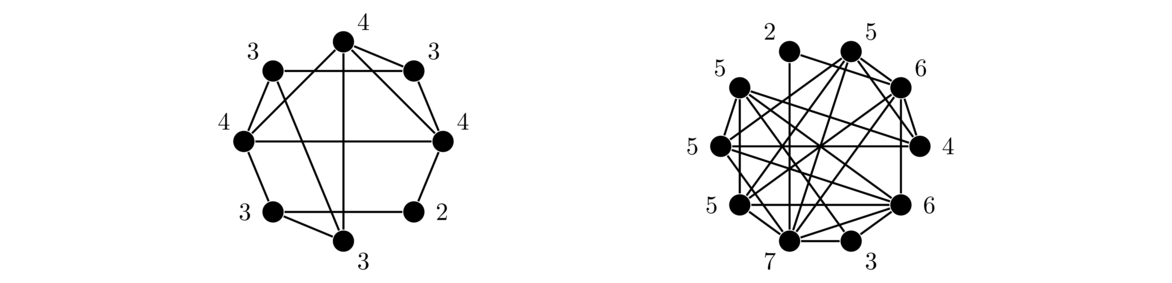

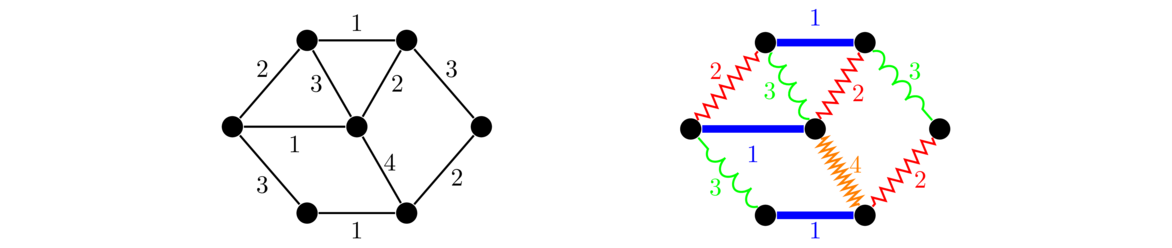

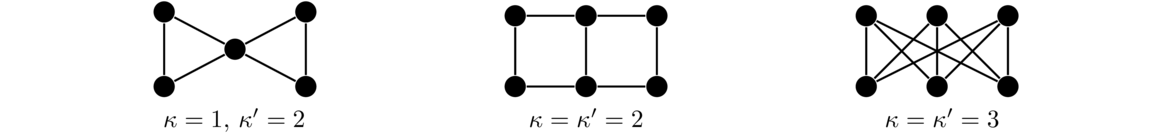

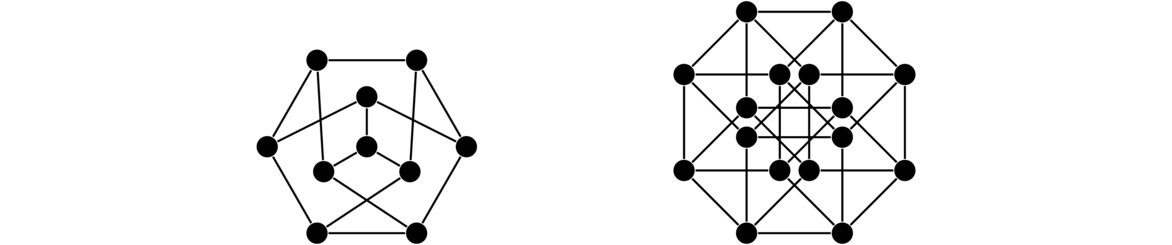

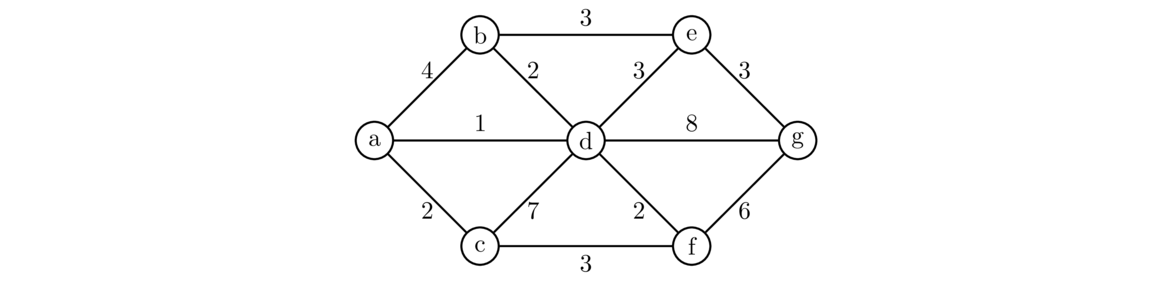

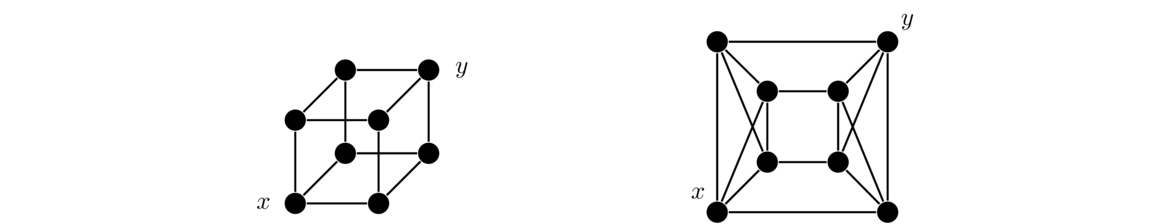

Constructing regular graphs

Our second example is to answer the following question: given integers r and n, is it possible to construct an r-regular simple graph with n vertices? The answer is yes provided r ≤ n-1 and at least one of r and n is even. We need r ≤ n-1 since n-1 is the largest degree a vertex can have in a simple graph, and we need at least one of r and n to be even by the Handshaking lemma.

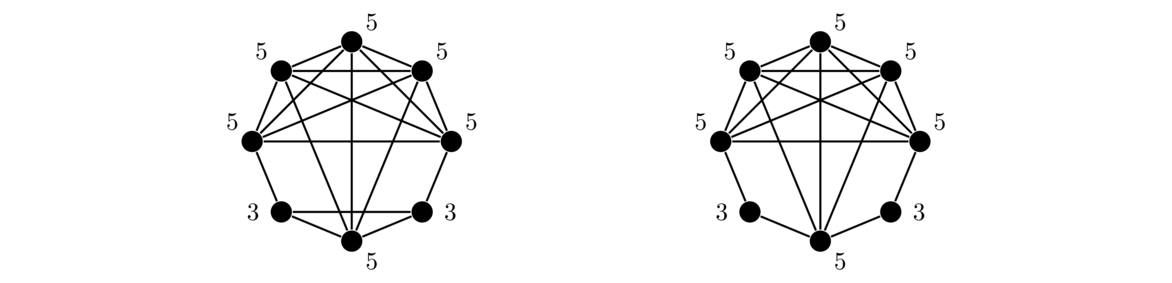

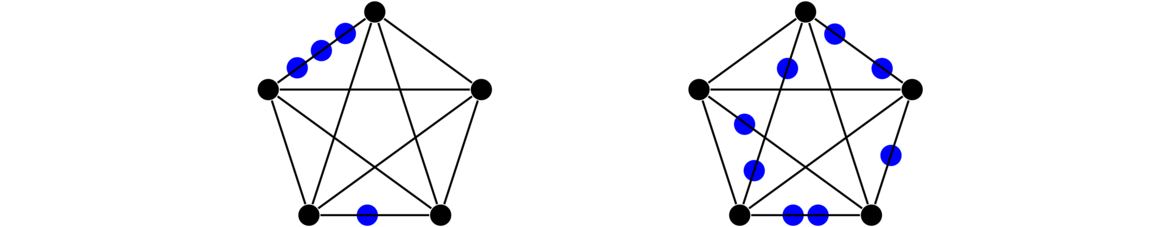

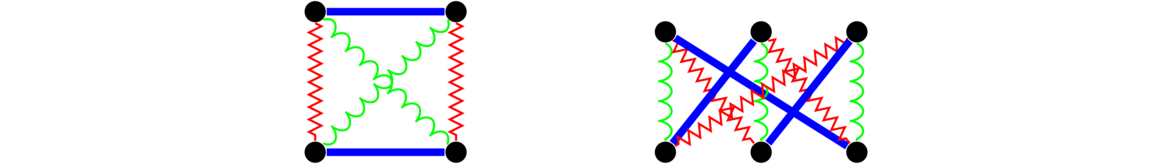

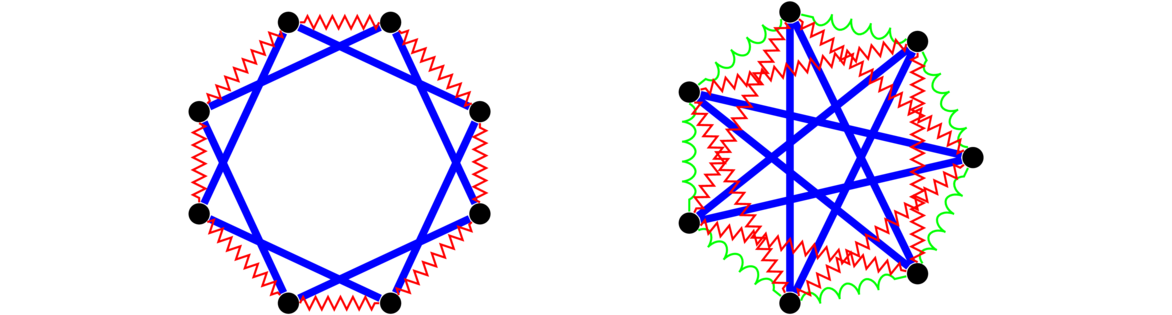

The construction is different depending on if r is even or odd. We'll do the even case first. Start by placing n vertices around a circle. This is a 0-regular graph on n vertices. To create a 2-regular graph from it, join each vertex to its two neighbors. The graph created this way is the cycle Cn. To create a 4-regular graph, join each vertex additionally to both vertices that are at a distance of 2 from it along the circle. To create a 6-regular graph, join each vertex to both vertices that are at a distance of 3 from it along the circle. This same idea can be extended to create larger regular graphs of even degree. The graph below on the left is an example of the 4-regular graph on 12 vertices created by this process.

To create a regular graph of odd degree, use the exact same process, but additionally connect each vertex to the vertex on the circle diametrically opposite to it. Shown above on the right is a 5-regular graph on 12 vertices. Note that in the figure, the lines all cross at the center, giving the illusion of a vertex there, but there is not one.

It is worth describing this construction formally. Here is one way to do it: Call the vertices v_0, v_1, …, v_{n-1}, and take all subscripts mod n. For an r-regular graph where r is even, for each vertex v_i, add edges from v_i to v_{i+k} and v_{i-k} for k = 1, 2, …, r/2. If r is odd (in which case n must be even), do the same for k = 1, 2, …, (r-1)/2, and also add an edge from v_i to v_{i+n/2}.

These graphs are called Harary graphs, after the graph theorist Frank Harary.
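The formal description translates almost directly into code. Below is a minimal sketch, assuming vertices are the integers 0 through n-1 and the graph is a dictionary of neighbor sets; the name regular_graph is invented here.

```python
def regular_graph(r, n):
    """Build an r-regular simple graph on vertices 0..n-1 using the circle construction."""
    if r > n - 1 or (r % 2 == 1 and n % 2 == 1):
        raise ValueError('no r-regular simple graph on n vertices exists')
    G = {i: set() for i in range(n)}
    for i in range(n):
        # join i to the vertices at circle distance 1, 2, ..., r // 2 on both sides
        for k in range(1, r // 2 + 1):
            G[i].add((i + k) % n)
            G[i].add((i - k) % n)
        # for odd r, also join i to the diametrically opposite vertex
        if r % 2 == 1:
            G[i].add((i + n // 2) % n)
    return G

G = regular_graph(5, 12)
print(all(len(G[v]) == 5 for v in G))  # True
```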

Graph Representations

Graph theory has a lot of applications to real problems. Those problems often are described by graphs with hundreds, thousands, or even millions of vertices. For graphs of that size, we need a way of representing them on a computer. There are two ways this is usually done: adjacency matrices and adjacency lists.

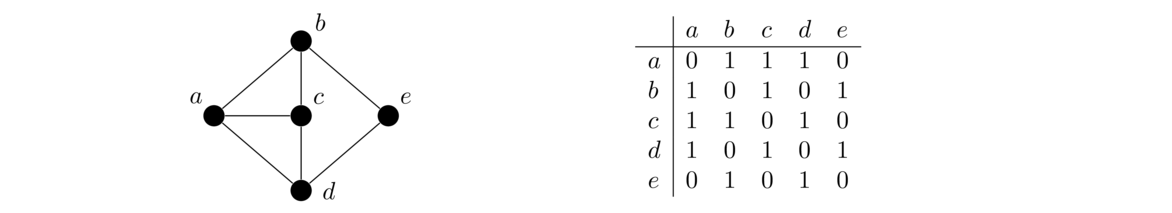

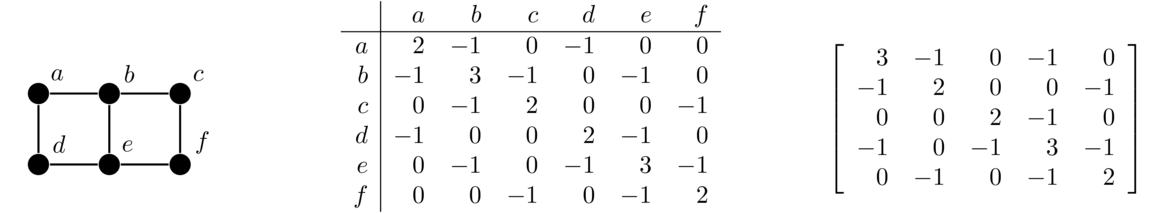

Adjacency matrix

The idea is that we use a matrix to keep track of which vertices are adjacent to which other vertices. An entry of 1 in the matrix indicates two vertices are adjacent and a 0 indicates they aren't adjacent. For instance, here is a graph and its adjacency matrix:

Adjacency lists

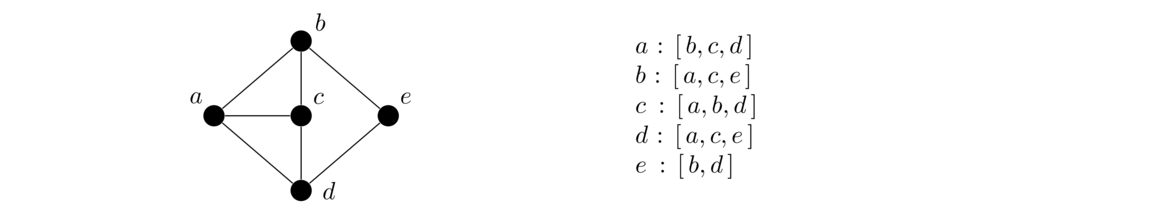

The second approach to implementing a graph is to use adjacency lists. For each vertex of the graph we keep a list of the vertices it is adjacent to. Here is a graph and its adjacency lists:

Adjacency matrices vs. adjacency lists

Adjacency matrices make it very quick to check if two vertices are adjacent. However, if the graph is very large, adjacency matrices can use a lot of space. For instance, a graph with 1,000,000 vertices would need a 1,000,000 × 1,000,000 matrix, which has a trillion entries. That would require an unacceptably large amount of space.

Adjacency lists use a lot less space than adjacency matrices if vertex degrees are relatively small, which is the case for many graphs that arise in practice. For this reason (and others) adjacency lists are used more often than adjacency matrices when representing a graph on a computer.
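To make the space comparison concrete, here is a small sketch that builds both representations from the same edge list and counts how many entries each one stores. The four-vertex graph and the helper names to_matrix and to_lists are made up for this example.

```python
def to_matrix(vertices, edges):
    """Adjacency matrix as a list of rows, in the order the vertices are given."""
    index = {v: i for i, v in enumerate(vertices)}
    M = [[0] * len(vertices) for _ in vertices]
    for u, v in edges:
        M[index[u]][index[v]] = 1
        M[index[v]][index[u]] = 1
    return M

def to_lists(vertices, edges):
    """Adjacency lists as a dictionary mapping each vertex to its neighbors."""
    A = {v: [] for v in vertices}
    for u, v in edges:
        A[u].append(v)
        A[v].append(u)
    return A

vertices = ['a', 'b', 'c', 'd']
edges = [('a', 'b'), ('a', 'c'), ('c', 'd')]
M = to_matrix(vertices, edges)
A = to_lists(vertices, edges)

print(sum(len(row) for row in M))             # 16 entries: n * n
print(sum(len(nbrs) for nbrs in A.values()))  # 6 entries: 2 * (number of edges)
```

The matrix always stores n × n entries, while the lists store two entries per edge, which is where the savings come from when degrees are small.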

Coding a graph class

Here is a quick way to create a graph class in Python using adjacency lists:

    class Graph(dict):
        def add(self, v):
            self[v] = set()

        def add_edge(self, u, v):
            self[u].add(v)
            self[v].add(u)

One thing worth noting is that we are actually using adjacency sets instead of lists. It's not strictly necessary, but it does make a few things easier later on. The class inherits from the Python dictionary class, which gives us a number of useful things for free. For instance, if our graph is called G with a vertex 'a', then G['a'] will give us the neighbors of that vertex. We have created two methods for the class. The first one adds a vertex. To do so, it creates an entry in the dictionary with an empty adjacency list (set). The other method is for adding an edge. We do that by adding each endpoint to the other endpoint's adjacency list.

Below is an example showing how to create a graph and add some vertices and edges:

    G = Graph()
    G.add('a')
    G.add('b')
    G.add('c')
    G.add_edge('a', 'b')
    G.add_edge('a', 'c')

Here is a quicker way to add a bunch of vertices and edges:

    G = Graph()
    for v in 'abcde':
        G.add(v)
    for e in 'ab ac bc ad de'.split():
        G.add_edge(e[0], e[1])

Below we show how to print a few facts about the graph:

    print(G)
    print('Neighbors of a: ', G['a'])
    print('Degree of a:', len(G['a']))
    print('Is there a vertex z?', 'z' in G)
    print('Is there an edge from a to b?', 'b' in G['a'])
    print('Number of vertices:', len(G))
    for v in G:  # loop over and print all the vertices
        print(v)

Algorithms

One of the most important parts of graph theory is the study of graph algorithms. An algorithm is, roughly speaking, a step-by-step process for accomplishing something. For instance, the processes students learn in grade school for adding and multiplying numbers by hand are examples of algorithms. In graph theory, there are algorithms to find various important things about a graph, like finding all the cut edges or finding the shortest path between two vertices.

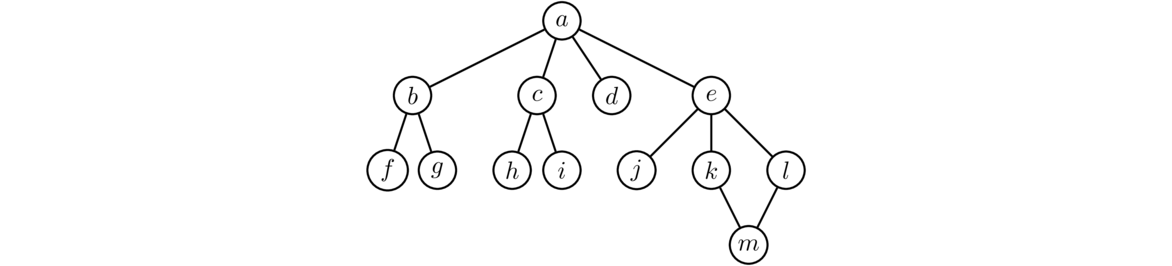

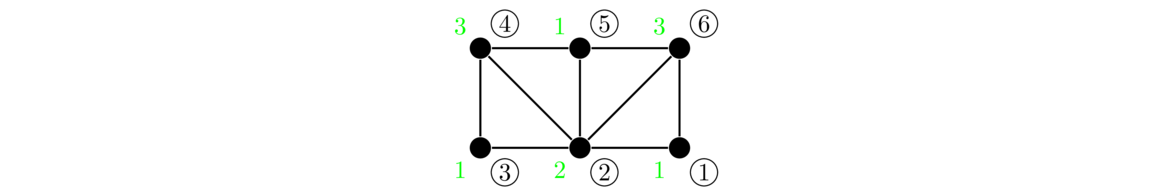

One of the most fundamental tasks on a graph is to visit the vertices in a systematic way. We will look at two related algorithms for this: breadth-first search (BFS) and depth-first search (DFS). The basic idea of each of these is we start somewhere in the graph, then visit that vertex's neighbors, then their neighbors, etc., all the while keeping track of vertices we've already visited to avoid getting stuck in an infinite loop.

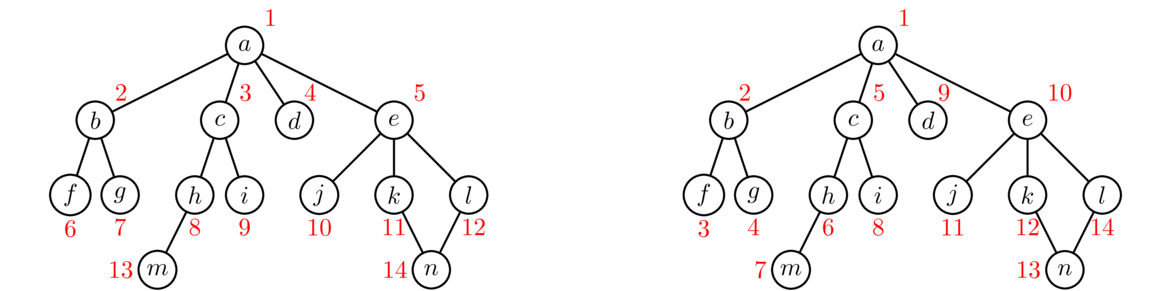

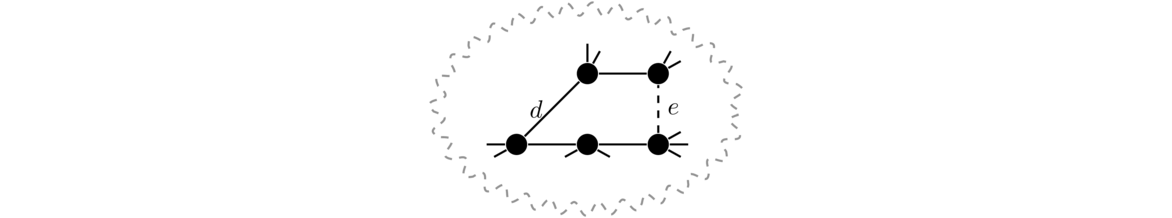

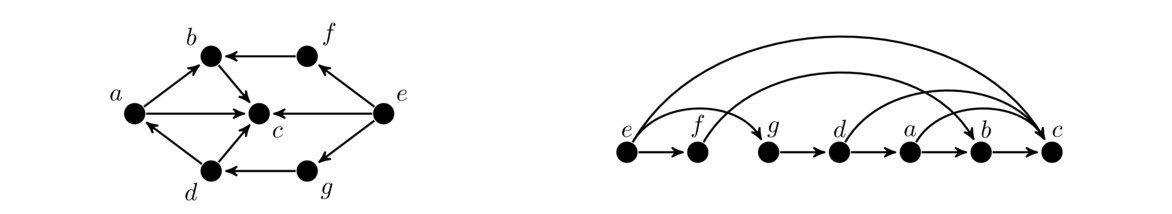

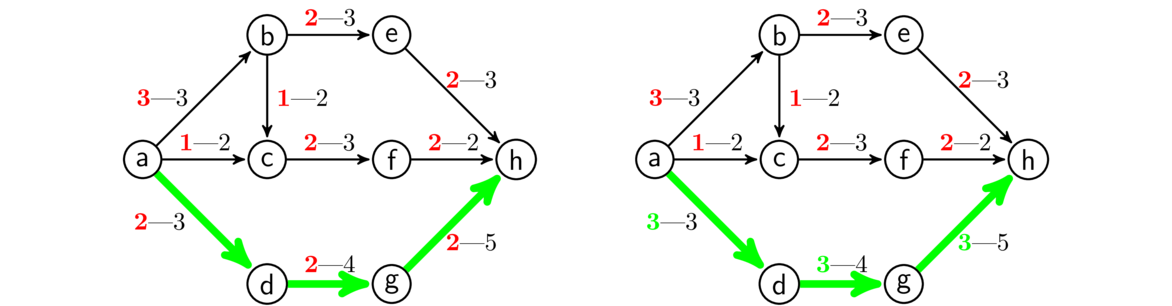

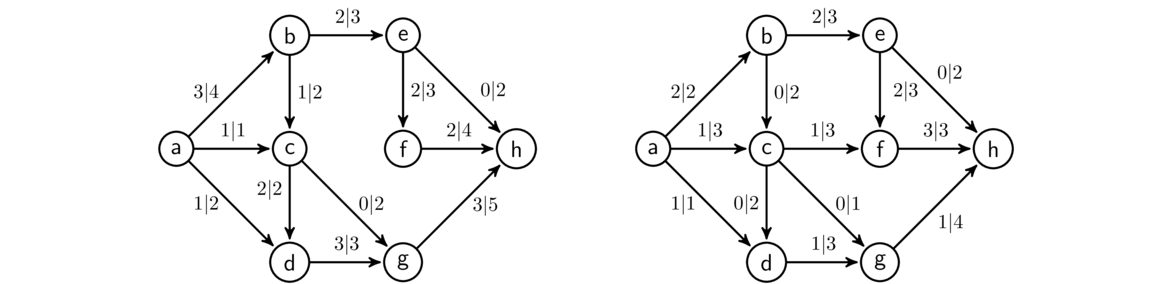

The figure below shows the order in which BFS and DFS visit the vertices of a graph, starting at vertex a. Assume that when choosing between neighbors, it goes with the one that is alphabetically first.

Notice how BFS fans out from vertex a. Every vertex at distance 1 from a is visited before any vertex at distance 2. Then every vertex at distance 2 is visited before any vertex at distance 3. DFS, on the other hand, follows a path down into the graph as far as it can go until it gets stuck. Then it backtracks to its previous location and tries searching from there some more. It continues this strategy of following paths and backtracking.

These are the two key ideas: BFS fans out from the starting vertex, doing a systematic sweep. DFS just goes down into the graph until it gets stuck, and then backtracks. If we are just trying to find all the vertices in a graph, then both BFS and DFS will work equally well. However, if we are searching for vertices with a particular property, then depending on where those vertices are located in relation to the start vertex, BFS and DFS will behave differently. BFS, with its systematic search, will always find the closest vertex to the starting vertex. However, because of its systematic sweep, it might take BFS a while before it gets to vertices far from the starting vertex. DFS, on the other hand, can very quickly get far from the start, but will often not find the closest vertex first.

Code for BFS

In both BFS and DFS, we start at a given vertex and see what we can find from there. The searching process consists of visiting a vertex, looking at its neighbors, and adding any new neighbors to two separate lists. One list (actually a set) is for keeping track of what vertices we have already found. The other list keeps track of the order we will visit vertices to see what their neighbors are. Here is some code implementing BFS:

    def bfs(G, start):
        found = {start}
        waiting = [start]
        while waiting:
            w = waiting.pop(0)
            for n in G[w]:
                if n not in found:
                    found.add(n)
                    waiting.append(n)
        return found

The function takes two parameters: a graph G and a vertex start, which is the vertex we start the search at. We maintain a set called found that remembers all the vertices we have found, and we maintain a list called waiting that keeps track of the vertices we will be visiting. It acts like a queue. Initially, both start out with only start in them. We then continually do the following: pop off a vertex from the waiting list and loop through its neighbors, adding any neighbor we haven't already found into both the found set and waiting list.

The while loop keeps running as long as the waiting list is not empty, i.e. as long as there are still vertices we haven't explored. At the end, we return all the vertices that were discovered by the search.

Let's run BFS on the graph below, starting at vertex a. The first thing the algorithm does is add each of the neighbors of a to the waiting list and found set. So at this point, the waiting list is [b,c,d,e]. BFS always visits the first vertex in the waiting list, so it visits b next and adds its neighbors to the list and set. So now the waiting list is [c,d,e,f,g]. For the next step, we take the first thing off the waiting list, which is c and visit it. We add its neighbors h and i to the list and set. This gives us a waiting list of [d,e,f,g,h,i]. As the process continues, the waiting list will start shrinking and the found set will eventually include all the vertices.

Note that the code has been deliberately kept small. It has the effect of finding all the vertices that can be reached from the starting vertex. We will soon see how to modify this code to do more interesting things.

Also note that we could use a list for found instead of a set, but the result would be substantially slower for large graphs. The part where we check to see if a vertex is already in the found set is the key. The algorithm Python uses to check if something is in a set is much faster than the corresponding list-checking algorithm.
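A similar speed concern applies to the waiting list: popping from the front of a Python list takes time proportional to the length of the list. One possible variation, sketched below, swaps in collections.deque for the queue and also records each vertex's distance from the start. This is an alternative to the code above, not a replacement for it, and the small example graph is made up for illustration.

```python
from collections import deque

def bfs_distances(G, start):
    """Map each vertex reachable from start to its distance from start."""
    dist = {start: 0}          # doubles as the found set
    waiting = deque([start])   # a queue with fast pops from the left end
    while waiting:
        w = waiting.popleft()
        for n in G[w]:
            if n not in dist:
                dist[n] = dist[w] + 1
                waiting.append(n)
    return dist

G = {'a': {'b', 'c'}, 'b': {'a', 'd'}, 'c': {'a', 'd'},
     'd': {'b', 'c', 'e'}, 'e': {'d'}}
dist = bfs_distances(G, 'a')
print(dist['b'], dist['c'], dist['d'], dist['e'])  # 1 1 2 3
```

Since dictionaries remember insertion order, the keys of dist are in the order BFS found the vertices, and the distances never decrease along that order.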

Code for DFS

One way to implement DFS is to use the exact same code as BFS except for one extremely small change: Use waiting.pop() instead of waiting.pop(0). Everything else stays the same. Using waiting.pop() changes the order in how we choose which vertex to visit next. DFS visits the most recently added vertex, while BFS visits the earliest added vertex. BFS treats the list of vertices to visit as a queue, while DFS treats the list as a stack. Here is the code:

    def dfs(G, start):
        found = {start}
        waiting = [start]
        while waiting:
            w = waiting.pop()
            for n in G[w]:
                if n not in found:
                    found.add(n)
                    waiting.append(n)
        return found

Though it works, it's probably not the best way to implement DFS. Recall that DFS works by going as far down into the graph as it can until it gets stuck, then backing up one step, going further along a different path until it gets stuck, backing up again, etc. One benefit of this approach is that it doesn't require too much memory. The search just needs to remember info about the path it is currently on. The code above, on the other hand, adds all the neighbors of a vertex into the waiting list as soon as it finds them. This means the waiting list can grow fairly large, like in BFS. Below is a different approach that avoids this problem.

    def dfs(G, start):
        waiting = [start]
        found = {start}
        while waiting:
            w = waiting[-1]
            N = [n for n in G[w] if n not in found]
            if len(N) == 0:
                waiting.pop()
            else:
                n = N[0]
                found.add(n)
                waiting.append(n)
        return found

You'll often see DFS implemented recursively. It's probably more natural to implement it that way. Here is a short function to do just that:

    def dfs(G, w, found):
        found.add(w)
        for x in G[w]:
            if x not in found:
                dfs(G, x, found)

We have to pass it a set to hold all the vertices it finds. For instance, if we're starting a search at a vertex labeled 'a', we could create the set as S = {'a'} and then call dfs(G, 'a', S).
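If having to create the set beforehand feels awkward, one option is to wrap the recursion in an outer function. The sketch below does that and also records the order in which vertices are visited, trying neighbors in alphabetical order. The name dfs_order and the example graph are assumptions made for this illustration.

```python
def dfs_order(G, start):
    """Return the vertices reachable from start in the order a recursive DFS visits them."""
    order = []
    found = set()

    def visit(w):
        found.add(w)
        order.append(w)
        for x in sorted(G[w]):   # alphabetical tie-breaking between neighbors
            if x not in found:
                visit(x)

    visit(start)
    return order

G = {'a': {'b', 'c'}, 'b': {'a', 'd'}, 'c': {'a', 'd'},
     'd': {'b', 'c', 'e'}, 'e': {'d'}}
print(dfs_order(G, 'a'))  # ['a', 'b', 'd', 'c', 'e']
```

Notice that the search runs from a to b to d to c before backing up to d and moving on to e. One caution: Python limits how deeply functions can recurse, so on graphs with very long paths a recursive search like this can fail, and the loop-based versions are safer there.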

Applications of BFS and DFS

The algorithms as presented above act as something we can build on for more complex applications. Small modifications to them will allow us to find a number of things about a graph, such as a shortest path between two vertices, the graph's components, its cut edges, and whether the graph contains any cycles.

As an example, here is a modification of BFS to determine if there is a path between two vertices in a graph:

    def is_connected_to(G, u, v):
        found = {u}  # a set, so that membership checks are fast
        waiting = [u]
        while waiting:
            w = waiting.pop(0)
            for x in G[w]:
                if x == v:
                    return True
                elif x not in found:
                    found.add(x)
                    waiting.append(x)
        return False

The main change is when we look at neighbors, if the vertex we are looking for shows up, then we stop immediately and return True. If we get all the way through the search and haven't found the vertex, we return False.

Shortest path

Here is another example, this one a modification to BFS to return the shortest path between two vertices in a graph. The key difference between this code and the BFS code is that we replace the found set with a Python dictionary that keeps track of each vertex's parent. That is, instead of keeping track only of the vertices that we have found, we also keep track of what vertex we were searching from at the time. This allows us, once we find the vertex we are looking for, to backtrack from vertex to vertex to give the exact path.

    def shortest_path(G, u, v):
        waiting = [u]
        found = {u: None}  # A dictionary instead of a set
        while waiting:
            w = waiting.pop(0)
            for x in G[w]:
                if x == v:  # If we've found our target, then backtrack to get path
                    Path = [x]
                    x = w
                    while x is not None:
                        Path.append(x)
                        x = found[x]
                    Path.reverse()
                    return Path
                if x not in found:
                    found[x] = w
                    waiting.append(x)
        return []  # return an empty list if there is no path between the vertices

Note also that we use BFS here and not DFS. Remember that BFS always searches all the vertices at distance 1, followed by all the vertices at distance 2, etc. So whatever path it finds is guaranteed to be the shortest. Replacing BFS with DFS here would still give us a path between the vertices, but it could be long and meandering.

Components

The basic versions of BFS and DFS that we presented return all the vertices in the same component as the starting vertex. With a little work, we can modify this to return a list of all the components in the graph. We do this by looping over all the vertices in the graph and running a BFS or DFS using each vertex as a starting vertex. Of course, once we know what component a vertex belongs to, there is no sense in running a search from it, so in our code we'll skip vertices that we have already found. Here is the code:

    def components(G):
        component_list = []
        found = set()
        for w in G:
            if w in found:  # skip vertices whose components we already know
                continue
            # now run a BFS starting from vertex w
            found.add(w)
            component = [w]
            waiting = [w]
            while waiting:
                v = waiting.pop(0)
                for u in G[v]:
                    if u not in found:
                        waiting.append(u)
                        component.append(u)
                        found.add(u)
            component_list.append(component)
        return component_list

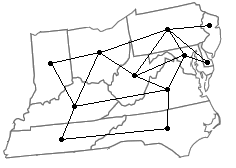

Applications of graphs

Graphs lend themselves to solving a wide variety of problems. We will see a number of applications in later chapters. In this section we introduce a few applications of material we have already covered.

Graph theory for solving an old puzzle

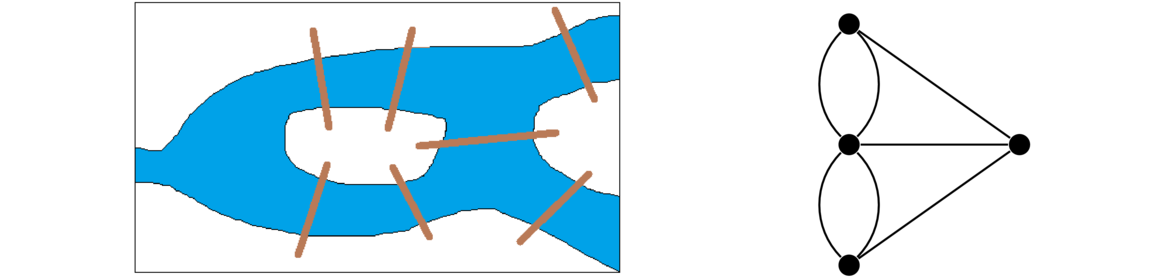

This first application doesn't really apply to the real world, but it does demonstrate how we can model something with a graph. It is about the following puzzle that is over 1000 years old:

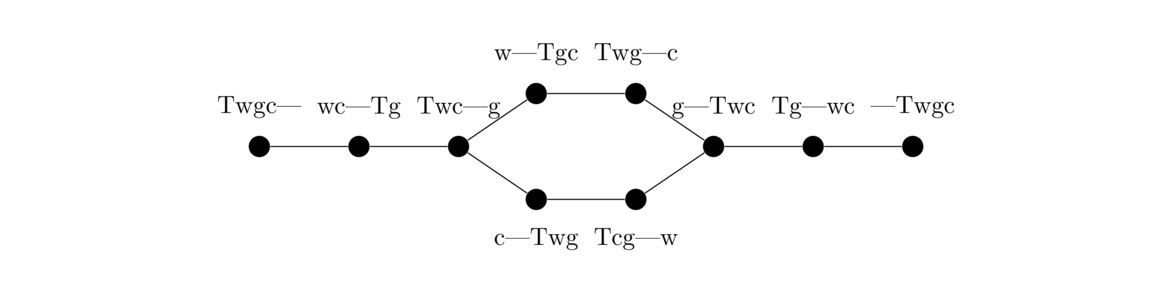

A traveler has to get a wolf, a goat, and a cabbage across a river. The problem is that the wolf can't be left alone with the goat, the goat can't be left alone with the cabbage, and the boat can only hold the traveler and a single animal/vegetable at once. How can the traveler get all three items safely across the river?

To model this problem with a graph, it helps to think of the possible “states” of the puzzle. For example, the starting state consists of the traveler, wolf, goat, and cabbage all on one side of the river with the other side empty. We can represent this state by the string “Twgc|”, where T, w, g, and c stand for the traveler, wolf, goat, and cabbage. The vertical line represents the river. The state where the wolf and cabbage are on the left side of the river and the traveler and goat are on the right side would be represented by the string “wc|Tg”.

To model this as a graph, each state/string becomes a vertex, and we have an edge between two vertices provided it is possible, following the rules of the puzzle, to move from the state represented by the one vertex to the state represented by the other in one crossing. For instance, we would have an edge between Twgc| and wc|Tg, because we can get from one to the other in one crossing by sending the traveler and the goat across the river. Shown below is the graph we obtain.

We have omitted impossible states, like wg|Tc, from the graph above. Once we have a graph representation, we could use an algorithm like DFS or BFS to search for a path from the starting state, Twgc|, to the ending state, |Twgc. Now obviously that would be overkill for this problem, but it could be used for a more complex puzzle.
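Overkill or not, it is a nice small example of searching a state graph. The sketch below is one way it might be done: instead of building the whole graph ahead of time, it generates the neighbors of a state on the fly and runs a BFS that remembers parents. Representing a state as the set of things on the left bank, along with all the function names, is an assumption made for this example.

```python
from collections import deque

EVERYTHING = set('Twgc')

def is_safe(left):
    """A bank without the traveler can't hold wolf+goat or goat+cabbage."""
    right = EVERYTHING - left
    for bank in (left, right):
        if 'T' not in bank and ({'w', 'g'} <= bank or {'g', 'c'} <= bank):
            return False
    return True

def neighbors(left):
    """States reachable in one crossing: the traveler goes alone or with one item."""
    here = left if 'T' in left else EVERYTHING - left  # the traveler's bank
    for cargo in [None] + [x for x in 'wgc' if x in here]:
        moving = {'T'} | ({cargo} if cargo else set())
        new_left = left - moving if 'T' in left else left | moving
        if is_safe(new_left):
            yield frozenset(new_left)

def solve():
    """BFS from everything-on-the-left to nothing-on-the-left, tracking parents."""
    start, goal = frozenset(EVERYTHING), frozenset()
    parent = {start: None}
    waiting = deque([start])
    while waiting:
        s = waiting.popleft()
        if s == goal:
            path = []
            while s is not None:
                path.append(s)
                s = parent[s]
            return path[::-1]
        for t in neighbors(s):
            if t not in parent:
                parent[t] = s
                waiting.append(t)
    return []

def show(left):
    """Write a state in the Twgc| style used in the text."""
    right = EVERYTHING - left
    return (''.join(x for x in 'Twgc' if x in left) + '|' +
            ''.join(x for x in 'Twgc' if x in right))

for state in solve():
    print(show(state))
```

The path it prints starts at Twgc|, moves to wc|Tg, and reaches |Twgc after 7 crossings.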

For example, you may have seen the following water jug problem: You have two jugs of certain sizes, maybe a 7-gallon and an 11-gallon jug, and you want to use them to get exactly 6 gallons of water. You are only allowed to (1) fill a jug to the top, (2) dump a jug out completely, or (3) pour one jug into the other until the first jug is empty or the second is full. There is no estimating allowed.

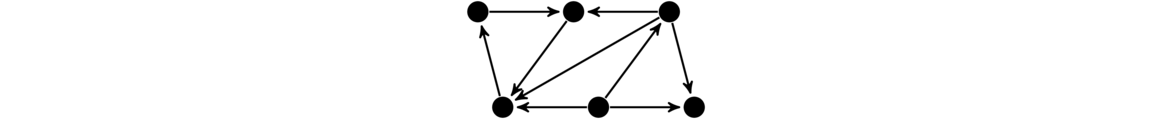

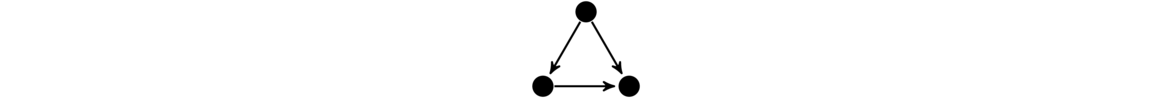

To model this as a graph, the vertices are states which can be represented as pairs (x,y) indicating the amount of water currently in the two jugs. Edges represent valid moves. For instance, from the state (5,3), one could go to (0,3) or (5,0) by dumping out a jug, one could go to (7,3) or (5,11) by filling a jug up, or one could go to (0,8) by pouring the first into the second or to (7,1) by pouring the second into the first. One note is that these edges are one-way edges. We will cover those later when we talk about digraphs. But the main point is that with a little work, we can program in all the states and the rules for filling and emptying jugs, and then run one of the searching algorithms to find a solution.

This technique is useful in a number of other contexts. For instance, many computer chess-playing programs create a graph representing a game, where the states represent where all the pieces are on the board, and edges represent moves from one state to another. The program then searches as far out in the graph as it can go (it's a big graph), ranking each state according to some criteria, and then choosing its favorite state to move to.
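Here is a rough sketch of what that program could look like for the 7-gallon and 11-gallon jugs. The function names jug_moves and solve_jugs are invented for this example, and the search is a BFS that tracks parents so the sequence of states can be read back.

```python
from collections import deque

def jug_moves(state, sizes):
    """All states reachable from `state` in one fill, dump, or pour."""
    x, y = state
    a, b = sizes
    pour_xy = min(x, b - y)   # how much fits when pouring first into second
    pour_yx = min(y, a - x)   # how much fits when pouring second into first
    return {
        (a, y), (x, b),                 # fill one jug to the top
        (0, y), (x, 0),                 # dump one jug out
        (x - pour_xy, y + pour_xy),     # pour first into second
        (x + pour_yx, y - pour_yx),     # pour second into first
    } - {state}

def solve_jugs(sizes, target):
    """Shortest list of states from two empty jugs to one holding `target`."""
    start = (0, 0)
    parent = {start: None}
    waiting = deque([start])
    while waiting:
        s = waiting.popleft()
        if target in s:
            path = []
            while s is not None:
                path.append(s)
                s = parent[s]
            return path[::-1]
        for t in jug_moves(s, sizes):
            if t not in parent:
                parent[t] = s
                waiting.append(t)
    return []

print(solve_jugs((7, 11), 6))
```

Because moves can't always be undone, jug_moves describes the one-way edges mentioned above; BFS works the same way on them.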

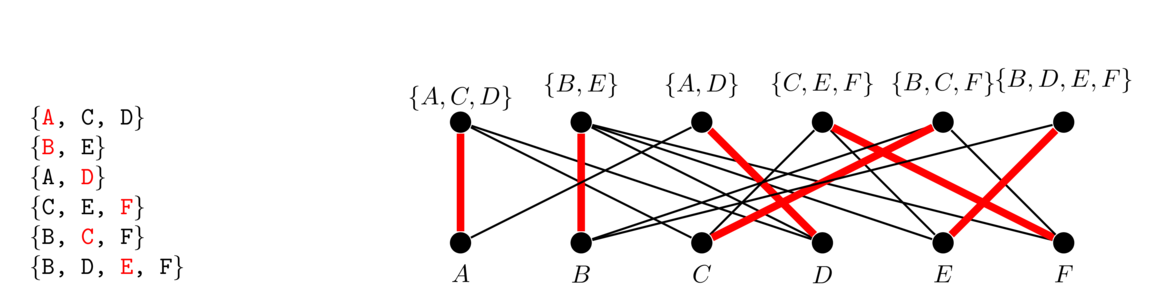

Graphs and movies

From the Internet Movie Database (IMDb), it is possible to get a file that contains a rather large number of movies along with the people that have acted in each movie. A fun question to ask is to find a path from one actor X to another Y, along the lines of X acted in a movie with A who acted in a movie with B who acted in a movie with Y. There are all sorts of things we could study about this, such as whether it is always possible to find such a path or what the length of the shortest path is.

We can model this as a graph where each actor gets a vertex and each movie also gets a vertex. We then add edges between actors and the movies they acted in. The file is structured so that on each line there is a movie name followed by all the actors in that movie. The entries on each line are separated by slashes, like below:

Princess Bride, The (1987)/Guest, Christopher (I)/Gray, Willoughby/Cook, ...

Here is some code that parses through the file and fills up the graph:

    G = Graph()
    with open('movies.txt') as f:  # closes the file when the loop is done
        for line in f:
            line = line.strip()
            L = line.split('/')
            G.add(L[0])
            for x in L[1:]:
                if x not in G:
                    G.add(x)
                G.add_edge(L[0], x)

We can then use a modified BFS to find the shortest path between one actor and another. Asking whether it is always possible to find a path is the same as asking whether the graph is connected. It turns out not to be, but it is “mostly” connected. That is, we can run a variation of BFS or DFS to find the components of the graph, and it turns out that the graph has one very large component, containing almost all of the actors and movies you have ever heard of. The graph contains a few dozen other small components, corresponding to isolated movies all of whose actors appeared only in that movie and nowhere else.
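To try the idea without the real file, here is a small self-contained sketch. The three lines of data are made-up placeholders in the same slash-separated layout, and the function movie_links is a hypothetical helper that counts how many movies lie on a shortest chain between two actors.

```python
from collections import deque

# Made-up placeholder data, not taken from the real file.
lines = [
    'Movie 1/Actor A/Actor B',
    'Movie 2/Actor B/Actor C',
    'Movie 3/Actor D',
]

G = {}
for line in lines:
    movie, *actors = line.strip().split('/')
    G.setdefault(movie, set())
    for actor in actors:
        G.setdefault(actor, set())
        G[movie].add(actor)
        G[actor].add(movie)

def movie_links(G, x, y):
    """Number of movies on a shortest chain from actor x to actor y, or None."""
    dist = {x: 0}
    waiting = deque([x])
    while waiting:
        w = waiting.popleft()
        if w == y:
            return dist[w] // 2   # paths alternate actor, movie, actor, ...
        for n in G[w]:
            if n not in dist:
                dist[n] = dist[w] + 1
                waiting.append(n)
    return None

print(movie_links(G, 'Actor A', 'Actor C'))  # 2
print(movie_links(G, 'Actor A', 'Actor D'))  # None
```

The second call returns None because Actor D sits in a small component of its own, like the isolated movies described above.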

Word ladders

A word ladder is a path from one word to another by changing one letter at a time, with each intermediate step being a real word. For instance, one word ladder from this to that is this → thin → than → that.

We can model this as a graph whose vertices are words, with edges whenever two words differ by a single letter. Here is the code to build up a graph of four-letter words. For it, you'll need to find a list of words with one word per line. There are many such lists online.

    with open('wordlist.txt') as f:
        words = [line.strip() for line in f]
    words = set(w for w in words if len(w)==4)
    alpha = 'abcdefghijklmnopqrstuvwxyz'
    G = Graph()
    for w in words:
        G.add(w)
    for w in words:
        for i in range(len(w)):
            for a in alpha:
                n = w[:i] + a + w[i+1:]
                if n in words and n != w:
                    G.add_edge(w, n)

The trickiest part of the code is the part that adds the edges. To do that, for each word in the list, we try changing each letter of the word to all possible other letters, and add an edge whenever the result is a real word. Once the graph is built, we can call one of the searches to find word ladders, like below:

    print(shortest_path(G, 'wait', 'lift'))

It is also interesting to look at the components of this graph. The list of words I used had 2628 four-letter words. The graph has 103 components. One of those components is 2485 words long, indicating that there is a word ladder possible between most four-letter words. Of the other components, 82 of them had just one word. Words in these components include void, hymn, ends, and onyx. The remaining components contain between 2 and 6 words, the largest of which contains the words achy, ache, ashy, acme, acne, and acre.
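There is more than one way to find the edges of this graph. As an alternative to trying every letter in every position, the sketch below groups words by "wildcard patterns" such as th_s, so that two words land in the same group exactly when they differ in that one position. This is a different technique from the code above, shown on a tiny made-up word list, and the name word_graph is invented here. It assumes the words contain no underscores.

```python
from collections import defaultdict
from itertools import combinations

def word_graph(words):
    """Join two words when they have the same length and differ in one position."""
    buckets = defaultdict(list)
    for w in set(words):
        for i in range(len(w)):
            # blank out position i; words sharing a pattern differ only there
            buckets[w[:i] + '_' + w[i+1:]].append(w)
    G = {w: set() for w in words}
    for group in buckets.values():
        for u, v in combinations(group, 2):
            G[u].add(v)
            G[v].add(u)
    return G

G = word_graph(['this', 'thin', 'than', 'that', 'then', 'hymn'])
print(sorted(G['thin']))  # ['than', 'then', 'this']
print(G['hymn'])          # set()
```

In this small list, hymn has no neighbors, so it forms a component by itself.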

Real applications

The applications presented here are of more of a fun than a practical nature, but don't let that fool you. Graph theory is applied to real problems in many fields. Here is a very small sample: isomer problems in chemistry, DNA sequencing problems, flood fill in computer graphics, and matching organ donors to recipients. There are also many networking examples such as real and simulated neural networks, social networks, packet routing on the internet, where to locate web servers on a CDN, spread of disease, and network models of viral marketing.

Bipartite Graphs and Trees

Bipartite graphs

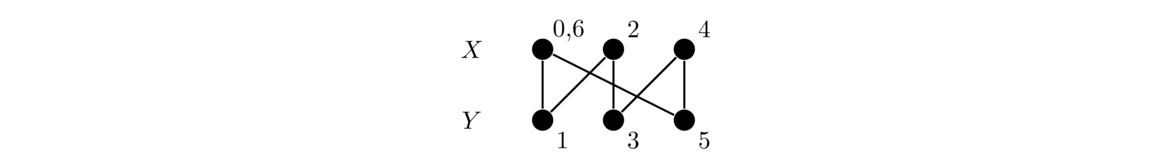

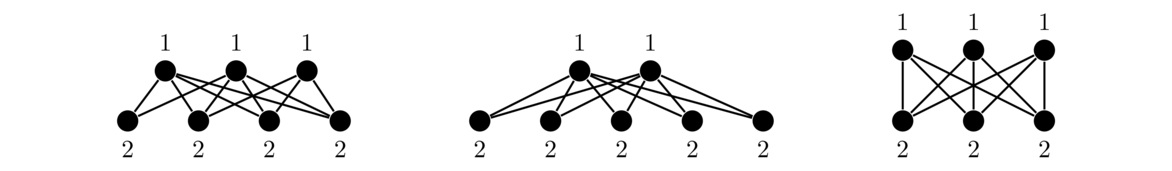

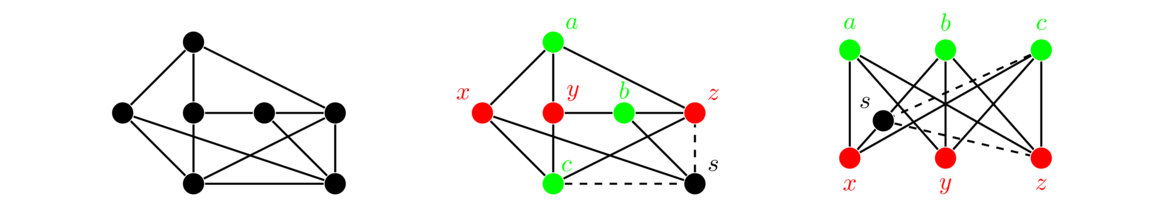

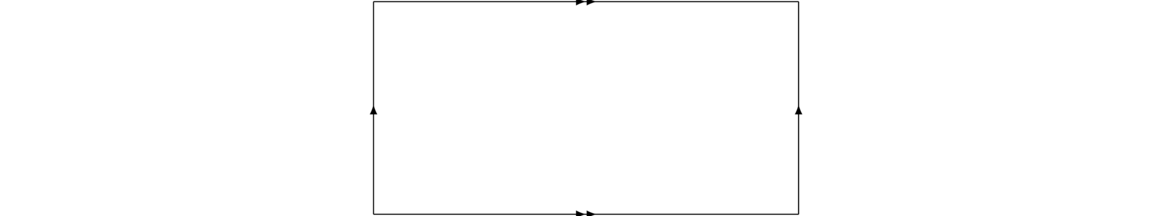

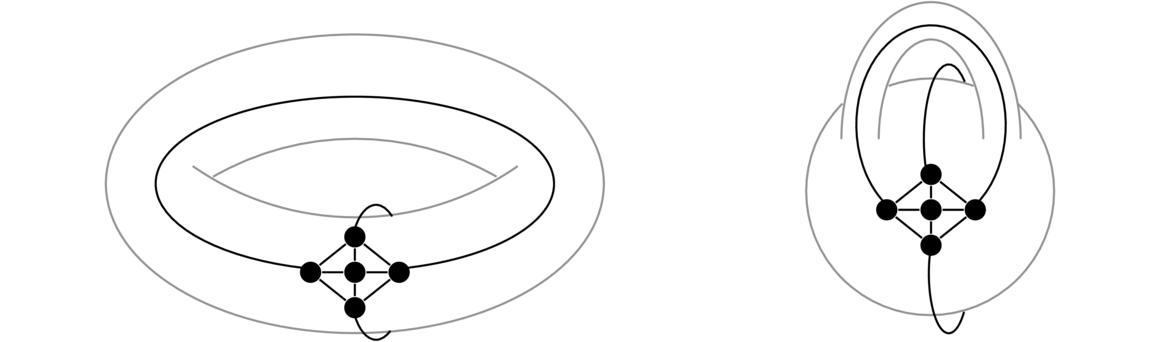

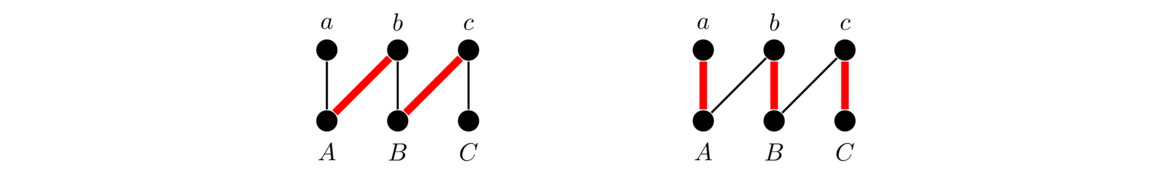

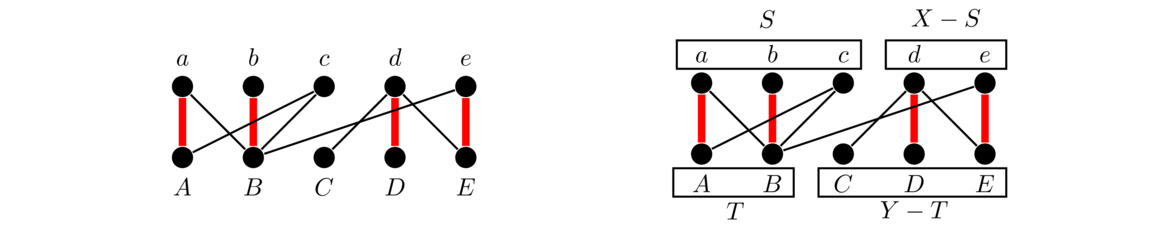

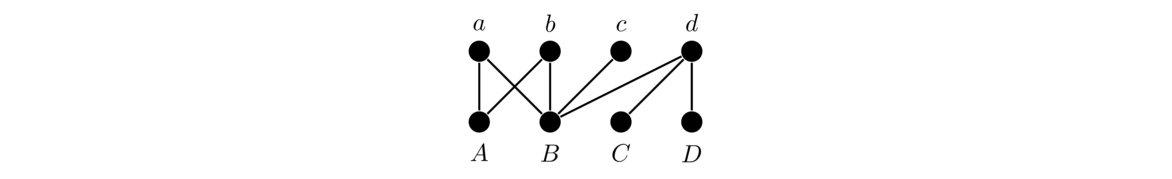

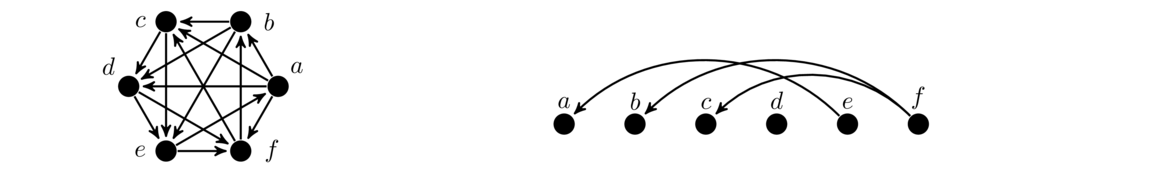

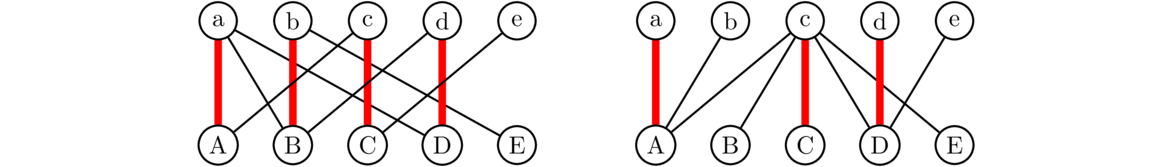

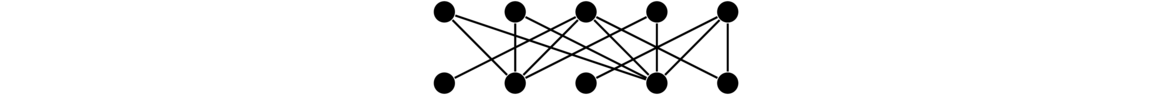

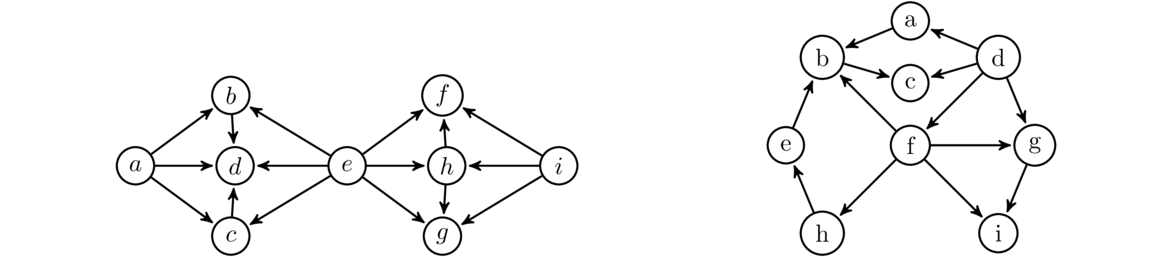

Shown below are a few bipartite graphs.

Notice that the vertices are broken into two parts: a top part and a bottom part. Edges are only possible between the two parts, not within a part, with the top and bottom parts both being independent sets. Here is the formal definition of a bipartite graph:

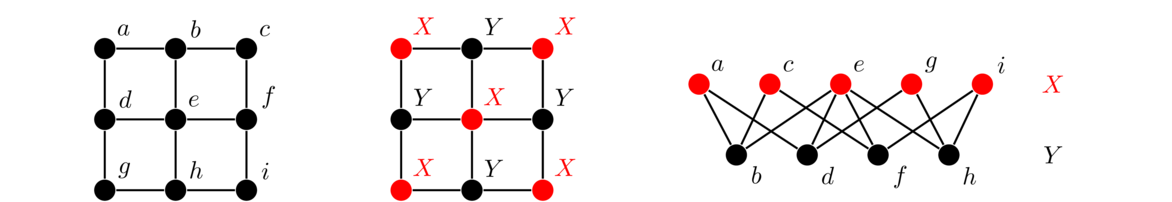

In short, in a bipartite graph, it is possible to break the vertices into two parts, with edges only going between the parts, never inside of one. We typically draw bipartite graphs with the X partite set on top and the Y partite set on the bottom, like in the examples above. However, many graphs that are not typically drawn this way are bipartite. For example, grids are bipartite, as can be seen in the example below.
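For grids, one way to see the two parts is to number the rows and columns and split the vertices according to whether row plus column is even or odd. Here is a quick sketch that checks this on a 3 × 4 grid; the function grid_graph and the coordinate labels are assumptions made for this illustration.

```python
def grid_graph(rows, cols):
    """Grid whose vertices are (row, col) pairs, joined to horizontal and vertical neighbors."""
    G = {(r, c): set() for r in range(rows) for c in range(cols)}
    for (r, c) in G:
        for nbr in ((r + 1, c), (r, c + 1)):
            if nbr in G:
                G[(r, c)].add(nbr)
                G[nbr].add((r, c))
    return G

G = grid_graph(3, 4)
X = {v for v in G if sum(v) % 2 == 0}   # row + column is even
Y = set(G) - X                          # row + column is odd

# Every neighbor of an X vertex is in Y, and every neighbor of a Y vertex is in X.
print(all(G[v] <= Y for v in X) and all(G[v] <= X for v in Y))  # True
```

Moving one step in a grid changes row plus column by exactly 1, which is why every edge goes between the two parts.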

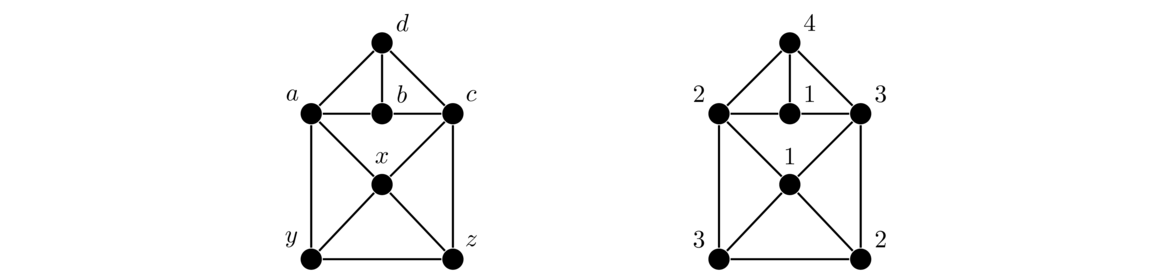

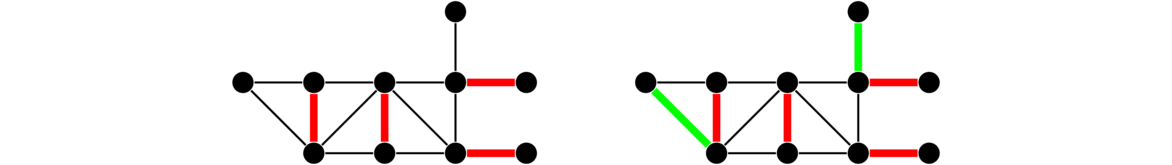

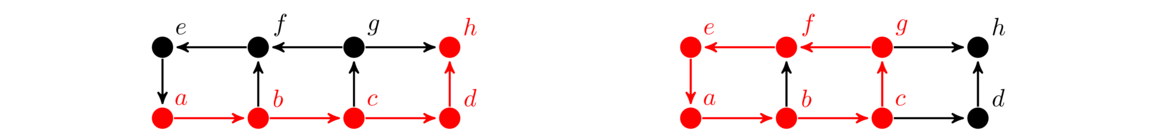

How to tell if a graph is bipartite

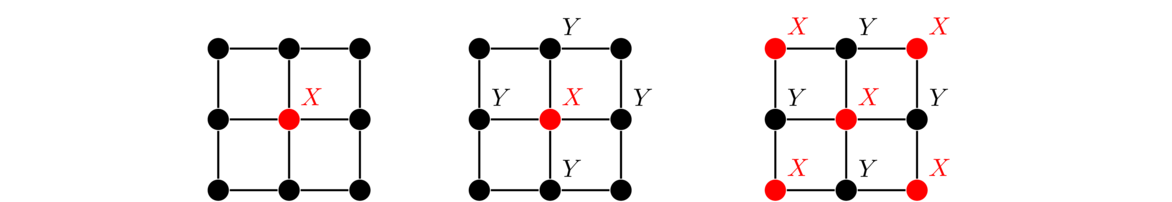

The approach to telling if a graph is bipartite is this: Start at any vertex and label it with an X. Label each of its neighbors with a Y. Label each of their neighbors with an X. Keep doing this, visiting each vertex in the graph, labeling its neighbors with the opposite of its own label. If it ever happens that two adjacent vertices get the same label or that some vertex ends up labeled with both an X and a Y, then the graph is not bipartite. If we get through every vertex without this happening, then the graph is bipartite, with the vertices labeled X and the vertices labeled Y forming the two parts of the bipartition. An example is shown below, starting at the middle vertex.

This can be coded using the BFS/DFS code from the last chapter to visit the vertices.
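Here is one way that might look, sketched with BFS on a dictionary of neighbor sets. The function name bipartition is made up for this example. It returns the two parts if the labeling succeeds and None if two adjacent vertices ever end up with the same label, and it restarts the labeling in each component so that disconnected graphs are handled too.

```python
from collections import deque

def bipartition(G):
    """Return (X, Y) if G is bipartite, or None if it is not."""
    label = {}
    for start in G:
        if start in label:        # this component is already labeled
            continue
        label[start] = 'X'
        waiting = deque([start])
        while waiting:
            w = waiting.popleft()
            for n in G[w]:
                if n not in label:
                    # give each new neighbor the opposite label
                    label[n] = 'Y' if label[w] == 'X' else 'X'
                    waiting.append(n)
                elif label[n] == label[w]:
                    return None   # two adjacent vertices share a label
    X = {v for v in label if label[v] == 'X'}
    return X, set(label) - X

C4 = {0: {1, 3}, 1: {0, 2}, 2: {1, 3}, 3: {2, 0}}
C5 = {v: {(v - 1) % 5, (v + 1) % 5} for v in range(5)}
print(bipartition(C4))  # ({0, 2}, {1, 3})
print(bipartition(C5))  # None
```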

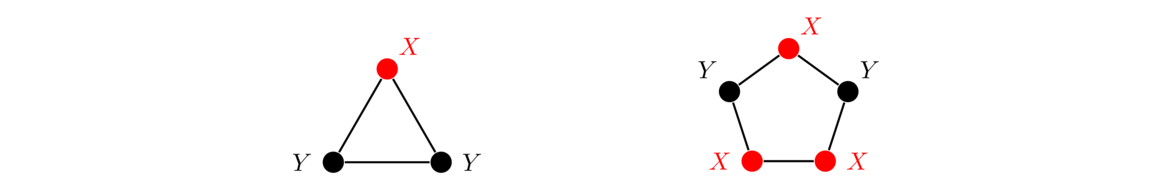

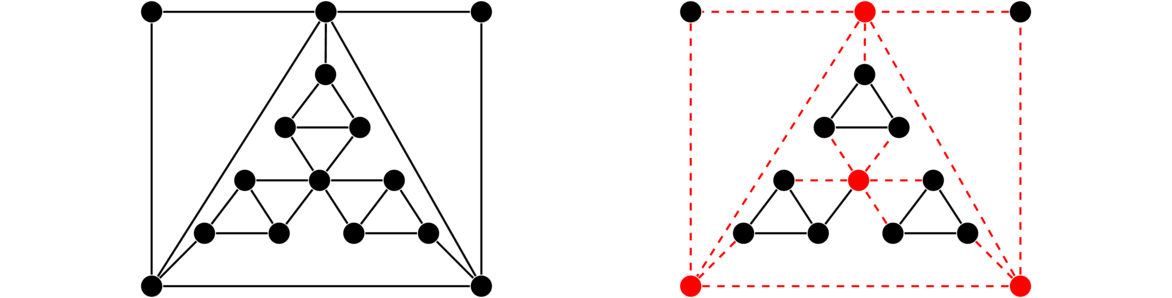

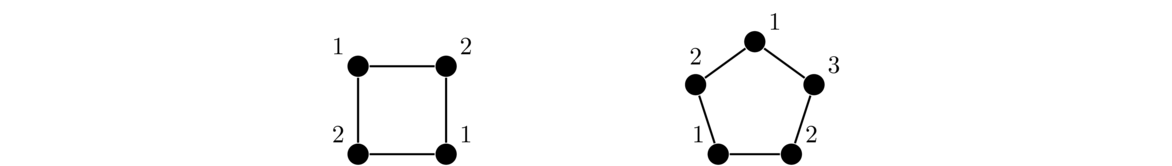

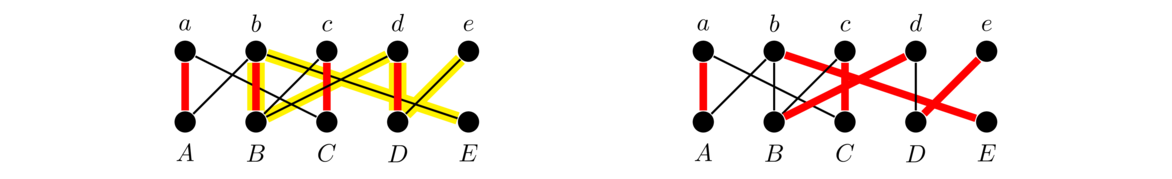

Suppose we try the algorithm on the triangle C3. We start by labeling the top vertex with an X. Then its two neighbors get labeled with Y, and we have a problem because they are adjacent. A similar problem happens with C5. See below.

In fact, any odd cycle suffers from this problem, meaning that no odd cycle can be bipartite.

So bipartite graphs can't have odd cycles. What is remarkable is that odd cycles are the only thing bipartite graphs can't have. Namely, any graph without any odd cycles must be bipartite.

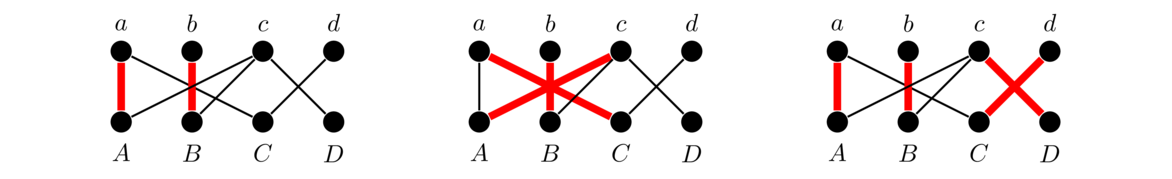

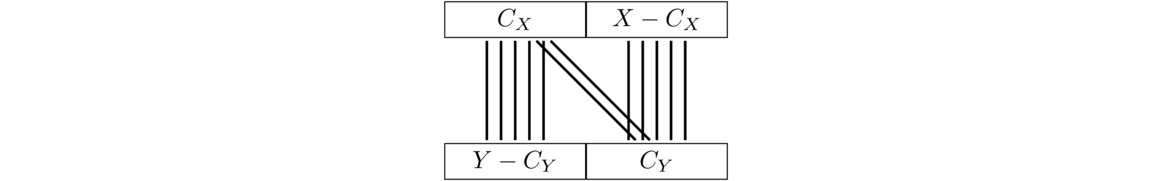

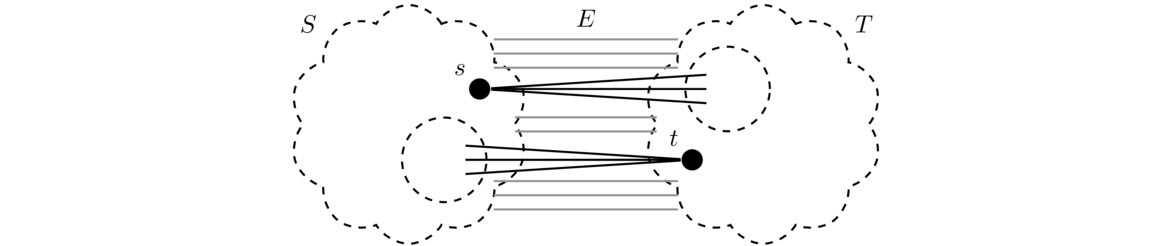

Now suppose we have a graph with no odd cycles. Pick any component of the graph and pick any vertex a in that component. Let X be all the vertices in the component at an even distance from a and let Y be all the vertices in the component at an odd distance from a. We claim this forms a bipartition of the component. To show this, it suffices to show there are no edges within X or within Y.

Suppose there were such an edge between vertices u and v, with both in X or both in Y. So u and v are both either at an even distance from a or both at an odd distance from a. Recall that the distance between vertices is the length of the shortest path between them. Consider the shortest paths from a to u and from a to v. These paths might share some vertices. Let b be the last vertex those paths have in common (which might possibly just be a itself). The parts of the two paths that go from a to b must have the same length or else we could use the shorter one to shorten the other's path from a to either u or v, contradicting that it's already the shortest.

The path from b to u, followed by the edge uv, followed by the path from v back to b, forms a cycle. Its length is 1 plus the distance from b to u plus the distance from b to v. Since the distances from a to u and from a to v are both even or both odd, and the two paths from a to b have the same length, the distances from b to u and from b to v must both be odd or both be even. That means their sum is even, so the cycle has odd length. This is a contradiction, so there cannot be any edge within X or within Y. Thus X and Y form a bipartition of the component. We can do this same process for every component to get a bipartition of the entire graph.

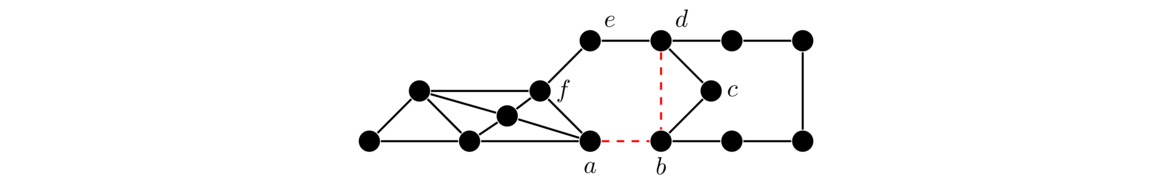

The picture below shows an example of the odd cycle created in the second part of the proof.

The second part of the proof relies on a clever idea, that of picking a vertex and breaking the graph into two groups: vertices that are an even distance from it and vertices that are an odd distance from it. If you want to remember how the proof goes, just remember that key idea, and the rest consists of a little work to make sure that the idea works. Many theorems in graph theory (and math in general) are like this, where there is one big idea that the proof revolves around. Learn that idea and you can often work out the other small details to complete the proof.

Usually when there is a nice characterization of something in graph theory like this, there is a simple algorithm that goes along with it. That is the case here. Though we won't do it here, it's not too hard to adapt the proof above to show that the algorithm of the previous section correctly tells if a graph is bipartite.

More on bipartite graphs

Bipartite graphs have a number of applications, some of which we will see later.

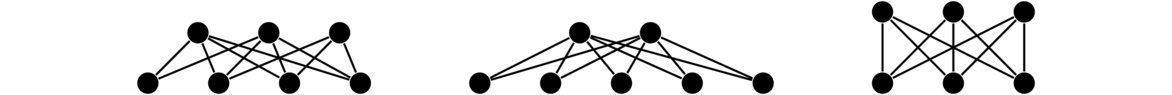

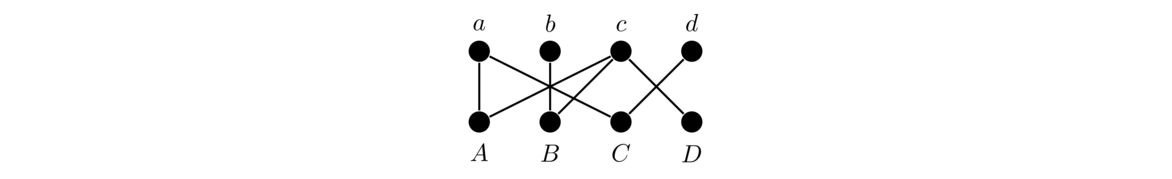

A particularly important class of bipartite graphs are complete bipartite graphs. These are bipartite graphs with all possible edges between the two parts. The notation Km,n denotes a complete bipartite graph with one part of m vertices and the other of n vertices. Shown below are K3,4, K2,5, and K3,3.

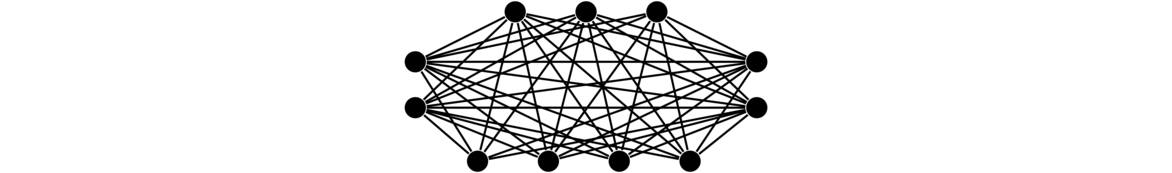

Bipartite graphs are a special case of multipartite graphs, where instead of two partite sets there can be several. For instance, shown below is a complete multipartite graph with four parts.

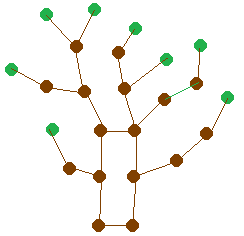

Trees

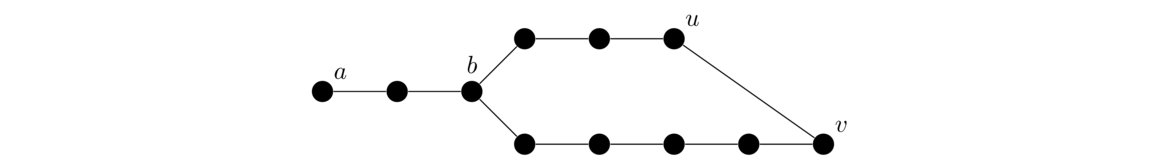

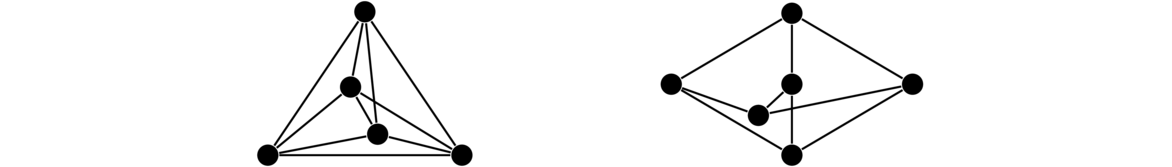

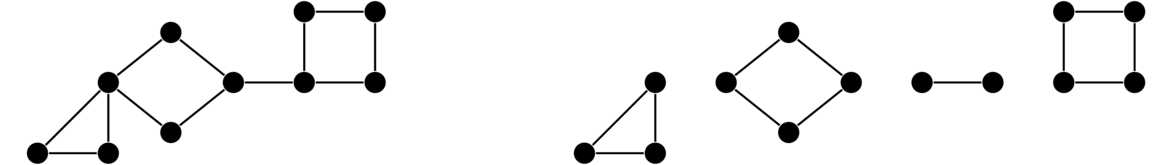

Trees are graphs that have a variety of important applications in math and especially computer science. Shown below are a few trees.

Trees have a certain tree-like branching to them, which is where they get their name. It is a little tricky to define a tree in terms of this branching idea. A simpler definition is the following:

From this definition, the following properties follow:

- In any tree, there is exactly one path from every vertex to every other vertex.

- Trees have the fewest edges a graph can have and still be connected. In particular, every edge of a tree is a cut edge.

- Trees have the most edges a graph can have without having a cycle. In particular, adding any edge to a tree creates exactly one cycle.

- Every tree with n vertices has exactly n-1 edges.

We won't formally prove the first three, but here are a few reasons why they are true:

Regarding the first property, if there were two paths to a vertex, then we could combine them to get a cycle. Regarding the second property, recall that Theorem 6 says cut edges are precisely those edges that don't lie on a cycle. For the third property, if you add an edge between vertices u and v, that would create a cycle involving edge uv and the path that is guaranteed to exist between u and v.

Leaves and induction

The last property of the previous section provides a nice opportunity to use induction. But first, we need the following definition and theorem.

That is, leaves are the vertices that are at the ends of a tree. A leaf has only one neighbor. And every nontrivial tree has a leaf, as stated below.

We will use this fact and induction now to prove that every tree with n vertices has exactly n-1 edges.

The base case of a tree on one vertex with no edges fits the property. Now assume that all trees on n vertices contain n-1 edges. Let T be a tree on n+1 vertices. By Theorem 8, T has a leaf v. The graph T-v has n vertices and is a tree since removing a vertex cannot create a cycle, and removing a leaf cannot disconnect the graph. So by the induction hypothesis, T-v has n-1 edges. Since one edge was removed from T to get T-v, T must have n edges. Thus the result is true by induction.

This technique of pulling off a leaf in an induction proof is useful in proofs involving trees.

Alternate definitions of trees

Any of the tree properties mentioned earlier can themselves be used to reformulate the definition of a tree.

- T is a tree.

- Every pair of vertices of T is connected by exactly one path.

- T is connected and removing any edge disconnects it.

- T has no cycles and adding any edge creates a cycle.

- T is a connected graph with n vertices and n-1 edges.

- T is an acyclic graph with n vertices and n-1 edges.

The proof of any part of this is not difficult and involves arguments similar to those we gave earlier. In addition to these, there are other ways to combine tree properties to get alternate characterizations of trees.

Forests

If we remove connectedness from the definition of a tree, then we have what is called a forest.

So, technically, a tree is also a forest, but most of the time, we think of a forest as a graph consisting of several components, all of which are trees (as you might expect from the name forest). A forest is shown below.

More about trees

Trees and forests are bipartite. By definition, they have no cycles, so they have no odd cycles, making them bipartite by Theorem 7.

Trees have innumerable applications, especially in computer science. There, rooted trees are the focus. These are trees where one vertex is designated as the root and the tree grows outward from there. With a root it makes sense to think of vertices as being parents of other vertices, sort of like in a family tree. Of particular interest are binary trees, where each vertex has at most two children. Binary trees are important in many algorithms of computer science, such as searching, sorting, and expression parsing.

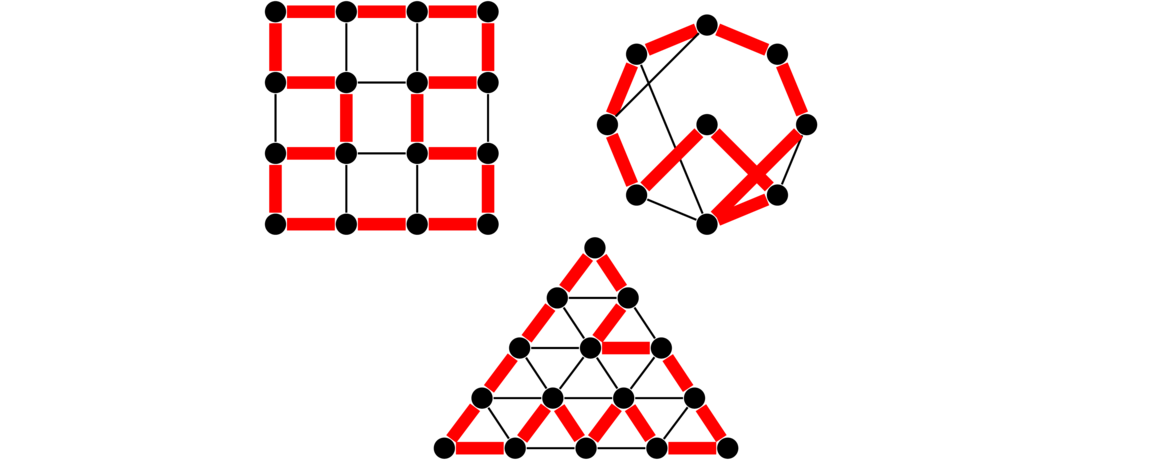

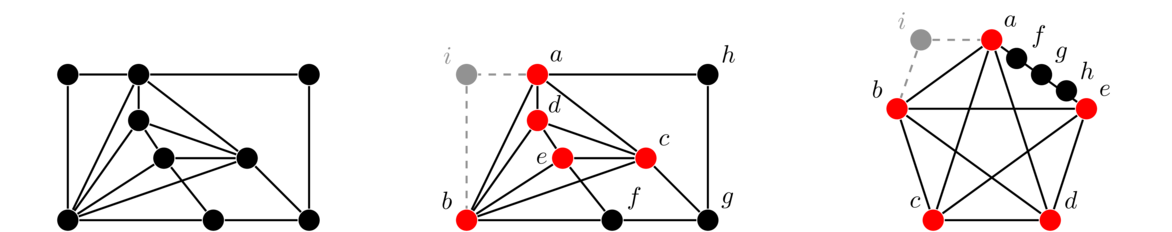

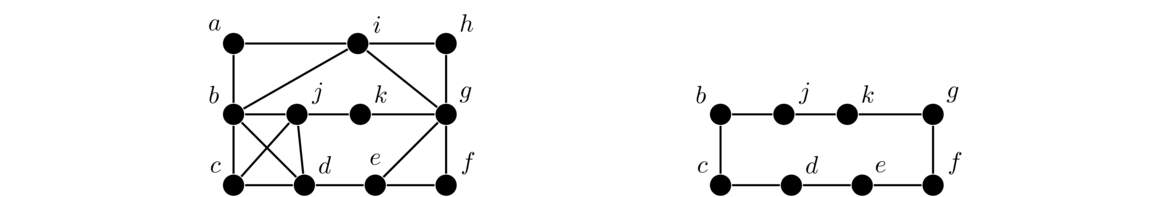

Spanning trees

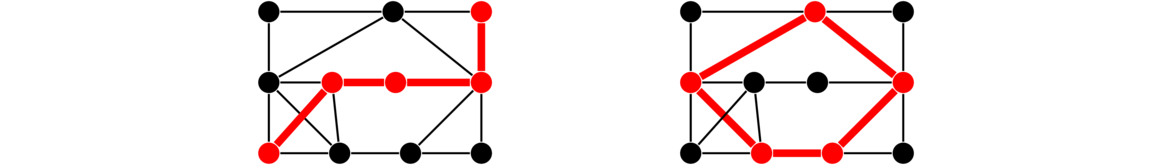

A problem that comes up often is to find a connected subgraph of a graph that uses as few edges as possible. Essentially, we want to be able to get from any vertex to any other, and we want to use as few edges as we can to make this possible. Having a cycle in the subgraph would mean we have a redundant edge, so we don't want any cycles. Thus we are looking for a connected subgraph with no cycles that includes every vertex of the original graph. Such a graph is called a spanning tree. Shown below are two graphs with spanning trees highlighted.

Spanning trees have a number of applications. A nice example shows up in computer network packet routing. Here we have a bunch of computers networked together. Represented as a graph, the computers become vertices and direct connections between two computers correspond to edges. When one computer wants to send a packet of information to another, that packet is transferred between computers on the network until it gets to its destination, in a process called routing. Cycles in that network can cause a packet to bounce around forever. So the computers each run an algorithm designed to find a spanning tree—that is, a subgraph of the network that has no cycles yet still makes it possible to get from any computer to any other computer.

A nice way to find a spanning tree in a graph uses a small variation of the DFS/BFS algorithms we saw earlier. We build up the tree as follows: The first time we see a vertex, we add it to the tree along with the edge to it from the current vertex being searched. If we see the vertex again when searching from another vertex, we don't add the edge that time. Essentially, we walk through the graph and add edges that don't cause cycles until we have reached every vertex. An alternate approach would be to remove edges from the graph until there are no cycles left, making sure to keep the graph connected at all times.
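The first approach can be sketched in a few lines of Python. This is a self-contained BFS version, not the code from earlier in these notes, and it assumes the graph is a dictionary mapping each vertex to a list of its neighbors. The function name `spanning_tree` is made up for this example.

```python
from collections import deque

def spanning_tree(graph, start):
    """Return the edges of a spanning tree of the component containing start."""
    seen = {start}
    tree_edges = []
    queue = deque([start])
    while queue:
        v = queue.popleft()
        for w in graph[v]:
            if w not in seen:              # first time we see w: keep edge vw
                seen.add(w)
                tree_edges.append((v, w))
                queue.append(w)
            # otherwise w was reached earlier, so edge vw is skipped
    return tree_edges

# A 2-by-3 grid: top row 0-1-2, bottom row 3-4-5, plus the three vertical edges.
grid = {0: [1, 3], 1: [0, 2, 4], 2: [1, 5],
        3: [0, 4], 4: [1, 3, 5], 5: [2, 4]}
print(spanning_tree(grid, 0))
```

If the graph is connected, the result has one fewer edge than the graph has vertices, as every tree must. If it isn't connected, we only get a spanning tree of the component containing the starting vertex.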

Counting spanning trees

Mathematicians like to count things, and spanning trees are no exception, especially as there is some interesting math involved. In this section, we will use the notation τ(G) to denote the number of spanning trees of a graph G.

One simple fact is that if T is a tree, then τ(T) = 1. Another relatively simple fact is that τ(Cn) = n. This comes from the fact that leaving off any one of the n edges of a cycle creates a different spanning tree, and removing any more than one edge would not leave a tree.

One other fact is that if a graph has a cut vertex, like in the graph below, then the cut vertex essentially separates the graph into two parts, and the spanning trees of the two parts will have no interaction except through that cut vertex. So we can get the total number of spanning trees by multiplying the numbers of spanning trees on either side of the cut vertex, giving 3 × 4 = 12 in total.

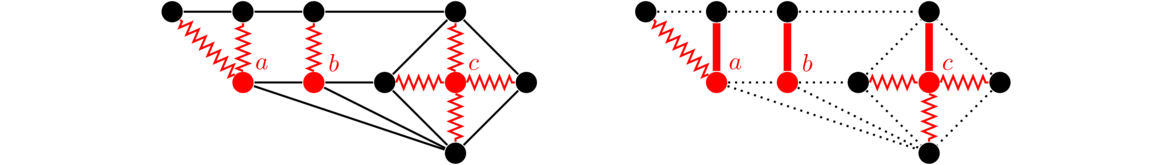

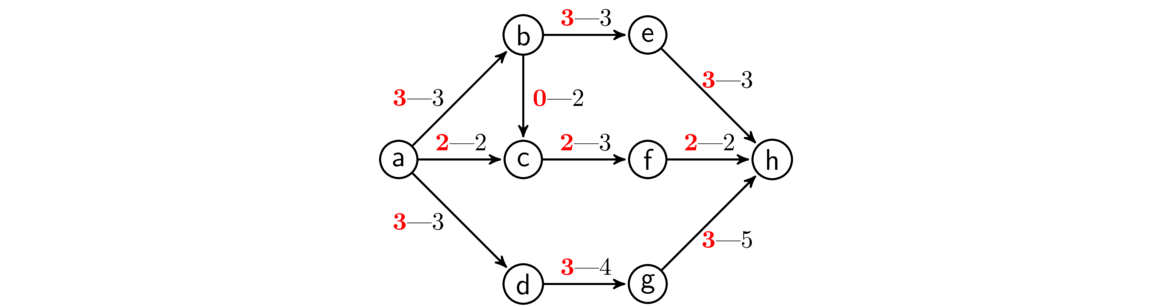

One formula for the number of spanning trees relies on something called the contraction of an edge. Contraction is where we essentially “contract” or pinch an edge down to a single point, where the edge is removed and its two endpoints are reduced to a single vertex. Two examples are shown below, where the edge e = uv is contracted into a new vertex w.

Here is the formal definition:

Using this, we get the following formula for the number of spanning trees of a graph G, where e is any edge of G: τ(G) = τ(G-e) + τ(G · e).

Why does this work? First, the spanning trees of G-e are essentially the same as the spanning trees of G that don't include e. Second, τ(G · e) counts the spanning trees of G that do contain e. This is because any such spanning tree has to include the new double-vertex of G · e, which corresponds to including edge e in the original, since the new double-vertex stands in place of e and its two endpoints.

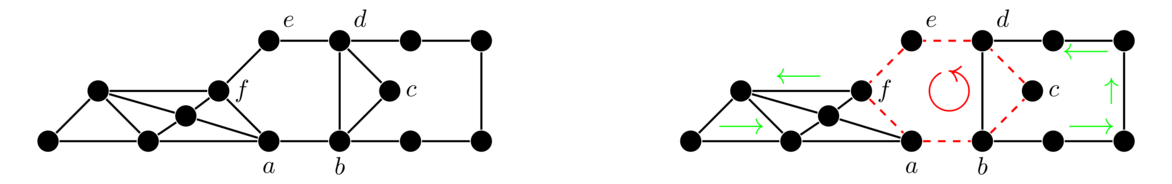

Here is an example. We will compute the number of spanning trees of G = P3 □ P2 as shown below.

Taking the middle edge e out of G leaves us with C6. Any 5 edges of C6 (basically leaving out just one edge) make a spanning tree of C6, so there are 6 spanning trees of C6. Contracting the middle edge e of G leaves us with the bowtie graph shown above. Any spanning tree must include 2 edges from the left half of the graph and 2 from the right. There are 3 ways to include 2 edges on the left and 3 ways to include 2 on the right, so there are 9 possible spanning trees. So in total in G, we have τ(G) = 6 + 9 = 15.

In general, this spanning tree formula can be recursively applied. That is, we break G into G-e and G · e and then break each of those up further and so on until we get to graphs whose spanning trees we can easily count. For really large graphs, this would get out of hand quickly, as the number of graphs to look at grows exponentially.
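Here is a rough sketch of that recursion in Python, suitable only for small graphs. It assumes the graph is given as a set of vertices and a list of edges. It also assumes that contraction keeps any multiple edges it creates and that loops are thrown away, which is what the recursion needs in order to count correctly. The function name is just for illustration.

```python
def count_spanning_trees(vertices, edges):
    """Count spanning trees using tau(G) = tau(G - e) + tau(G . e).

    vertices is a set; edges is a list of (u, v) pairs. The list may contain
    repeated pairs, since contraction can create multiple edges.
    """
    edges = [(u, v) for u, v in edges if u != v]   # a loop is never in a tree
    if len(vertices) == 1:
        return 1                                   # a single vertex: one tree
    if not edges:
        return 0                                   # disconnected: no tree
    (u, v), rest = edges[0], edges[1:]
    # G - e: same vertices, with edge e deleted.
    deleted = count_spanning_trees(vertices, rest)
    # G . e: merge v into u by relabeling v as u in the remaining edges.
    merged = [(u if a == v else a, u if b == v else b) for a, b in rest]
    contracted = count_spanning_trees(vertices - {v}, merged)
    return deleted + contracted

# P3 box P2: top row 0-1-2, bottom row 3-4-5, plus the three vertical edges.
ladder = [(0, 1), (1, 2), (3, 4), (4, 5), (0, 3), (1, 4), (2, 5)]
print(count_spanning_trees(set(range(6)), ladder))   # 15
```

Each call makes two recursive calls, so the running time doubles with every edge, which is the exponential growth mentioned above.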

Prüfer codes

In this section, we will count the spanning trees of the complete graph Kn. This is the same as the number of ways to connect up n items into a tree. There is a nice technique for this, called Prüfer codes.

We start with a tree with vertices labeled 1 through n. The key step of the Prüfer code process is this:

Take the smallest numbered leaf, remove it, and record the number of its neighbor.

Repeat this step until there are just two vertices left. The result is an (n-2)-tuple of integers. An example of the process is shown below.

Notice that, at each step, the vertex we remove is the leaf that has the smallest label. We don't record its label in the sequence; instead we record the label of its neighbor. This is what allows us to reconstruct the tree from its sequence.
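The encoding step is short to code. The sketch below assumes the tree is given as a list of edges on vertices labeled 1 through n. The function name `prufer_encode` and the sample tree are made up for this illustration.

```python
def prufer_encode(n, edges):
    """Return the Prüfer code of a tree on vertices 1..n given as an edge list."""
    neighbors = {v: set() for v in range(1, n + 1)}
    for u, v in edges:
        neighbors[u].add(v)
        neighbors[v].add(u)
    code = []
    for _ in range(n - 2):                 # stop when two vertices are left
        leaf = min(v for v in neighbors if len(neighbors[v]) == 1)
        (p,) = neighbors[leaf]             # the leaf's only neighbor
        code.append(p)                     # record the neighbor, not the leaf
        neighbors[p].remove(leaf)
        del neighbors[leaf]
    return code

tree = [(1, 4), (2, 4), (3, 5), (4, 5), (5, 6)]
print(prufer_encode(6, tree))   # [4, 4, 5, 5]
```

In the sample tree, leaves 1 and 2 come off first and both record their neighbor 4. Then leaf 3 records 5. At that point vertex 4 has become a leaf, so it is removed next and also records 5.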

To do this reconstruction, we do the following: Let P denote the Prüfer sequence, let V denote the sequence of integers from 1 to n, representing the n vertices of the graph. Note that V will always be two elements longer than P. Here is the key step of the reconstruction process:

Choose the smallest integer v of V that is not in P, and choose the first integer p of P. Add an edge between vertices v and p. Then remove v from V and remove that first entry p from P.

Repeat this step until P is empty. At this point, there will be two integers left in V. Add an edge between their corresponding vertices. An example is shown below, reversing the process of the previous example.

At each step, we take the first thing in P and the smallest thing in V that is not in P. So we won't always be taking the first thing in V.
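The reconstruction process can be sketched as follows. The names P and V match the description above, while the function name `prufer_decode` is made up for this example. It uses plain lists for simplicity, so it is not written with efficiency in mind.

```python
def prufer_decode(code):
    """Rebuild the edge list of the labeled tree with the given Prüfer code."""
    n = len(code) + 2
    P = list(code)
    V = list(range(1, n + 1))
    edges = []
    while P:
        v = min(x for x in V if x not in P)   # smallest vertex not in P
        p = P.pop(0)                          # first entry of P
        edges.append((v, p))
        V.remove(v)
    edges.append((V[0], V[1]))                # join the last two vertices
    return edges

print(prufer_decode([4, 4, 5, 5]))
# [(1, 4), (2, 4), (3, 5), (4, 5), (5, 6)]
```

Note that a vertex still appearing in P can't be chosen as v yet, since it still has edges coming to it. It only becomes available once its last occurrence has been removed from P.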

The Prüfer encoding and decoding processes are inverses of each other. There is a little work that goes into showing this, but we will omit it here. We end up getting a one-to-one correspondence between labeled trees and Prüfer codes. Now each Prüfer code has n-2 entries, each of which can be any integer from 1 through n, so there are n^(n-2) possible Prüfer codes and hence that many labeled trees on n vertices. Each of these labeled trees is equivalent to a spanning tree of Kn, so we have τ(Kn) = n^(n-2). This result is known as Cayley's theorem.

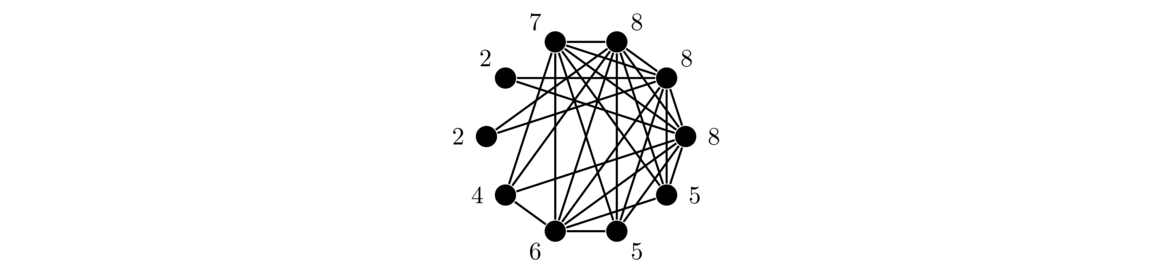

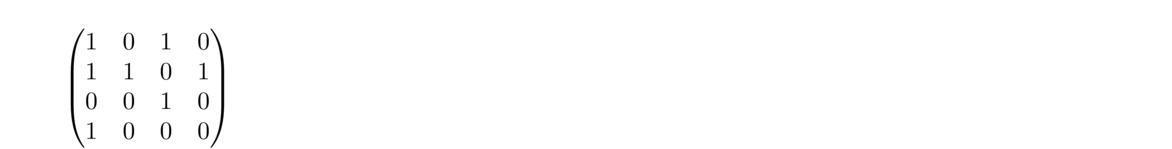

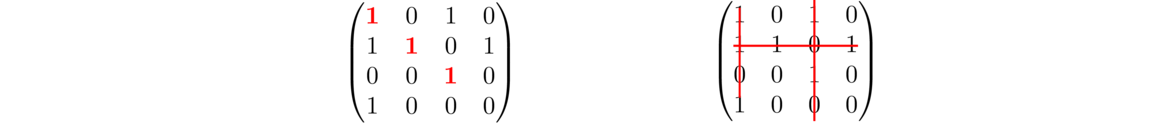

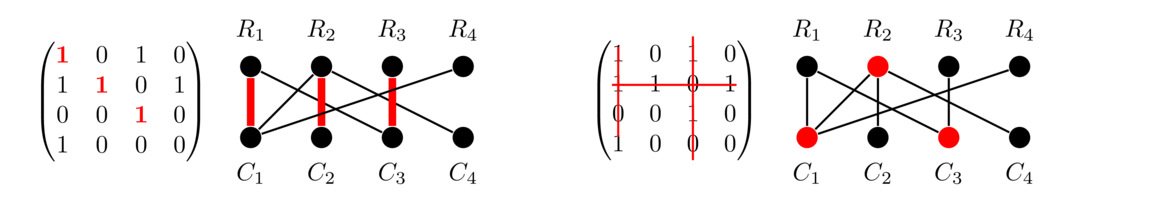

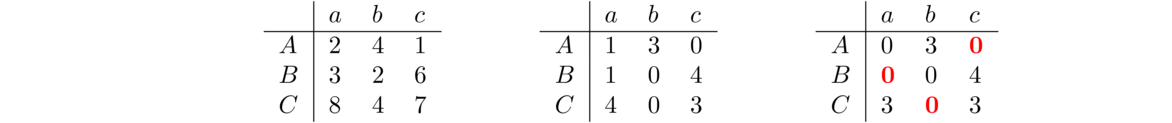

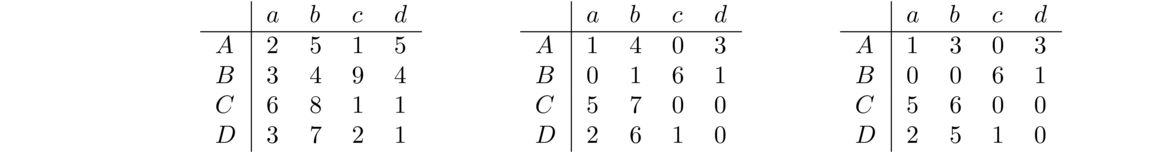

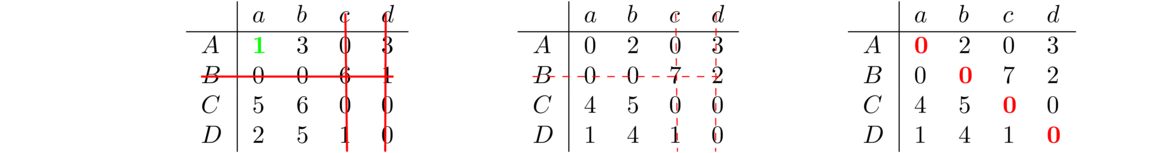

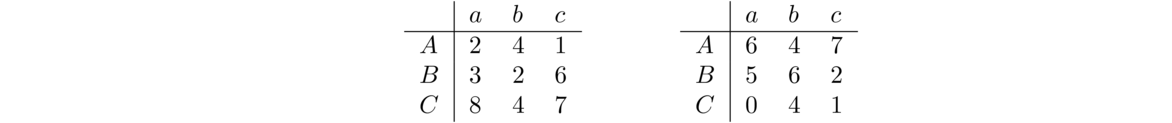

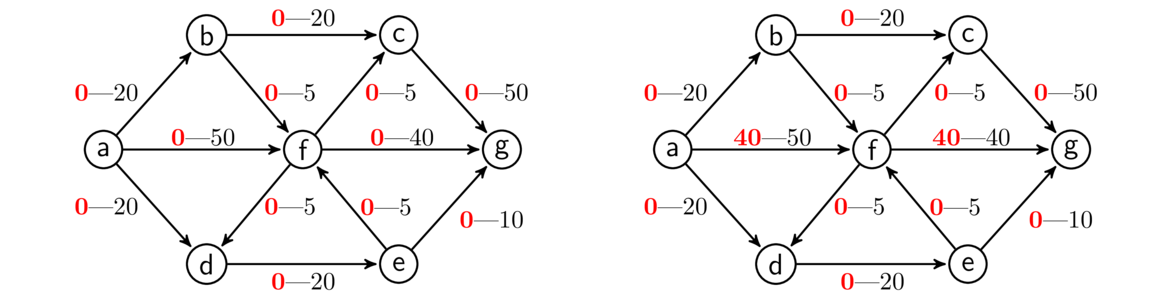

For those who have seen linear algebra, there is a nice technique using matrices to count spanning trees. We start with the adjacency matrix of the graph and replace all entries with their negatives. Then replace all the entries along the diagonal with the degrees of the vertices that correspond to that location in the matrix.

Then delete any row and column and take the determinant. The absolute value of the determinant gives the number of spanning trees of the graph. This remarkable result is called the Matrix Tree Theorem.

For example, shown below is a graph, followed by its adjacency matrix modified as mentioned above, followed by the matrix obtained by removing the first row and column. Taking the determinant of that matrix gives 15, which is the number of spanning trees in the graph.
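Here is a sketch of the matrix technique in Python. To stay self-contained it computes the determinant by Gaussian elimination with exact fractions instead of calling a linear algebra library. It assumes the vertices are numbered 0 through n-1 and always deletes the first row and column. The function name is made up for this example. As a check, it is run on P3 □ P2, which we found earlier to have 15 spanning trees.

```python
from fractions import Fraction

def count_spanning_trees_matrix(n, edges):
    """Count spanning trees of a graph on vertices 0..n-1 using determinants."""
    # Degrees on the diagonal, -1 wherever two vertices are adjacent.
    M = [[Fraction(0)] * n for _ in range(n)]
    for u, v in edges:
        M[u][u] += 1
        M[v][v] += 1
        M[u][v] -= 1
        M[v][u] -= 1
    # Delete the first row and column.
    A = [row[1:] for row in M[1:]]
    size = n - 1
    # Determinant by Gaussian elimination, tracking sign changes from swaps.
    det = Fraction(1)
    for col in range(size):
        pivot = next((r for r in range(col, size) if A[r][col] != 0), None)
        if pivot is None:
            return 0                       # zero determinant: no spanning trees
        if pivot != col:
            A[col], A[pivot] = A[pivot], A[col]
            det = -det
        det *= A[col][col]
        for r in range(col + 1, size):
            factor = A[r][col] / A[col][col]
            for c in range(col, size):
                A[r][c] -= factor * A[col][c]
    return abs(int(det))

# P3 box P2: top row 0-1-2, bottom row 3-4-5, plus the three vertical edges.
ladder = [(0, 1), (1, 2), (3, 4), (4, 5), (0, 3), (1, 4), (2, 5)]
print(count_spanning_trees_matrix(6, ladder))   # 15
```

Using fractions avoids the rounding errors that floating-point determinants can pick up, at the cost of some speed.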

When we count spanning trees, some of the spanning trees are likely isomorphic to others. For instance, we know that τ(C6) is 6, but each of those six spanning trees is isomorphic to all of the others, since they are all copies of P6. A much harder question to answer is how many isomorphism classes of spanning trees there are. For C6, the answer is 1, but the answer is not known for graphs in general.

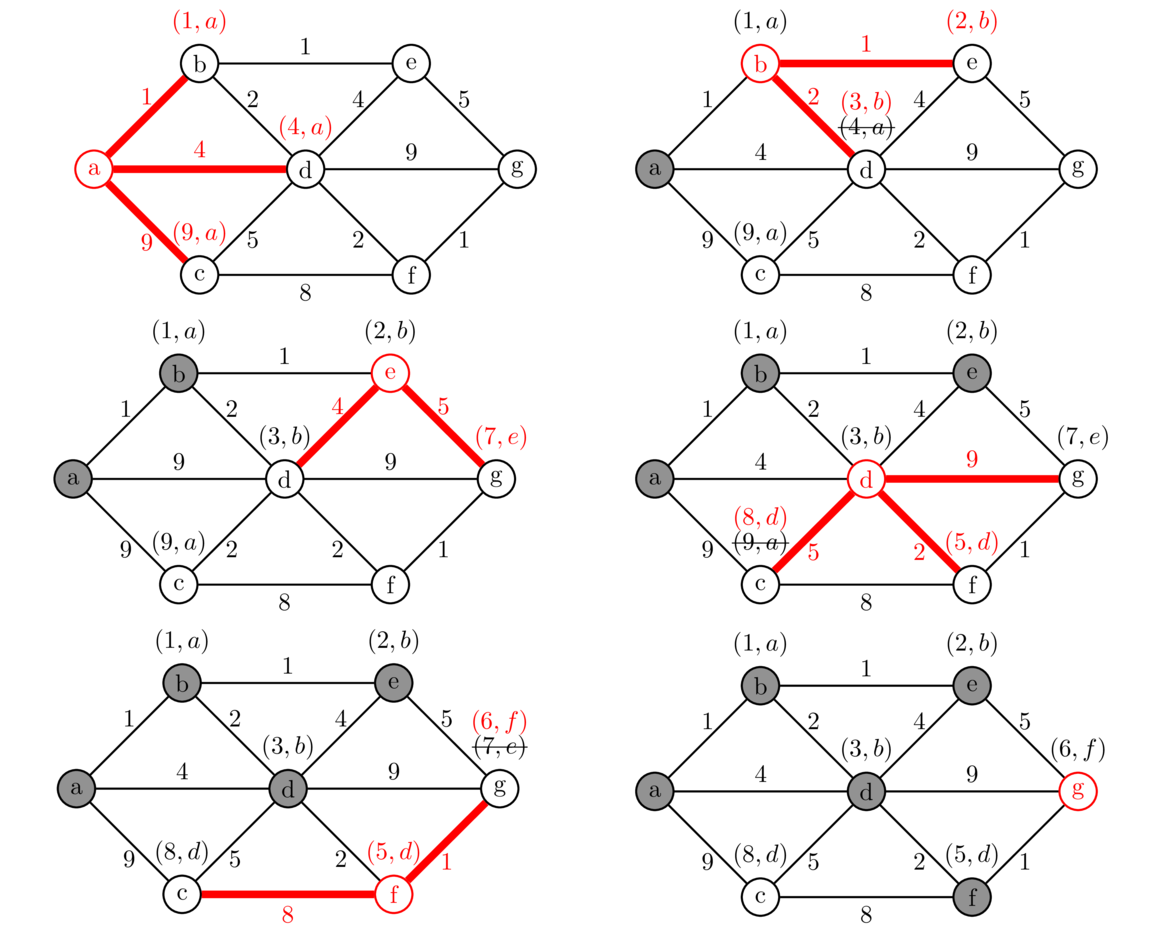

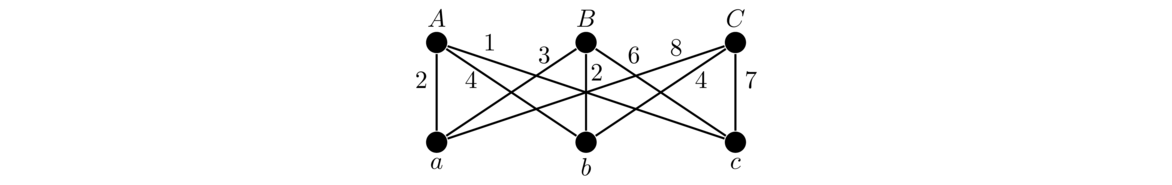

Minimum spanning trees

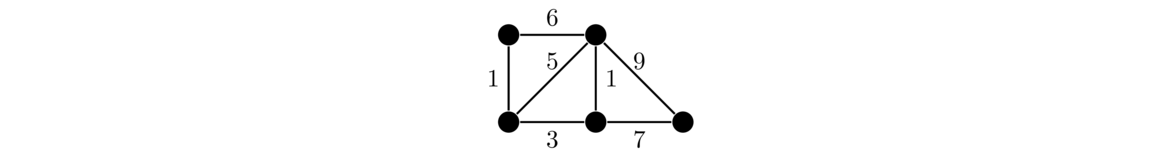

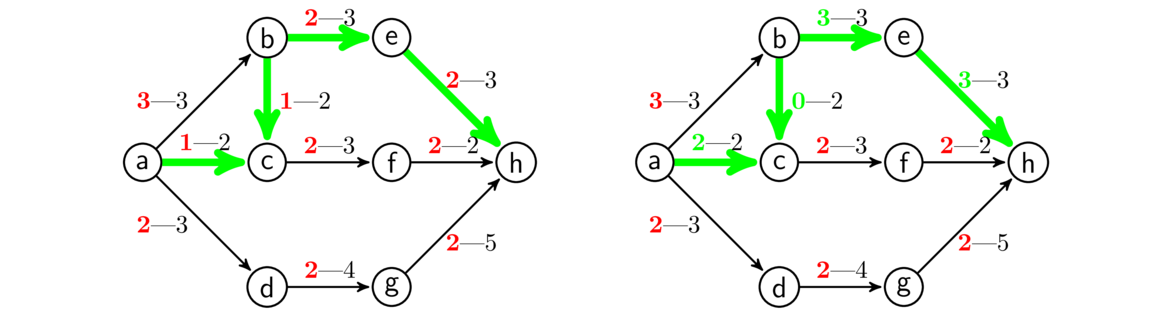

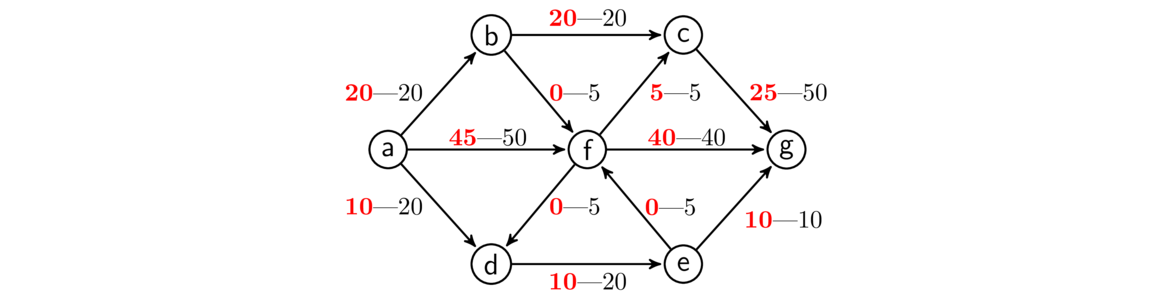

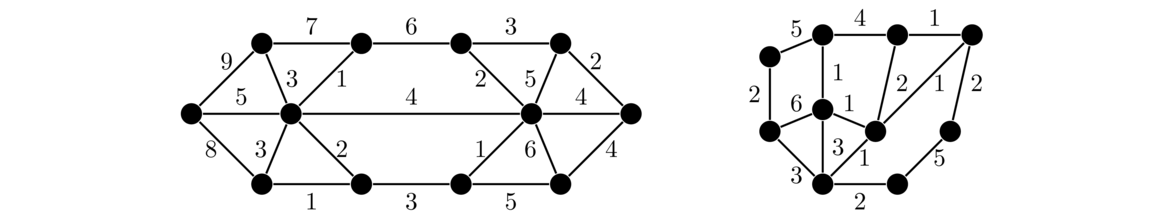

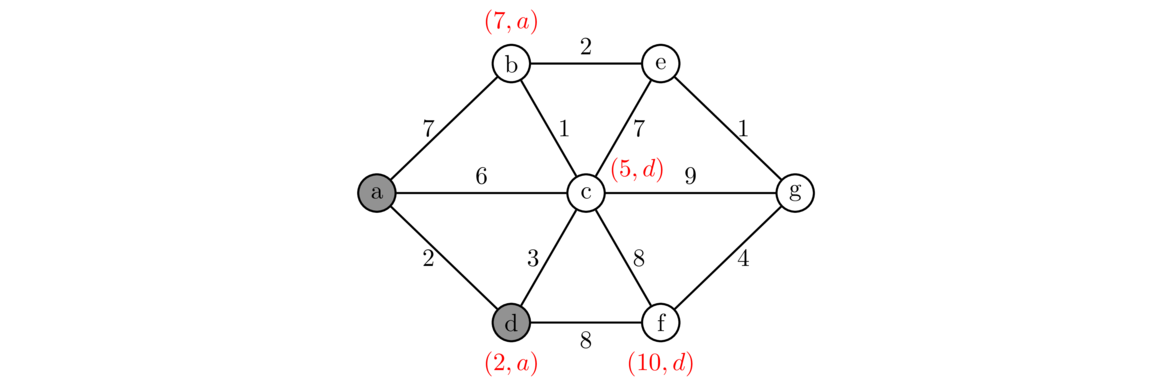

A weighted graph is a graph where we label each edge with a number, called its weight, like the one shown below.

Weighted graphs are useful for modeling many problems. The weight often represents the “cost” of using that edge. For example, the graph above might represent a road network, where the vertices are cities and the edges are roads that can be built between the cities. The weights could represent the cost of building those roads.

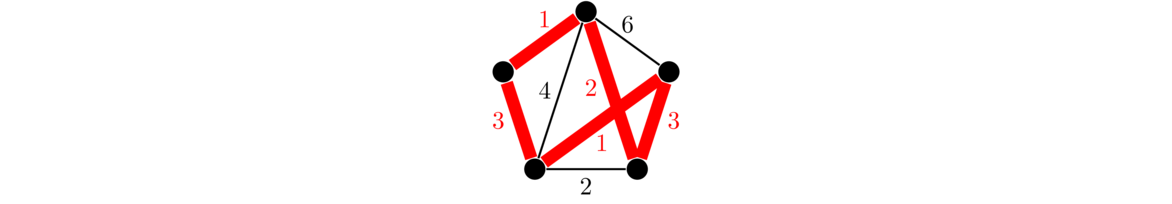

Continuing with this, suppose we want to build just enough roads to make it possible to get from any city to any other, and we want to do so as cheaply as possible. Here is the answer for the graph above:

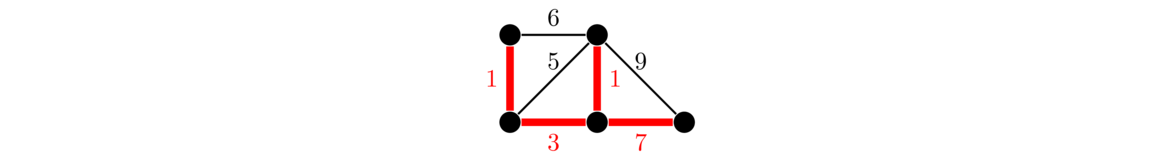

When solving this problem, since we are trying to do things as cheaply as possible, we don't want any cycles, as that would mean we have a redundant edge. And we want to be able to get to any vertex in the graph, so what we want is a minimum spanning tree, a spanning tree where the sum of all the edge weights in the tree is as small as possible. There are several algorithms for finding minimum spanning trees. We will look at two: Kruskal's algorithm and Prim's algorithm.

Kruskal's algorithm

Kruskal's algorithm builds up a spanning tree of a graph G one edge at a time. The key idea is this:

At each step of the algorithm, we take the edge of G with the smallest weight such that adding that edge into the tree being built does not create a cycle.

We can break edge-weight ties however we want. We continue adding edges according to the rule above until it is no longer possible. In a graph with n vertices, we will always need to add exactly n-1 edges.

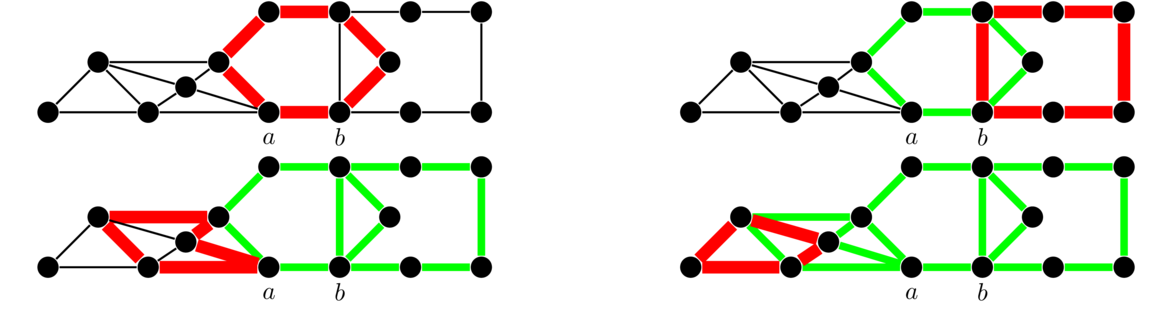

An example is shown below. Edges are highlighted in the order they are added.

Kruskal's algorithm is an example of a greedy algorithm. It is called that because at each step it chooses the cheapest available edge without thinking about the future consequences of that choice. It is remarkable that this strategy will always give a minimum spanning tree.
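Below is a minimal sketch of Kruskal's algorithm in Python. The rule above doesn't say how to check whether an edge would create a cycle. This sketch uses a union-find structure to track which vertices are already connected, which is a common choice but only one way to do it. It assumes a connected graph with vertices numbered 0 through n-1 and edges given as (weight, u, v) triples. The function name and the sample weights are made up for this illustration.

```python
def kruskal(n, weighted_edges):
    """Return (total weight, tree edges) of a minimum spanning tree.

    Vertices are 0..n-1; weighted_edges is a list of (weight, u, v) triples.
    The graph is assumed to be connected.
    """
    parent = list(range(n))                # each vertex starts in its own group

    def find(v):
        # Follow parent links to the representative of v's group.
        while parent[v] != v:
            parent[v] = parent[parent[v]]  # shorten the chain as we go
            v = parent[v]
        return v

    tree = []
    for w, u, v in sorted(weighted_edges):     # cheapest edges first
        ru, rv = find(u), find(v)
        if ru != rv:                       # different groups: no cycle created
            parent[ru] = rv                # merge the two groups
            tree.append((u, v, w))
            if len(tree) == n - 1:
                break                      # a spanning tree is complete
    return sum(w for _, _, w in tree), tree

edges = [(1, 0, 1), (4, 0, 2), (3, 1, 2), (2, 1, 3), (5, 2, 3)]
print(kruskal(4, edges))   # (6, [(0, 1, 1), (1, 3, 2), (1, 2, 3)])
```

In the sample graph, the edges of weight 1, 2, and 3 are added in that order. The edges of weight 4 and 5 are never needed, since the tree already has n-1 = 3 edges.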

It's worth talking about why Kruskal's algorithm works. We should be sure that the graph T that it builds is actually a spanning tree of the graph. First, T clearly has no cycles because Kruskal's algorithm by definition can't create one. Next, T is connected, since if it weren't, we could add an edge between components of T without causing a cycle. Finally, T is spanning (includes every vertex), since if it weren't, we could add an edge from T to a vertex not in T without causing a cycle. We know that trees on n vertices always have n-1 edges, so we will need to add exactly n-1 edges.

Now let's consider why Kruskal's tree T has minimum weight. Consider some minimum spanning tree S that is not equal to T. As we go through Kruskal's process and add edges, eventually we add an edge e to T that is not part of S. Suppose we try to add e to S. That would create a cycle by the basic properties of trees. Along that cycle there must be some other edge d that is not in T because T is a tree and it can't contain every edge of that cycle. See below where a hypothetical portion of S is shown along with d and e.